Application of a New Hybrid Deep Learning Model That Considers Temporal and Feature Dependencies in Rainfall–Runoff Simulation

Abstract

1. Introduction

2. Methodologies

2.1. Self-Attention Mechanism

2.2. Neural Network

2.2.1. Long Short-Term Memory Network

2.2.2. Convolutional Neural Network

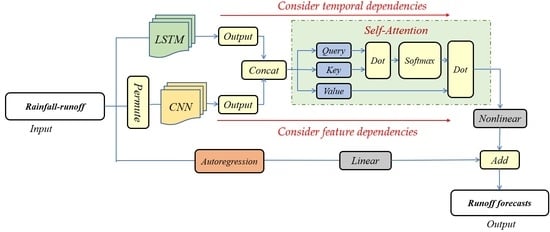

2.3. SA-CNN-LSTM Model

2.4. Network Structure Optimization

2.5. Evaluation Statistics

3. Case Study

3.1. Study Area and Data

3.2. Open-Source Software

4. Results and Discussion

4.1. Comparisons of Evaluation Indicators

4.2. Comparison of Scatter Regression Plots

4.3. Comparisons of Streamflow in Three Categories

4.4. Comparisons of Performance during Actual Flood Events

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Hyperparameter | Range |
|---|---|
| LSTM layer | 8–256 |
| Autoregressive layer | 8–256 |
| CNN layer | 8–256 |
| Attention layer | 8–256 |
| Learning rate | [0.1, 0.01, 0.001, 0.0001, 0.00001] |
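As a rough illustration of the search space in the table above, the sketch below draws candidate configurations at random. It assumes the 8–256 ranges are layer widths (number of units), which the table does not state. The names `SEARCH_SPACE`, `LEARNING_RATES`, and `sample_config` are hypothetical. Uniform random sampling is used only to show the shape of the space and is not claimed to be the optimization procedure used in the study.

```python
import random

# Ranges copied from the hyperparameter table. Reading 8-256 as the number
# of units per layer is an assumption; the table does not give a unit.
SEARCH_SPACE = {
    "lstm_units": (8, 256),
    "autoregressive_units": (8, 256),
    "cnn_units": (8, 256),
    "attention_units": (8, 256),
}
LEARNING_RATES = [0.1, 0.01, 0.001, 0.0001, 0.00001]


def sample_config(rng: random.Random) -> dict:
    """Draw one candidate configuration uniformly from the search space."""
    config = {
        name: rng.randint(low, high)  # randint includes both endpoints
        for name, (low, high) in SEARCH_SPACE.items()
    }
    config["learning_rate"] = rng.choice(LEARNING_RATES)
    return config


rng = random.Random(0)  # fixed seed so the draw is repeatable
candidates = [sample_config(rng) for _ in range(5)]
for candidate in candidates:
    print(candidate)
```

Each candidate would then be trained and scored on a validation set, with the best-scoring configuration retained.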

| Model/Train | NSE (1 h) | MAE (1 h) | MRE (1 h) | RMSE (1 h) | NSE (2 h) | MAE (2 h) | MRE (2 h) | RMSE (2 h) | NSE (3 h) | MAE (3 h) | MRE (3 h) | RMSE (3 h) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.996 | 1.941 | 0.141 | 3.424 | 0.992 | 2.855 | 0.243 | 4.477 | 0.990 | 3.278 | 0.260 | 5.283 |
| LSTM | 0.994 | 2.178 | 0.163 | 3.894 | 0.991 | 3.017 | 0.222 | 4.910 | 0.990 | 2.985 | 0.197 | 5.217 |
| ANN | 0.994 | 2.555 | 0.222 | 4.073 | 0.991 | 2.979 | 0.234 | 4.853 | 0.990 | 3.133 | 0.249 | 5.062 |
| RF | 0.974 | 2.234 | 0.090 | 8.340 | 0.974 | 2.260 | 0.091 | 8.336 | 0.974 | 2.286 | 0.093 | 8.341 |
| SA-CNN | 0.993 | 1.182 | 0.034 | 4.273 | 0.986 | 1.873 | 0.061 | 6.137 | 0.976 | 2.575 | 0.086 | 8.002 |
| SA-LSTM | 0.994 | 1.186 | 0.035 | 3.835 | 0.989 | 2.261 | 0.099 | 5.328 | 0.973 | 4.918 | 0.241 | 8.439 |
| SA-CNN-LSTM | 0.994 | 1.344 | 0.059 | 3.920 | 0.990 | 1.783 | 0.056 | 5.214 | 0.985 | 2.525 | 0.099 | 6.343 |

| Model/Train | NSE (4 h) | MAE (4 h) | MRE (4 h) | RMSE (4 h) | NSE (5 h) | MAE (5 h) | MRE (5 h) | RMSE (5 h) | NSE (6 h) | MAE (6 h) | MRE (6 h) | RMSE (6 h) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.986 | 3.674 | 0.275 | 6.056 | 0.983 | 4.031 | 0.287 | 6.830 | 0.976 | 4.572 | 0.304 | 7.950 |
| LSTM | 0.988 | 3.457 | 0.255 | 5.710 | 0.985 | 3.479 | 0.225 | 6.302 | 0.981 | 4.092 | 0.283 | 7.065 |
| ANN | 0.990 | 3.284 | 0.257 | 5.236 | 0.989 | 3.403 | 0.261 | 5.377 | 0.987 | 3.653 | 0.269 | 5.901 |
| RF | 0.974 | 2.314 | 0.094 | 8.365 | 0.973 | 2.344 | 0.096 | 8.409 | 0.973 | 2.382 | 0.098 | 8.511 |
| SA-CNN | 0.965 | 3.080 | 0.077 | 9.704 | 0.950 | 3.859 | 0.110 | 11.512 | 0.868 | 5.048 | 0.166 | 18.742 |
| SA-LSTM | 0.979 | 3.162 | 0.125 | 7.404 | 0.972 | 3.980 | 0.131 | 8.567 | 0.966 | 4.496 | 0.162 | 9.544 |
| SA-CNN-LSTM | 0.980 | 2.866 | 0.082 | 7.223 | 0.976 | 3.491 | 0.143 | 7.970 | 0.972 | 4.050 | 0.209 | 8.703 |

| Model/Train | NSE (7 h) | MAE (7 h) | MRE (7 h) | RMSE (7 h) |
|---|---|---|---|---|
| CNN | 0.971 | 4.770 | 0.306 | 8.812 |
| LSTM | 0.977 | 4.372 | 0.292 | 7.844 |
| ANN | 0.984 | 4.098 | 0.283 | 6.582 |
| RF | 0.972 | 2.428 | 0.100 | 8.635 |
| SA-CNN | 0.926 | 4.997 | 0.217 | 14.074 |
| SA-LSTM | 0.963 | 4.580 | 0.193 | 9.965 |
| SA-CNN-LSTM | 0.967 | 4.554 | 0.259 | 9.437 |

| Model/Test | NSE (1 h) | MAE (1 h) | MRE (1 h) | RMSE (1 h) | NSE (2 h) | MAE (2 h) | MRE (2 h) | RMSE (2 h) | NSE (3 h) | MAE (3 h) | MRE (3 h) | RMSE (3 h) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.965 | 2.639 | 0.136 | 4.978 | 0.936 | 2.971 | 0.134 | 6.708 | 0.899 | 3.701 | 0.167 | 8.441 |
| LSTM | 0.958 | 1.969 | 0.081 | 5.416 | 0.926 | 3.513 | 0.178 | 7.219 | 0.899 | 3.498 | 0.160 | 8.441 |
| ANN | 0.943 | 2.811 | 0.123 | 6.307 | 0.911 | 3.693 | 0.168 | 7.928 | 0.878 | 4.150 | 0.183 | 9.256 |
| RF | 0.696 | 4.333 | 0.136 | 14.626 | 0.690 | 4.447 | 0.141 | 14.772 | 0.684 | 4.560 | 0.146 | 14.918 |
| SA-CNN | 0.987 | 0.826 | 0.029 | 3.080 | 0.954 | 1.420 | 0.048 | 5.664 | 0.899 | 2.061 | 0.067 | 8.452 |
| SA-LSTM | 0.987 | 0.799 | 0.029 | 2.972 | 0.959 | 1.879 | 0.082 | 5.346 | 0.912 | 4.257 | 0.212 | 7.885 |
| SA-CNN-LSTM | 0.992 | 0.901 | 0.041 | 2.435 | 0.974 | 1.237 | 0.046 | 4.271 | 0.950 | 1.598 | 0.056 | 5.914 |

| Model/Test | NSE (4 h) | MAE (4 h) | MRE (4 h) | RMSE (4 h) | NSE (5 h) | MAE (5 h) | MRE (5 h) | RMSE (5 h) | NSE (6 h) | MAE (6 h) | MRE (6 h) | RMSE (6 h) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.855 | 4.329 | 0.191 | 10.113 | 0.821 | 4.938 | 0.218 | 11.220 | 0.763 | 5.876 | 0.261 | 12.907 |
| LSTM | 0.865 | 3.643 | 0.149 | 9.738 | 0.833 | 3.860 | 0.150 | 10.831 | 0.787 | 5.206 | 0.234 | 12.231 |
| ANN | 0.840 | 4.720 | 0.208 | 10.622 | 0.801 | 5.007 | 0.212 | 11.825 | 0.748 | 5.585 | 0.232 | 13.316 |
| RF | 0.677 | 4.672 | 0.151 | 15.083 | 0.670 | 4.780 | 0.156 | 15.250 | 0.661 | 4.893 | 0.161 | 15.456 |
| SA-CNN | 0.829 | 2.753 | 0.093 | 10.962 | 0.755 | 3.320 | 0.112 | 13.134 | 0.779 | 2.851 | 0.091 | 12.478 |
| SA-LSTM | 0.909 | 2.222 | 0.076 | 7.996 | 0.875 | 3.454 | 0.143 | 9.375 | 0.853 | 3.293 | 0.122 | 10.169 |
| SA-CNN-LSTM | 0.917 | 2.272 | 0.083 | 7.666 | 0.875 | 2.511 | 0.084 | 9.373 | 0.834 | 2.921 | 0.100 | 10.818 |

| Model/Test | NSE (7 h) | MAE (7 h) | MRE (7 h) | RMSE (7 h) |
|---|---|---|---|---|
| CNN | 0.727 | 5.974 | 0.251 | 13.868 |
| LSTM | 0.754 | 5.650 | 0.256 | 13.163 |
| ANN | 0.685 | 6.690 | 0.293 | 14.901 |
| RF | 0.649 | 5.013 | 0.166 | 15.719 |
| SA-CNN | 0.625 | 4.204 | 0.140 | 16.247 |
| SA-LSTM | 0.822 | 3.260 | 0.111 | 11.195 |
| SA-CNN-LSTM | 0.774 | 3.395 | 0.118 | 12.615 |
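The training and test tables above report NSE, MAE, MRE and RMSE for each model and lead time. The following minimal sketch shows how these four statistics are commonly computed from paired observed and simulated flows. NSE (Nash-Sutcliffe efficiency), MAE and RMSE follow their standard definitions. The MRE form used here, the mean of |observed − simulated| / observed, is an assumption, since this excerpt does not give the formula. The sample flows are invented for illustration and are not data from the study.

```python
import math


def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 minus error variance over observed variance."""
    mean_obs = sum(obs) / len(obs)
    residual = sum((o - s) ** 2 for o, s in zip(obs, sim))
    spread = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - residual / spread


def mae(obs, sim):
    """Mean absolute error, in the units of the flow series."""
    return sum(abs(o - s) for o, s in zip(obs, sim)) / len(obs)


def mre(obs, sim):
    """Mean relative error (assumed form: mean of |obs - sim| / obs)."""
    return sum(abs(o - s) / o for o, s in zip(obs, sim)) / len(obs)


def rmse(obs, sim):
    """Root mean square error, in the units of the flow series."""
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(obs, sim)) / len(obs))


# Invented example flows, not taken from the study.
observed = [10.0, 20.0, 30.0, 40.0]
simulated = [12.0, 18.0, 33.0, 39.0]

print(f"NSE  = {nse(observed, simulated):.3f}")
print(f"MAE  = {mae(observed, simulated):.3f}")
print(f"MRE  = {mre(observed, simulated):.3f}")
print(f"RMSE = {rmse(observed, simulated):.3f}")
```

An NSE of 1 indicates a perfect match, and a value below 0 means the simulation performs worse than predicting the observed mean, which is how the negative NSE entries in the flood-event table can be read.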

| Flood | Metric | SA-CNN-LSTM | SA-LSTM | SA-CNN | LSTM | CNN | ANN | RF |
|---|---|---|---|---|---|---|---|---|
| 2020040200 | NSE | 0.99 | 0.99 | 0.99 | 0.98 | 0.99 | 0.98 | 0.55 |
| | MAE | 4.86 | 5.98 | 4.88 | 7.36 | 6.42 | 7.71 | 39.41 |
| | MRE | 0.04 | 0.05 | 0.04 | 0.06 | 0.05 | 0.06 | 0.29 |
| | RMSE | 6.49 | 7.88 | 6.22 | 9.72 | 8.84 | 10.31 | 50.82 |
| | PE (305.6 m³/s) | 1.7% (311.04) | 5% (290.14) | 2.2% (298.82) | 7% (327.15) | 1.6% (310.54) | 3% (314.78) | 36.4% (195.23) |
| 2020042100 | NSE | 0.87 | 0.31 | 0.85 | 0.45 | 0.75 | 0.35 | 0.21 |
| | MAE | 5.97 | 11.98 | 5.92 | 13.22 | 10.04 | 15.72 | 15.67 |
| | MRE | 0.09 | 0.19 | 0.08 | 0.23 | 0.22 | 0.29 | 0.23 |
| | RMSE | 10.56 | 24.63 | 11.57 | 22.02 | 14.90 | 23.89 | 26.45 |
| | PE (144 m³/s) | 0.1% (144.21) | 55% (223.7) | 4.6% (150.76) | 24.6% (108.49) | 14.4% (123.2) | 27.7% (104.01) | 57% (61.84) |
| 2020060800 | NSE | 0.94 | 0.93 | 0.93 | 0.80 | 0.87 | 0.79 | −0.15 |
| | MAE | 11.80 | 12.32 | 12.65 | 23.54 | 18.55 | 26.68 | 60.45 |
| | MRE | 0.08 | 0.08 | 0.09 | 0.20 | 0.17 | 0.26 | 0.38 |
| | RMSE | 21.07 | 22.04 | 22.46 | 37.25 | 29.97 | 37.97 | 89.68 |
| | PE (331.6 m³/s) | 0.2% (332.55) | 1.9% (325.18) | 5.5% (351.1) | 8.2% (304.35) | 1% (328.1) | 5.7% (312.42) | 63.3% (121.59) |
| 2020081109 | NSE | 0.91 | 0.83 | 0.49 | −0.87 | 0.08 | −0.83 | 0.35 |
| | MAE | 2.14 | 2.77 | 8.94 | 14.82 | 10.26 | 14.89 | 9.24 |
| | MRE | 0.08 | 0.13 | 0.34 | 0.58 | 0.40 | 0.58 | 0.37 |
| | RMSE | 4.36 | 5.86 | 10.17 | 19.43 | 13.61 | 19.22 | 11.47 |
| | PE (67.33 m³/s) | 2.1% (68.76) | 0.02% (67.31) | 18.9% (80.11) | 12.8% (77.29) | 33.5% (89.9) | 42% (95.65) | 29.9% (47.14) |
| 2021050900 | NSE | 0.96 | 0.95 | 0.96 | 0.94 | 0.91 | 0.89 | 0.26 |
| | MAE | 4.07 | 4.77 | 4.25 | 8.46 | 9.94 | 11.76 | 23.06 |
| | MRE | 0.06 | 0.07 | 0.06 | 0.16 | 0.18 | 0.22 | 0.26 |
| | RMSE | 9.65 | 10.77 | 9.91 | 11.87 | 14.52 | 16.38 | 42.06 |
| | PE (186.6 m³/s) | 1% (188.58) | 4.2% (178.61) | 3.1% (192.41) | 10.9% (166.17) | 4.8% (177.52) | 6.9% (173.74) | 58.5% (77.34) |
| 2021051612 | NSE | 0.99 | 0.98 | 0.98 | 0.90 | 0.96 | 0.95 | 0.19 |
| | MAE | 7.06 | 8.70 | 9.27 | 19.12 | 13.88 | 18.65 | 65.50 |
| | MRE | 0.06 | 0.06 | 0.07 | 0.15 | 0.15 | 0.16 | 0.31 |
| | RMSE | 11.77 | 16.74 | 17.83 | 39.45 | 23.33 | 28.41 | 110.34 |
| | PE (429.1 m³/s) | 1.7% (436.48) | 4.3% (447.88) | 1% (433.61) | 1.6% (436.55) | 3% (415.83) | 6.7% (400.31) | 65.8% (146.4) |
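The PE rows in the flood-event table pair a percentage with a simulated peak flow in parentheses, next to a reference peak in the row label. One plausible reading, treated here as an assumption, is that PE is the absolute peak error relative to the reference peak. The sketch below applies that reading to the 2020040200 event. The function name `peak_error_percent` is hypothetical. Under this assumption the computed values agree with the reported percentages to within roughly 0.3 percentage points, so the reading is consistent with the table but not confirmed by it.

```python
def peak_error_percent(reference_peak: float, simulated_peak: float) -> float:
    """Absolute peak error as a percentage of the reference peak (assumed PE definition)."""
    return abs(simulated_peak - reference_peak) / reference_peak * 100.0


# Reference peak from the PE row label of flood 2020040200.
REFERENCE_PEAK = 305.6

# model -> (simulated peak, reported PE in percent), copied from the same row.
REPORTED = {
    "SA-CNN-LSTM": (311.04, 1.7),
    "SA-LSTM": (290.14, 5.0),
    "SA-CNN": (298.82, 2.2),
    "LSTM": (327.15, 7.0),
    "CNN": (310.54, 1.6),
    "ANN": (314.78, 3.0),
    "RF": (195.23, 36.4),
}

for model, (simulated_peak, reported_pe) in REPORTED.items():
    computed = peak_error_percent(REFERENCE_PEAK, simulated_peak)
    print(f"{model:12s} computed {computed:6.2f}%   reported {reported_pe}%")
```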

| Lead Time | Metric | SA-CNN-LSTM | SA-LSTM | SA-CNN | LSTM | CNN | ANN | RF |
|---|---|---|---|---|---|---|---|---|
| 1 h | NSE | 0.99 | 0.98 | 0.99 | 0.96 | 0.96 | 0.95 | 0.35 |
| | MAE | 4.40 | 7.89 | 4.67 | 11.55 | 13.49 | 15.39 | 44.65 |
| | MRE | 0.04 | 0.06 | 0.04 | 0.10 | 0.13 | 0.16 | 0.24 |
| | RMSE | 7.32 | 12.83 | 8.10 | 17.80 | 18.45 | 20.18 | 70.24 |
| | PE (336.8 m³/s) | 0.01% (336.85) | 4.4% (321.98) | 0.7% (339.38) | 4.1% (322.78) | 1.1% (332.89) | 1.1% (332.88) | 52.6% (159.35) |
| 3 h | NSE | 0.95 | 0.83 | 0.91 | 0.86 | 0.85 | 0.86 | 0.32 |
| | MAE | 12.28 | 23.63 | 14.26 | 20.93 | 24.58 | 24.67 | 46.29 |
| | MRE | 0.11 | 0.19 | 0.11 | 0.17 | 0.21 | 0.24 | 0.25 |
| | RMSE | 19.56 | 35.85 | 25.70 | 33.07 | 34.21 | 32.78 | 71.64 |
| | PE (336.8 m³/s) | 1.5% (342.01) | 9.8% (303.75) | 5% (319.87) | 8.9% (306.63) | 15% (286.23) | 7.7% (310.86) | 51.5% (163.19) |
| 5 h | NSE | 0.86 | 0.78 | 0.74 | 0.71 | 0.69 | 0.70 | 0.29 |
| | MAE | 20.96 | 25.13 | 25.48 | 29.62 | 35.92 | 33.48 | 48.53 |
| | MRE | 0.19 | 0.20 | 0.19 | 0.23 | 0.30 | 0.29 | 0.27 |
| | RMSE | 32.21 | 40.96 | 44.70 | 47.16 | 48.17 | 47.69 | 73.58 |
| | PE (336.8 m³/s) | 1% (340.22) | 11.8% (296.76) | 15.1% (285.69) | 15.1% (285.90) | 21.8% (263.25) | 12.6% (294.19) | 52.5% (159.72) |
| 7 h | NSE | 0.77 | 0.69 | 0.50 | 0.55 | 0.48 | 0.49 | 0.23 |
| | MAE | 27.43 | 30.08 | 34.98 | 35.70 | 48.89 | 44.38 | 50.76 |
| | MRE | 0.24 | 0.24 | 0.24 | 0.26 | 0.41 | 0.39 | 0.28 |
| | RMSE | 42.19 | 48.81 | 61.41 | 58.56 | 63.02 | 62.06 | 76.50 |
| | PE (336.8 m³/s) | 1.3% (341.42) | 13.4% (291.59) | 22.5% (260.80) | 19.8% (269.93) | 27.6% (243.62) | 21.4% (264.51) | 52.3% (160.60) |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, F.; Chen, Y.; Liu, J. Application of a New Hybrid Deep Learning Model That Considers Temporal and Feature Dependencies in Rainfall–Runoff Simulation. Remote Sens. 2023, 15, 1395. https://doi.org/10.3390/rs15051395
