1. Introduction

Three-dimensional spatial information is an essential part of geographic information. With the development of light detection and ranging (LiDAR) sensors, high-precision 3D LiDAR point clouds can now be acquired in outdoor environments with good global navigation satellite system (GNSS) signals by combining LiDAR with GNSS and an inertial navigation system (INS) or inertial measurement unit (IMU). However, acquiring high-precision 3D LiDAR point clouds indoors and in other GNSS-denied environments remains a research hotspot. In recent years, the development of simultaneous localization and mapping (SLAM) technology has brought new ideas for solving this problem. SLAM is a technique for estimating the pose of a carrier while constructing a map of an unknown environment. According to the sensors used, SLAM can be divided into visual SLAM, based on sensors such as cameras, and LiDAR SLAM, based on LiDAR sensors. Visual SLAM [1,2] has high localization accuracy but is sensitive to changes in illumination and viewing angle. LiDAR SLAM is not affected by such changes, and LiDAR offers high ranging accuracy. According to their internal structure, LiDARs can be divided into mechanical and non-mechanical LiDARs, and mechanical LiDARs can be further divided into single-line and multi-line LiDARs. Compared with 2D LiDAR SLAM using single-line LiDAR, 3D LiDAR SLAM using multi-line LiDAR provides richer 3D environmental information.

In the field of 3D LiDAR SLAM, researchers have proposed many excellent algorithms. One of the most influential is the LiDAR odometry and mapping (LOAM) algorithm [3], which treats 3D LiDAR SLAM as a combination of a high-frequency, low-accuracy front-end module and a low-frequency, high-accuracy back-end module. In the front end, feature points of two types (edge points and planar points) are extracted from each acquired point cloud frame and then matched between frames to build the LiDAR odometry. In the back end, the poses output by the front-end odometry are optimized, and the environmental map is constructed from the results of multiple scan matchings. LOAM has no loop closure module and therefore cannot effectively constrain the cumulative error. LIO-SAM [4] further improves LOAM by building a tightly coupled LiDAR-IMU odometry framework on a factor graph, supporting GNSS observations to correct cumulative errors, and using IMU observations to compensate for motion distortion in the LiDAR scans. It achieves real-time performance with a sliding-window-based scan-matching method. With GNSS constraining the localization error, LIO-SAM produces more accurate point cloud maps in some outdoor scenarios. However, in indoor scenarios without prior constraints, or in scenarios with poor GNSS signals, it can rely only on loop closure constraints to correct accumulated pose estimation errors. These loop closure constraints are triggered by a Euclidean distance threshold, which is not robust. The authors of [5] introduced descriptors into the loop closure part of LIO-SAM to improve its robustness and speed, but loop closure constraints are still triggered only when a place is revisited, so their effect is limited in large scenes or environments without revisits. Unlike LOAM and its variants, FAST-LIO2 [6] does not extract feature points from each LiDAR frame but directly registers the raw point cloud to a point cloud submap. This exploits more of the structure in the point cloud to improve matching accuracy, and an incremental k-d tree keeps the computation efficient enough for real-time performance. However, FAST-LIO2 has no loop closure module and does not support GNSS observations, so it lacks effective constraints in large open scenarios.

Although the above methods achieve high accuracy in environments with good GNSS signals or rich features, they cannot produce high-accuracy point cloud maps in structured, GNSS-denied environments such as corridors and parking lots. Many studies have attempted to address this issue. For unmanned ground vehicles whose LiDAR is mounted parallel to the ground, the LeGO-LOAM [7] algorithm extracts the ground in the front end by segmentation and clustering, adding a ground constraint that reduces the error in the z-direction. However, it requires the LiDAR to be parallel to the ground and therefore applies only to certain unmanned ground vehicles. The studies in [8,9,10] added geometric feature constraints such as lines and planes to improve the robustness and accuracy of vision-based and LiDAR-based SLAM frameworks. The study in [11] also focuses on geometric feature constraints: it represents planes with the nearest point representation proposed in [10], successively fits the plane of the ground point cloud, and builds a ground constraint from the relationship between the fitted ground planes of two different frames, reducing the elevation drift of LiDAR SLAM pose estimates in an indoor parking environment.
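To illustrate why a fitted ground plane mainly helps with elevation drift, the following Python sketch (a hypothetical illustration, not the method of [11] or of this paper) recovers roll, pitch, and height from a ground plane n · p + d = 0 fitted in the sensor frame. It assumes that the ground is the horizontal world plane z = h with h known, that the rotation R = Rz(yaw) Ry(pitch) Rx(roll) maps sensor to world coordinates, and that |pitch| < 90°; the function name `pose_from_ground_plane` is made up for this example. Yaw and the horizontal position never enter the computation, which is why a ground plane alone can constrain only three of the six pose degrees of freedom.

```python
import numpy as np


def pose_from_ground_plane(normal, d, ground_height=0.0):
    """Recover (roll, pitch, z) from a ground plane n . p + d = 0 in the sensor frame.

    Hypothetical helper. Assumes R = Rz(yaw) @ Ry(pitch) @ Rx(roll) maps sensor to
    world coordinates, the ground is the world plane z = ground_height, |pitch| < 90 deg.
    """
    n = np.asarray(normal, dtype=float)
    norm = np.linalg.norm(n)
    n, d = n / norm, d / norm            # normalise so that |n| = 1
    if n[2] < 0:                          # make the normal point "up" in the sensor frame
        n, d = -n, -d
    # World up-axis seen from the sensor: R^T e_z = (-sin p, sin r cos p, cos r cos p).
    roll = np.arctan2(n[1], n[2])
    pitch = np.arctan2(-n[0], np.hypot(n[1], n[2]))
    # e_z . (R p + t) = h  =>  n . p + (t_z - h) = 0, hence t_z = h + d.
    z = ground_height + d
    return roll, pitch, z
```

Feeding it a plane generated from a known pose returns that pose's roll, pitch, and height for any yaw, which makes the three unobservable directions explicit.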

The above methods construct constraints by introducing geometric information. Although this helps, the accuracy improvement remains limited because the geometric features used to construct the constraints come from the current local submap, into which cumulative errors have already been introduced during its construction. A structured-environment assumption, such as the Manhattan world (MW) assumption [12], can be introduced to better constrain SLAM pose estimation with geometric information from the current environment. Because the MW assumption is a prior assumption about the environment, the geometric constraints it provides do not carry the cumulative errors already present in the map. The MW assumption states that man-made environments contain structured features, namely a large number of parallel and perpendicular relationships; in other words, man-made buildings are aligned with three dominant, mutually orthogonal directions. In the real world, many structured scenes (e.g., parking lots and corridors) can be regarded as conforming to the MW assumption, so its three main directions can be used to assist and improve SLAM in these scenes. In vision-based SLAM, many researchers have already studied MW-aided visual SLAM algorithms in depth. The studies in [13,14,15,16] improved vision-based SLAM frameworks using the structured features expressed by the MW assumption, reducing drift and increasing accuracy in low-texture environments. The authors of [17] used structured lines in the MW as new features for mapping and localization, and the resulting directional constraints improved the accuracy and robustness of the system. The study in [18] proposed a visual-inertial odometry based on multiple Manhattan worlds covering the environment, further improving the generality of the method. In LiDAR SLAM, related studies are still few. The authors of [19] used the MW assumption to extract orthogonal planes and generate plane-based maps, which are more convenient for subsequent path planning and occupy less memory. However, that algorithm focuses on real-time performance rather than accuracy.

In MW-based SLAM, a core task is extracting the planes in the current environment to construct plane constraints. The random sample consensus (RANSAC) algorithm [20,21] is a commonly used plane extraction method; it is robust to outliers and still gives good extraction results when the data contain a small number of outliers. When the environment contains multiple planes, the point cloud can also be segmented before plane extraction using the Hough transform [22] or a point cloud segmentation method such as region growing [23].
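As a concrete reference for the RANSAC step, the Python sketch below fits a single plane n · p + d = 0 to a NumPy point array. It is a generic textbook RANSAC followed by a least-squares (SVD) refinement on the inliers, not the extraction procedure of this paper; the function name `ransac_plane` and the default threshold and iteration count are illustrative assumptions. Multiple planes can be extracted by removing the inliers and repeating, or by segmenting the cloud first as described above.

```python
import numpy as np


def ransac_plane(points, threshold=0.05, iterations=200, seed=None):
    """Fit a plane n . p + d = 0 to an (N, 3) array with basic RANSAC.

    Returns (normal, d, inlier_mask); the normal has unit length.
    """
    pts = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_mask, best_count = None, -1
    for _ in range(iterations):
        sample = pts[rng.choice(len(pts), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        length = np.linalg.norm(normal)
        if length < 1e-12:                # degenerate (collinear) sample, skip it
            continue
        normal /= length
        d = -normal @ sample[0]
        mask = np.abs(pts @ normal + d) < threshold   # point-to-plane distance test
        count = int(mask.sum())
        if count > best_count:
            best_mask, best_count = mask, count
    if best_mask is None:
        raise ValueError("no non-degenerate sample found")
    # Refine with a least-squares fit on the inliers of the best hypothesis:
    # the normal is the right singular vector with the smallest singular value.
    inliers = pts[best_mask]
    centroid = inliers.mean(axis=0)
    normal = np.linalg.svd(inliers - centroid)[2][-1]
    d = -normal @ centroid
    mask = np.abs(pts @ normal + d) < threshold
    return normal, d, mask
```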

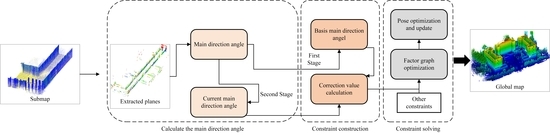

Considering the above problems and existing studies, this paper introduces the MW assumption to express the regular geometric features of the environment and proposes a LiDAR-based planar constraint method for environments that conform to the MW assumption. The method processes the ground and vertical planes (such as walls and columns) with different strategies, dividing the six-degree-of-freedom (6-DoF) pose estimation problem into two three-degree-of-freedom (3-DoF) sub-problems, which improves the accuracy and robustness of the system. In environments that do not conform to the MW assumption, the method becomes inactive because no plane satisfying the thresholds is detected, but this does not affect the normal operation of the rest of the SLAM system. The main contributions of this paper are as follows:

A planar constraint method based on the MW assumption, which treats the ground and vertical planes separately, is designed to introduce planar constraints into the LiDAR SLAM framework.

A two-step strategy for calculating and updating the main direction angles of the vertical planes is used; by lagging the update of the main direction angles, it avoids introducing errors from planes that have not yet been optimized.

Using a backpack laser scanning system, we collected real data in an indoor parking lot, a corridor, and an outdoor environment, and surveyed the absolute coordinates of manually placed target points in the corridor and parking lot. We evaluated the quality and absolute accuracy of the point clouds produced on this dataset by our method and by three LiDAR SLAM algorithms that currently perform well and are widely used. The experimental results show that our method achieves better accuracy in all environments that conform to the MW assumption.
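The second contribution relies on main direction angles that resist a few non-MW structures. The authors' procedure is not reproduced here; the Python sketch below is only a hypothetical illustration of one statistics-based estimate. Under the MW assumption, the horizontal normals of walls and columns point along two orthogonal axes, so their azimuths agree modulo 90°. The sketch folds the azimuths into [0°, 90°), takes the mode of a histogram as a coarse estimate, and refines it with the mean of the nearby azimuths; the name `main_direction_angle`, the bin width, and the window are assumed values.

```python
import numpy as np


def main_direction_angle(normals, bin_deg=1.0, window_deg=3.0):
    """Estimate the dominant horizontal direction of vertical-plane normals.

    Hypothetical helper. Under the MW assumption, wall normals lie along two orthogonal
    horizontal axes, so their azimuths agree modulo 90 degrees. Returns degrees in [0, 90).
    """
    n = np.asarray(normals, dtype=float)
    az = np.degrees(np.arctan2(n[:, 1], n[:, 0])) % 90.0   # fold the 4 axis directions together
    # Coarse estimate: centre of the most populated histogram bin over [0, 90).
    counts, edges = np.histogram(az, bins=int(round(90.0 / bin_deg)), range=(0.0, 90.0))
    k = int(np.argmax(counts))
    coarse = 0.5 * (edges[k] + edges[k + 1])
    # Refinement: average the azimuths within the window around the mode (wrap-aware).
    diff = (az - coarse + 45.0) % 90.0 - 45.0              # signed offset in [-45, 45)
    close = np.abs(diff) <= window_deg
    return float((coarse + diff[close].mean()) % 90.0)
```

Outlying normals, for example from vehicles, fall into other bins and outside the window, so they do not shift the estimate.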

The remainder of this paper is organized as follows:

Section 2 proposes a method for constructing planar constraints based on the MW assumption.

Section 3 verifies the proposed method using a backpack laser scanning system to collect data.

Section 4 discusses the proposed method.

Finally, Section 5 concludes this study.

3. Experiment

In this section, we collected datasets in various indoor and outdoor environments and used them to compare our method with three other algorithms: LeGO-LOAM, LIO-SAM, and FAST-LIO2. To adapt to the backpack laser scanning system used in this paper, the data input part of each algorithm was modified accordingly; these modifications do not affect the results of any of the four algorithms. Section 2 introduced several parameters of our method, whose values need to be selected according to the data scenario. The exact parameter values are shown in Table 1, and they apply to every experimental scenario in this paper. These parameters were obtained by trial and error. Because parameters serving the same function in different algorithms were set to the same values in both the qualitative and quantitative experiments, the choice of parameters does not bias the comparison. All other parameters of the compared algorithms were left at their default values.

3.1. Data Description

To verify the effectiveness of the proposed method, raw data were collected in three real environments (an outdoor campus, an indoor corridor, and an underground parking lot) using the backpack laser scanning system shown in Figure 8. The device contains two 16-line LiDARs, an IMU with a frequency of 200 Hz, and a GNSS receiver. The LiDARs, IMU, and GNSS receiver were calibrated against each other manually.

The experimental dataset (shown in Figure 9) consists of three sets of data collected in these three environments. Figure 9a shows the data collected in an above-ground indoor environment containing a straight corridor approximately 70 m long and a manually leveled floor. Figure 9b shows the data collected in an underground parking lot containing vehicles, columns, walls, and artificially leveled floors. We regard the corridor and underground parking lot datasets as two typical environments that conform to the MW assumption and use them to test the performance of our method. The data shown in Figure 9c were collected on a campus, which we do not regard as a typical MW environment because it contains many structures that do not conform to the assumption, such as vehicles, trees, and streetlights; we use this dataset to test how a non-MW environment affects the method. The acquisition time, trajectory length, and maximum change in ground elevation during acquisition for the three datasets are shown in Table 2. Because GNSS signals in some sections of the campus dataset were blocked by trees and other obstacles, ground truth of sufficient accuracy could not be obtained for those sections. We therefore selected three sub-datasets with good GNSS signals from the campus dataset; their details are shown in Table 3.

During the acquisition of each of the three datasets, a segment was also recorded in an outdoor area with excellent GNSS signals. The GNSS trajectory corresponding to this segment of the SLAM output trajectory was obtained (the GNSS data were differentially processed to improve their accuracy), so that the transformation between the SLAM global coordinate system and the WGS84 coordinate system provided by GNSS could be derived from these two trajectory segments. Point cloud maps in the WGS84 coordinate system can therefore be obtained for all three experimental datasets. For the corridor and parking lot datasets, the part of the data used to derive this transformation was manually cut out and is not discussed in the experiments. The experimental data were processed on a computer with an Intel Core i5-11400H @ 2.7 GHz processor and 16 GB of RAM.
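The text does not specify how the SLAM-to-WGS84 transformation was computed. A common choice, shown here only as a hedged Python sketch, is a least-squares rigid alignment (the Kabsch/Umeyama solution without scale) of time-associated position pairs. It assumes that the GNSS fixes have already been converted from WGS84 geodetic coordinates to a local Cartesian frame such as ENU (not shown) and that the metric LiDAR SLAM trajectory needs no scale correction; `align_rigid` is a hypothetical name.

```python
import numpy as np


def align_rigid(src, dst):
    """Least-squares rigid transform (R, t) with dst ~= R @ src + t (Kabsch, no scale).

    src, dst: (N, 3) arrays of time-associated positions, e.g. SLAM positions and GNSS
    positions already converted to a local Cartesian frame such as ENU.
    """
    src, dst = np.asarray(src, dtype=float), np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)                  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    S = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ S @ U.T
    t = mu_d - R @ mu_s
    return R, t
```

Applying `R @ p + t` to every SLAM map point then expresses the map in the local GNSS frame, from which geodetic coordinates can be recovered.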

3.2. Evaluation Indicators

To compare the performance of the algorithms in real environments, the reflective markers shown in Figure 10 were placed in the two GNSS-denied environments, the corridor and the underground parking lot. Their high laser reflectivity allows the markers to be located in the point cloud map using the intensity view of the point cloud. The markers were placed as evenly and in as sufficient numbers as possible throughout these environments. We obtained the absolute 3D coordinates of the markers in the WGS84 coordinate system by surveying with a total station and other measuring instruments. In the quantitative experiments, these coordinates serve as the true values for evaluating accuracy in the two indoor scenes.

For the outdoor scene, GNSS data are recorded by the backpack laser scanning system, and more accurate trajectory coordinates are obtained by post-processing these data together with data from an additional GNSS reference station. These trajectory coordinates serve as the true values for evaluating accuracy in the outdoor scene in the quantitative experiments. Because only three sub-datasets of the campus dataset have GNSS coverage good enough to obtain true values, the quantitative evaluation of the campus dataset is performed only on these three sub-datasets. Trajectory plots and error distribution plots were produced with the EVO evaluation tool [27].

This paper uses the root mean square error (RMSE) as the accuracy metric in the quantitative experiments. Here, the RMSE measures the error between the coordinates of each marker in the point cloud map output by SLAM, obtained by manually selecting the center of the marker's point cloud, and its surveyed true value. For the i-th reflective marker, let its coordinates in the point cloud map be $(x_i, y_i, z_i)$ and the corresponding true value be $(\hat{x}_i, \hat{y}_i, \hat{z}_i)$; the RMSE is then calculated as shown in Equation (15):

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left[(x_i-\hat{x}_i)^2 + (y_i-\hat{y}_i)^2 + (z_i-\hat{z}_i)^2\right]} \tag{15}
$$

where $n$ is the total number of reflective markers placed in the scene.
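The metric can be computed as in the Python sketch below. It assumes that Equation (15) is the usual root of the mean squared 3D distance between estimated and surveyed marker coordinates, and that the per-axis RMSEs used in the quantitative experiments apply the same formula to each axis alone; `marker_rmse` is a hypothetical helper, not code from the paper.

```python
import numpy as np


def marker_rmse(estimated, reference):
    """Per-axis and overall RMSE between marker coordinates, both (n, 3) arrays in metres."""
    err = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    per_axis = np.sqrt(np.mean(err ** 2, axis=0))          # RMSE in X, Y, Z separately
    overall = np.sqrt(np.mean(np.sum(err ** 2, axis=1)))   # RMSE of the 3D marker errors
    return per_axis, overall
```

With these definitions the overall RMSE equals the square root of the sum of the squared per-axis RMSEs, so the per-axis and overall figures are consistent with each other.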

3.3. Qualitative Experiments

We processed the three datasets with our method and the three other algorithms. In this subsection, the proposed method is evaluated qualitatively in two respects, vertical plane extraction and the ground constraint, and its effectiveness is further assessed by comparing its trajectories with those of the other methods. The point clouds obtained with our method for the three datasets are shown in Appendix A, colored according to their Z coordinate values.

3.3.1. Vertical Plane Extraction Evaluation

The results of vertical plane extraction for the three datasets using the proposed method are shown in Figure 11, where the point clouds are colored by intensity. Results (a–d) show the vertical plane extraction results for the corridor and underground parking lot datasets. The top and front views show that, for the corridor and parking lot, which conform to the MW assumption, our vertical plane extraction method extracts the vertical planes in the scene fairly completely and reflects the general outline of the scene. The extracted planes contain no floor or ceiling points, indicating good extraction accuracy. The red boxes mark some structures in the two scenes that do not conform to the MW assumption. Thanks to our statistics-based main direction angle extraction strategy, these few non-conforming structures do not significantly affect the estimated main direction angles. Because the campus dataset is not a typical MW environment, only a small number of vertical planes are extracted from it; results (e) and (f) show part of these extraction results. In an environment that does not conform to the MW assumption, the vertical plane constraint module works only in the few cases that satisfy the thresholds set by our method and does not affect the system otherwise.

3.3.2. Ground Constraint Evaluation

After applying the ground constraint of our method to the three datasets, we compared the elevations of the resulting ground point clouds with those obtained by the other algorithms, as shown in Figure 12, where the point clouds are colored by elevation (Z coordinate values). Although LeGO-LOAM constrains the ground, it is designed specifically for unmanned ground vehicles: it requires the LiDAR to be mounted approximately parallel to the ground, or its data to be transformed so that they are. Our backpack laser scanning system does not meet this requirement well, so LeGO-LOAM performs poorly on all three datasets. In the corridor dataset, where the environmental elevation changes little, the ground point clouds (b), (c), and (d) show no clearly different elevation trends, whereas the LeGO-LOAM result (a) shows a significant change in ground elevation. However, the blue in (b) and (c) gradually deepens from left to right, indicating a gradual decrease in ground elevation, while the color distribution in (d), obtained by our method, is more uniform and consistent with the actual constant elevation of the corridor. In the underground parking lot dataset, the ground elevation in the actual environment does not change significantly. The color distribution of the ground point cloud in (g), obtained by our method, is uniform and consistent with this. The point clouds in (e) and (f) show a significant elevation change from left to right, with the left part clearly lower than the middle and right parts. LeGO-LOAM could not produce a valid point cloud for this dataset. In the campus dataset, where the ground actually undulates, results (i), (j), and (k), the last obtained by our method, reflect the real elevation changes to some extent, whereas (h) shows noticeable point cloud distortion.

The trajectories and trajectory coordinates obtained by our method and the other methods are shown in Figure 13 and Figure 14. Because the LeGO-LOAM trajectories differ too much from those of the other three methods, its trajectory coordinates are omitted from Figure 14 so that the details of the other three can be seen. The trajectory variations show more clearly that, in the corridor and underground parking lot datasets, the trajectories obtained by our method better reflect the elevation variations of the real environment. In the campus environment, where the elevation changes significantly, the elevation trend of our method is similar to those of LIO-SAM and FAST-LIO2; the ground constraint takes effect only where the elevation undulates slightly. Near time stamp 106,400 s, the elevations of the LIO-SAM and FAST-LIO2 trajectories differ significantly from that of our method; we explore this further in the quantitative analysis.

In summary, in structured environments such as corridors and parking lots, the ground constraint of our method better reflects the elevation changes of the real environment. In unstructured environments such as campuses, it works only in the parts that conform to the MW assumption.

3.4. Quantitative Experiment

For the corridor and underground parking lot datasets, we use Equation (15) to calculate the RMSE of the reflective markers in the point clouds as a quantitative measure of point cloud map quality. To characterize the performance of our method more clearly, we calculate the RMSE of the markers in the X, Y, and Z directions separately, as well as the RMSE of the 3D coordinates to describe the overall error.

Although the corridor and underground parking lot datasets included a small portion of above-ground environment at acquisition time, our quantitative evaluation covers only the indoor part of these two datasets. The RMSEs of the reflective markers for the four methods are shown in Table 4, where “/” indicates that no valid result could be obtained. Because there is no GNSS signal indoors and no other effective constraint, the accuracy of LeGO-LOAM, LIO-SAM, and FAST-LIO2 is poor, with especially large errors in the z-direction. Our method achieves the best accuracy in every direction and in overall error on both datasets, except in the x-direction of the corridor dataset, a large improvement over the other three methods. Compared with LeGO-LOAM, LIO-SAM, and FAST-LIO2, our method reduces the overall error by 99.5%, 73.4%, and 93.4%, respectively, in the corridor dataset; in the underground parking lot dataset, where LeGO-LOAM gives no valid result, it reduces the overall error by 41.4% relative to LIO-SAM and by 79.6% relative to FAST-LIO2. Therefore, our method achieves high accuracy in environments that conform to the MW assumption.
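The reduction percentages are presumably computed relative to each baseline's overall RMSE, that is, 100 × (RMSE_baseline - RMSE_ours) / RMSE_baseline. The Python helper below (a hypothetical name, not from the paper) shows this arithmetic with made-up RMSE values, not those of Table 4.

```python
def relative_reduction(baseline_rmse: float, our_rmse: float) -> float:
    """Percentage by which our RMSE is lower than the baseline RMSE."""
    if baseline_rmse <= 0:
        raise ValueError("baseline RMSE must be positive")
    return 100.0 * (baseline_rmse - our_rmse) / baseline_rmse


# Hypothetical values in metres (not taken from Table 4).
print(f"{relative_reduction(0.500, 0.133):.1f}%")
```

Read the other way, a 73.4% reduction means the remaining error is 26.6% of the baseline's, roughly 3.8 times smaller.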

For the campus dataset, we calculate the RMSE of the trajectories obtained by all four methods relative to the ground truth in each of the three sub-datasets with good GNSS coverage (shown in Table 5), and plot the distribution of trajectory errors in Figure 15. On the Campus-01 and Campus-03 sub-datasets, the accuracy of our method is close to the best result even though the campus environment does not conform to the MW assumption: its RMSE is 1.1 cm higher on Campus-01 and 0.9 cm higher on Campus-03 than that of the best method. On Campus-02, however, its RMSE is 9.2 cm higher. Together with the significant elevation difference between our method and the other methods near time stamp 106,400 s observed in the qualitative experiments, this suggests that the decrease in accuracy may be caused by erroneous optimization resulting from using the same parameter settings throughout the entire dataset. We discuss this issue further in Section 4.

4. Discussion

This paper aims to introduce additional constraints into the LiDAR SLAM system to enhance its stability and robustness. To this end, we use the MW assumption to find the parallel and perpendicular relationships between the planes of man-made buildings and introduce them into the LiDAR SLAM system as prior constraints. We expect this work to be applicable, and to improve accuracy, in many scenarios such as parking lots, indoor rooms, and long corridors. Although some planar constraint algorithms exist for vision-based SLAM, this effective idea of introducing additional constraints has not been widely studied in LiDAR SLAM.

The vertical plane extraction of our method may produce false extractions: a small number of structures in the scene may not conform to the MW assumption, and the main direction angle calculation is susceptible to interference from these gross errors. To eliminate them, we adopt a statistics-based two-step strategy for calculating and updating the main direction angles of the vertical planes, which improves the stability and robustness of the system; lagging the update of the main direction angle avoids errors that unoptimized planes could introduce. The parameter settings affect the performance of our method. In scenarios that conform to the assumption, structured features such as parallel and perpendicular planes are obvious, and the parameters need not be changed during processing. In environments that do not conform to the MW assumption, however, the environment is more diverse, and different parameters may be needed at different locations within a dataset to cope with environmental changes. This may explain the increased trajectory error on the Campus-02 dataset in the campus experiments of Sections 3.3 and 3.4, which may be caused by inappropriate parameter settings, such as an overly large update threshold for the ground main direction angle. Too large an update threshold reduces the ability of our method to describe the real terrain. Conversely, too small a threshold causes frequent updates of the ground main direction angle. Such updates usually occur in undulating terrain, where the old ground main direction angle no longer applies to the current environment; at these moments the angle can only be updated, and no constraint can be added. Frequent updates therefore reduce the number of constraints.
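The threshold trade-off described above can be made concrete with the hypothetical Python sketch below, which is not the authors' implementation. It assumes that the ground main direction angle can be summarized as a single angle in degrees and that a ground constraint is added only while a new observation stays within the update threshold; otherwise the stored angle is replaced and no constraint is added for that observation. The class name `GroundDirectionGate` is made up for this example.

```python
from dataclasses import dataclass


@dataclass
class GroundDirectionGate:
    """Hypothetical gate deciding whether a new ground observation yields a constraint.

    angle_deg: current ground main direction angle (assumed to be a single angle).
    threshold_deg: update threshold; larger values add more constraints but follow
    genuine terrain changes less closely.
    """
    angle_deg: float
    threshold_deg: float
    updates: int = 0

    def step(self, observed_deg: float) -> bool:
        """Return True if a ground constraint should be added for this observation."""
        if abs(observed_deg - self.angle_deg) > self.threshold_deg:
            # Terrain changed: the old main direction no longer applies, so only
            # update it and add no constraint for this observation.
            self.angle_deg = observed_deg
            self.updates += 1
            return False
        return True
```

Passing the same sequence of observed angles through a gate with a smaller threshold triggers more updates and therefore yields fewer constraints, while a larger threshold keeps constraining with an outdated angle on genuinely sloped terrain.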

We have not yet discussed the time efficiency of the algorithm. Because plane extraction is time-consuming, our method cannot yet achieve real-time performance: it currently needs 1 to 1.5 times the acquisition time to complete the computation.