1. Introduction

In recent years, change detection (CD) has attracted increasing interest due to its wide range of applications [1,2,3,4,5,6,7], such as urban development, land cover monitoring, natural disaster detection, and ecosystem monitoring. CD aims to identify changed areas by comparing two images of the same place acquired at different times [8].

With the rapid development of remote sensing technologies, obtaining large numbers of hyperspectral images (HSIs) for CD has become much easier. HSIs contain more detailed spectral patterns and textural information than other remote sensing images, such as RGB images, multispectral images (MSIs), and synthetic aperture radar (SAR) images [9,10,11]. Consequently, HSIs have the potential to reveal more subtle variations [12] and to distinguish the detailed composition of different categories more effectively. However, the high spectral dimensionality of HSIs also poses challenges for the CD feature extraction network, for two main reasons. First, labeled HSI datasets for CD are scarce, which makes it difficult to train a deep feature extraction network with supervised learning. Second, because the spectral bands of HSIs are densely sampled, image-processing methods designed for multispectral or optical remote sensing images cannot be directly transferred to effectively mine the spectral information of HSIs.

To address these challenges, several advances have recently been made in HSI-CD feature extraction. Among them, principal component analysis (PCA) [13] is the most commonly used component; it maps the high-dimensional information of an HSI to a low-dimensional space. Specifically, PCA takes an HSI with $C$ channels as input, computes the $C \times C$ covariance matrix of the channels, and derives from this matrix the projection onto each principal component; this transformation is linear. Another component worth mentioning is compressed change vector analysis (C²VA) [14], which is inspired by metric learning and can be implemented in two steps. First, it calculates the Euclidean distance between each pair of corresponding pixels, i.e., the magnitude of the spectral change vector, and clusters the pixels following the idea of metric learning. Second, it calculates the angle of each pixel's change vector and establishes the phase relationship between different pixels to distinguish different categories. This transformation is non-linear. These two methods are classic works in change detection, and many subsequent methods build on them, such as sequential spectral change vector analysis (S²CVA) [15] and robust change vector analysis (RCVA) [16]. However, all of the above methods use statistics computed over all pixels in a single channel to determine the importance of that channel, and then compress the high-dimensional features into a low-dimensional feature space. Unlike previous work, we consider how to make each pixel select the optimal channels for the final task and integrate these channels into a new feature channel, so as to exploit as much of the useful information as possible.
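To make the two classic baselines concrete, the following NumPy sketch implements textbook PCA through the $C \times C$ band covariance matrix, together with the two C²VA quantities (change magnitude and change direction). It is an illustration, not the implementation used in [13] or [14]; the function names are our own, and measuring the C²VA angle against the normalized all-ones reference vector is an assumption based on the usual formulation of that method.

```python
import numpy as np

def pca_reduce(hsi, k):
    """Project an H x W x C hyperspectral image onto its k leading principal components."""
    h, w, c = hsi.shape
    x = hsi.reshape(-1, c).astype(np.float64)   # one row per pixel, one column per band
    x -= x.mean(axis=0)                          # center each band
    cov = x.T @ x / (x.shape[0] - 1)             # C x C band covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    top = eigvecs[:, ::-1][:, :k]                # k eigenvectors with the largest eigenvalues
    return (x @ top).reshape(h, w, k)            # the same linear projection for every pixel

def c2va(img1, img2):
    """Per-pixel magnitude and direction of the spectral change vector (C2VA-style)."""
    d = img2.astype(np.float64) - img1.astype(np.float64)  # H x W x C change vectors
    c = d.shape[-1]
    rho = np.linalg.norm(d, axis=-1)                       # magnitude: Euclidean norm
    ref = np.ones(c) / np.sqrt(c)                          # assumed unit reference vector
    cos = (d @ ref) / np.maximum(rho, 1e-12)               # zero change maps to pi / 2
    theta = np.arccos(np.clip(cos, -1.0, 1.0))             # direction angle in [0, pi]
    return rho, theta
```

The contrast drawn in the text is visible here: PCA applies one linear projection shared by all pixels, whereas the C²VA angle is a non-linear function of each pixel's own change vector.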

Compared with the fully convolutional network (FCN) and the convolutional neural network (CNN), the recurrent neural network (RNN) can model the dependencies within sequential data. Applying the RNN structure to the feature extraction of HSIs has the following advantages:

The sequence information of HSIs can be retained. Previous band selection methods destroy the sequential order of the feature space, whereas an RNN assigns a new weight to each element of the sequence to preserve the spectral information, so that the importance of each spectral band can be enhanced or suppressed by the weights.

The sequential processing of an RNN helps reduce the redundant information in HSIs. Since the features of adjacent channels in a hyperspectral image are similar, it is even possible to predict the information of the current channel from the features of the previous channel. An RNN can exploit this property to filter out redundant information.

Based on these two advantages, this paper develops a new feature extraction network based on a hybrid of CNN and RNN for HSI-CD tasks. The main contributions are summarized as follows:

A feature extraction network based on RNN is proposed, which better retains the sequential information of HSIs and is more conducive to filtering out redundant information.

The subsequent CNN structure utilizes the spectral information of adjacent regions to suppress noise and improve the change detection results.

For binary change detection, our method can extract the most relevant feature channel for each pixel, which can alleviate the mixed-pixel problem in remote sensing image change detection.

The rest of this paper is organized as follows. Section 2 gives a brief review of the related work. Section 3 introduces the preliminary knowledge about our method and describes the proposed method in detail. The datasets and comprehensive experimental results are presented in Section 4. The effectiveness of the RNN and the setting of the hyperparameters are discussed in Section 5. Finally, Section 6 summarizes this paper.

3. Method

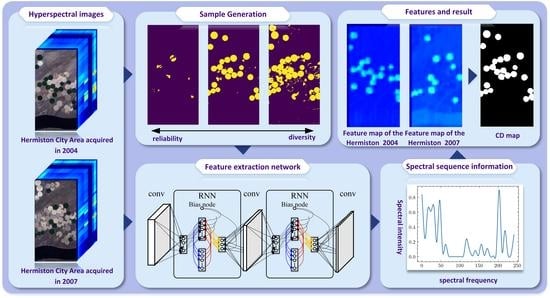

In this work, an effective feature extraction network for HSIs is proposed, in which the sequence information of hyperspectral images is retained and each pixel can select the optimal channel information. The proposed method is based on the hierarchical integration of an RNN and a CNN, two widely used deep neural network (DNN) architectures, of which the RNN is specifically suited to sequence processing. An overview of the method is shown in Figure 1. The proposed method contains three main parts: a low-dimensional feature extractor, a high-dimensional feature extraction module based on a hybrid of RNN and CNN, and a final fully connected layer. The low-dimensional feature extractor and the hybrid feature learning module are introduced in the following sections.

3.1. Low-Dimensional Feature Extractor

CNN is a category of neural network that is mainly used to analyze, recognize, or classify images. Its advantage is that it requires less manual feature engineering and pre-processing, and its parameters are learned end to end by backpropagation. Like a multilayer perceptron (MLP), it consists of an input layer, multiple hidden layers, and an output layer. Unlike an MLP, however, in which each neuron is connected to every neuron in the subsequent layer, each neuron in a convolutional layer is connected only to a local region of the previous layer, and the same kernel weights are shared across spatial positions.

As shown in Figure 1, our model first applies a convolutional layer with a kernel of size $k_1 \times k_1$ to an HSI patch to extract shallow features. Then, a filter of size $k_2 \times k_2$ is repeatedly applied to the sub-matrices of the input feature maps. Note that the resulting feature map has size $H \times W \times 128$, where $H \times W$ is the spatial size of the input patch; that is, the channels of the input image are reduced to 128 rather than 32, while the height and width are kept the same. This reduces the loss of temporal information caused by CNN dimensionality reduction. The feature maps of the two convolutional layers are calculated as follows:

$$F_1 = \sigma\left(W_1 \ast X + b_1\right), \qquad F_2 = \sigma\left(W_2 \ast F_1 + b_2\right),$$

where $X$ is the input patch, $\ast$ denotes convolution, $W_1$ and $W_2$ stand for the convolutional kernels of size $k_1 \times k_1$ ($k_2 \times k_2$) with $c_1$ ($c_2$) channels, $\sigma(\cdot)$ represents the ReLU function, and $b_1$ and $b_2$ are the bias parameters of the first and second convolutional layers, respectively.
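The two convolutional layers can be sketched in NumPy as below. Because the kernel sizes are not preserved in this text, the example values used in testing (a 3 × 3 kernel followed by a 1 × 1 kernel with 128 output channels) are assumptions. As deep-learning libraries do, the sketch computes cross-correlation with zero "same" padding, so the height and width of the patch are preserved.

```python
import numpy as np

def conv2d_same(x, w, b):
    """Naive 'same'-padded 2D convolution (cross-correlation).
    x: H x W x C_in, w: k x k x C_in x C_out with k odd, b: C_out."""
    k = w.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))        # zero-pad height and width only
    h, wd, _ = x.shape
    out = np.empty((h, wd, w.shape[3]))
    for i in range(h):
        for j in range(wd):
            patch = xp[i:i + k, j:j + k, :]          # k x k x C_in window around (i, j)
            out[i, j] = np.tensordot(patch, w, axes=3) + b
    return out

def relu(z):
    return np.maximum(z, 0.0)

def shallow_features(x, w1, b1, w2, b2):
    f1 = relu(conv2d_same(x, w1, b1))     # F1 = sigma(W1 * X + b1)
    return relu(conv2d_same(f1, w2, b2))  # F2 = sigma(W2 * F1 + b2)
```

A 1 × 1 kernel changes only the number of channels, which is one simple way to obtain an $H \times W \times 128$ map without altering the spatial size.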

It is important to state that, in addition to the two convolutional layers mentioned above, our model also has a separate convolutional layer following each RNN. The purpose is to better mix the sequence information extracted from the RNN for higher-level semantic representation.

3.2. Feature Learning of Hybrid RNN and CNN

Our initial idea was to feed hyperspectral data, with their sequence information, directly into an RNN. As noted in [37], RNNs have been extensively deployed to process sequence data: they capture both the temporal dependencies between the samples in a sequence and the most discriminative features of that sequence for various tasks. In practice, however, an RNN runs more slowly than a CNN, because parallel computing libraries are less well optimized for recurrent computation. Therefore, to improve the training speed, the first few layers of the network use a CNN to reduce the dimensionality of the data. An RNN is then utilized to capture the temporal information hidden in the channels of the feature maps generated by the CNN. Compared with standard feedforward networks, RNNs have the benefit of learning complex temporal dynamics over sequences. Given an input sequence $\mathbf{x} = (x_1, \ldots, x_T)$, an RNN layer calculates the forward sequence of hidden states $\overrightarrow{\mathbf{h}} = (\overrightarrow{h}_1, \ldots, \overrightarrow{h}_T)$ by iterating from $t = 1$ to $T$:

$$\overrightarrow{h}_t = \mathcal{H}\left(W_{xh}\, x_t + W_{hh}\, \overrightarrow{h}_{t-1} + b_h\right),$$

where $W_{xh}$ is the input-to-hidden weight matrix, $W_{hh}$ is the hidden-to-hidden weight matrix, $b_h$ is the bias, and $\mathcal{H}$ is the hidden activation function. In addition to the input $x_t$, the hidden activation $\overrightarrow{h}_{t-1}$ of the previous time step is fed in to affect the hidden state of the current time step. In a bidirectional RNN, an additional recurrent layer computes the backward sequence of hidden outputs $\overleftarrow{\mathbf{h}}$ by iterating from $t = T$ to 1.
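A minimal NumPy sketch of the forward recurrence and of a bidirectional layer follows, assuming $\mathcal{H} = \tanh$ (a common choice; the activation is not specified here). How each time step $x_t$ is formed from the CNN feature channels is also left open in this text, so the sketch simply takes a $T \times D$ array of per-step vectors.

```python
import numpy as np

def rnn_forward(xs, w_xh, w_hh, b_h):
    """Elman RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), iterating t = 1..T with h_0 = 0."""
    h = np.zeros(w_hh.shape[0])
    hs = []
    for x_t in xs:                                   # xs: T x D_in
        h = np.tanh(w_xh @ x_t + w_hh @ h + b_h)
        hs.append(h)
    return np.stack(hs)                              # T x D_h

def birnn(xs, fwd_params, bwd_params):
    """Bidirectional layer: a second RNN runs from t = T down to 1; both are concatenated."""
    h_fwd = rnn_forward(xs, *fwd_params)
    h_bwd = rnn_forward(xs[::-1], *bwd_params)[::-1] # re-align backward states with t
    return np.concatenate([h_fwd, h_bwd], axis=1)    # T x 2 D_h
```

Stacking two such layers, as in the experiments, means feeding the $T \times 2D_h$ output of the first layer into a second `birnn` whose input weight matrices have $2D_h$ columns.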

In the experiments, our model stacks two bidirectional recurrent layers unless otherwise stated. At each time step $t$, the concatenation of the forward and backward hidden states of the current layer, $h_t = [\overrightarrow{h}_t; \overleftarrow{h}_t]$, is used as the input of the next recurrent layer. The output at each frame $t$ is then the conditional distribution $p(y_t \mid \mathbf{x})$; for example, a multinomial distribution can be produced with a softmax activation function.

Furthermore, the probability of an output sequence $\mathbf{y}$ for the input sequence $\mathbf{x}$ can be computed by combining these per-frame values:

$$p(\mathbf{y} \mid \mathbf{x}) = \prod_{t=1}^{T} p(y_t \mid \mathbf{x}).$$

The outputs of the convolutional layer following the RNN are flattened to form a feature vector, which is then used as the input of a fully connected network (see Figure 1).
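The per-frame softmax outputs $p(y_t \mid \mathbf{x})$ of the recurrent layers and their product over frames can be computed as follows. Working in log space turns the product into a sum and avoids numerical underflow for long sequences. This is a generic sketch that assumes conditionally independent per-frame outputs; the function names are hypothetical.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)     # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sequence_log_prob(logits, labels):
    """logits: T x K per-frame scores; labels: length-T class indices.
    Returns log p(y | x) = sum_t log p(y_t | x), the log of the product over frames."""
    probs = softmax(logits, axis=1)
    return float(np.sum(np.log(probs[np.arange(len(labels)), labels])))
```

For example, with uniform scores over two classes every frame has probability 0.5, so a three-frame sequence has probability $0.5^3 = 0.125$.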

3.3. Change Detection Head Based on Fully Connected Network

After the feature extraction stage described above, the module extracts features from the input hyperspectral images of the two acquisition dates separately. For each pair of input image patches, a pair of feature maps is obtained, and each feature map can be regarded as a high-dimensional semantic representation of the region in which the central pixel is located. Intuitively, we take the difference between the two feature maps and flatten the result into a vector, which serves as a measure of the change. Compared with the common CVA method, this reduces the dimension of the vector and suppresses overfitting of the network. Unlike pixel-based methods, it better fuses the spatial and spectral information of the region.

Following common neural network design, the change detection head consists of three cascaded fully connected layers with ReLU activations. Since the task is binary change detection, the last layer of the network contains only two neurons, representing the activation for the categories of change and no change, respectively. The fully connected layers thus classify the difference information and generate the binary change detection result. Owing to the effectiveness of the feature extraction module, the detection head achieves good results without any special design.
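The difference-and-classify head described in the two paragraphs above can be sketched as follows. The hidden layer widths used in testing are hypothetical, since Table 1 is not reproduced here; following the text, output index 0 is "change" and index 1 is "no change", and a softmax turns the two activations into probabilities.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def cd_head(feat_t1, feat_t2, layers):
    """Change detection head sketch.
    feat_t1, feat_t2: feature maps of the same shape for the two acquisition dates.
    layers: three (weight, bias) pairs; the last weight matrix has two rows."""
    v = (feat_t1 - feat_t2).ravel()       # difference of the feature maps, flattened
    for i, (w, b) in enumerate(layers):
        v = w @ v + b
        if i < len(layers) - 1:           # ReLU after the hidden layers only
            v = relu(v)
    z = v - v.max()                       # softmax over [change, no change]
    return np.exp(z) / np.exp(z).sum()
```

With zero biases, identical feature maps produce a zero difference vector and therefore equal scores for both classes, which is a quick sanity check on the wiring.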

3.4. Unsupervised Sample Generation

Let $I_1$ and $I_2$ be two hyperspectral images taken at times $t_1$ and $t_2$, respectively. The difference map $D$ can be calculated as

$$D = I_1 - I_2.$$

We aim to generate high-quality training samples for detecting changes from $D$ in an unsupervised manner, dividing the samples into two subsets, $\Omega_c$ and $\Omega_u$, corresponding to changed and unchanged samples, respectively. Since the input images are strictly co-registered, the difference map represents the intensity of change at each position. Therefore, we use the 2-norm of the spectral change vector to express the magnitude of the change at each location $(i, j)$, i.e.,

$$\rho(i, j) = \left\| D(i, j) \right\|_2 = \sqrt{\sum_{c=1}^{C} D_c(i, j)^2},$$

where $C$ represents the number of spectral channels.

The traditional approach uses the Otsu algorithm to automatically determine a threshold for the magnitude map of the spectral change vectors. With this threshold, the magnitude map can easily be divided into two clusters: the part with smaller values is used as unchanged samples, and the part with larger values is used as changed samples. Pseudo-label-based unsupervised learning enables the network to mine the deep structural information of the data, reduce the interference of noisy samples, and generate better detection results.
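Computing the change magnitude and the Otsu hard threshold takes only a few lines. The sketch below implements Otsu's method directly on a histogram (256 bins is our arbitrary choice) and returns the upper edge of the last "unchanged" bin, so that pixels with a magnitude below the threshold are treated as unchanged. The function names are hypothetical.

```python
import numpy as np

def change_magnitude(img1, img2):
    """rho(i, j) = ||I1(i, j) - I2(i, j)||_2 over the C spectral channels."""
    d = img1.astype(np.float64) - img2.astype(np.float64)  # difference map D, H x W x C
    return np.sqrt(np.sum(d ** 2, axis=-1))                # H x W magnitude map

def otsu_threshold(values, bins=256):
    """Otsu's method: pick the split that maximizes the between-class variance."""
    hist, edges = np.histogram(values.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                       # count at or below each candidate bin
    w1 = w0[-1] - w0                           # count above it
    m0 = np.cumsum(hist * centers)             # cumulative first moment
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2       # proportional to between-class variance
    return edges[np.argmax(between) + 1]       # upper edge of the last unchanged bin
```

Usage: `changed = change_magnitude(img1, img2) >= otsu_threshold(rho)`, where `rho` is the magnitude map; everything below the threshold forms the initial unchanged cluster.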

However, the borderline samples produced by a hard threshold lower the sample quality, increase the proportion of false labels, and make the network difficult to optimize. In our experiments, when low-quality training samples were used, the network converged poorly, and its detection accuracy was even worse than that of traditional methods. In this paper, we therefore first calculate the distribution parameters (the means and variances) of the negative and positive samples determined by the hard threshold, and set an overlap coefficient $\alpha$. Pseudo-labels are then generated from these statistics and $\alpha$. When $\alpha = 0$, only absolutely reliable samples are used for training, which ensures the correctness of the samples but reduces their diversity. As $\alpha$ increases, more kinds of samples are gradually included in the training set, at the cost of introducing a small number of wrong labels.
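The pseudo-label generation formula itself is not preserved in this text, so the following is only a hypothetical illustration of an overlap coefficient that behaves as described: with $\alpha = 0$ only the most confident pixels are kept, and increasing $\alpha$ admits more pixels on each side. The specific rule (label as changed if the magnitude is at least $\mu_p - \alpha\sigma_p$, as unchanged if it is at most $\mu_n + \alpha\sigma_n$, and discard the rest) is our assumption, not the paper's formula, and it uses standard deviations rather than variances.

```python
import numpy as np

def pseudo_labels(rho, t, alpha):
    """HYPOTHETICAL rule, not the paper's formula.
    rho: change magnitudes; t: hard (e.g. Otsu) threshold; alpha >= 0: overlap coefficient.
    Returns 1 = changed, 0 = unchanged, -1 = discarded."""
    neg, pos = rho[rho < t], rho[rho >= t]        # split given by the hard threshold
    mu_n, sd_n = neg.mean(), neg.std()
    mu_p, sd_p = pos.mean(), pos.std()
    is_pos = rho >= mu_p - alpha * sd_p           # alpha = 0: only the most confident changes
    is_neg = rho <= mu_n + alpha * sd_n           # alpha = 0: only the most confident non-changes
    labels = np.full(rho.shape, -1, dtype=int)
    labels[is_pos & ~is_neg] = 1
    labels[is_neg & ~is_pos] = 0                  # pixels claimed by both sets stay discarded
    return labels
```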

3.5. Network Overall Structure and Training Details

The overall structure of the network is shown in Table 1. The network is organized as a pipeline: its input is a pair of image patches centered on the same pixel in the two images, and its output indicates whether the region has changed, together with the predicted probability. The network can also easily be extended to multi-class change detection by increasing the number of output neurons.
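Extracting the pair of neighborhoods around a pixel can be sketched as below. The patch size is a parameter because its value is not preserved in this text, and reflect padding at the image border is our assumption; for simplicity the sketch pads on every call, whereas a practical pipeline would pad each image once.

```python
import numpy as np

def patch_pair(img1, img2, i, j, size):
    """Return the size x size neighborhoods centered on pixel (i, j) in both images (size odd)."""
    r = size // 2
    pad = ((r, r), (r, r), (0, 0))                # pad height and width, not the bands
    p1 = np.pad(img1, pad, mode="reflect")
    p2 = np.pad(img2, pad, mode="reflect")
    # pixel (i, j) sits at (i + r, j + r) in the padded images
    return p1[i:i + size, j:j + size], p2[i:i + size, j:j + size]
```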

All experiments were conducted on a Linux workstation with an NVIDIA RTX 3090 GPU (24 GB of memory), and the model was implemented in PyTorch. For the Hermiston dataset, we used image patch pairs as input, set the overlap coefficient to 0.1, and used all pseudo-labeled samples for network training. For the Bay Area and Santa Barbara datasets, we used image patch pairs as input and set the overlap coefficient to 0.5. Because these two datasets are larger and their sample distributions are more uniform, we randomly sampled 64,000 positive and 64,000 negative samples from all the generated pseudo-labeled samples as the training set. All experiments used the same training parameters: Adam was used as the optimizer with a learning rate of 0.0003, the batch size was set to 64, and the network was trained for 10 epochs before testing. In testing, all pixels of the Hermiston dataset were used to calculate the overall accuracy and kappa coefficient. For the Bay Area and Santa Barbara datasets, because the data include a mask of uncertain areas, we only calculated the overall accuracy and kappa coefficient of the pixels outside the masked area.
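The class-balanced sampling of pseudo-labeled pixels and the restriction of the evaluation to pixels outside the uncertain-area mask can be sketched as follows. The helper names, the fixed random seed, and the convention that -1 marks unlabeled pixels are our assumptions.

```python
import numpy as np

def balanced_sample(labels, n_per_class, seed=0):
    """Draw up to n_per_class changed (1) and unchanged (0) pixels, without replacement,
    from a pseudo-label map in which -1 marks unlabeled pixels."""
    rng = np.random.default_rng(seed)
    flat = labels.ravel()
    pos = np.flatnonzero(flat == 1)
    neg = np.flatnonzero(flat == 0)
    pick = np.concatenate([
        rng.choice(pos, size=min(n_per_class, pos.size), replace=False),
        rng.choice(neg, size=min(n_per_class, neg.size), replace=False),
    ])
    rng.shuffle(pick)                               # mix the two classes
    return np.unravel_index(pick, labels.shape)     # (row indices, column indices)

def masked_confusion(pred, ref, valid):
    """TP, TN, FP, FN counted only where valid is True (outside the uncertain-area mask)."""
    p, r = pred[valid].astype(bool), ref[valid].astype(bool)
    tp = int(np.sum(p & r)); tn = int(np.sum(~p & ~r))
    fp = int(np.sum(p & ~r)); fn = int(np.sum(~p & r))
    return tp, tn, fp, fn
```

For the Bay Area and Santa Barbara settings described above, the sampling call would be `balanced_sample(labels, 64000)`.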

4. Results

To validate the effectiveness of the proposed method, we conducted experiments on three real hyperspectral change detection datasets. We selected CVA-based methods and several deep-learning-based methods as comparison algorithms. Finally, we conducted ablation experiments to demonstrate the advantages of the proposed feature extraction structure.

4.1. Datasets and Evaluation Criteria

The first dataset is the Hermiston dataset. As shown in Figure 2, the two hyperspectral images were acquired in 2004 and 2007 over the same area of Hermiston, Oregon [46]. The two co-registered images have the same size, with a spatial resolution of 30 m and 242 available bands, and were acquired by the Hyperion sensor. The reference image, also shown in Figure 2, is a binary label map indicating whether each region has undergone meaningful change.

The other two datasets are the Bay Area dataset and the Santa Barbara dataset, both acquired by the AVIRIS sensor with 224 spectral bands. As shown in Figure 3, the Bay Area dataset was captured over Patterson, California, in 2013 and 2015; the hyperspectral images and the reference image have the same spatial size. As shown in Figure 4, the Santa Barbara dataset was captured over Santa Barbara, California, in 2013 and 2014. Unlike the Hermiston dataset, these two datasets mark not only the changed and unchanged areas but also the areas without accurate labels. This allows the detection performance of different algorithms to be evaluated more accurately and reduces the influence of noisy areas.

To quantitatively evaluate the results of the different algorithms, we use the overall accuracy ($OA$) and the kappa coefficient ($\kappa$). $OA$ is the proportion of correctly classified samples among all samples:

$$OA = \frac{TP + TN}{TP + TN + FP + FN},$$

where $TP$, $TN$, $FP$, and $FN$ represent the numbers of true positives, true negatives, false positives, and false negatives, respectively. $\kappa$ is based on the confusion matrix and measures classification agreement after correcting for the agreement expected by chance, which makes it informative when the numbers of samples in the two categories differ greatly. It is calculated as follows:

$$\kappa = \frac{OA - P_e}{1 - P_e}, \qquad P_e = \frac{(TP + FP)(TP + FN) + (FN + TN)(FP + TN)}{(TP + TN + FP + FN)^2}.$$
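Both metrics can be computed directly from the four confusion-matrix counts, which is a convenient way to check reported values; the function names below are our own.

```python
def overall_accuracy(tp, tn, fp, fn):
    """OA = (TP + TN) / N."""
    return (tp + tn) / (tp + tn + fp + fn)

def kappa(tp, tn, fp, fn):
    """Cohen's kappa for a binary confusion matrix."""
    n = tp + tn + fp + fn
    po = (tp + tn) / n                                              # observed agreement (= OA)
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2   # chance agreement P_e
    return (po - pe) / (1 - pe)
```

For example, with TP = 40, TN = 50, FP = 5, and FN = 5, the overall accuracy is 0.9, while $P_e = 0.505$ gives $\kappa = 0.395 / 0.495 \approx 0.798$, illustrating how kappa discounts chance agreement.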

4.2. Comparison Results and Analysis

In this section, we compare the proposed algorithm with several representative change detection methods to demonstrate the effectiveness and generality of the proposed framework. CVA is a classical spectral-based change detection method that derives the final result from the spectral difference map. DNN takes paired spectral vectors as input and uses a multi-layer fully connected network to extract features and generate results. CNN takes image patches as input and uses a convolutional neural network to extract features, which are then fed to a classifier. GETNET uses the spectral vectors to construct a two-dimensional correlation matrix and uses a CNN to reduce the number of model parameters.

Figure 5 shows the results of the compared and proposed algorithms on the Hermiston dataset, and the corresponding quantitative evaluation values are given in Table 2. As can be seen from Figure 5b, CVA detects most of the changed areas; however, owing to the threshold selection, some areas whose change magnitude is close to the threshold are falsely detected, resulting in small false-positive regions. As Table 2 shows, the deep-learning-based methods consistently achieve better results than the traditional method. Although the DNN method achieves high detection accuracy, its result contains more noise points than that of the CNN-based method, and these need to be filtered out with additional processing. Compared with GETNET, the proposed algorithm achieves higher detection accuracy. Moreover, since GETNET makes pixel-based predictions, it faces the same problem as DNN, namely more noise in the results.

The visual results on the Bay Area dataset are shown in Figure 6, and the corresponding detection accuracy indicators are listed in Table 3. As can be seen from Figure 6b, the CVA method performs well in most parts of the image, although, limited by the performance of its clustering step, its result inevitably contains noise. Notably, because the scene contains many confounding changes, pixel-based methods are more susceptible to noise, and the performance of DNN and GETNET is even slightly lower than that of the traditional method. Although the CNN method can utilize more neighborhood information, its improvement over DNN is still limited. Compared with all other algorithms, the proposed CD model better suppresses noise and more fully exploits the deep information in the pseudo-labels. The proposed method achieves better results in both qualitative and quantitative evaluations.

Consistent with the results on the Bay Area dataset, the proposed method also achieves performance gains on the Santa Barbara dataset. As shown in Figure 7 and Table 4, both GETNET and the proposed method achieve encouraging detection results; however, owing to its convolutional structure, the proposed network obtains slightly higher change detection accuracy. The scene contains a mountainous area with no change, yet because of shadows and sensor noise, the results of the different algorithms in this area differ considerably. Likewise, the CNN method consistently outperforms the DNN method owing to its ability to integrate spatial information. Benefiting from the use of an RNN to extract spectral information, the proposed method shows a significant improvement over the CNN method.

4.3. Ablation Study

To demonstrate that the RNN can effectively extract spectral information from hyperspectral image data, we designed a baseline network with the same structure as the proposed network but without the RNN.

Table 2 shows that the network without the RNN does not produce competitive results. As shown in Figure 8, in the circular farmland area, the RNN-based method obtains a more complete farmland region and, compared with the baseline, produces cleaner detection results at the boundaries between fields. The baseline still produces relatively clean detection results, which also indicates that the CNN feature extraction structure can effectively exploit the spatial information in hyperspectral images.

For the input hyperspectral image patch pairs, Table 5 illustrates the effectiveness of the proposed structure from another perspective: the feature extraction network with the RNN has fewer parameters and lower computational complexity. Fewer parameters make the network less prone to overfitting, which helps explain why the RNN-based network achieves better detection results and higher detection accuracy.