The Development and Usability Assessment of an Augmented Reality Decision Support System to Address Burn Patient Management

Abstract

1. Introduction

2. Materials and Methods

2.1. Augmented Reality Hardware and Development

2.2. Design and Content Development

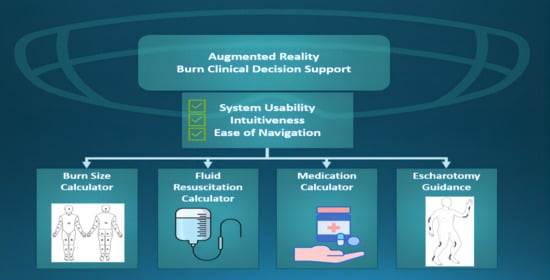

2.3. ARBAM Functionality

2.4. Sub-Application Functionality

2.5. Usability Testing

2.6. Testing

2.7. Data Collection and Analysis

2.8. Post-Simulation Survey and After-Action Reviews

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Age Range | 18–24 | 25–29 | 30–34 | 35–39 | 40–44 | 45–50 | 50+ |
|---|---|---|---|---|---|---|---|
| Participants Age % (n) | 9% (1) | 27% (3) | 9% (1) | 18% (2) | 9% (1) | 0% (0) | 27% (3) |

| Gender | Female | Male |
|---|---|---|
| Gender which you identify % (n) | 64% (7) | 36% (4) |

Pre-Task Survey Questions: Responses % (n)

| Video Games & Technology | No Experience | Some Experience | Moderate Experience | Highly Experienced |
|---|---|---|---|---|
| I play video games (of any kind on phone, PC, console) | 9% (1) | 27% (3) | 27% (3) | 36% (4) |
| I play first person shooter games | 45% (5) | 27% (3) | 18% (2) | 9% (1) |
| I use voice-activated technologies (e.g., Alexa or Siri) | 9% (1) | 45% (5) | 27% (3) | 18% (2) |
| I am comfortable with advanced technologies (e.g., smart watch, mobile tablets, smart phone) | 0% (0) | 0% (0) | 45% (5) | 55% (6) |

| Augmented Reality Technologies | Yes | No |
|---|---|---|
| I knew of AR prior to this study | 82% (9) | 18% (2) |
| I have used the Microsoft HoloLens™ | 9% (1) | 91% (10) |
| I am comfortable with the gestures used while wearing the HoloLens™ | 100% (11) | 0% (0) |
| I have used other head-mounted displays (e.g., Oculus Rift, Google Glass, Magic Leap, PlayStation VR, etc.) | 45% (5) | 55% (6) |

| SUS Questions | Burn Size (Mean ± Std) | MedCalc (Mean ± Std) | FluidResus (Mean ± Std) | Eschar (Mean ± Std) |
|---|---|---|---|---|
| I think that I would like to use this application frequently | 4.1 ± 1.0 | 4.3 ± 0.9 | 4.1 ± 0.8 | 4.2 ± 0.8 |
| I found this application unnecessarily complex | 2.1 ± 1.0 | 2.3 ± 1.0 | 2.0 ± 0.9 | 1.7 ± 0.6 |
| I thought this application was easy to use | 4.0 ± 0.9 | 3.8 ± 0.8 | 4.1 ± 0.7 | 4.5 ± 0.5 |
| I think that I would need assistance to be able to use this application | 2.3 ± 1.0 | 2.4 ± 1.3 | 2.0 ± 0.8 | 1.9 ± 0.7 |
| I found the various functions in this application were well integrated | 4.0 ± 1.0 | 4.0 ± 0.9 | 3.8 ± 1.0 | 3.9 ± 0.9 |
| I thought there was too much inconsistency in this application | 2.0 ± 0.9 | 2.0 ± 0.9 | 1.8 ± 0.6 | 1.8 ± 0.9 |
| I would imagine that most people would learn to use this application very quickly | 3.9 ± 0.5 | 3.8 ± 0.8 | 4.0 ± 0.6 | 4.2 ± 0.4 |
| I found this application very cumbersome/awkward to use | 2.4 ± 1.2 | 2.0 ± 0.8 | 2.0 ± 0.9 | 2.0 ± 1.1 |
| I felt very confident using this application | 4.3 ± 0.6 | 3.9 ± 0.9 | 4.0 ± 0.8 | 4.1 ± 0.7 |
| I needed to learn a lot of things before I could get going with this application | 1.7 ± 1.0 | 2.3 ± 1.1 | 2.2 ± 0.8 | 2.1 ± 0.7 |
| Total SUS Score | 74.5 ± 13.8 | 72.0 ± 17.9 | 75.0 ± 15.3 | 78.2 ± 12.4 |
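
The Total SUS Score row can be related to the ten item rows through the standard SUS scoring rule: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0 to 100 score. The sketch below is illustrative only. It assumes the standard rule was applied and that items appear in the table in the usual SUS order; the function name `sus_score` and the variable names are hypothetical. Because the rule is linear, applying it to the item means gives the mean total score, up to rounding of the published item means.

```python
from typing import Sequence


def sus_score(responses: Sequence[float]) -> float:
    """Return the 0-100 SUS score for ten item responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten item responses")
    total = 0.0
    for index, value in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 (positively worded): response - 1
            total += value - 1
        else:
            # Items 2, 4, 6, 8, 10 (negatively worded): 5 - response
            total += 5 - value
    return total * 2.5


# Item means from the Burn Size column, in table order (assumed SUS order).
burn_size_item_means = [4.1, 2.1, 4.0, 2.3, 4.0, 2.0, 3.9, 2.4, 4.3, 1.7]
print(round(sus_score(burn_size_item_means), 1))  # 74.5
```

Applied to the Burn Size item means, this reproduces the reported total of 74.5. The standard deviations of the totals cannot be recovered from the item-level values, since they depend on each participant's individual responses.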

| Training Question | Overall (Mean ± Std) |
|---|---|
| I was adequately oriented to the equipment used | 4.8 ± 0.4 |
| I had adequate time to practice with the HoloLens™ prior to beginning the simulation | 4.8 ± 0.6 |

| Application Questions (Mean ± Std) | Burn Size | MedCalc | Fluid Resus | Eschar |
|---|---|---|---|---|
| The software interface/layout was intuitive | 4.0 ± 1.2 | 4.0 ± 1.3 | 4.1 ± 1.0 | 4.2 ± 1.0 |
| The software was easy to navigate | 4.1 ± 0.9 | 4.1 ± 1.0 | 4.1 ± 1.1 | 4.4 ± 0.9 |

Both sets of questions used a 1–5 scale (1 = Do not agree at all, 2 = Slightly agree, 3 = Moderately agree, 4 = Quite agree, 5 = Extremely agree).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Veazey, S.; Caldwell, N.; Luellen, D.; Samosorn, A.; McGlasson, A.; Colston, P.; Fenrich, C.; Salinas, J.; Mike, J.; Rivera, J.; et al. The Development and Usability Assessment of an Augmented Reality Decision Support System to Address Burn Patient Management. BioMedInformatics 2024, 4, 709-720. https://doi.org/10.3390/biomedinformatics4010039
