1. Introduction

Physical interfaces continue to hold significant relevance in user interface design, primarily due to the inherent absence of distinguishable buttons in entirely digital touch displays. For interactions devoid of visual guidance, as required by individuals with visual impairments, or scenarios necessitating user attention, such as controlling machinery, assembly processes, or wet lab applications, the tactile guidance of haptic feedback assumes paramount importance. The ability to locate and manipulate the correct button for a specific action without visual reliance translates into safer, swifter, and more straightforward interactions.

One domain that uses virtual user interfaces prominently is virtual reality (VR), which offers diverse, immersive visual environments that enable a broad range of experiences: storytelling, games, virtual twins, training, and more. However, to create fully immersive experiences, realistic haptic feedback is important [1,2]. One approach to providing haptic feedback is through the use of passive haptic feedback in the form of haptic proxies [3,4,5,6]. These haptic proxies are made with varying degrees of realism [7], serving as faithful replicas of virtual objects or approximations thereof.

Creating a fully immersive VR experience with haptic feedback for every virtual object comes with multiple challenges: mapping virtual objects to their physical counterparts [8], finding and creating the appropriate haptic proxies [7], and the allocation of sufficient physical space to accommodate these proxies. The scientific literature has proposed various strategies for mapping virtual objects to their physical counterparts. One approach involves manipulating the user’s physical movements by altering the virtual arm or the virtual environment, the so-called visuo-haptic illusions [9,10]. A complementary approach integrates passive haptic proxies with actuation mechanisms to reposition them, thereby generating encountered-type haptic interactions. Illustrative implementations encompass the use of robotics [11,12], such as robotic graphics [13,14] or quadcopters [15,16].

Our research contributes to the identification and construction of suitable haptic proxies and the minimization of the spatial requirements associated with these proxies. Striving to offer an exact haptic proxy for every virtual object is impractical: one can easily and instantaneously create a wide variety of virtual objects, while creating their exact physical counterparts is costly and requires time. Consequently, our efforts are centered on the development of haptic proxies capable of representing multiple virtual objects. This approach is viable due to the limited sensitivity of the haptic system and the dominant role of visual cues in human perception [17,18,19].

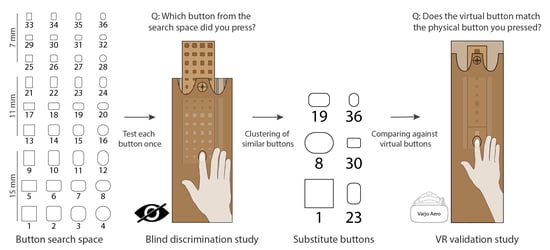

We introduce the concept of “substitute buttons”, comprising a set of six physical buttons designed to serve as versatile haptic proxies for a diverse range of virtual buttons. This contributes to the development of encountered-type haptic experiences and haptic devices capable of emulating an extended array of virtual buttons, all without necessitating a corresponding increase in the physical footprint that would be caused by providing haptic proxies for each virtual button.

This paper presents the following contributions:

A comprehensive, in-depth analysis of the characteristics of physical buttons that play a significant role in tactile perception and button recognition.

An exploratory study determining how users discriminate between different physical buttons in a blind test.

The identification of a set of six substitute buttons that can imitate all physical buttons in our search space and potentially even more during VR interaction.

A validation study that evaluates the substitute buttons against a wide range of buttons.

Our findings are directly applicable in robotic graphics, in which a haptic experience is generated for virtual objects. More generally, non-visual haptic interactions and scenarios where interaction with physical buttons is crucial can benefit from our work.

2. Related Work

In this section, we discuss how our research is situated in the state of the art and provide the appropriate context on possible use cases for our results.

2.1. Characteristics of Buttons

Oulasvirta et al. [20] investigated the mechanics of pressing buttons, focusing on kinematics, dynamics, and user performance. One of the key aspects was to learn how users compare their expected and obtained sensations of button activation and then bridge this gap. Performance and user experience will likely decline if the gap is too big. Liao et al. [21] continued this work, providing methods to capture, edit, and simulate force-displacement, vibrations, and velocity-dependence characteristics. Our focus is complementary: rather than the action of pressing buttons or the efficiency of button usage, we look at the sensory perception of the shape and size of a button. The aim is to contribute to optimizing the user experience by using a limited set of physical buttons to represent a wide range of virtual buttons.

Others have investigated the tactile characteristics of buttons. Moore [22] aimed to identify physical button shapes with the best tactile discriminability. The buttons used in the study had protruding shapes, such as triangles or circles. Participants had to blindly identify each button by comparing it to its visual representation. The resulting confusion matrix was used to cluster the most distinctive buttons. Austin and Sleight [23] conducted a similar study on the accuracy of tactile discrimination of letters, numerals, and geometric forms. Four conditions were presented to participants (solid or outline figures, with or without finger movement), and the results showed no difference in discriminability based on gender, handedness, or individual fingers. Our study design is similar, but our objectives differ, as our study is focused on finding the least distinctive physical buttons instead of maximizing discriminability.

2.2. Tactile Spatial Resolution of the Fingertip

The Grating Orientation Task (GOT) is an established, robust metric for gauging tactile spatial resolution and originates in the discipline of neuroscience [24]. This task, frequently referred to as the GOT [25], requires participants to discern the orientation of a textured surface, specifically discriminating between horizontally and vertically oriented grooves through fingertip contact. The grating’s width is systematically manipulated, gradually decreasing until participants can no longer distinguish the orientation with at least 75% accuracy. The human fingertip can differentiate orientations in gratings as narrow as 1 mm [26,27].

Tactile spatial resolution generally demonstrates an advantage in female participants, which can be attributed to differences in finger size. On average, women possess smaller fingers, which have been linked to a greater density of Merkel cells, resulting in a more finely detailed perception of tactile stimuli [28].

In contrast, physical buttons commonly integrated into user interfaces predominantly feature smooth surfaces, with their primary distinguishing feature residing in the button’s contour itself. Notably, these buttons lack the textured gratings or distinct orientations essential for recognition through touch, as exemplified by the grating orientation discrimination tasks. Consequently, it is reasonable to assert that the accuracy metrics reported in the aforementioned studies may not directly apply to our specific use case. Therefore, we contend that the tactile spatial resolution for buttons is likely to be coarser than the previously established threshold of one mm. Moreover, in light of the documented correlation between fingertip area and tactile spatial resolution, we intend to measure fingertip area to assess if this relationship holds true within the context of our study.

2.3. Passive Haptic Feedback

Passive haptic feedback is a type of haptic feedback that relies on physical objects with low complexity. As demonstrated by Shapira et al. [29], physical objects such as toys can be utilized as proxies for virtual objects in a VR setting. These proxies can be adapted to represent different virtual objects; e.g., a block can represent a house, binoculars, or a replica of the block. Exact replicas of virtual objects provide high levels of fidelity but are limited in their flexibility, as a single prop can only correspond to a single or limited set of virtual items, and changes during runtime are hard to handle.

The primary question is the extent to which the fidelity of the props influences VR realism. Simeone et al. [7] study the impact of mismatches on the user experience in their exploration of substitutional reality. Every physical object surrounding the user is paired, with some degree of discrepancy, with a visual counterpart. They conclude that a larger mismatch can increase the range of virtual environments that can substitute for the physical environment (the physical proxy can be used in more situations), but that it can also become an obstacle to the interaction and to the plausibility of the experience.

We identify a limited set of physical buttons that can serve as proxies for a much wider variety of virtual buttons. Our study aims to determine the precise boundaries within which a physical button can effectively act as a proxy for multiple buttons without affecting the user’s perception. In contrast to previous work, we focus on buttons instead of generalizing this for a wide range of physical form factors. This allows us to gain a deep insight into how we can obtain a consistent combination of a physical and visual button without affecting the user perception, thus optimizing the immersive sensation for these elementary and ubiquitous interactors.

2.4. Visual Illusion Techniques

The human perception system is dominated by the visual system [17,18,19], making it possible to alter the user’s perception, particularly in VR, where we have control over all the visual information that is presented to the user. This idea has generated a significant body of research, encompassing a range of solutions that address various aspects of human perception in VR. Feick et al. [30] showed, for instance, that it is possible to reuse a single physical slider for multiple virtual sliders through the use of visuo-haptic illusions such as linear translation and linear stretching. Zenner and Krüger [31] investigated the order of magnitude of hand redirection that can be applied without users noticing. Similarly, Feick et al. [32] studied the impact of different grasping types, movement trajectories, and object masses on the discrepancy introduced by visuo-haptic illusions. VR Grabbers [33], a controller similar to training chopsticks, combines the passive haptic feedback from closing the chopsticks with a visual representation of grabbing tools, and the pseudo-haptic technique was found to increase task performance. Our work similarly relies on these techniques, using physical buttons as haptic proxies for various virtual buttons.

2.5. Encountered-Type Haptics

Encountered-type haptics, or robotic graphics [13,34], are actuation devices that provide haptic feedback to the user on demand at the point of interaction. Gruenbaum et al. [14] created a robotic system representing a virtual control panel by providing the appropriate input device at the right time. A similar approach by Latham [35] focuses on airplane cockpits. Snake Charmer [11] extends a robotic arm to render haptic feedback, including textures, interactions, and temperature. In VRRobot [12], a robotic arm provides on-demand haptic sensations, while a motion platform allows the user to walk around in VR while staying stationary to remain within reach of the robotic arm. HapticPanel [36] uses a 2D motion platform instead of a robotic arm to provide a low-cost DIY approach.

Another approach is the use of quadcopters to implement encountered-type haptics. The main advantage of quadcopters is the theoretically unlimited interaction volume. Yamaguchi et al. [37] use a sheet of paper hung from the quadcopter to represent reaction force. Abdullah et al. [15] represent stiffness and weight with the quadcopter’s thrust for 1D interactions. Abtahi et al. [16] present a safe-to-touch quadcopter that enables three haptic interactions: dynamic positioning of passive haptics, texture mapping, and animating passive props.

In general, encountered-type haptics provide two types of passive haptic feedback as proxies: approximations or exact copies of the virtual objects. Our results expand the applications of encountered-type haptics by broadening the use cases for physical buttons as proxies.

3. Button Exploration Space

Our study begins by defining a representative set of commonly used physical buttons. Alexander et al. [38] characterize the physical aspects of over 1500 household push buttons and present a list of 20 unique properties. Button shapes vary, with 71.8% being rectangular, 15.9% circular, 9.5% elliptical, and 2.8% other. Surface area distribution correlates with the finger’s width, with rectangular buttons most common in the 10 mm to 18 mm range (keyboard buttons). In comparison, circular and elliptical buttons have the highest distribution in the 5 mm to 10 mm range.

MIL-STD-1472F [39] specifies the US Department of Defense design criteria standard for human engineering used to design and develop military systems. The standard states that push buttons operated by a bare fingertip should have a size between 10 and 25 mm and a minimum size of 19 mm for a gloved fingertip. Buttons sold on a popular online store for electronic components (Newark, https://www.newark.com/c/switches-relays/switches, accessed on 15 June 2022) come in similar sizes. These buttons would be used in practical user interfaces and are potentially useful in encountered-type haptics applications. Indexing the (push) buttons sold in this store yields a total of 6319 different buttons, with a minimum size of 2 mm and a maximum size of 38 mm. The most common sizes lie between 6 and 22 mm.

Based on these sources, we identified that the most common physical buttons range between 5 and 25 mm in height and width, and the most common shapes are square, rectangular, and round buttons. Therefore, we focus on these three main characteristics of a physical button: size, shape, and roundness.

The size impacts how much of the button fits underneath the fingertip of the user. The original search space consists of sizes between 5 and 25 mm. We reduced this to three sizes: 7, 11, and 15 mm. Size 15 is the maximum size of buttons because it is bigger than the surface area of the finger that comes into contact with the physical button. The average length of a male fingertip is 26 mm and of a female fingertip 24 mm [40], but only about 70% of the complete fingertip comes into contact with the button. We took 7 mm as the smallest size, as it allows for slightly smaller rectangular buttons. The selection of 11 mm as an intermediate size balances the other two dimensions, ensuring an array of sizes for evaluation and allowing for overlapping sizes when factoring in button shapes.

The shape is a big factor in the physical appearance of the button. We defined three shapes as the most common ones found in buttons: square, rectangular horizontal, and rectangular vertical. The rectangular horizontal and vertical buttons are identical but rotated 90 degrees. The rectangular buttons have two different lengths of sides. The largest length is the current size of the button. The smallest length of the side is the same size as one button size smaller. For example, the size 11 rectangular button is 11 by 7 mm. For the 7 mm button, the smallest side is 5 mm, which is the smallest size in our search space.

The roundness allows us to change the shape of buttons based on the roundness of the corners, thus enabling us to have slightly elliptical buttons as well. For this, we define four roundness factors: 0%, 33%, 66%, and 100%. Each rounding factor is applied uniformly to every corner of the button. We chose these four values as a step-based range between completely square and rounded corners. The roundness is calculated as half of the smallest size of the button multiplied by the roundness percentage. For example, the rectangular button of 15 by 11 mm with 66% roundness has a rounding of 3.66 mm (=(11/2) × 66%).

Based on these characteristics, we use three size, three shape, and four roundness options, resulting in 36 (3 × 3 × 4) physical buttons to test. These buttons are arranged in a grid of four columns by nine rows (Figure 1). The columns represent the roundness, starting in the first column with 0%. The rows represent the shape, starting with rectangular vertical, then rectangular horizontal, and square; this sequence is repeated three times, once for each size group. The sizes are organized in groups of three rows: first 7 mm, then 11 mm, and lastly 15 mm.
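The construction of the search space can be sketched in a few lines of Python. This is a minimal illustration rather than the procedure used to produce the printed buttons: the function and constant names are hypothetical, "rectangular horizontal" is assumed to mean that the long side is the width, and 33% and 66% are assumed to denote one third and two thirds (which is consistent with the roughly 3.66 mm rounding quoted for the 15 by 11 mm button).

```python
from itertools import product

SIZES_MM = [7, 11, 15]
# The short side of a rectangular button is one size step smaller (5 mm for size 7).
NEXT_SMALLER_MM = {15: 11, 11: 7, 7: 5}
SHAPES = ["square", "rectangular horizontal", "rectangular vertical"]
# Assumption: 33% and 66% stand for one third and two thirds.
ROUNDNESS = [0.0, 1 / 3, 2 / 3, 1.0]


def button_dimensions(size_mm, shape):
    """Return (width, height) in mm for a given size and shape."""
    if shape == "square":
        return size_mm, size_mm
    short_side = NEXT_SMALLER_MM[size_mm]
    if shape == "rectangular horizontal":
        return size_mm, short_side  # assumed: long side is horizontal
    return short_side, size_mm


def corner_radius(width_mm, height_mm, roundness):
    """Half of the smallest side, scaled by the roundness factor."""
    return min(width_mm, height_mm) / 2 * roundness


def button_set():
    """Enumerate every size, shape, and roundness combination."""
    buttons = []
    for size, shape, roundness in product(SIZES_MM, SHAPES, ROUNDNESS):
        width, height = button_dimensions(size, shape)
        buttons.append({
            "size": size,
            "shape": shape,
            "roundness": roundness,
            "width": width,
            "height": height,
            "radius": corner_radius(width, height, roundness),
        })
    return buttons
```

Calling `button_set()` yields 36 entries. The enumeration order here is arbitrary and is not meant to reproduce the button numbering of Figure 1.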

4. Button Discrimination Test

The button discrimination test aims to identify the tactile features of buttons that can be accurately identified without visual cues. Participants receive sensory input exclusively through touch, without visual information about the button being pressed. The less a participant can differentiate between physical buttons, the more feasible it becomes to assign multiple virtual buttons to a single physical button. For this purpose, we perform a blind test using the previously defined button set, in which the participant can only feel the button without seeing it. Our study design was inspired by the work of Austin and Sleight [23] and Moore [22].

4.1. Study Design

This section describes the lab study design and the analysis of the results.

4.1.1. Apparatus

The central element of the experimental setup is a custom-made test platform (Figure 2a), housing all 36 (3D-printed) buttons arranged in a grid (Figure 1). The platform contains a button holder, as depicted in Figure 2a. Participants place their hand on the test platform and position their index finger above the designated testing aperture (as depicted in Figure 2b). The physical button being evaluated is placed beneath this aperture by the experimenter.

The holder incorporates all physical buttons and corresponding cutouts for each button. The position indicator on the platform and the numbered physical cutouts on the button holder facilitate easy placement of the correct button. The button tester has seven internal guide channels aligning with the physical guides on the button holder. The correct button placement is achieved by aligning the button holder with the correct column and positioning it to match the designated button in the correct row.

The study apparatus is fully enclosed in a cardboard box to eliminate any visual cues of the physical buttons (Figure 3a). An opening on top enables the experimenter to observe the test platform to ensure the accurate placement of physical buttons and monitor the participant’s finger movement (Figure 3b). A full-scale printout of all physical buttons is positioned on top of the box. The experimenter has a copy of the printout for reference during button placement.

4.1.2. Participants

A total of 30 individuals participated in the study, with an equal gender distribution. The sample was selected to provide a diverse range of finger sizes, as prior research has established a correlation between fingertip area and perceived tactile detail [28]. Participants needed to have healthy hands without factors affecting fingertip haptic sensitivity, such as calluses. We did not filter the participants on their occupation as we believe button pressing is ubiquitous. The average age of participants was 34, ranging from 21 to 62. Two participants were left-handed, and all others were right-handed. Every participant used their dominant hand to interact with the buttons, which did not affect the results. When pressing a physical button, the total area of the fingertip does not come into contact with the top of the button but only with an ellipse in the middle of the fingertip. Therefore, we measured the width and height of this contact area and calculated its surface area. The measurement is based on a picture of the participant’s index finger taken with our custom measurement apparatus (see Figure 4), which consists of a webcam and, above it, a Plexiglas plate with reference lines at a known spacing (2 mm) used in the surface area calculation. The average width was 13.0 mm, with a minimum of 11.1 and a maximum of 15.4 mm. The average height was 17.3 mm, with a minimum of 14.8 and a maximum of 19.9 mm. The average area was 177.7 mm², with a minimum of 129.5 and a maximum of 240.6 mm².
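As a rough check on these figures, the contact area can be approximated from the measured width and height. The sketch below assumes the contact patch is a true ellipse and uses the standard ellipse area formula; the text does not state the exact calculation used, and the function name is hypothetical.

```python
import math


def contact_area_mm2(width_mm, height_mm):
    """Area of an ellipse whose axes are the measured contact width and height."""
    return math.pi * (width_mm / 2) * (height_mm / 2)


# Ellipse built from the reported average width and height.
area_of_averages = contact_area_mm2(13.0, 17.3)
```

Under this assumption, the average width and height give an area of about 176.6 mm², close to the reported average of 177.7 mm². The two values need not coincide, since the mean of per-participant areas generally differs from the area computed from mean dimensions.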

4.2. Study Procedure

The study procedure consists of a training phase and the main study phase. The training begins with instructing the participant on the right button pressing technique and hand placement. Each participant must press every button consistently and uniformly. Participants pressed each button once, maintaining finger contact for a maximum of five seconds without moving to assess its characteristics. The opening on top of the box is utilized to monitor the participant’s compliance, while a timer indicates the end of the five-second duration.

The second part of the training explains the organization of the reference sheet with all physical buttons (the same as annotated in Figure 1) and the study procedure. The training concludes with a practice round of three buttons. These are buttons with 66% rounding, one of each size, and with a random selection of shapes, yet consistent across the three buttons for each participant. For example, a participant might test button 35, button 23, and button 11. For every button, the participant is asked to verbally state the number of the physical button they believed they had pressed. Participants were only allowed to answer one number per physical button and thus had to make a choice.

During the study, participants were tasked with pressing all 36 physical buttons in random order. They were encouraged to think out loud and explain their thought process. Some reported considering multiple options before deciding on a specific button. After the study, a short informal interview was conducted. Four questions were asked: how well they thought they did in recognizing the roundness, shape, size, and number of correct answers. Participants provided scores from 1 to 10 for each question and their reasoning. We used this information to gain insight into the participants’ decision-making process. Finally, a picture of each participant’s index fingertip was taken.

Throughout the study, participants did not receive feedback on their button selections or accuracy. The participants could not reliably determine the physical button they interacted with and thus could not determine the next physical button based on the movement of the button holder in the study apparatus.

4.3. Study Results

The first outcome of our study was the accuracy with which participants identified the physical buttons they pressed, as measured by the number of buttons correctly answered. It ranges from 3 to 12 correct answers, with an average score of 7.26 and a standard deviation of 2.56. The high variability, with the best-performing participant correctly identifying only a third of the buttons, supports our hypothesis that touch-based button recognition is challenging.

Further analysis of participants’ answers, the think-aloud data, and post-study interviews revealed that accurately recognizing the physical buttons was challenging due to the relatively small differences between the buttons and the difficulty in estimating the correct level of roundness. Participants tended to guess buttons with 33% and 66% roundness from the middle columns, as shown in Figure 5. This suggests that participants guessed values in the middle in cases of uncertainty.

No statistically significant correlation was identified between participants’ fingertip area (Pearson’s r(28) = −0.03, p < 0.44), gender (Pearson’s r(28) = −0.29, p < 0.06), or age (Pearson’s r(28) = −0.02, p < 0.45) and the number of correct answers.
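For readers who want to reproduce this kind of analysis, the following sketch computes Pearson’s r and the corresponding t statistic with n − 2 degrees of freedom (28 for 30 participants). It is a generic illustration with hypothetical function names and does not use the study data; converting t to a p-value additionally requires the Student t distribution, which is omitted here.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    df = n - 2
    return r * math.sqrt(df / (1 - r * r))
```

For example, `t_statistic(-0.29, 30)` is about −1.60, the t value that corresponds to the gender correlation reported above.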

5. Determining the Substitute Buttons

We employ a comprehensive analytical procedure to establish a set of substitute buttons. First, we portray the physical button and the corresponding responses from participants through a confusion matrix. This matrix offers valuable insights into the patterns and tendencies observed in the participant responses. Subsequently, we apply hierarchical clustering techniques, utilizing the confusion matrix as the fundamental data source. This clustering methodology draws inspiration from the method outlined in Moore’s work [22].

5.1. Confusion Matrix Exploration

The confusion matrix is a 36 by 36 matrix in which each row represents a physical button and each column represents an answer given for that button. Each cell contains the number of responses for that specific combination of physical and answer buttons (e.g., for physical button 5, button 6 was chosen nine times). The complete confusion matrix is presented in Figure 6, with a visualization representing the button size, shape, and roundness of 0 and 100% next to the columns and rows. The three highlighted squares correspond to the three size groups, with sizes 15, 11, and 7, respectively. Each group of four rows or columns represents a different shape, and each individual row represents a specific rounding value. The confusion matrix offers valuable visual insights into the data and the recognizability of the buttons.
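The way such a matrix is assembled from the raw answers can be sketched as follows. The input format (a list of `(physical, answer)` pairs with 1-based button numbers) and the function names are assumptions made for illustration only.

```python
def build_confusion_matrix(responses, n_buttons=36):
    """Count answers per physical button.

    responses: iterable of (physical, answer) pairs, numbered from 1.
    Returns a list of rows; row i holds the answer counts for physical button i + 1.
    """
    matrix = [[0] * n_buttons for _ in range(n_buttons)]
    for physical, answer in responses:
        matrix[physical - 1][answer - 1] += 1
    return matrix


def correct_answers(matrix):
    """Correct identifications lie on the diagonal of the matrix."""
    return sum(matrix[i][i] for i in range(len(matrix)))
```

With 30 participants each answering once per button, every row of the resulting matrix sums to 30.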

Clustering is observed around the distinct shape groups in a 4 by 4 grid grouped by roundness. The cluster formation is evident in button groups 17–20 and answers 17–20, representing the four rectangular horizontal buttons with roundness values of 0, 33, 66, and 100%. These results suggest that the shape is highly distinguishable, particularly for rectangular horizontal buttons, while the roundness is more challenging to discern. A similar pattern is observed in button groups 21–24, which consist of four rectangular vertical buttons with size 11. Furthermore, an analysis of each size group indicates that the rectangular horizontal button is the most recognizable among all shapes for every size.

The distribution of answers reveals the impact of size on recognition accuracy. For buttons with size 15, most answers are correct regarding size, while some are answers for the rectangular horizontal buttons with size 11 (buttons 17–19). This trend is also evident for buttons with size 7, where most answers outside their size group are for buttons 17–19. This can be attributed to the fact that buttons with size 15 share the same width, and buttons with size 7 share the same height. For buttons with size 11, the distribution of answers is more dispersed among sizes, with most answers being for size 11, followed by size 15. This is especially pronounced for square buttons with size 11 (buttons 13–15), where many answers are given to the rectangular horizontal buttons with size 15 (buttons 5–7). This can be explained by the fact that buttons 13–15 fit inside buttons 5–7, with the only difference being the length of the horizontal sides. Hence, when participants are uncertain about the size or find it difficult to estimate the correct size based on the left and right edges, they tend to choose buttons 5–7.

An analysis of the columns corresponding to buttons with 100% roundness reveals a low frequency of correct answers. One notable exception is button 16, which is readily recognized as a round button with either 66% (button 15) or 100% roundness. This can be attributed to the size of 11 mm, which falls within the average fingertip width (13.0 mm) and height (17.3 mm), making it easily distinguishable as round. Button 24 represents another exceptional case with no accurate answers. The most frequently guessed answer was button 23, suggesting participants underestimated the roundness. The frequency of correct answers is higher for buttons with size 7 and 100% roundness. This can be attributed to the small size of the buttons, which, in combination with the rounding, makes it difficult to interpret the shape and roundness accurately, but participants do interpret some of these buttons as having 100% roundness.

5.2. Clustering Approach

To determine the substitute buttons, we apply a hierarchical clustering approach. The clusters obtained from the clustering analysis are then utilized to identify the substitute buttons that can effectively represent the entire set of 36 buttons.

To perform the clustering analysis, we calculate the Euclidean distances between the physical buttons (represented as rows) based on the values in the confusion matrix. For instance, the rows corresponding to physical buttons 5 and 6 have similar values in each column, resulting in a small distance between them (their perceived similarity was high). Then, sklearn’s Single Linkage Clustering algorithm [41,42] was applied. This algorithm starts with each button as an independent cluster and progressively merges the two closest clusters until only a single cluster remains. The resultant hierarchical structure is visualized as a dendrogram, as illustrated in Figure 7. To extract meaningful clusters, we applied a threshold distance of 9.3. This threshold identified six distinct representative clusters, with an additional four buttons necessitating manual assignment to one of the clusters.
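The effect of cutting a single-linkage hierarchy at a fixed distance can be illustrated without any clustering library. The sketch below is not the sklearn-based pipeline described above; it relies on the known equivalence that the clusters obtained by cutting a single-linkage dendrogram at a threshold are the connected components of the graph linking every pair of rows whose distance does not exceed that threshold. The function name and the use of "less than or equal" at the cut are assumptions.

```python
import math


def single_linkage_clusters(matrix, threshold):
    """Group confusion-matrix rows whose chained Euclidean distances stay within threshold.

    Returns a sorted list of clusters, each a list of 0-based row indices.
    Rows that are not within the threshold of any other row form singleton clusters.
    """
    n = len(matrix)
    parent = list(range(n))  # union-find forest over the row indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(matrix[i], matrix[j]) <= threshold:
                parent[find(i)] = find(j)  # merge the two components

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())
```

Applied to a 36 by 36 confusion matrix with a threshold such as 9.3, the singleton clusters returned by this function correspond to buttons that would need manual assignment.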

We aimed for a maximum of nine clusters, resulting in one cluster for every shape–size combination, but a lower number of clusters is more desirable. Therefore, the threshold distance of 9.3 is picked, as it generates six distinct clusters. This results in a six-fold reduction in the buttons in the original search space.

The separate buttons, 12, 15, 16, and 20 (highlighted in blue in the dendrogram), were assigned to clusters through manual inspection of the confusion matrix and their distances to the existing clusters:

Button 20

![Mti 08 00015 i007]()

. The nearest neighbor is button 19

![Mti 08 00015 i022]()

of cluster 3. Further examination of the confusion matrix reveals that button 19 is the most frequently classified button for button 20. The size and shape of button 20 also resemble the buttons in cluster 3.

Button 12

![Mti 08 00015 i014]()

. The nearest neighbor is button 9

![Mti 08 00015 i008]()

of cluster 1. Further examination of the confusion matrix shows that the most frequently classified buttons for button 12 belong to cluster 1.

Button 15

![Mti 08 00015 i010]()

. The nearest neighbor is 14

![Mti 08 00015 i010]()

of cluster 2. The confusion matrix also shows similarities between the rows of button 14 and button 15. Button 6

![Mti 08 00015 i005]()

, also belonging to cluster 2, was the second most commonly answered button for button 15.

Button 16

![Mti 08 00015 i013]()

. The nearest neighbor is button 13

![Mti 08 00015 i009]()

of cluster 2. The confusion matrix also shows that the most frequently answered button for button 16 is button 15, which is part of cluster 2 as well.
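The two checks used for the manual assignments above (nearest neighbor by row distance, and most frequent answer in the confusion matrix) can be sketched as follows. The helper names, cluster labels, and matrix values are hypothetical, and the authors performed these assignments by manual inspection rather than with code.

```python
from math import dist


def nearest_neighbor(rows, button, candidates):
    """Return the candidate whose confusion-matrix row is closest to `button`'s row."""
    return min(candidates, key=lambda c: dist(rows[button], rows[c]))


def most_confused_with(rows, button):
    """Return the most frequent answer for `button`, ignoring correct answers."""
    answers = [(count, col) for col, count in enumerate(rows[button]) if col != button]
    return max(answers)[1]


# Hypothetical confusion matrix and clusters; button 4 is not yet assigned.
confusion = [
    [10, 4, 1, 0, 0],
    [5, 9, 1, 0, 0],
    [0, 1, 9, 5, 0],
    [0, 0, 4, 10, 1],
    [0, 0, 1, 3, 11],
]
clusters = {"A": [0, 1], "B": [2, 3]}
loose = 4

clustered = [b for members in clusters.values() for b in members]
neighbor = nearest_neighbor(confusion, loose, clustered)
confused = most_confused_with(confusion, loose)
owner = next(name for name, members in clusters.items() if neighbor in members)
print(neighbor, confused, owner)
```

When both checks point to the same cluster, as in this toy example, the assignment is straightforward; when they disagree, additional criteria such as size and shape would be needed, as in the case of button 20.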

Finally, each cluster’s most central button was determined by selecting the button with the lowest total distance to the other buttons within the cluster, as this button best represents the other buttons in its cluster. The center buttons for clusters 1 to 6, which serve as a representative substitute for their cluster, were identified as buttons 1

![Mti 08 00015 i015]()

, 8

![Mti 08 00015 i016]()

, 19

![Mti 08 00015 i022]()

, 23

![Mti 08 00015 i002]()

, 30

![Mti 08 00015 i017]()

, and 36

![Mti 08 00015 i001]()

, respectively. The results are illustrated in

Figure 8, with each cluster represented by a different color and the center button highlighted.
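Selecting the most central button of a cluster amounts to picking its medoid. The sketch below illustrates this under assumed data: the function name `center_button`, the button labels, and the row values are hypothetical, and only the selection rule (lowest total distance to the other buttons within the cluster) comes from the text.

```python
from math import dist


def center_button(rows, members):
    """Return the member with the lowest summed distance to the other members."""

    def total_distance(button):
        return sum(dist(rows[button], rows[other]) for other in members if other != button)

    return min(members, key=total_distance)


# Hypothetical confusion-matrix rows for one cluster of three buttons.
rows = {
    "a": [9, 4, 2, 0],
    "b": [6, 6, 3, 0],
    "c": [3, 7, 5, 0],
}

center = center_button(rows, ["a", "b", "c"])
print(center)
```

Here button "b" lies between the other two in the row space, so it has the lowest total distance and would serve as the substitute for this cluster.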

6. Validation of the Substitute Buttons

Based on our first test, we found six candidate substitute buttons. With our second study, we validate how well these substitute buttons can represent virtual buttons inside a VR environment. In this study, the visual information does not necessarily match the physical information provided by the physical buttons, allowing us to also test whether the added visual information changes the impression of the tactile sensations of the physical buttons.

6.1. Study Design

Our study design closely resembles our first study: participants interact with a physical button through our test platform and answer questions about the physical button. The main difference in this study is that the participants are wearing a VR HMD (Varjo Aero

https://varjo.com/products/aero/, accessed on 10 August 2023) and are shown virtual buttons (

Figure 9b).

6.1.1. Apparatus

The apparatus is an updated version of the test platform from the button discrimination test. It houses nine 3D-printed buttons that move along only one axis, as shown in

Figure 9a. These buttons consist of the six substitute buttons and three control group buttons (12

![Mti 08 00015 i014]()

, 13

![Mti 08 00015 i009]()

, 25

![Mti 08 00015 i018]()

). For the control group buttons, we selected one button from each size group, ensuring maximal dissimilarity between each control button and its corresponding substitute button of equivalent size. Participants place their dominant hand on the platform and press the button through the aperture. The buttons are connected through an Arduino Uno with the VR environment made in Unity to signal the physical button press to the virtual environment. The experimenter is again responsible for positioning the correct button underneath the aperture and recording the response from the participant.

6.1.2. Participants

Sixteen right-handed participants (15 male, 1 female), aged 20 to 28, participated, with a mean age of 21.5. As our first study showed no correlation between finger size, age, or gender and button recognition, we did not explicitly balance our participants for finger size.

6.2. Study Procedure

The study consists of a training phase and the main study. During the training, participants are guided on hand placement and button pressing. Each participant may only press the button once and is not allowed to maintain contact with the button. This approach is chosen as it mimics real interaction with a button. After every button press, the participant is asked to rate the degree of similarity between the physical button they pressed and the virtual button they saw. We use an ordinal rating scale: 1 = Same, 2 = Unsure, 3 = Different. During the training, the participant will test the three control group buttons, which are always presented together with their matching virtual button.

During the study, the participants tested every substitute button with all the buttons inside its cluster and their neighboring clusters (96 tests). For example, substitute button 19

![Mti 08 00015 i022]()

is tested with all the buttons from cluster 3 (own cluster) and clusters 2 and 4 (neighboring clusters). The three control group buttons are also tested once, resulting in 99 buttons tested by each participant. Participants received no feedback during the study on their answers to minimize the learning effect.

6.3. Results

Our analysis of this study is based on the similarity of each substitute button compared to the virtual buttons of its own cluster and the neighboring clusters. The similarity is defined in the three ordinal categories: same, unsure, and different.

Figure 10 shows the distribution of the answers given over the virtual buttons. Every substitute button is represented in its own row with three sub-rows for the three possible answers. Every column represents a virtual button that is shown in the VR environment. The numbers represent the number of times a specific answer is given for a specific combination of substitute button and virtual button. The color gradient maps the median to a specific color: dark blue for a median < 2; light blue for a median = 2; grey for a median = 2.5; and no color for a median = 3.

The visualization provides insight into the distribution of the answers. For example, substitute button 19

![Mti 08 00015 i022]()

(green cluster) received mostly ‘same’ answers for the similarity inside its own cluster (buttons 17–20). That same substitute button received mostly ‘different’ answers for the similarity compared against cluster 4 (buttons 21–24). The reverse is true for substitute button 23

![Mti 08 00015 i002]()

(orange cluster). It received a lot of ‘same’ answers for its own cluster and a lot of ‘different’ answers for its neighboring cluster.

Looking at the answers for the control group buttons, we see that for all three buttons, not all participants found the buttons matching (see

Table 1). Button 13

![Mti 08 00015 i009]()

scored the worst out of the three, as only 11 participants indicated it was the same button. All buttons scored a median score of 1.

6.4. Analysis

We look at the median score for every combination to analyze how well every substitute button can represent the virtual buttons. The median scores indicate the distribution of answers given for each combination of physical and virtual buttons. Our goal is to find buttons that feel similar enough to the participants, so we are interested in the answers when participants indicated they were not sure if it was the same button and when they indicated it was the same button. Therefore, we put our detection threshold for feasible combinations at a median score of two.
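The median-based threshold can be illustrated with a short sketch. The response counts below are hypothetical and do not reproduce the study data; only the rating scale (1 = Same, 2 = Unsure, 3 = Different), the group size of 16 participants, and the threshold of two are taken from the text.

```python
from statistics import median

SAME, UNSURE, DIFFERENT = 1, 2, 3
THRESHOLD = 2  # combinations with a median at or below this value count as feasible


def median_score(same, unsure, different):
    """Median of the ordinal ratings, given the count of each answer."""
    ratings = [SAME] * same + [UNSURE] * unsure + [DIFFERENT] * different
    return median(ratings)


def is_feasible(same, unsure, different):
    return median_score(same, unsure, different) <= THRESHOLD


# Hypothetical answer counts for 16 participants.
good = median_score(same=11, unsure=3, different=2)
split = median_score(same=5, unsure=3, different=8)
print(good, split)
```

With an even number of participants, the median is the mean of the 8th and 9th sorted ratings, which is why a value of 2.5 can occur: it arises when exactly half of the ratings are 'different' and at least one of the remaining ratings is 'unsure'.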

Most substitute button/virtual button combinations within the same cluster scored a median score of two or lower. This shows that our clustering based on the button discrimination test results in appropriate substitute buttons for our original search space.

We also investigate the combinations of substitute and virtual buttons tested outside the corresponding substitute cluster. These show which substitute buttons are accepted as a match for virtual buttons outside their original cluster; they can also be seen in

Figure 10. Substitute button 1

![Mti 08 00015 i015]()

can represent all virtual buttons from 1 to 16 besides buttons 12 and 16. Substitute button 8

![Mti 08 00015 i016]()

can represent buttons 1, 2, 3, 10, and 20. Substitute button 19

![Mti 08 00015 i022]()

can represent buttons 5 and 7, buttons of the same shape but one size larger. Substitute button 23

![Mti 08 00015 i002]()

can represent buttons 34 and 35, buttons of the same shape but one size smaller. Substitute button 30

![Mti 08 00015 i019]()

can represent buttons 26 and 27.

These results show that some substitute buttons might also work for a broader range of virtual buttons outside of their original cluster. A clear example is substitute button 1

![Mti 08 00015 i015]()

, as it could represent all buttons of size 15 and even some of size 11. Similarly, for substitute button 36

![Mti 08 00015 i001]()

, our results show that it could also represent all buttons of size 7.

The three control group buttons highlight that correctly interpreting the tactile sensation of the physical button is difficult, as these button combinations are not recognized as the same by all the participants (see

Table 1). Some participants report these combinations as unsure or even different.

We found three button combinations (see

Table 2) for which the substitute button is not an appropriate match for the virtual button. Combined with

Figure 10, we can see the distribution of the answers for these combinations.

Button 12 ![Mti 08 00015 i014]()

. Half of the participants recognize a difference between substitute button 1

![Mti 08 00015 i015]()

and virtual button 12

![Mti 08 00015 i014]()

, while the other half believe they are the same or are unsure about the difference.

Button 16 ![Mti 08 00015 i013]()

. For this combination, 11 out of 16 participants recognized a difference between substitute button 8

![Mti 08 00015 i016]()

and button 16

![Mti 08 00015 i013]()

. However, if we look at the combination of substitute button 1

![Mti 08 00015 i009]()

and button 16

![Mti 08 00015 i013]()

, we can again see a 50–50 distribution.

Button 32 ![Mti 08 00015 i020]()

. The combination of substitute button 30

![Mti 08 00015 i019]()

and button 32

![Mti 08 00015 i020]()

is again a 50–50 distribution. Still, here we can see that substitute button 36

![Mti 08 00015 i001]()

and button 32

![Mti 08 00015 i020]()

received only seven ‘different’ ratings, indicating that the difference in recognition between these two combinations is small.

Because the control group did not score perfect recognition and some button combinations scored better outside their cluster, we propose the following solutions for the three outlier button combinations: 1–12, 8–16, 30–32. Virtual buttons 12

![Mti 08 00015 i014]()

and 32

![Mti 08 00015 i020]()

both have a median of 2.5 with their respective substitute buttons but might still work as substitute button/virtual button combinations, for three reasons: half of the participants found the match passable, the control group showed that recognizing the tactile sensations is difficult, and we tested in controlled circumstances, whereas the actual use case might differ. Substitute button 8 in combination with button 16

![Mti 08 00015 i013]()

is not a good match, but in combination with substitute button 1

![Mti 08 00015 i009]()

, it also scores a median of 2.5. Thus, for the same reasons as buttons 12

![Mti 08 00015 i014]()

and 32

![Mti 08 00015 i020]()

, we hypothesize that button 16 is a better fit with substitute button 1. Our findings show that there is only one button that could move to another cluster: button 16 has a stronger association with substitute button 1 than with substitute button 8

![Mti 08 00015 i016]()

and thus might move from cluster 2 to cluster 1.

7. Applications

To situate our research within the existing literature and haptic feedback devices, we first describe several use cases for our results. We then report an informal field test in which we applied our results in a virtual reality game context.

7.1. Applications within the Literature

Applying the results of our study, the six substitute buttons enable various haptic devices and applications to increase the number of virtual buttons they can represent without increasing the physical space required for them. Depending on the number of virtual buttons that need representing, at most six substitute buttons are required.

The first type of haptic user interface to which our results can be applied is encountered-type haptic devices such as Snake Charmer [

11], HapticPanel [

36], VRRobot [

12], and robotic graphics applications [

13,

14] in general.

A second type of haptic user interface is handheld haptic devices such as the Haptic Revolver [

43]. They show two example cases where the actuated wheel of the device is fitted with buttons: one set of active buttons and one set of passive buttons. Our six substitute buttons could fit on such a wheel, thus enabling haptic feedback for all virtual buttons in our search space using only one haptic device.

A third type of haptic user interface that benefits from our work comprises those that use haptic retargeting [

44]. The work of Matthews et al. [

45,

46] shows the possibilities of haptic retargeting for physical–virtual interfaces. We build on this work by providing guidelines for reusing a single physical button for multiple virtual buttons. Combined with haptic retargeting, it allows a physical button to represent multiple virtual buttons in different positions in the virtual interface.

Our work expands the literature on the use of passive haptic proxies by increasing the reusability of the haptic proxies for more virtual objects, specifically virtual buttons. In combination with the work of Feick et al. [

30] on reusing physical sliders and linear movement, and DynaKnob [

47], a shape-changing knob, we envision complete virtual interfaces represented by a compact set of haptic proxies for each interaction type, i.e., sliders, buttons, knobs.

Lastly, our research contributes to the literature on button interaction by examining how physical button characteristics are perceived with and without visual information. Our findings demonstrate that specific physical characteristics remain distinguishable even without visual information. Linking these specific buttons to different actions enables the creation of interfaces optimized for interaction without looking at them. This work extends the research of Oulasvirta et al. [

20] and Liao et al. [

21], who focused on the characteristics of internal button mechanisms while pressing them and simulating these internal mechanisms. Our work enables the reuse of the physical characteristics of the buttons. Combining these allows us to theorize a physical interface consisting of the six substitute buttons, each with a dynamic internal mechanism, creating a dynamic physical interface that can represent a broad range of physical buttons in physical and internal characteristics.

7.2. Informal Field Test

We executed an informal field test during a local popular science festival (Nerdland Festival—

https://www.nerdlandfestival.be/nl/herbeleef, accessed on 19 February 2024). This test aimed to evaluate the practical usability of our substitute buttons in a more realistic interactive scenario. In contrast to the previous controlled experiments, participants played a simple VR game that required them to interact with the substitute buttons while their focus was diverted from the pressed button (see

Figure 11a). The game objective was to synchronize pressing the physical button with the overlap of the virtual button, inspired by Guitar Hero’s (

https://en.wikipedia.org/wiki/Guitar_Hero, accessed on 11 August 2023) gameplay; see

Figure 11b.

Participants had the same six physical buttons (see

Figure 11a) in front of them, with the virtual button changing between rounds. Following each round, participants were queried regarding any distinctions they observed between the physical and virtual buttons and whether these distinctions influenced their overall enjoyment of the game.

Our informal field test evaluated each of the 6 substitute buttons in conjunction with 12 distinct virtual buttons. These virtual buttons included those within the same cluster and a subset of neighboring clusters, dependent on the cluster sizes. Each combination involving a substitute button was iterated three times in a round-robin fashion. After completing the full round, the physical buttons were transitioned to the next substitute button. Each participant played three game rounds, engaging with three unique combinations of substitute and virtual buttons. In total, 64 individuals took part; demographic information such as age and gender was not recorded to streamline the procedure, as our first study showed no correlation between age, finger size, or gender and button recognizability.

Given the test’s integration into the science festival, we opted for brevity, aiming to maintain a duration of a maximum of 5 min, including the explanation. The number of participants combined with the number of tests yielded an average of four responses per combination. Overall, the setup provided a positive experience, as most participants did not perceive the mismatch between physical and virtual buttons. Only when the virtual button lay outside the similar clusters was the mismatch noticed, and even then it was not detrimental to the game experience.

8. Discussion

We conducted two studies to determine the discriminability of buttons in relation to the shape, size, and roundness of the corners to find a subset of buttons that can represent all the buttons in the original search space. We tested in two different circumstances: without any visual information and with visual information provided through a VR headset. We started by testing without any visual information, as this forced the participants to judge the buttons based solely on their haptic characteristics. This results in baseline data created in the most challenging circumstances. Prior research has shown that visual information can influence haptic sensations [

17,

18,

19], making these results more generally applicable.

For the second study, we added the visual information of a virtual button through the VR headset, potentially influencing how participants judge the physical characteristics of the physical–virtual button combination. For the most part, our results from the first study still apply in this case. Substitute buttons 1

![Mti 08 00015 i015]()

and 36

![Mti 08 00015 i001]()

can represent a larger set of virtual buttons than their original cluster. However, there is a limit to the mismatch between the physical button and virtual buttons that the visual information can overcome. For example, substitute buttons 19

![Mti 08 00015 i022]()

and 23

![Mti 08 00015 i002]()

clearly show that tactile sensations dominate the users’ perception when the mismatch between physical and virtual information is too large. These buttons were easy to identify in the first study and remained so in the second study, even with conflicting visual information. For substitute buttons 19

![Mti 08 00015 i022]()

and 23

![Mti 08 00015 i002]()

, we theorize that this is because of the 11 mm size of these buttons. This size fits nicely inside the average fingertip area and thus provides a very clear tactile sensation of the shape of the button.

The participant’s finger was positioned flat on the testing apparatus (

Figure 2b). The horizontal positioning of the experimental setup was chosen to maximize the contact area between the fingertip and the button, facilitating more informed choices. In the case of a vertical orientation, this contact area is likely smaller, as it would be mostly the tip at the front of the finger, thus decreasing the “amount” of haptic sensing. With less haptic information available, differences between buttons should be harder, not easier, to detect. Therefore, we believe our results also apply to the vertical orientation. However, further testing is needed to validate these assumptions.

Our findings allow us to make assumptions about recognizing buttons beyond the original search space. The two most crucial factors to consider are the shape and size of the buttons. The size is the most easily recognizable attribute, with the shape following closely behind, albeit influenced by size. When searching for a substitute button for a virtual button not included in our study, it is advisable to first identify the closest size group and then seek the cluster that best fits the shape. In cases where the button is equally far from two size groups, such as a button of size 13 mm, it is recommended to round up, as most misinterpretations of size were overestimations.

Buttons larger than 15 mm can be effectively approximated using button 1

![Mti 08 00015 i015]()

from cluster 1. This is because button 1 is larger than the average fingertip area that makes contact with the button. Any variations in size would not be perceptible as long as the button interaction takes place in the center. On the other hand, buttons with a size smaller than 7 mm can be represented by button 36

![Mti 08 00015 i001]()

, which corresponds to cluster 6. Given their small size, these buttons are likely to pose difficulties in differentiation, and their usage during typical interactions is relatively rare.
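The size-related part of these guidelines can be expressed as a small lookup. This sketch assumes the three size groups of 7, 11, and 15 mm that are implied by the text; the function name `closest_size_group` is hypothetical, and choosing the shape cluster within a size group is left out because it depends on the cluster contents shown in Figure 8.

```python
SIZE_GROUPS_MM = (7, 11, 15)  # assumed size groups, inferred from the text


def closest_size_group(size_mm):
    """Return the nearest size group; ties are rounded up to the larger group."""
    # Sorting key: smallest distance first, then the larger group on a tie.
    return min(SIZE_GROUPS_MM, key=lambda group: (abs(group - size_mm), -group))


for size in (5, 9, 13, 20):
    print(size, "->", closest_size_group(size))
```

Sizes outside the tested range fall back to the nearest extreme group, which corresponds to the recommendation above: buttons larger than 15 mm map to the group of button 1, and buttons smaller than 7 mm map to the group of button 36.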

The degree of roundness in the button was found to be of secondary importance. Our participants in the first study encountered difficulties in accurately determining the roundness and instead made educated guesses. Hence, when seeking a substitute button, we recommend prioritizing a match in terms of shape and size and only considering the roundness as a factor when it lies in the extremes: close to 0% or 100% roundness. Our validation study confirms that the combinations with 0% and 100% roundness result in higher uncertainty about the button matching.

9. Conclusions

In this paper, we introduced the concept of

substitute buttons, a set of carefully selected physical buttons (

![Mti 08 00015 i015]()

,

![Mti 08 00015 i012]()

,

![Mti 08 00015 i007]()

,

![Mti 08 00015 i002]()

,

![Mti 08 00015 i019]()

,

![Mti 08 00015 i001]()

) that represent a wide range of virtual buttons. These substitute buttons were identified in a perception study utilizing a blind identification test. We used a hierarchical clustering approach based on the confusion matrix from the data we gathered to classify groups of physical buttons that a single substitute button can represent. We conducted a second study adding a visual channel using virtual reality (VR) to validate the effectiveness of these substitute buttons in various physical–virtual button combinations. Our findings highlight the challenges of identifying physical buttons solely based on tactile sensations and enable the reuse of substitute buttons to represent multiple VR buttons while preserving appropriate haptic feedback. This work opens new avenues for enhancing haptic feedback in VR applications by reducing the number of haptic proxies required. For instance, it can be applied to diverse haptic devices such as encountered-type haptics [

11,

36], hand-held haptics [

43], or interfaces developed through haptic retargeting [

45]. Doing so facilitates the development of a broader range of VR applications where appropriate haptic interaction with buttons is important.

Author Contributions

Conceptualization, B.v.D., G.A.R.R. and K.L.; methodology, B.v.D., G.A.R.R., D.V. and K.L.; software, B.v.D. and D.M.B.; validation, B.v.D.; formal analysis, B.v.D. and D.M.B.; investigation, B.v.D.; resources, K.L.; data curation, B.v.D. and D.M.B.; writing—original draft preparation, B.v.D., G.A.R.R. and D.V.; writing—review and editing, G.A.R.R., D.V. and K.L.; visualization, B.v.D. and D.M.B.; supervision, G.A.R.R. and K.L.; project administration, K.L.; funding acquisition, K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Flemish Government under the “Onderzoeksprogramma Artificiële Intelligentie (AI) Vlaanderen” programme, R-13509, and by the Special Research Fund (BOF) of Hasselt University: BOF20OWB23, BOF21DOC19.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (or Ethics Committee) of UHasselt (protocol code REC/SMEC/2022-2023/62, approved on 19 October 2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Acknowledgments

We thank our study participants for participating in our user studies.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Joyce, R.D.; Robinson, S. Passive Haptics to Enhance Virtual Reality Simulations. In Proceedings of the AIAA Modeling and Simulation Technologies Conference, Grapevine, TX, USA, 9–13 January 2017; p. 10.

- Insko, B.E. Passive Haptics Significantly Enhances Virtual Environments; The University of North Carolina at Chapel Hill: Chapel Hill, NC, USA, 2001.

- Muender, T.; Reinschluessel, A.V.; Drewes, S.; Wenig, D.; Döring, T.; Malaka, R. Does It Feel Real? Using Tangibles with Different Fidelities to Build and Explore Scenes in Virtual Reality. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019. CHI ’19. pp. 673:1–673:12.

- Arora, J.; Saini, A.; Mehra, N.; Jain, V.; Shrey, S.; Parnami, A. VirtualBricks: Exploring a Scalable, Modular Toolkit for Enabling Physical Manipulation in VR. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019. CHI ’19. pp. 56:1–56:12.

- Schulz, P.; Alexandrovsky, D.; Putze, F.; Malaka, R.; Schöning, J. The Role of Physical Props in VR Climbing Environments. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems—CHI ’19, Glasgow, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019; pp. 1–13.

- Cheng, L.P.; Roumen, T.; Rantzsch, H.; Köhler, S.; Schmidt, P.; Kovacs, R.; Jasper, J.; Kemper, J.; Baudisch, P. TurkDeck: Physical Virtual Reality Based on People. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 8–11 November 2015; ACM: New York, NY, USA, 2015. UIST ’15. pp. 417–426.

- Simeone, A.L.; Velloso, E.; Gellersen, H. Substitutional Reality: Using the Physical Environment to Design Virtual Reality Experiences. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; ACM: New York, NY, USA, 2015. CHI ’15. pp. 3307–3316.

- Han, D.T.; Suhail, M.; Ragan, E.D. Evaluating Remapped Physical Reach for Hand Interactions with Passive Haptics in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1467–1476.

- Steinicke, F.; Bruder, G.; Jerald, J.; Frenz, H.; Lappe, M. Analyses of Human Sensitivity to Redirected Walking. In Proceedings of the 2008 ACM Symposium on Virtual Reality Software and Technology, Bordeaux, France, 27–29 October 2008; ACM: New York, NY, USA, 2008. VRST ’08. pp. 149–156.

- Cheng, L.P.; Ofek, E.; Holz, C.; Benko, H.; Wilson, A.D. Sparse Haptic Proxy: Touch Feedback in Virtual Environments Using a General Passive Prop. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; ACM: New York, NY, USA, 2017. CHI ’17. pp. 3718–3728.

- Araujo, B.; Jota, R.; Perumal, V.; Yao, J.X.; Singh, K.; Wigdor, D. Snake Charmer: Physically Enabling Virtual Objects. In Proceedings of the TEI ’16: Tenth International Conference on Tangible, Embedded, and Embodied Interaction-TEI ’16, Eindhoven, The Netherlands, 14–17 February 2016; pp. 218–226.

- Vonach, E.; Gatterer, C.; Kaufmann, H. VRRobot: Robot actuated props in an infinite virtual environment. In Proceedings of the 2017 IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 74–83.

- McNeely, W. Robotic graphics: A new approach to force feedback for virtual reality. In Proceedings of the IEEE Virtual Reality Annual International Symposium, Seattle, WA, USA, 18–22 September 1993; pp. 336–341.

- Gruenbaum, P.E.; McNeely, W.A.; Sowizral, H.A.; Overman, T.L.; Knutson, B.W. Implementation of Dynamic Robotic Graphics For a Virtual Control Panel. Presence Teleoperators Virtual Environ. 1997, 6, 118–126.

- Abdullah, M.; Kim, M.; Hassan, W.; Kuroda, Y.; Jeon, S. HapticDrone: An encountered-type kinesthetic haptic interface with controllable force feedback: Example of stiffness and weight rendering. In Proceedings of the 2018 IEEE Haptics Symposium (HAPTICS), San Francisco, CA, USA, 25–28 March 2018; pp. 334–339.

- Abtahi, P.; Landry, B.; Yang, J.J.; Pavone, M.; Follmer, S.; Landay, J. Beyond The Force: Using Quadcopters to Appropriate Objects and the Environment for Haptics in Virtual Reality. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems-CHI ’19, Glasgow, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019; pp. 1–13.

- Ernst, M.O.; Banks, M.S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 2002, 415, 429.

- Rock, I.; Victor, J. Vision and Touch: An Experimentally Created Conflict between the Two Senses. Science 1964, 143, 594–596.

- Srinivasan, M.A. The impact of visual information on the haptic perception of stiffness in virtual environments. ASME Dyn. Syst. Control Div. 1996, 58, 555–559.

- Oulasvirta, A.; Kim, S.; Lee, B. Neuromechanics of a Button Press. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018. CHI ’18. pp. 508:1–508:13.

- Liao, Y.C.; Kim, S.; Lee, B.; Oulasvirta, A. Button Simulation and Design via FDVV Models. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; ACM: New York, NY, USA, 2020. CHI ’20. pp. 1–14.

- Moore, T. Tactile and kinaesthetic aspects of push-buttons. Appl. Ergon. 1974, 5, 66–71.

- Austin, T.R.; Sleight, R.B. Accuracy of tactual discrimination of letters, numerals, and geometric forms. J. Exp. Psychol. 1952, 43, 239.

- French, B.; Chiaro, N.V.D.; Holmes, N.P. Hand posture, but not vision of the hand, affects tactile spatial resolution in the grating orientation discrimination task. Exp. Brain Res. 2022, 240, 2715–2723.

- Craig, J.C. Grating orientation as a measure of tactile spatial acuity. Somatosens. Mot. Res. 1999, 16, 197–206. [Google Scholar] [CrossRef]

- Manning, H.; Tremblay, F. Age differences in tactile pattern recognition at the fingertip. Somatosens. Mot. Res. 2006, 23, 147–155. [Google Scholar] [CrossRef] [PubMed]

- Boven, R.W.V.; Johnson, K.O. The limit of tactile spatial resolution in humans: Grating orientation discrimination at the lip, tongue, and finger. Neurology 1994, 44, 2361. [Google Scholar] [CrossRef] [PubMed]

- Peters, R.M.; Hackeman, E.; Goldreich, D. Diminutive Digits Discern Delicate Details: Fingertip Size and the Sex Difference in Tactile Spatial Acuity. J. Neurosci. 2009, 29, 15756–15761. [Google Scholar] [CrossRef]

- Shapira, L.; Amores, J.; Benavides, X. TactileVR: Integrating Physical Toys into Learn and Play Virtual Reality Experiences. In Proceedings of the 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Merida, Mexico, 19–23 September 2016; pp. 100–106. [Google Scholar] [CrossRef]

- Feick, M.; Kleer, N.; Zenner, A.; Tang, A.; Krüger, A. Visuo-haptic Illusions for Linear Translation and Stretching using Physical Proxies in Virtual Reality. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; ACM: New York, NY, USA, 2021; pp. 1–13.

- Zenner, A.; Krüger, A. Detection Thresholds for Hand Redirection in Virtual Reality. In Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces, Osaka, Japan, 23–27 March 2019; pp. 47–55.

- Feick, M.; Regitz, K.P.; Tang, A.; Krüger, A. Designing Visuo-Haptic Illusions with Proxies in Virtual Reality: Exploration of Grasp, Movement Trajectory and Object Mass. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 30 April–5 May 2022; ACM: New York, NY, USA, 2022. CHI ’22. pp. 1–15.

- Yang, J.J.; Horii, H.; Thayer, A.; Ballagas, R. VR Grabbers: Ungrounded Haptic Retargeting for Precision Grabbing Tools. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology-UIST ’18, Berlin, Germany, 14–17 October 2018; pp. 889–899.

- Yokokohji, Y.; Hollis, R.L.; Kanade, T. WYSIWYF Display: A Visual/Haptic Interface to Virtual Environment. Presence Teleoper. Virtual Environ. 1999, 8, 412–434.

- Latham, R. Designing virtual reality systems: A case study of a system with human/robotic interaction. In Proceedings of the IEEE COMPCON 97. Digest of Papers, San Jose, CA, USA, 23–26 February 1997; pp. 302–307.

- van Deurzen, B.; Goorts, P.; De Weyer, T.; Vanacken, D.; Luyten, K. HapticPanel: An Open System to Render Haptic Interfaces in Virtual Reality for Manufacturing Industry. In Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology, Osaka, Japan, 8–10 December 2021; ACM: New York, NY, USA, 2021. VRST ’21. pp. 1–3.

- Yamaguchi, K.; Kato, G.; Kuroda, Y.; Kiyokawa, K.; Takemura, H. A Non-grounded and Encountered-type Haptic Display Using a Drone. In Proceedings of the 2016 Symposium on Spatial User Interaction-SUI ’16, Tokyo, Japan, 15–16 October 2016; ACM: New York, NY, USA, 2016; pp. 43–46.

- Alexander, J.; Hardy, J.; Wattam, S. Characterising the Physicality of Everyday Buttons. In Proceedings of the Ninth ACM International Conference on Interactive Tabletops and Surfaces, Dresden, Germany, 16–19 November 2014; ACM: New York, NY, USA, 2014. ITS ’14. pp. 205–208.

- MIL-STD-1472F; Design Criteria Standard, Human Engineering. US Government Printing Office: Washington, DC, USA, 1999.

- Long, C.; Finlay, A. The finger-tip unit—A new practical measure. Clin. Exp. Dermatol. 1991, 16, 444–447.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Sibson, R. SLINK: An optimally efficient algorithm for the single-link cluster method. Comput. J. 1973, 16, 30–34.

- Whitmire, E.; Benko, H.; Holz, C.; Ofek, E.; Sinclair, M. Haptic Revolver: Touch, Shear, Texture, and Shape Rendering on a Reconfigurable Virtual Reality Controller. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018. CHI ’18. pp. 1–12.

- Azmandian, M.; Hancock, M.; Benko, H.; Ofek, E.; Wilson, A.D. Haptic Retargeting: Dynamic Repurposing of Passive Haptics for Enhanced Virtual Reality Experiences. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems-CHI ’16, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016; pp. 1968–1979.

- Matthews, B.J.; Reichherzer, C.; Smith, R.T. Remapped Interfaces: Building Contextually Adaptive User Interfaces with Haptic Retargeting. In Proceedings of the Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; ACM: New York, NY, USA, 2023. CHI EA ’23. pp. 1–4.

- Matthews, B.J.; Thomas, B.H.; Von Itzstein, G.S.; Smith, R.T. Towards Applied Remapped Physical-Virtual Interfaces: Synchronization Methods for Resolving Control State Conflicts. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; ACM: New York, NY, USA, 2023. CHI ’23. pp. 1–18.

- van Oosterhout, A.; Hoggan, E.; Rasmussen, M.K.; Bruns, M. DynaKnob: Combining Haptic Force Feedback and Shape Change. In Proceedings of the 2019 on Designing Interactive Systems Conference, San Diego, CA, USA, 23–28 June 2019; ACM: New York, NY, USA, 2019. DIS ’19. pp. 963–974.

Figure 1.

The grid of physical buttons used in the paper, annotated with sizes, shapes, and roundness groupings.

Figure 2.

Our custom-made test platform to easily test all 36 (3D-printed) buttons. (a) The test platform. (b) A participant is pressing on the physical button.

Figure 3.

The complete study setup. The participant can be seen with their hand on our testing apparatus inside the cardboard box, which hides any visual information about the buttons. (a) The experimenter view. (b) The top view.

Figure 4.

Fingertip measurement setup. The reference lines have a known spacing (2 mm), which is used as a reference in the picture to calculate the surface area. (a) Side view, showing the finger placement. (b) Top view, showing reference lines on the Plexiglas.

Figure 5.

The guess percentage per button, with the corresponding button shown next to it for reference.

Figure 6.

The confusion matrix represents all answers given by all participants. Rows represent the physical buttons, and columns represent the possible answers. The color gradient visualizes the number of times an answer was picked: the darker the green, the higher the number of answers. The three marked squares represent the three size groups, 15, 11, and 7.

Figure 7.

The dendrogram shows the six clusters and the four separate buttons (in blue). The height of each button/cluster combination represents the distance between the two. For example, buttons 17 and 19 have the lowest distance between them and thus are the most similar to each other.

Figure 8.

The physical buttons grouped into the six distinct clusters, each shown in its corresponding color with its center button highlighted.

Figure 9.

The test platform and VR environment used in the substitute button validation test. (a) The test platform. (b) The VR environment.

Figure 10.

The distribution of answers over the three categories for each Substitute Button (the rows) in combination with the virtual button (the columns). The gradient represents the median score for a specific combination: dark blue represents a median < 2; light blue median = 2; grey median = 2.5.

Figure 11.

The test setup and simple VR game used during our field test. The test setup allowed for quickly changing the substitute buttons by removing the current buttons and picking a set of replacement buttons. The replacement set for substitute buttons 8 and 19 is shown. (a) The test setup. (b) The VR game.

Table 1.

The distribution of answers over the three control group buttons, with every physical and virtual button being the same.

Table 2.

The three combinations of substitute/virtual button for which the median score is higher than 2, indicating that the combination is most often recognized as different buttons.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

, button 23, and button 11. For every button, the participant was asked to verbally state the number of the physical button they believed they had pressed. Participants were only allowed to answer one number per physical button and thus had to make a choice.

, button 6 was chosen nine times). The complete confusion matrix is presented in Figure 6, with a visualization representing the button size, shape, and roundness of 0 and 100% next to the columns and rows. The three highlighted squares correspond to the three size groups, with sizes 15, 11, and 7, respectively. Each group of four rows or columns represents a different shape, and each individual row represents a specific rounding value. The confusion matrix offers valuable visual insights into the data and the recognizability of the buttons.

–20 and answers 17–20, representing the four rectangular horizontal buttons with roundness values of 0, 33, 66, and 100%. These results suggest that the shape is highly distinguishable, particularly for rectangular horizontal buttons, while the roundness is more challenging to discern. A similar pattern is observed in button groups 21