A Technical Survey on Delay Defects in Nanoscale Digital VLSI Circuits

Abstract

1. Introduction

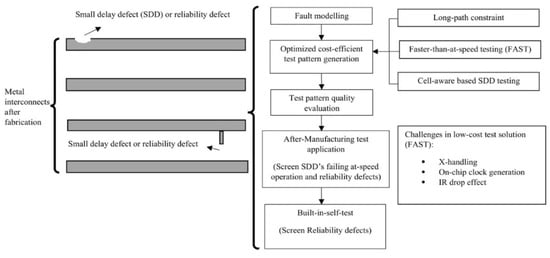

2. Fault Models for Delay Defects

2.1. Advantage of LOC over LOS

3. Small Delay Defects vs. Hidden Delay Defects

3.1. Small Delay Defects

3.2. Hidden Delay Defects

4. Effect of Process, Voltage and Temperature (PVT) in SDD and HDD Testing

5. Long Path Selection

6. Test Pattern Generation for Delay Faults

6.1. ATPG for SDD

6.2. Pattern Grading and Evaluation

6.3. Faster-than-at-Speed-Based ATPG for SDD and HDD

7. Challenges of FAST

7.1. X-Handling

7.2. On-Chip Clock Generation for FAST

On-Chip Clock Generation for Asynchronous Circuits

7.3. IR Drop Effect

8. Cell-Aware Based SDD Testing

9. Diagnosis of SDD and HDD

10. Test Quality Metrics for SDDs and HDDs

10.1. Delay Test Coverage Metric
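
As an illustration only: delay test coverage (DTC), as used in timing-aware ATPG work such as [28], is commonly computed by crediting each detected transition fault with the ratio between the delay of the longest path its test actually sensitizes and the static longest-path delay through the same fault site, then averaging over all faults, with undetected faults earning zero. The Python sketch below assumes that definition. The `FaultRecord` type, its field names, and the cap at 1.0 are hypothetical illustrative choices, not part of any tool's interface.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class FaultRecord:
    """Hypothetical per-fault timing data for one transition fault site (delays assumed > 0)."""
    static_longest_delay: float             # longest static path delay through the site (ns)
    tested_delay: Optional[float] = None    # longest path delay the test sensitizes; None if undetected


def delay_test_coverage(faults: Sequence[FaultRecord]) -> float:
    """Return DTC as a fraction in [0, 1] under the path-length-ratio definition assumed above."""
    if not faults:
        return 0.0
    credit = 0.0
    for f in faults:
        if f.tested_delay is None:
            continue  # undetected faults earn no credit
        # Cap at 1.0 in case the tested path is reported longer than the static estimate.
        credit += min(f.tested_delay / f.static_longest_delay, 1.0)
    return credit / len(faults)


faults = [
    FaultRecord(static_longest_delay=2.0, tested_delay=2.0),  # longest path through the site tested
    FaultRecord(static_longest_delay=2.0, tested_delay=1.0),  # detected, but through a short path
    FaultRecord(static_longest_delay=1.5),                    # undetected
    FaultRecord(static_longest_delay=1.0, tested_delay=0.8),
]
print(f"DTC = {delay_test_coverage(faults):.3f}")
```

With these four example faults, the credits are 1.0, 0.5, 0, and 0.8, so DTC is 2.3/4 = 0.575, whereas plain transition-fault coverage would report 3/4 = 0.75. The gap reflects detections made through short paths, which are the ones least likely to expose a small delay defect.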

10.2. Quadratic Small Delay Defect Coverage

10.3. Statistical Delay Quality Level (SDQL)
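
A minimal sketch of the SDQL idea of Sato et al. [16], under stated assumptions: each fault contributes the integral of a delay-defect-size density F(s) between its timing margin T_mgn (the slack of the longest functional path through the site, below which a defect is harmless) and its detection threshold T_det (the smallest defect size the applied test can catch, taken as infinite for an undetected fault). Summing over faults estimates the level of escaping small delay defects. The exponential density and its constants `A` and `B`, as well as the `FaultSlack` type, are hypothetical placeholders, not fitted process data or a tool API.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical defect-size density F(s) = A * exp(-B * s), with s in ns.
# A and B are illustrative placeholders, not fitted process data.
A = 1.0e-3
B = 2.0


def escaped_defects(t_mgn: float, t_det: float) -> float:
    """Integrate F(s) over [t_mgn, t_det): defects that fail in the field but escape the test."""
    if t_det <= t_mgn:
        return 0.0  # every defect large enough to cause a field failure is detected
    upper = 0.0 if math.isinf(t_det) else math.exp(-B * t_det)
    return (A / B) * (math.exp(-B * t_mgn) - upper)


@dataclass
class FaultSlack:
    """Hypothetical per-fault slacks (ns) taken from timing analysis."""
    t_mgn: float                   # slack of the longest functional path through the site
    t_det: Optional[float] = None  # smallest detectable defect size; None if undetected


def sdql(faults: Sequence[FaultSlack]) -> float:
    """SDQL = sum over faults f of the integral of F(s) from T_mgn,f to T_det,f."""
    return sum(
        escaped_defects(f.t_mgn, math.inf if f.t_det is None else f.t_det)
        for f in faults
    )


faults = [
    FaultSlack(t_mgn=0.2, t_det=0.2),  # longest path tested: no escape window
    FaultSlack(t_mgn=0.2, t_det=0.5),  # shorter path tested: window [0.2, 0.5)
    FaultSlack(t_mgn=0.3),             # undetected: window [0.3, inf)
]
print(f"SDQL = {sdql(faults):.3e}")
```

Lowering T_det for a fault, for example by testing a longer path through it or by applying a faster test clock, shrinks its integration window and therefore its contribution to SDQL. This illustrates why the faster-than-at-speed approaches of Section 6.3 can improve this metric.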

10.4. Small Delay Defect Coverage

10.5. Weighted Slack Percentage (WeSPer)

11. Commercially Available EDA Tools

11.1. Mentor Graphics

11.2. Synopsys

11.3. Cadence

12. Conclusions and Future Directions

1. Addressing the challenges of FAST-based methods, such as X-handling, on-chip clock generation, and IR drop, could lead to more cost-effective solutions for catching reliability defects, especially in BIST.

2. Developing more accurate test quality metrics for SDD and HDD testing that account for additional parameters, such as clock skew, latency, and uncertainty, is a possible future research direction.

3. The effects of process variations and IR drop can be included as parameters in the calculation of test quality metrics to evaluate test quality more precisely.

4. Researchers can explore cell-aware (CA) testing that targets SDDs and HDDs to further reduce DPPM.

5. Developing more efficient diagnostic algorithms to improve diagnostic resolution is a prospective research direction.

6. The complex manufacturing process, the multi-fin structure, and the small feature size make FinFETs more prone to defects. If a single fin is defective, the resulting high leakage current causes small delays in the circuit without causing it to fail outright [96]. Moreover, the in-field reliability risk posed by such defects is driven by temperature increase. This observation could give researchers a new perspective on addressing these challenges in FinFETs and on developing better CA-based fault models to reduce DPPM.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Moore, G.E. Cramming more components onto integrated circuits, Reprinted from Electronics, volume 38, number 8, April 19, 1965, pp.114 ff. IEEE Solid State Circuits Soc. Newsl. 2006, 11, 33–35.

- Brain, R. Interconnect scaling: Challenges and opportunities. In Proceedings of the 2016 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 3–7 December 2016; pp. 9.3.1–9.3.4.

- Piplani, S.; Visweswaran, G.S.; Kumar, A. Impact of crosstalk and process variation on capture power reduction for at-speed test. In Proceedings of the 2016 IEEE 34th VLSI Test Symposium (VTS), Las Vegas, NV, USA, 25–27 April 2016; pp. 1–6.

- Faisal, W.; Knotter, D.M.; Mud, A.; Kupera, F.G. Impact of particles in ultra pure water on random yield loss in IC production. Microelectron. Eng. 2009, 86, 140–144.

- Montanes, R.; de Gyvez, J.; Volf, P. Resistance characterization for weak open defects. IEEE Des. Test Comput. 2002, 19, 18–26.

- Zisser, W.H.; Ceric, H.; Weinbub, J.; Selberherr, S. Electromigration induced resistance increase in open TSVs. In Proceedings of the 2014 International Conference on Simulation of Semiconductor Processes and Devices (SISPAD), Yokohama, Japan, 23 October 2014; pp. 249–252.

- Ghaida, R.S.; Zarkesh-Ha, P. A Layout Sensitivity Model for Estimating Electromigration-Vulnerable Narrow Interconnects. J. Electron. Test. 2009, 25, 67–77.

- Villacorta, H.; Champac, V.; Gomez, R.; Hawkins, C.; Segura, J. Reliability Analysis of Small-Delay Defects Due to Via Narrowing in Signal Paths. IEEE Des. Test 2013, 30, 70–79.

- Waicukauski, J.A.; Lindbloom, E.; Rosen, B.K.; Iyengar, V.S. Transition Fault Simulation. IEEE Des. Test Comput. 1987, 4, 32–38.

- Pomeranz, I. A Metric for Identifying Detectable Path Delay Faults. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2012, 31, 1734–1742.

- Majhi, A.; Agrawal, V. Delay fault models and coverage. In Proceedings of the Eleventh International Conference on VLSI Design, Chennai, India, 4–7 January 1998; pp. 364–369.

- Savir, J.; Patil, S. On broad-side delay test. IEEE Trans. Very Large Scale Integr. VLSI Syst. 1994, 2, 368–372.

- Savir, J.; Patil, S. Scan-based transition test. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 1993, 12, 1232–1241.

- Pandey, K. A Critical Engineering Dissection of LOS and LOC At-speed Test Approaches. In Proceedings of the 2020 IEEE International Test Conference India, Bangalore, India, 12–14 July 2020; pp. 1–7.

- Ahmed, N.; Tehranipoor, M.; Jayaram, V. Timing-based delay test for screening small delay defects. In Proceedings of the 2006 43rd ACM/IEEE Design Automation Conference, San Francisco, CA, USA, 24–28 July 2006; pp. 320–325.

- Sato, Y.; Hamada, S.; Maeda, T.; Takatori, A.; Nozuyama, Y.; Kajihara, S. Invisible delay quality-SDQM model lights up what could not be seen. In Proceedings of the IEEE International Conference on Test, Austin, TX, USA, 8 November 2005; pp. 9–1210.

- Qian, X.; Singh, A.D. Distinguishing Resistive Small Delay Defects from Random Parameter Variations. In Proceedings of the 2010 19th IEEE Asian Test Symposium, Shanghai, China, 1–4 December 2010; pp. 325–330.

- Galarza-Medina, F.J.; García-Gervacio, J.L.; Champac, V.; Orailoglu, A. Small-delay defects detection under process variation using Inter-Path Correlation. In Proceedings of the 2012 IEEE 30th VLSI Test Symposium (VTS), Maui, HI, USA, 23–25 April 2012; pp. 127–132.

- Tayade, R.; Sundereswaran, S.; Abraham, J. Small-Delay Defect Detection in the Presence of Process Variations. In Proceedings of the 8th International Symposium on Quality Electronic Design (ISQED’07), Washington, DC, USA, 26–28 March 2007; pp. 711–716.

- Tayade, R.; Abraham, J. Small-delay defect detection in the presence of process variations. Microelectron. J. 2008, 39, 1093–1100, European Nano Systems (ENS) 2006.

- Peng, K.; Yilmaz, M.; Chakrabarty, K.; Tehranipoor, M. Crosstalk- and Process Variations-Aware High-Quality Tests for Small-Delay Defects. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2013, 21, 1129–1142.

- Soleimani, S.; Afzali-Kusha, A.; Forouzandeh, B. Temperature dependence of propagation delay characteristic in FinFET circuits. In Proceedings of the 2008 International Conference on Microelectronics, Sharjah, United Arab Emirates, 14–17 December 2008; pp. 276–279.

- Ahmed, N.; Tehranipoor, M. A Faster-Than-at-Speed Transition-Delay Test Method Considering IR-Drop Effects. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2009, 28, 1573–1582.

- Zolotov, V.; Xiong, J.; Fatemi, H.; Visweswariah, C. Statistical Path Selection for At-Speed Test. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2010, 29, 749–759.

- Liou, J.J.; Krstic, A.; Wang, L.C.; Cheng, K.T. False-path-aware statistical timing analysis and efficient path selection for delay testing and timing validation. In Proceedings of the 2002 Design Automation Conference (IEEE Cat. No.02CH37324), New Orleans, LA, USA, 10–14 June 2002; pp. 566–569.

- Amin, C.; Menezes, N.; Killpack, K.; Dartu, F.; Choudhury, U.; Hakim, N.; Ismail, Y. Statistical static timing analysis: How simple can we get? In Proceedings of the 42nd Design Automation Conference, Anaheim, CA, USA, 13–17 June 2005; pp. 652–657.

- Visweswariah, C.; Ravindran, K.; Kalafala, K.; Walker, S.; Narayan, S.; Beece, D.; Piaget, J.; Venkateswaran, N.; Hemmett, J. First-Order Incremental Block-Based Statistical Timing Analysis. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2006, 25, 2170–2180.

- Lin, X.; Tsai, K.H.; Wang, C.; Kassab, M.; Rajski, J.; Kobayashi, T.; Klingenberg, R.; Sato, Y.; Hamada, S.; Aikyo, T. Timing-Aware ATPG for High Quality At-speed Testing of Small Delay Defects. In Proceedings of the 2006 15th Asian Test Symposium, Fukuoka, Japan, 20–23 November 2006; pp. 139–146.

- Amyeen, M.; Venkataraman, S.; Ojha, A.; Lee, S. Evaluation of the quality of N-detect scan ATPG patterns on a processor. In Proceedings of the 2004 International Conference on Test, Charlotte, NC, USA, 26–28 October 2004; pp. 669–678.

- Pomeranz, I.; Reddy, S.M. On n-detection test sets and variable n-detection test sets for transition faults. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2000, 19, 372–383.

- Yilmaz, M.; Chakrabarty, K.; Tehranipoor, M. Test-Pattern Grading and Pattern Selection for Small-Delay Defects. In Proceedings of the 26th IEEE VLSI Test Symposium, San Diego, CA, USA, 27 April–1 May 2008.

- Chang, C.Y.; Liao, K.Y.; Hsu, S.C.; Li, J.M.; Rau, J.C. Compact Test Pattern Selection for Small Delay Defect. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2013, 32, 971–975.

- Yilmaz, M.; Chakrabarty, K.; Tehranipoor, M. Interconnect-Aware and Layout-Oriented Test-Pattern Selection for Small-Delay Defects. In Proceedings of the 2008 IEEE International Test Conference, Santa Clara, CA, USA, 28–30 October 2008; pp. 1–10.

- Peng, K.; Thibodeau, J.; Yilmaz, M.; Chakrabarty, K.; Tehranipoor, M. A novel hybrid method for SDD pattern grading and selection. In Proceedings of the 2010 28th VLSI Test Symposium (VTS), Santa Cruz, CA, USA, 19–22 April 2010; pp. 45–50.

- Peng, K.; Yilmaz, M.; Tehranipoor, M.; Chakrabarty, K. High-quality pattern selection for screening small-delay defects considering process variations and crosstalk. In Proceedings of the 2010 Design, Automation & Test in Europe Conference & Exhibition (DATE 2010), Dresden, Germany, 8–12 March 2010; pp. 1426–1431.

- Peng, K.; Yilmaz, M.; Chakrabarty, K.; Tehranipoor, M. A Noise-Aware Hybrid Method for SDD Pattern Grading and Selection. In Proceedings of the 2010 19th IEEE Asian Test Symposium, Shanghai, China, 1–4 December 2010; pp. 331–336.

- Bao, F.; Peng, K.; Tehranipoor, M.; Chakrabarty, K. Generation of Effective 1-Detect TDF Patterns for Detecting Small-Delay Defects. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2013, 32, 1583–1594.

- Foster, R. Why Consider Screening, Burn-In, and 100-Percent Testing for Commercial Devices? IEEE Trans. Manuf. Technol. 1976, 5, 52–58.

- Yoneda, T.; Hori, K.; Inoue, M.; Fujiwara, H. Faster-than-at-speed test for increased test quality and in-field reliability. In Proceedings of the 2011 IEEE International Test Conference, Anaheim, CA, USA, 20–22 September 2011; pp. 1–9.

- Kruseman, B.; Majhi, A.; Gronthoud, G.; Eichenberger, S. On hazard-free patterns for fine-delay fault testing. In Proceedings of the 2004 International Conference on Test, Charlotte, NC, USA, 26–28 October 2004; pp. 213–222.

- Fu, X.; Li, H.; Li, X. Testable Path Selection and Grouping for Faster Than At-Speed Testing. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2012, 20, 236–247.

- Kampmann, M.; Kochte, M.A.; Schneider, E.; Indlekofer, T.; Hellebrand, S.; Wunderlich, H.J. Optimized Selection of Frequencies for Faster-Than-at-Speed Test. In Proceedings of the 2015 IEEE 24th Asian Test Symposium (ATS), Mumbai, India, 22–25 November 2015; pp. 109–114.

- Hasib, O.T.; Savaria, Y.; Thibeault, C. Optimization of Small-Delay Defects Test Quality by Clock Speed Selection and Proper Masking Based on the Weighted Slack Percentage. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2020, 28, 764–776.

- Kampmann, M.; Hellebrand, S. X Marks the Spot: Scan-Flip-Flop Clustering for Faster-than-at-Speed Test. In Proceedings of the 2016 IEEE 25th Asian Test Symposium (ATS), Hiroshima, Japan, 21–24 November 2016; pp. 1–6.

- Naruse, M.; Pomeranz, I.; Reddy, S.; Kundu, S. On-chip compression of output responses with unknown values using LFSR reseeding. In Proceedings of the International Test Conference, Charlotte, NC, USA, 30 September–2 October 2003; Volume 1, pp. 1060–1068.

- Mitra, S.; Mitzenmacher, M.; Lumetta, S.; Patil, N. X-tolerant test response compaction. IEEE Des. Test Comput. 2005, 22, 566–574.

- Singh, A.; Han, C.; Qian, X. An output compression scheme for handling X-states from over-clocked delay tests. In Proceedings of the 2010 28th VLSI Test Symposium (VTS), Santa Cruz, CA, USA, 19–22 April 2010; pp. 57–62.

- Kampmann, M.; Kochte, M.A.; Liu, C.; Schneider, E.; Hellebrand, S.; Wunderlich, H.J. Built-In Test for Hidden Delay Faults. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2019, 38, 1956–1968.

- Urf Maaz, M.; Sprenger, A.; Hellebrand, S. A Hybrid Space Compactor for Adaptive X-Handling. In Proceedings of the 2019 IEEE International Test Conference (ITC), Washington, DC, USA, 9–15 November 2019; pp. 1–8.

- Tayade, R.; Abraham, J.A. On-chip Programmable Capture for Accurate Path Delay Test and Characterization. In Proceedings of the 2008 IEEE International Test Conference, Santa Clara, CA, USA, 28–30 October 2008; pp. 1–10.

- McLaurin, T.; Frederick, F. The testability features of the MCF5407 containing the 4th generation ColdFire(R) microprocessor core. In Proceedings of the International Test Conference 2000 (IEEE Cat. No.00CH37159), Atlantic City, NJ, USA, 3–5 October 2000; pp. 151–159.

- Lin, X.; Press, R.; Rajski, J.; Reuter, P.; Rinderknecht, T.; Swanson, B.; Tamarapalli, N. High-frequency, at-speed scan testing. IEEE Des. Test Comput. 2003, 20, 17–25.

- Jun, H.S.; Chung, S.; Kim, H. Programmable In-Situ Delay Fault Test Clock Generator. U.S. Patent No. 20060242474, 26 October 2006.

- Pei, S.; Li, H.; Li, X. An on-chip clock generation scheme for faster-than-at-speed delay testing. In Proceedings of the 2010 Design, Automation & Test in Europe Conference & Exhibition (DATE 2010), Dresden, Germany, 8–12 March 2010; pp. 1353–1356.

- Pei, S.; Geng, Y.; Li, H.; Liu, J.; Jin, S. Enhanced LCCG: A novel test clock generation scheme for faster-than-at-speed delay testing. In Proceedings of the 20th Asia and South Pacific Design Automation Conference, Chiba, Japan, 19–22 January 2015; pp. 514–519.

- Hasib, O.A.T.; Crépeau, D.; Awad, T.; Dulipovici, A.; Savaria, Y.; Thibeault, C. Exploiting built-in delay lines for applying launch-on-capture at-speed testing on self-timed circuits. In Proceedings of the 2018 IEEE 36th VLSI Test Symposium (VTS), San Francisco, CA, USA, 22–25 April 2018; pp. 1–6.

- Mei, K. Bridging and Stuck-At Faults. IEEE Trans. Comput. 1974, C-23, 720–727.

- Chess, B.; Freitas, A.; Ferguson, F.; Larrabee, T. Testing CMOS logic gates for: Realistic shorts. In Proceedings of the International Test Conference, Washington, DC, USA, 2–6 October 1994; pp. 395–402.

- Vierhaus, H.; Meyer, W.; Glaser, U. CMOS bridges and resistive transistor faults: IDDQ versus delay effects. In Proceedings of the IEEE International Test Conference (ITC), Baltimore, MD, USA, 17–21 October 1993; pp. 83–91.

- Wadsack, R.L. Fault modeling and logic simulation of CMOS and MOS integrated circuits. Bell Syst. Tech. J. 1978, 57, 1449–1474.

- Han, C.; Singh, A.D. Testing cross wire opens within complex gates. In Proceedings of the 2015 IEEE 33rd VLSI Test Symposium (VTS), Napa, CA, USA, 4 June 2015; pp. 1–6.

- Arai, M.; Suto, A.; Iwasaki, K.; Nakano, K.; Shintani, M.; Hatayama, K.; Aikyo, T. Small Delay Fault Model for Intra-Gate Resistive Open Defects. In Proceedings of the 2009 27th IEEE VLSI Test Symposium, Santa Cruz, CA, USA, 3–7 May 2009; pp. 27–32.

- Hao, H.; McCluskey, E. “Resistive Shorts” within CMOS Gates. In Proceedings of the 1991 International Test Conference, Nashville, TN, USA, 26–30 October 1991; p. 292.

- Hapke, F.; Krenz-Baath, R.; Glowatz, A.; Schloeffel, J.; Hashempour, H.; Eichenberger, S.; Hora, C.; Adolfsson, D. Defect-oriented cell-aware ATPG and fault simulation for industrial cell libraries and designs. In Proceedings of the 2009 International Test Conference, Austin, TX, USA, 1–6 November 2009; pp. 1–10.

- Hapke, F.; Schloeffel, J.; Redemund, W.; Glowatz, A.; Rajski, J.; Reese, M.; Rearick, J.; Rivers, J. Cell-aware analysis for small-delay effects and production test results from different fault models. In Proceedings of the 2011 IEEE International Test Conference, Anaheim, CA, USA, 20–22 September 2011; pp. 1–8.

- Cho, K.Y.; Mitra, S.; McCluskey, E. Gate exhaustive testing. In Proceedings of the IEEE International Conference on Test, Austin, TX, USA, 8 November 2005; pp. 7–777.

- Pomeranz, I.; Reddy, S. On n-detection test sets and variable n-detection test sets for transition faults. In Proceedings of the 17th IEEE VLSI Test Symposium (Cat. No.PR00146), San Diego, CA, USA, 26–30 April 1999; pp. 173–180.

- Huang, Y.H.; Lu, C.H.; Wu, T.W.; Nien, Y.T.; Chen, Y.Y.; Wu, M.; Lee, J.N.; Chao, M.C.T. Methodology of generating dual-cell-aware tests. In Proceedings of the 2017 IEEE 35th VLSI Test Symposium (VTS), Las Vegas, NV, USA, 9–12 April 2017; pp. 1–6.

- Hapke, F.; Redemund, W.; Glowatz, A.; Rajski, J.; Reese, M.; Hustava, M.; Keim, M.; Schloeffel, J.; Fast, A. Cell-Aware Test. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2014, 33, 1396–1409.

- Howell, W.; Hapke, F.; Brazil, E.; Venkataraman, S.; Datta, R.; Glowatz, A.; Redemund, W.; Schmerberg, J.; Fast, A.; Rajski, J. DPPM Reduction Methods and New Defect Oriented Test Methods Applied to Advanced FinFET Technologies. In Proceedings of the 2018 IEEE International Test Conference (ITC), Phoenix, AZ, USA, 29 October–1 November 2018; pp. 1–10.

- Nien, Y.T.; Wu, K.C.; Lee, D.Z.; Chen, Y.Y.; Chen, P.L.; Chern, M.; Lee, J.N.; Kao, S.Y.; Chao, M.C.T. Methodology of Generating Timing-Slack-Based Cell-Aware Tests. In Proceedings of the 2019 IEEE International Test Conference (ITC), Washington, DC, USA, 9–15 November 2019; pp. 1–10.

- Nien, Y.T.; Wu, K.C.; Lee, D.Z.; Chen, Y.Y.; Chen, P.L.; Chern, M.; Lee, J.N.; Kao, S.Y.; Chao, M.C.T. Methodology of Generating Timing-Slack-Based Cell-Aware Tests. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2021, 1.

- Venkataraman, S.; Drummonds, S. POIROT: A logic fault diagnosis tool and its applications. In Proceedings of the International Test Conference 2000 (IEEE Cat. No.00CH37159), Atlantic City, NJ, USA, 3–5 October 2000; pp. 253–262.

- Wang, Z.; Marek-Sadowska, M.; Tsai, K.H.; Rajski, J. Delay-fault diagnosis using timing information. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2005, 24, 1315–1325.

- Aikyo, T.; Takahashi, H.; Higami, Y.; Ootsu, J.; Ono, K.; Takamatsu, Y. Timing-Aware Diagnosis for Small Delay Defects. In Proceedings of the 22nd IEEE International Symposium on Defect and Fault-Tolerance in VLSI Systems (DFT 2007), Rome, Italy, 26–28 September 2007; pp. 223–234.

- Mehta, V.J.; Marek-Sadowska, M.; Tsai, K.H.; Rajski, J. Timing-Aware Multiple-Delay-Fault Diagnosis. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2009, 28, 245–258.

- Guo, R.; Cheng, W.T.; Kobayashi, T.; Tsai, K.H. Diagnostic test generation for small delay defect diagnosis. In Proceedings of the 2010 International Symposium on VLSI Design, Automation and Test, Hsin Chu, Taiwan, 26–29 April 2010; pp. 224–227.

- Li, X.; Lee, K.J.; Touba, N.A. Chapter 6–Test Compression. In VLSI Test Principles and Architectures; Wang, L.T., Wu, C.W., Wen, X., Eds.; Morgan Kaufmann: San Francisco, CA, USA, 2006; pp. 341–396.

- Holst, S.; Schneider, E.; Kochte, M.A.; Wen, X.; Wunderlich, H.J. Variation-Aware Small Delay Fault Diagnosis on Compressed Test Responses. In Proceedings of the 2019 IEEE International Test Conference (ITC), Washington, DC, USA, 9–15 November 2019; pp. 1–10.

- Schneider, E.; Kochte, M.A.; Holst, S.; Wen, X.; Wunderlich, H.J. GPU-Accelerated Simulation of Small Delay Faults. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2017, 36, 829–841.

- Holst, S.; Kampmann, M.; Sprenger, A.; Reimer, J.D.; Hellebrand, S.; Wunderlich, H.J.; Wen, X. Logic Fault Diagnosis of Hidden Delay Defects. In Proceedings of the 2020 IEEE International Test Conference (ITC), Washington, DC, USA, 1–6 November 2020; pp. 1–10.

- Devta-Prasanna, N.; Goel, S.K.; Gunda, A.; Ward, M.; Krishnamurthy, P. Accurate measurement of small delay defect coverage of test patterns. In Proceedings of the 2009 International Test Conference, Austin, TX, USA, 1–6 November 2009; pp. 1–10.

- Nigh, P.; Gattiker, A. Test method evaluation experiments and data. In Proceedings of the International Test Conference 2000 (IEEE Cat. No.00CH37159), Atlantic City, NJ, USA, 3–5 October 2000; pp. 454–463.

- Hasib, O.A.T.; Savaria, Y.; Thibeault, C. WeSPer: A flexible small delay defect quality metric. In Proceedings of the 2016 IEEE 34th VLSI Test Symposium (VTS), Las Vegas, NV, USA, 25–27 April 2016; pp. 1–6.

- Hasib, O.A.-T.; Savaria, Y.; Thibeault, C. Multi-PVT-Point Analysis and Comparison of Recent Small-Delay Defect Quality Metrics. J. Electron. Test. Theory Appl. JETTA 2019, 35, 823–838.

- Tessent® Scan and ATPG User’s Manual v2021.1; Mentor Graphics Corporation: Wilsonville, OR, USA, 2021.

- IEEE Std 1497-2001; IEEE Standard for Standard Delay Format (SDF) for the Electronic Design Process. IEEE: Piscataway, NJ, USA, 2001; pp. 1–80.

- TestMAX ATPG and TestMAX Diagnosis User Guide Version T-2022.03; Synopsys: Mountain View, CA, USA, 2022.

- IC Compiler II Industry Leading Place and Route System Datasheet. 2019. Available online: https://www.synopsys.com/content/dam/synopsys/implementation&signoff/datasheets/ic-compiler-ii-ds.pdf (accessed on 2 June 2022).

- StarRC Parasitic Extraction Datasheet. 2015. Available online: https://www.synopsys.com/content/dam/synopsys/implementation&signoff/datasheets/starrc-ds.pdf (accessed on 2 June 2022).

- PrimeTime Static Timing Analysis. Available online: https://www.synopsys.com/content/dam/synopsys/implementation&signoff/datasheets/primetime-ds.pdf (accessed on 2 June 2022).

- HSPICE User Guide. Available online: https://www.synopsys.com/content/dam/synopsys/verification/datasheets/hspice-ds.pdf (accessed on 2 June 2022).

- Cadence Modus DFT Software Solution. Available online: https://www.cadence.com/en_US/home/tools/digital-design-and-signoff/test/modus-test.html (accessed on 2 June 2022).

- Virtuoso Layout Suite L Datasheet. 2015. Available online: https://www.cadence.com/content/dam/cadence-www/global/en_US/documents/tools/custom-ic-analog-rf-design/virtuoso-vlsl-ds.pdf (accessed on 2 June 2022).

- Gao, Z.; Malagi, S.; Marinissen, E.J.; Swenton, J.; Huisken, J.; Goossens, K. Defect-Location Identification for Cell-Aware Test. In Proceedings of the 2019 IEEE Latin American Test Symposium (LATS), Santiago, Chile, 11–13 March 2019; pp. 1–6.

- Forero, F.; Villacorta, H.; Renovell, M.; Champac, V. Modeling and Detectability of Full Open Gate Defects in FinFET Technology. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2019, 27, 2180–2190.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Muthukrishnan, P.; Sathasivam, S. A Technical Survey on Delay Defects in Nanoscale Digital VLSI Circuits. Appl. Sci. 2022, 12, 9103. https://doi.org/10.3390/app12189103

Muthukrishnan P, Sathasivam S. A Technical Survey on Delay Defects in Nanoscale Digital VLSI Circuits. Applied Sciences. 2022; 12(18):9103. https://doi.org/10.3390/app12189103

Chicago/Turabian Style: Muthukrishnan, Prathiba, and Sivanantham Sathasivam. 2022. "A Technical Survey on Delay Defects in Nanoscale Digital VLSI Circuits" Applied Sciences 12, no. 18: 9103. https://doi.org/10.3390/app12189103