1. Introduction

The well-known tensor decompositions, namely Canonical Polyadic Decomposition (CPD), Higher-Order Singular Value Decomposition (HOSVD) [1,2,3], Tensor Train Decomposition (TTD) [4] and Hierarchical Tucker decomposition (H-Tucker) [5], together with their modifications [6], are based on the calculation of the eigenvalues and eigenvectors of the decomposed tensor. Their main advantage is that they are optimal with respect to the Mean Square Error (MSE) of the approximation when the low-energy decomposition components are truncated. The retained components are calculated by iterative methods (the power method [7], the Jacobi method [8], etc.), which need a relatively large number of operations to reach the required approximation accuracy.

As an alternative, this work presents new hierarchical approaches for 3D tensor decomposition based on well-known deterministic orthogonal transforms: the Walsh-Hadamard Transform (WHT) [9,10,11,12] and the Complex Hadamard Transform (CHT) [13]. The proposed decompositions are not optimal with respect to MSE minimization, but because they need no iterations, they have low Computational Complexity (CC) and are therefore suitable for the fast processing of 3D tensors obtained, for example, from a single 2D image or from a sequence of correlated 2D images. In the first case, the 2D image is divided into $N^2$ blocks of size N × N for $N = 2^n$, from which a sequence of N cubical tensors is obtained, each of size N × N × N. In the second case, each sequence of N 2D images of size N × N gives a single cubical tensor of size N × N × N. Examples of the transformation of a single 2D image and of a sequence of 2D video frames into a cubical (3D) tensor are shown in Figure 1.
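The two ways of forming cubical tensors described above can be sketched in Python as follows. The sketch assumes that the single image has size $N^2 \times N^2$, that the blocks are taken in row-major order, and that each row of N blocks is stacked into one tensor. These layout choices and the function names `image_to_cubical_tensors` and `frames_to_cubical_tensor` are assumptions made for illustration, since the text does not fix them.

```python
import numpy as np

def image_to_cubical_tensors(img, N):
    """Split an (N*N) x (N*N) image into N tensors of size N x N x N.

    Assumed layout: row-major block order, one tensor per row of blocks.
    """
    img = np.asarray(img)
    if img.shape != (N * N, N * N):
        raise ValueError("expected an image of size N^2 x N^2")
    # Axes after reshape: (block row, row in block, block column, column in block).
    blocks = img.reshape(N, N, N, N).transpose(0, 2, 1, 3)
    # blocks[t] is one N x N x N tensor; blocks[t, k] is its k-th N x N slice.
    return blocks

def frames_to_cubical_tensor(frames):
    """Stack N frames of size N x N into a single N x N x N tensor."""
    X = np.stack(frames, axis=0)
    N = X.shape[0]
    if X.shape != (N, N, N):
        raise ValueError("expected N frames, each of size N x N")
    return X
```

For N = 8, for example, a 64 × 64 image yields eight tensors of size 8 × 8 × 8, while eight video frames of size 8 × 8 yield one such tensor.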

The presented approach for fast hierarchical cubical tensor decomposition is applicable not only to images but also to various kinds of multidimensional signals (medical, seismic, spectrometric, etc.) and to big data analysis. Section 2, Section 3, Section 4 and Section 5 present the proposed algorithms for the 1D Hierarchical Fast Walsh-Hadamard Transform (1D-FWHT), the 1D Hierarchical Fast Complex Hadamard Transform (1D-FCHT), and the corresponding 3D hierarchical fast real and complex transforms (3D-FWHT and 3D-FCHT). Section 6 gives a comparative analysis of the CC of the presented algorithms for hierarchical orthogonal tensor transforms with respect to well-known deterministic and statistical transforms: the 3D Fast Fourier Transform (3D-FFT), the 3D Discrete Wavelet Transform (3D-DWT) and the Hierarchical Tucker decomposition (H-Tucker). The last section, Section 7, contains the conclusions.

Appendix A.1 and Appendix A.2 give the factorization of the matrices for the n-level one-dimensional fast hierarchical transforms 1D-FWHT and 1D-FCHT.

2. One-Dimensional Hierarchical Fast Walsh-Hadamard Transform

The following symbols are introduced for tensors, matrices and vectors, respectively: $\mathcal{X}$ for a tensor, $\mathbf{X}$ for a matrix and $\vec{x}$ for a vector.

The forward and inverse 1D Walsh-Hadamard Transform (1D-WHT) (frequency-ordered) is represented in scalar form by the equations below [9,10,11]:

where $N = 2^n$, and x(i) and s(u) are, respectively, the N-dimensional discrete signal and its spectrum. The discrete Walsh functions are defined by the following relations [10,12]:

Here, the operation “exclusive OR” is represented by the symbol ⊕.
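As an illustration of these definitions, the following Python sketch builds a frequency-ordered (sequency-ordered) Walsh matrix from bitwise XOR relations and applies the transform pair. It assumes the textbook form of the kernel, $wal(i,u) = (-1)^{\sum_r i_r q_r(u)}$ with $q_0(u) = u_{n-1}$ and $q_r(u) = u_{n-r} \oplus u_{n-1-r}$ for r = 1, …, n−1, and it places the 1/N factor on the inverse transform. Both choices, as well as the names `walsh_matrix`, `wht` and `iwht`, are assumptions made for this example.

```python
import numpy as np

def walsh_matrix(n):
    """Return the N x N sequency-ordered Walsh matrix for N = 2**n.

    Assumed kernel: wal(i, u) = (-1)**sum_r(i_r * q_r(u)), where
    q_0(u) = u_{n-1} and q_r(u) = u_{n-r} XOR u_{n-1-r} for r = 1..n-1.
    """
    N = 1 << n
    W = np.empty((N, N), dtype=int)
    for u in range(N):
        ub = [(u >> r) & 1 for r in range(n)]  # bits of u, least significant first
        q = [ub[n - 1]] + [ub[n - r] ^ ub[n - 1 - r] for r in range(1, n)]
        for i in range(N):
            exponent = sum(((i >> r) & 1) * q[r] for r in range(n))
            W[u, i] = -1 if exponent % 2 else 1
    return W

def wht(x):
    """Forward 1D-WHT (unnormalized): s(u) = sum_i x(i) wal(i, u)."""
    x = np.asarray(x, dtype=float)
    n = len(x).bit_length() - 1
    return walsh_matrix(n) @ x

def iwht(s):
    """Inverse 1D-WHT: x(i) = (1/N) sum_u s(u) wal(i, u)."""
    s = np.asarray(s, dtype=float)
    N = len(s)
    n = N.bit_length() - 1
    return walsh_matrix(n).T @ s / N
```

With this ordering, row u of the matrix has exactly u sign changes, so the index u plays the role of a frequency.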

The algorithm for the one-dimensional hierarchical fast Walsh-Hadamard transform (1D-FWHT) for the cubical tensor X of size N × N × N when $N = 2^n$ (a sequence of matrices $X_k$, each of size N × N, for k = 1, 2, …, N) is presented for N = 8 (i.e., for n = 3 levels). The general case of the transform, when $N = 2^n$ and n > 3, is given in Appendix A.1.

The direct and inverse 1D-WHT are built on the basic “butterfly” operation for N = 2, in accordance with the following relations [9,11]:

The application of the operation “butterfly” to the elements of the couples of matrices in each hierarchical level is represented by the equations below.

In Level 1 of the 1D-FWHT:

The matrix $G_1(8)$ of size 8 × 8 is used to execute the direct 1D-WHT for N = 2 on each couple of neighboring matrices in the sequence $X_k$ for k = 1, 2, …, 8. These matrices are the components of the input matrix-column X. From Equation (3), it follows that the matrix $G_1(8)$ can be represented in the following way:

Here, I(4) is the identity matrix of size 4 × 4, the symbol ⊗ stands for the Kronecker product of matrices [3], and Y is the transformed matrix-column whose components are the matrices $Y_k$ for k = 1, 2, …, 8.

After rearrangement 1, the following is obtained:

where $P_1(8)$ is a permutation matrix of size 8 × 8 that gives the rearranged matrix-column.

In Level 2 of the 1D-FWHT, the following is obtained:

where $G_2(8) = G_1(8)$. The components of the matrix-column Z are:

After rearrangement 2, the following is obtained:

where $P_2(8)$ is a permutation matrix of size 8 × 8 that gives the rearranged matrix-column.

In Level 3 of the 1D-FWHT, the following is obtained:

where D is the transformed matrix-column with components:

After rearrangement 3, the following is obtained:

where $P_3(8) = P_2(8)$ and E is the output matrix-column.

The relation between the input matrix-column X and the output matrix-column E, defined by Equations (4)–(13), is:

where the transform matrix is a frequency-ordered Hadamard matrix, defined as follows:

The rearrangement of the intermediate matrix components in each consecutive transform level must satisfy the requirement for frequency ordering of the output matrices [9,14]. The chosen matrix factorization allows the “butterfly” operation in the first two levels to be applied to the elements of neighboring couples of matrices from the sequence $X_k$ (k = 1, 2, …, 8), which builds the cubical tensor X of size 8 × 8 × 8. In this way, the decorrelation efficiency for the elements of each couple of transformed matrices is enhanced, which results in a higher energy concentration in the low-frequency components of the output matrix-column E. The result of the 1D-FWHT is the cubical tensor E of size 8 × 8 × 8, represented by the sequence of matrices $E_l$, each of size 8 × 8, for l = 1, 2, …, 8.

The graph of the 3-level 1D-FWHT algorithm for the tensor X of size 8 × 8 × 8 is shown in Figure 2. Here, the basic “butterfly” operation in the first two levels is framed in red.
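A fast transform of this kind can be sketched in Python as n levels of butterflies applied along the first axis of the tensor. The sketch below is not the factorization with a rearrangement after each level described above: it uses the standard radix-2 scheme in natural (Hadamard) order and applies a single permutation at the end to reach frequency order. The output is unnormalized, and the names `fwht_axis0`, `sequency_permutation` and `fwht_sequency` are hypothetical.

```python
import numpy as np

def fwht_axis0(X):
    """Fast WHT along axis 0 (length N = 2**n), natural order, unnormalized."""
    E = np.array(X, dtype=float)  # work on a copy
    N = E.shape[0]
    h = 1
    while h < N:  # one pass per level, n levels in total
        for start in range(0, N, 2 * h):
            for k in range(start, start + h):
                # Butterfly on the couple of slices (k, k + h).
                s = E[k] + E[k + h]
                d = E[k] - E[k + h]
                E[k] = s
                E[k + h] = d
        h *= 2
    return E

def sequency_permutation(n):
    """Natural-order row index for each frequency index u (bit-reversed Gray code)."""
    perm = []
    for u in range(1 << n):
        g = u ^ (u >> 1)  # Gray code of u
        perm.append(int(format(g, f"0{n}b")[::-1], 2))  # reverse its n bits
    return perm

def fwht_sequency(X):
    """Frequency-ordered fast WHT along axis 0 of X."""
    N = np.shape(X)[0]
    n = N.bit_length() - 1
    return fwht_axis0(X)[sequency_permutation(n)]
```

For a tensor of size 8 × 8 × 8 the three levels need 3 × 4 butterflies per spatial position, compared with 8 × 7 additions for a direct matrix product.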

An example of the 1D-FWHT is given below for the processing of a sequence of matrices $X_k$ for k = 1, 2, …, 8, in a particular case: the transform of the one element at the same spatial position (i,m) in each matrix k:

Then, in Level 1 of the 1D-FWHT:

After rearrangement 1:

In Level 2 of the 1D-FWHT:

After rearrangement 2:

In Level 3 of the 1D-FWHT:

After rearrangement 3:

Here, the computed values are, correspondingly, the elements of the sequences of matrices $Y_k$, $Z_k$, $D_k$ and $E_k$ for k = 1, 2, …, 8, calculated in Levels 1, 2 and 3 and at the graph output.

In accordance with the generalized Parseval equation [15], the following is obtained:

Here, the terms on the two sides are, respectively, the elements of the input tensor X and of the spectral tensor E. Then, for the example above with N = 8, Equation (16) gives:

Appendix A.1 describes in detail the frequency-ordered hierarchical n-level 1D-FWHT.
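As a worked check of the energy relation, assume that the forward 1D-FWHT is unnormalized, so that its frequency-ordered matrix $W$ has entries ±1 and satisfies $W^T W = N I$. This normalization is an assumption; the constant in Equation (16) may be placed differently. Writing $\mathbf{x}$ and $\mathbf{e} = W\mathbf{x}$ for the input and output elements at one spatial position (i,m), the relation follows in one line:

```latex
\sum_{l=1}^{N} e^2(i,m,l)
  = \mathbf{e}^T \mathbf{e}
  = \mathbf{x}^T W^T W \mathbf{x}
  = N \sum_{k=1}^{N} x^2(i,m,k)
```

Under this assumption, for N = 8 the energy of the eight output elements is eight times the energy of the eight input elements.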

3. One-Dimensional Hierarchical Fast Complex Hadamard Transform

The direct and inverse 1D Complex Hadamard Transform (1D-CHT) (frequency-ordered) is represented in scalar form by the equations below [14]:

where $N = 2^n \ge 4$ (the minimum possible value is n = 2); x(i) and s(u) are, correspondingly, the N-dimensional discrete signal and its complex spectrum; $j = \sqrt{-1}$; and the spectrum s(u) is split into its real and imaginary parts. The coefficients of the 1D-CHT matrix C(N) of size N × N are represented by the equation:

where i, u = 0, 1, …, $2^n - 1$. The sign function h(i,u) is:

Here, $\lfloor \cdot \rfloor$ is an operator that returns the integer part of the result of the division. The even coefficients of the 1D-CHT are real, and the odd ones are complex conjugates.

For N = 4 (respectively, n = 2), Equations (17)–(19) for i, u = 0,1,2,3 are transformed into:

In this case, in accordance with Equation (20), the calculation of the direct/inverse 1D-CHT needs four basic “butterfly” operations, whose weight coefficients are, respectively, (+1,−1) for three of the “butterflies” and (+j,−j) for the fourth.
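The four butterflies can be sketched in Python as follows. The sketch assumes the kernel $c(i,u) = j^{iu}$ for N = 4 (that is, a sign function equal to 1 when n = 2), the natural order of the outputs and a 1/4 factor on the inverse. These are assumptions for illustration, and the names `cht_matrix4`, `cht4` and `icht4` are hypothetical.

```python
import numpy as np

def cht_matrix4():
    """4 x 4 complex Hadamard matrix with the assumed kernel c(i, u) = j**(i*u)."""
    return np.array([[1j ** (i * u) for i in range(4)] for u in range(4)])

def cht4(x):
    """Direct 4-point CHT computed with four butterflies."""
    x0, x1, x2, x3 = x
    # Level 1: two butterflies with weights (+1, -1).
    a, b = x0 + x2, x0 - x2
    c, d = x1 + x3, x1 - x3
    # Level 2: one butterfly with weights (+1, -1) and one with (+j, -j).
    s0, s2 = a + c, a - c
    s1, s3 = b + 1j * d, b - 1j * d
    return np.array([s0, s1, s2, s3])

def icht4(s):
    """Inverse 4-point CHT using the conjugate matrix and a 1/4 factor."""
    return cht_matrix4().conj() @ np.asarray(s) / 4
```

For a real input, the outputs with even index are real and the two outputs with odd index form a complex-conjugate pair, which agrees with the property stated above.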

Figure 3 shows the calculation graph of the direct 3-level fast hierarchical 1D-CHT (n = 3) for N = 8, built in accordance with Figure 2.

The graph for the 1D-FCHT when N = 8 is created on the basis of Equation (20), to which four “butterfly” operations with weight coefficients (+1,−1) are added in the last, third level. The use of the frequency-ordered 1D-FCHT [14] gives the following system of equations, which represents the relations between the sequences of input and output matrices, $X_k$ and $E_k$ respectively, for k = 1, 2, …, 8 and N = 8:

Unlike Equation (14), some of the equations in the system above contain the complex variable j, and they represent complex matrices (these are the relations for $E_3$, $E_4$, $E_5$ and $E_6$). The remaining four equations (for $E_1$, $E_2$, $E_7$ and $E_8$) represent real matrices. Each complex matrix $E_k$ (k = 3, 4, 5, 6) has a real and an imaginary component; $\alpha_p$ and $\beta_p$ are sign functions with values of +1 or −1, defined by Equation (21). The elements e(i,m,k) of the complex matrix $E_k$ are defined as follows:

where the two quantities are the modulus and the phase of the element e(i,m,k).

The matrix $\Phi_k$ permits the phase modification of its elements, which could be used, for example, for the robust digital watermarking of multidimensional signals and images [14].

Appendix A.2 presents in detail the factorization of the matrix for an n-level hierarchical frequency-ordered 1D-FCHT, based on the “butterfly” operation (Equation (20)) used to execute the 1D-CHT for N = 4.

4. Hierarchical Cubical Tensor Decomposition through the 3D-FWHT

At first, here is described the decomposition of the cubical tensor

X of size 8 × 8 × 8. In this case, the decomposition could be represented in the simplified form shown in

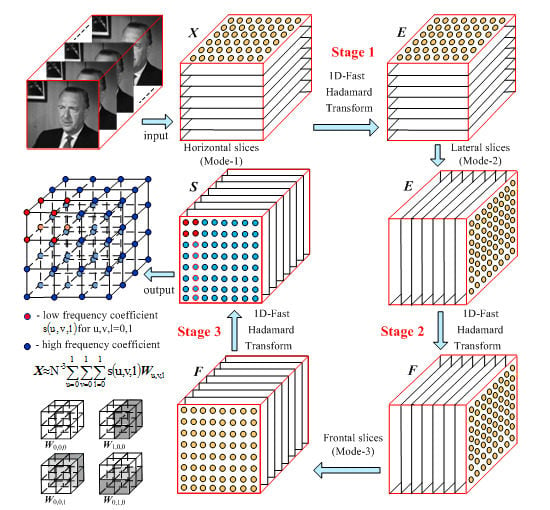

Figure 4.

The decomposition based on the 3D-FWHT comprises three stages. In the first stage, the tensor is divided into horizontal slices (mode-1), the 1D-FWHT is applied to each couple of matrices, and the tensor E is restored from the eight slices so obtained. The operations in the second and third stages are executed in a similar way. In the second stage, the input tensor E is divided into eight lateral slices (mode-2); after they are transformed through the 1D-FWHT, the tensor F is restored from the eight resulting slices. In the third stage, the tensor F is divided into eight frontal slices (mode-3), from which, after the 1D-FWHT, the spectrum tensor S with elements s(u,v,l) is obtained. From this tensor, the initial tensor X with elements x(i,m,k) is restored by executing the three stages of the inverse 1D-FWHT in reverse order. As a result of the decomposition, X is represented as a sum of weighted “basic” tensors of size 8 × 8 × 8 with elements wal(u,v,l), which are 3D Walsh functions:

The 3D functions wal(u,v,l) are separable [16,17], and they can be represented as the product of three 1D Walsh functions: wal(u)·wal(v)·wal(l). The coefficients s(u,v,l) for u = 0,1, v = 0,1 and l = 0,1 in the first layer correspond to the lowest spatial frequencies of the spectrum tensor S. Figure 5a shows an example of a spectrum tensor S of size 4 × 4 × 4, and Figure 5b shows the 8 “basic” tensors that correspond to the coefficients s(u,v,l) from the initial layer of the tensor S [18].

The 3D-FWHT algorithm shown in Figure 4 can be generalized for the case $N = 2^n$ by creating an extended n-level computational graph in correspondence with the transform matrix factorization explained in Appendix A.1. The number of stages needed for the execution of the 1D-FWHT (in accordance with Figure 4) is three, not only for N = 8 but also for the higher values N = 16, 32, …

In the general case, the spectrum coefficients s(u,v,l) are calculated through the direct 3D-WHT, in accordance with the relation [12,18]:

The 3D inverse WHT (3D-IWHT) is defined by the relation:

Here, the discrete one-dimensional Walsh-Hadamard (WH) functions of N-th order wal(i,u), wal(m,v), wal(k,l) for i, m, k = 0, 1, …, N−1 are defined by the following relations:

where the terms involved are defined by analogy with Equation (2) after converting the decimal numbers i, m, k and u, v, l into binary, respectively:

and

for $n = \log_2 N$.
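The separable structure of the 3D transform pair can be sketched in Python as follows. The sketch assumes an unnormalized forward transform, $s(u,v,l) = \sum_{i,m,k} x(i,m,k)\,wal(i,u)\,wal(m,v)\,wal(k,l)$, with the factor $1/N^3$ on the inverse, and it obtains the frequency-ordered Walsh matrix by sorting the rows of a Sylvester-type Hadamard matrix by their number of sign changes. The normalization and the names `walsh`, `wht3d`, `wht3d_staged` and `iwht3d` are assumptions made for this example.

```python
import numpy as np

def walsh(N):
    """Frequency-ordered Walsh matrix: Hadamard rows sorted by sign changes."""
    H = np.array([[1]])
    while H.shape[0] < N:
        H = np.kron(H, np.array([[1, 1], [1, -1]]))
    changes = (np.diff(H, axis=1) != 0).sum(axis=1)
    return H[np.argsort(changes)]

def wht3d(X):
    """Direct 3D-WHT as a single triple sum (unnormalized)."""
    W = walsh(X.shape[0])
    return np.einsum("ui,vm,lk,imk->uvl", W, W, W, X)

def wht3d_staged(X):
    """The same transform computed in three stages, one tensor mode at a time."""
    W = walsh(X.shape[0])
    E = np.einsum("ui,imk->umk", W, X)     # stage 1: transform along mode 1
    F = np.einsum("vm,umk->uvk", W, E)     # stage 2: transform along mode 2
    return np.einsum("lk,uvk->uvl", W, F)  # stage 3: transform along mode 3

def iwht3d(S):
    """Inverse 3D-WHT with the assumed 1/N**3 factor."""
    N = S.shape[0]
    W = walsh(N)
    return np.einsum("ui,vm,lk,uvl->imk", W, W, W, S) / N**3
```

Because the kernel is a product of three 1D Walsh functions, the staged version gives the same spectrum as the triple sum, which is why three 1D stages are enough for any $N = 2^n$.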

Each 3rd-order tensor X of size N × N × N can be calculated as the weighted sum of the $N^3$ 3D WH functions represented through “basic” tensors, each of size N × N × N:

Each “basic” tensor with frequency (u,v,l) can be represented through the outer product of three vectors:

where the vectors that represent the tensor are defined by the relations below:

Then, the tensor decomposition based on the 3D-WHT is defined by the relation:

As follows from Equations (25) and (32), the 3D-WHT is reversible, i.e., X can be restored from the tensor S through the 3D-IWHT. The decomposition in Equation (32) of the tensor X of size N × N × N corresponds to the Tucker decomposition [1,3,5]:

Here, $g_{r_1 r_2 r_3}$ are the entries of the core tensor G of size $R_1 \times R_2 \times R_3$, and $(R_1, R_2, R_3)$ is the multilinear rank of the tensor X. When X is a cube of size N × N × N, the tensor G is diagonal and of size R × R × R; $g_r$ (for r = 1, 2, …, R) are its eigenvalues, and the associated vectors are the eigenvectors. In this case, the Tucker decomposition is transformed into the CPD [1] and is represented by the relation below:

Here, R is the rank of the tensor X, whose value lies in a limited range [19]. The difference between the decompositions represented by Equations (33) and (32) is in the number of their components: in the first case it is ≤ $N^2$, and in the second it is ≤ $N^3$. This indicates a higher energy concentration in the first components of the H-Tucker decomposition (respectively, CPD) compared with that of the 3D-WHT.
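The representation of a tensor as a weighted sum of $N^3$ “basic” tensors, each an outer product of three Walsh vectors, can be sketched in Python as follows. As before, the unnormalized forward transform with a $1/N^3$ factor in the reconstruction is an assumption, and the names `walsh`, `basic_tensor` and `reconstruct_from_basic_tensors` are hypothetical.

```python
import numpy as np

def walsh(N):
    """Frequency-ordered Walsh matrix: Hadamard rows sorted by sign changes."""
    H = np.array([[1]])
    while H.shape[0] < N:
        H = np.kron(H, np.array([[1, 1], [1, -1]]))
    changes = (np.diff(H, axis=1) != 0).sum(axis=1)
    return H[np.argsort(changes)]

def basic_tensor(W, u, v, l):
    """'Basic' tensor with frequency (u, v, l): outer product of three Walsh rows."""
    return np.einsum("i,m,k->imk", W[u], W[v], W[l])

def reconstruct_from_basic_tensors(S, W):
    """Rebuild X as the weighted sum of all N**3 basic tensors."""
    N = S.shape[0]
    X = np.zeros((N, N, N))
    for u in range(N):
        for v in range(N):
            for l in range(N):
                X += S[u, v, l] * basic_tensor(W, u, v, l)
    return X / N**3
```

The basic tensors are fixed in advance and mutually orthogonal, so no iterations are needed to find them; the price is that up to $N^3$ terms may carry energy, in line with the comparison above.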