Conotoxin Prediction: New Features to Increase Prediction Accuracy

Abstract

:1. Introduction

2. Results

2.1. Construction of Datasets

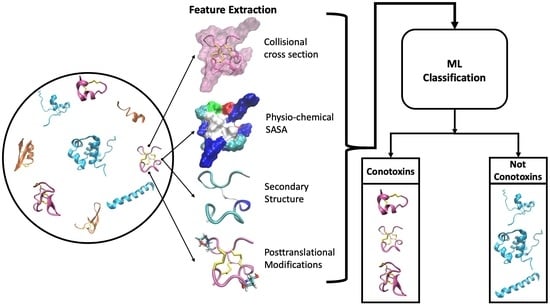

2.2. Feature Extraction and Selection

2.3. Effect of Features on Prediction Performance

2.3.1. Improved Predicting Power from New Features across All Datasets

2.3.2. Conotoxin Prediction Accuracy

2.4. A Comparison of Our Model Performance to Previously Published Models

3. Discussion

4. Materials and Methods

4.1. Construction of Datasets

4.2. Feature Extraction

4.3. Feature Selection Procedure

4.3.1. F-Score

4.3.2. Elimination of Highly Correlated Features

4.3.3. Incremental Feature Selection

4.4. Classifiers

4.5. Using Geometric SMOTE to Handle Imbalanced Datasets

4.6. Performance Evaluation

4.7. Machine Learning Pipeline

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Becker, S.; Terlau, H. Toxins from Cone Snails: Properties, Applications and Biotechnological Production. Appl. Microbiol. Biotechnol. 2008, 79, 1–9. [Google Scholar] [PubMed]

- Verdes, A.; Anand, P.; Gorson, J.; Jannetti, S.; Kelly, P.; Leffler, A.; Simpson, D.; Ramrattan, G.; Holford, M. From Mollusks to Medicine: A Venomics Approach for the Discovery and Characterization of Therapeutics from Terebridae Peptide Toxins. Toxins 2016, 8, 117. [Google Scholar] [PubMed]

- Zouari-Kessentini, R.; Srairi-Abid, N.; Bazaa, A.; El Ayeb, M.; Luis, J.; Marrakchi, N. Antitumoral Potential of Tunisian Snake Venoms Secreted Phospholipases A2. Biomed Res. Int. 2013, 2013, 391389. [Google Scholar]

- Wulff, H.; Castle, N.A.; Pardo, L.A. Voltage-Gated Potassium Channels as Therapeutic Targets. Nat. Rev. Drug Discov. 2009, 8, 982–1001. [Google Scholar] [PubMed]

- de Oliveira Junior, N.G.; e Silva Cardoso, M.H.; Franco, O.L. Snake Venoms: Attractive Antimicrobial Proteinaceous Compounds for Therapeutic Purposes. Cell Mol. Life Sci. 2013, 70, 4645–4658. [Google Scholar]

- Bagal, S.K.; Marron, B.E.; Owen, R.M.; Storer, R.I.; Swain, N.A. Voltage Gated Sodium Channels as Drug Discovery Targets. Channels 2015, 9, 360–366. [Google Scholar]

- Miljanich, G.P. Ziconotide: Neuronal Calcium Channel Blocker for Treating Severe Chronic Pain. Curr. Med. Chem. 2004, 11, 3029–3040. [Google Scholar]

- Krewski, D.; Acosta, D., Jr.; Andersen, M.; Anderson, H.; Bailar, J.C., III; Boekelheide, K.; Brent, R.; Charnley, G.; Cheung, V.G.; Green, S., Jr.; et al. Toxicity Testing in the 21st Century: A Vision and a Strategy. J. Toxicol. Environ. Health B Crit. Rev. 2010, 13, 51–138. [Google Scholar]

- Cole, T.J.; Brewer, M.S. Toxify: A Deep Learning Approach to Classify Animal Venom Proteins. PeerJ 2019, 7, e7200. [Google Scholar]

- Gacesa, R.; Barlow, D.J.; Long, P.F. Machine Learning Can Differentiate Venom Toxins from Other Proteins Having Non-Toxic Physiological Functions. PeerJ 2016, 2, e90. [Google Scholar]

- Naamati, G.; Askenazi, M.; Linial, M. Clantox: A Classifier of Short Animal Toxins. Nucleic Acids Res. 2009, 37, W363–W368. [Google Scholar] [PubMed]

- Gupta, S.; Kapoor, P.; Chaudhary, K.; Gautam, A.; Kumar, R.; Raghava, G.P. In Silico Approach for Predicting Toxicity of Peptides and Proteins. PLoS ONE 2013, 8, e73957. [Google Scholar]

- Fan, Y.X.; Song, J.; Shen, H.B.; Kong, X. Predcsf: An Integrated Feature-Based Approach for Predicting Conotoxin Superfamily. Protein Pept. Lett. 2011, 18, 261–267. [Google Scholar] [CrossRef] [PubMed]

- Zhang, R.; Snyder, G.H. Factors Governing Selective Formation of Specific Disulfides in Synthetic Variants Of Alpha-Conotoxin. Biochemistry 1991, 30, 11343–11348. [Google Scholar] [CrossRef] [PubMed]

- Gehrmann, J.; Alewood, P.F.; Craik, D.J. Structure Determination of the Three Disulfide Bond Isomers of Alpha-Conotoxin Gi: A Model for the Role of Disulfide Bonds in Structural Stability. J. Mol. Biol. 1998, 278, 401–415. [Google Scholar] [CrossRef] [PubMed]

- Xianfang, W.; Junmei, W.; Xiaolei, W.; Yue, Z. Predicting the Types of Ion Channel-Targeted Conotoxins Based on Avc-Svm Model. Biomed. Res. Int. 2017, 2017, 2929807. [Google Scholar]

- Yuan, L.F.; Ding, C.; Guo, S.H.; Ding, H.; Chen, W.; Lin, H. Prediction of the Types of Ion Channel-Targeted Conotoxins Based on Radial Basis Function Network. Toxicol. Vitr. 2013, 27, 852–856. [Google Scholar]

- Dutton, J.L.; Bansal, P.S.; Hogg, R.C.; Adams, D.J.; Alewood, P.F.; Craik, D.J. A New Level of Conotoxin Diversity, a Non-Native Disulfide Bond Connectivity in A-Conotoxin Auib Reduces Structural Definition but Increases Biological Activity. J. Biol. Chem. 2002, 277, 48849–48857. [Google Scholar]

- Tran, H.N.; McMahon, K.L.; Deuis, J.R.; Vetter, I.; Schroeder, C.I. Structural and Functional Insights into the Inhibition of Human Voltage-Gated Sodium Channels by Μ-Conotoxin Kiiia Disulfide Isomers. J. Biol. Chem. 2022, 298, 101728. [Google Scholar]

- Scanlon, M.J.; Naranjo, D.; Thomas, L.; Alewood, P.F.; Lewis, R.J.; Craik, D.J. Solution Structure and Proposed Binding Mechanism of a Novel Potassium Channel Toxin Κ-Conotoxin Pviia. Structure 1997, 5, 1585–1597. [Google Scholar] [CrossRef]

- Atkinson, R.A.; Kieffer, B.; Dejaegere, A.; Sirockin, F.; Lefèvre, J.F. Structural and Dynamic Characterization of Ω-Conotoxin Mviia: The Binding Loop Exhibits Slow Conformational Exchange. Biochemistry 2000, 39, 3908–3919. [Google Scholar] [CrossRef] [PubMed]

- Heerdt, G.; Zanotto, L.; Souza, P.C.; Araujo, G.; Skaf, M.S. Collision Cross Section Calculations Using Hpccs. Methods Mol. Biol. 2020, 2084, 297–310. [Google Scholar] [PubMed]

- Ho Thanh Lam, L.; Le, N.H.; Van Tuan, L.; Tran Ban, H.; Nguyen Khanh Hung, T.; Nguyen, N.T.K.; Huu Dang, L.; Le, N.Q.K. Machine Learning Model for Identifying Antioxidant Proteins Using Features Calculated from Primary Sequences. Biology 2020, 9, 325. [Google Scholar] [CrossRef] [PubMed]

- Manavalan, B.; Basith, S.; Shin, T.H.; Choi, S.; Kim, M.O.; Lee, G. Mlacp: Machine-Learning-Based Prediction of Anticancer Peptides. Oncotarget 2017, 8, 77121–77136. [Google Scholar] [CrossRef]

- ElAbd, H.; Bromberg, Y.; Hoarfrost, A.; Lenz, T.; Franke, A.; Wendorff, M. Amino Acid Encoding for Deep Learning Applications. BMC Bioinform. 2020, 21, 235. [Google Scholar] [CrossRef]

- Dao, F.Y.; Yang, H.; Su, Z.D.; Yang, W.; Wu, Y.; Ding, H.; Chen, W.; Tang, H.; Lin, H. Recent Advances in Conotoxin Classification by Using Machine Learning Methods. Molecules 2017, 22, 1057. [Google Scholar] [CrossRef]

- Joosten, R.P.; Te Beek, T.A.; Krieger, E.; Hekkelman, M.L.; Hooft, R.W.; Schneider, R.; Sander, C.; Vriend, G. A Series of PDB Related Databases for Everyday Needs. Nucleic Acids Res. 2011, 39, D411–D419. [Google Scholar] [CrossRef]

- Kabsch, W.; Sander, C. Dictionary of Protein Secondary Structure: Pattern Recognition of Hydrogen-Bonded and Geometrical Features. Biopolymers 1983, 22, 2577–2637. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J.H.; Friedman, J.H. The Elements of Statistical Learning. In Data Mining, Inference, and Prediction; Springer: New York, NY, USA, 2009; Volume 2. [Google Scholar]

- Vapnik, V. The Support Vector Method of Function Estimation. In Nonlinear Modeling: Advanced Black-Box Techniques; Springer: Berlin/Heidelberg, Germany, 1998; pp. 55–85. [Google Scholar]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. Xgboost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar]

- Kaas, Q.; Yu, R.; Jin, A.H.; Dutertre, S.; Craik, D.J. Conoserver: Updated Content, Knowledge, and Discovery Tools in the Conopeptide Database. Nucleic Acids Res. 2012, 40, D325–D330. [Google Scholar] [CrossRef]

- Berman, H.M.; Henrick, K.; Nakamura, H. Announcing the Worldwide Protein Data Bank. Nat. Struct. Mol. Biol. 2003, 10, 980. [Google Scholar] [CrossRef] [PubMed]

- Hoch, J.C.; Baskaran, K.; Burr, H.; Chin, J.; Eghbalnia, H.R.; Fujiwara, T.; Gryk, M.R.; Iwata, T.; Kojima, C.; Kurisu, G.; et al. Biological Magnetic Resonance Data Bank. Nucleic Acids Res. 2023, 51, D368–D376. [Google Scholar] [CrossRef] [PubMed]

- Zacharias, J.; Knapp, E.-W. Protein Secondary Structure Classification Revisited: Processing Dssp Information with Pssc. J. Chem. Inf. Model. 2014, 54, 2166–2179. [Google Scholar] [CrossRef] [PubMed]

- Dolinsky, T.J.; Czodrowski, P.; Li, H.; Nielsen, J.E.; Jensen, J.H.; Klebe, G.; Baker, N.A. Pdb2pqr: Expanding and Upgrading Automated Preparation of Biomolecular Structures for Molecular Simulations. Nucleic Acids Res. 2007, 35, W522–W525. [Google Scholar] [CrossRef] [PubMed]

- Dolinsky, T.J.; Nielsen, J.E.; McCammon, J.A.; Baker, N.A. Pdb2pqr: An Automated Pipeline for the Setup of Poisson–Boltzmann Electrostatics Calculations. Nucleic Acids Res. 2004, 32, W665–W667. [Google Scholar] [CrossRef]

- Ponder, J.W.; Case, D.A. Force Fields for Protein Simulations. Adv. Protein Chem. 2003, 66, 27–85. [Google Scholar]

- Liu, H.; Setiono, R. Incremental Feature Selection. Appl. Intell. 1998, 9, 217–230. [Google Scholar] [CrossRef]

- Zhang, Y.; Ling, C. A Strategy to Apply Machine Learning to Small Datasets in Materials Science. npj Comput. Mater. 2018, 4, 25. [Google Scholar] [CrossRef]

- Douzas, G.; Bacao, F. Geometric Smote a Geometrically Enhanced Drop-in Replacement for Smote. J. Inf. Sci. 2019, 501, 118–135. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. Smote: Synthetic Minority over-Sampling Technique. JAIR 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Dalianis, H.; Dalianis, H. Evaluation Metrics and Evaluation. In Clinical Text Mining: Secondary Use of Electronic Patient Records; Springer: Berlin/Heidelberg, Germany, 2018; pp. 45–53. [Google Scholar]

| Dataset Names | Number of Samples | Dataset Names | Number of Samples |

|---|---|---|---|

| Small positive | 154 | Extended positive | 184 |

| Small easy-negative | 180 | Extended easy-negative | 560 |

| Small hard-negative | 178 | Extended hard-negative | 317 |

| Datasets | Feature Sets | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| P | P + SS + CCS | P + P2 | P + SS + CCS + P2 | ||||||

| AA | f1 | AA | f1 | AA | f1 | AA | f1 | ||

| Small Datasets | Conotoxins vs. Easy-negative | 0.9457 | 0.9272 | 0.9311 | 0.9236 | 0.9468 | 0.9338 | 0.9422 | 0.9302 |

| Conotoxins vs. Hard-negative | 0.9376 | 0.9428 | 0.9437 | 0.9392 | 0.9550 | 0.9498 | 0.9489 | 0.9431 | |

| Conotoxins vs. Easy + Hard-negative | 0.9231 | 0.8917 | 0.9237 | 0.8967 | 0.9337 | 0.9045 | 0.9364 | 0.9170 | |

| Extended Datasets | Conotoxins vs. Easy-negative | 0.9490 | 0.9167 | 0.9504 | 0.9080 | 0.9483 | 0.9290 | 0.9571 | 0.9271 |

| Conotoxins vs. Hard-negative | 0.9308 | 0.9089 | 0.9292 | 0.9135 | 0.9396 | 0.9200 | 0.9387 | 0.9259 | |

| Conotoxins vs. Easy + Hard-negative | 0.9052 | 0.8824 | 0.9185 | 0.8854 | 0.9314 | 0.9027 | 0.9333 | 0.9035 | |

| Classifier | PLR | SVM | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Feature Sets | OA | AA | Recall | Precision | f1 | OA | AA | Recall | Precision | f1 |

| P | 0.9328 | 0.8978 | 0.8280 | 0.8953 | 0.8603 | 0.9355 | 0.9134 | 0.8735 | 0.8430 | 0.8580 |

| SS | 0.8884 | 0.8344 | 0.6996 | 0.9070 | 0.7899 | 0.8884 | 0.8344 | 0.6978 | 0.9128 | 0.7909 |

| SS + CCS | 0.8925 | 0.8392 | 0.7054 | 0.9186 | 0.7980 | 0.8804 | 0.8252 | 0.6797 | 0.9128 | 0.7792 |

| P + CCS | 0.9368 | 0.9058 | 0.8453 | 0.8895 | 0.8669 | 0.9341 | 0.9021 | 0.8398 | 0.8837 | 0.8612 |

| P + SS | 0.9462 | 0.9230 | 0.8793 | 0.8895 | 0.8844 | 0.9530 | 0.9303 | 0.8870 | 0.9128 | 0.8997 |

| P + SS + CCS | 0.9570 | 0.9365 | 0.8977 | 0.9186 | 0.9080 | 0.9476 | 0.9216 | 0.8715 | 0.9070 | 0.8889 |

| P + P2 | 0.9543 | 0.9389 | 0.9107 | 0.8895 | 0.9000 | 0.9570 | 0.9444 | 0.9217 | 0.8895 | 0.9053 |

| SS + P2 | 0.9328 | 0.8931 | 0.8081 | 0.9302 | 0.8649 | 0.9368 | 0.8993 | 0.8205 | 0.9302 | 0.8719 |

| CCS + SS + P2 | 0.9261 | 0.8836 | 0.7910 | 0.9244 | 0.8525 | 0.9328 | 0.8924 | 0.8050 | 0.9360 | 0.8656 |

| P + SS + CCS + P2 | 0.9664 | 0.9536 | 0.9298 | 0.9244 | 0.9271 | 0.9610 | 0.9444 | 0.9133 | 0.9186 | 0.9159 |

| Classifier | RF | XGBoost | ||||||||

| Feature Sets | OA | AA | Recall | Precision | f1 | OA | AA | Recall | Precision | f1 |

| P | 0.9624 | 0.9540 | 0.9390 | 0.8953 | 0.9167 | 0.9489 | 0.9312 | 0.8988 | 0.8779 | 0.8882 |

| SS | 0.9355 | 0.9080 | 0.8563 | 0.8663 | 0.8613 | 0.9382 | 0.9130 | 0.8663 | 0.8663 | 0.8663 |

| SS + CCS | 0.9435 | 0.9206 | 0.8779 | 0.8779 | 0.8779 | 0.9328 | 0.9030 | 0.8466 | 0.8663 | 0.8563 |

| P + CCS | 0.9597 | 0.9501 | 0.9329 | 0.8895 | 0.9107 | 0.9462 | 0.9273 | 0.8929 | 0.8721 | 0.8824 |

| P + SS | 0.9610 | 0.9511 | 0.9333 | 0.8953 | 0.9139 | 0.9597 | 0.9449 | 0.9176 | 0.9070 | 0.9123 |

| P + SS + CCS | 0.9556 | 0.9416 | 0.9162 | 0.8895 | 0.9027 | 0.9543 | 0.9357 | 0.9012 | 0.9012 | 0.9012 |

| P + P2 | 0.9677 | 0.9599 | 0.9458 | 0.9128 | 0.9290 | 0.9610 | 0.9493 | 0.9281 | 0.9012 | 0.9145 |

| SS + P2 | 0.9570 | 0.9462 | 0.9268 | 0.8837 | 0.9048 | 0.9530 | 0.9361 | 0.9053 | 0.8895 | 0.8974 |

| CCS + SS + P2 | 0.9597 | 0.9520 | 0.9383 | 0.8837 | 0.9102 | 0.9503 | 0.9308 | 0.8947 | 0.8895 | 0.8921 |

| P + SS + CCS + P2 | 0.9651 | 0.9579 | 0.9451 | 0.9012 | 0.9226 | 0.9624 | 0.9522 | 0.9337 | 0.9012 | 0.9172 |

| Classifier | PLR | SVM | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Feature Sets | OA | AA | Recall | Precision | f1 | OA | AA | Recall | Precision | f1 |

| P | 0.9159 | 0.9021 | 0.8580 | 0.8978 | 0.8740 | 0.9205 | 0.9033 | 0.8488 | 0.9164 | 0.8786 |

| SS | 0.8737 | 0.8787 | 0.8946 | 0.7800 | 0.8301 | 0.8716 | 0.8789 | 0.9022 | 0.7727 | 0.8294 |

| SS + CCS | 0.8723 | 0.8779 | 0.8958 | 0.7757 | 0.8287 | 0.8694 | 0.8766 | 0.8996 | 0.7690 | 0.8262 |

| P + CCS | 0.9171 | 0.9059 | 0.8701 | 0.8908 | 0.8770 | 0.9213 | 0.9062 | 0.8582 | 0.9115 | 0.8810 |

| P + SS | 0.9229 | 0.9139 | 0.8856 | 0.8937 | 0.8867 | 0.9225 | 0.9126 | 0.8812 | 0.8954 | 0.8857 |

| P + SS + CCS | 0.9212 | 0.9118 | 0.8820 | 0.8931 | 0.8843 | 0.9227 | 0.9132 | 0.8829 | 0.8961 | 0.8864 |

| P + P2 | 0.9290 | 0.9180 | 0.8831 | 0.9120 | 0.8943 | 0.9344 | 0.9222 | 0.8835 | 0.9260 | 0.9014 |

| SS + P2 | 0.9153 | 0.9161 | 0.9189 | 0.8521 | 0.8819 | 0.9112 | 0.9130 | 0.9190 | 0.8434 | 0.8772 |

| CCS + SS + P2 | 0.9162 | 0.9166 | 0.9183 | 0.8547 | 0.8831 | 0.9075 | 0.9109 | 0.9217 | 0.8353 | 0.8734 |

| P + SS + CCS + P2 | 0.9314 | 0.9242 | 0.9015 | 0.9035 | 0.8995 | 0.9356 | 0.9264 | 0.8974 | 0.9176 | 0.9048 |

| Classifier | RF | XGBoost | ||||||||

| Feature Sets | OA | AA | Recall | Precision | f1 | OA | AA | Recall | Precision | f1 |

| P | 0.9386 | 0.9289 | 0.8979 | 0.9237 | 0.9089 | 0.9347 | 0.9256 | 0.8968 | 0.9152 | 0.9031 |

| SS | 0.9165 | 0.9091 | 0.8859 | 0.8786 | 0.8793 | 0.9129 | 0.9033 | 0.8727 | 0.8790 | 0.8728 |

| SS + CCS | 0.9120 | 0.9052 | 0.8835 | 0.8696 | 0.8733 | 0.9125 | 0.9038 | 0.8766 | 0.8754 | 0.8727 |

| P + CCS | 0.9399 | 0.9305 | 0.9005 | 0.9261 | 0.9103 | 0.9358 | 0.9258 | 0.8941 | 0.9212 | 0.9049 |

| P + SS | 0.9395 | 0.9289 | 0.8951 | 0.9297 | 0.9096 | 0.9339 | 0.9243 | 0.8938 | 0.9158 | 0.9019 |

| P + SS + CCS | 0.9424 | 0.9315 | 0.8970 | 0.9350 | 0.9135 | 0.9340 | 0.9247 | 0.8954 | 0.9151 | 0.9025 |

| P + P2 | 0.9463 | 0.9364 | 0.9051 | 0.9396 | 0.9199 | 0.9440 | 0.9367 | 0.9134 | 0.9265 | 0.9175 |

| SS + P2 | 0.9428 | 0.9344 | 0.9074 | 0.9275 | 0.9154 | 0.9222 | 0.9147 | 0.8908 | 0.8892 | 0.8867 |

| CCS + SS + P2 | 0.9392 | 0.9314 | 0.9070 | 0.9187 | 0.9104 | 0.9229 | 0.9153 | 0.8910 | 0.8899 | 0.8877 |

| P + SS + CCS + P2 | 0.9506 | 0.9408 | 0.9092 | 0.9467 | 0.9259 | 0.9431 | 0.9345 | 0.9070 | 0.9289 | 0.9158 |

| Classifier | PLR | SVM | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Feature Sets | OA | AA | Recall | Precision | f1 | OA | AA | Recall | Precision | f1 |

| P | 0.9370 | 0.9159 | 0.8848 | 0.7700 | 0.8197 | 0.9403 | 0.9190 | 0.8877 | 0.7805 | 0.8273 |

| SS | 0.8840 | 0.8916 | 0.9028 | 0.5980 | 0.7166 | 0.8737 | 0.8850 | 0.9017 | 0.5722 | 0.6979 |

| SS + CCS | 0.8814 | 0.8899 | 0.9024 | 0.5908 | 0.7113 | 0.8759 | 0.8882 | 0.9063 | 0.5777 | 0.7032 |

| P + CCS | 0.9436 | 0.9199 | 0.8851 | 0.7963 | 0.8352 | 0.9453 | 0.9228 | 0.8896 | 0.8020 | 0.8402 |

| P + SS | 0.9469 | 0.9253 | 0.8934 | 0.8054 | 0.8444 | 0.9467 | 0.9248 | 0.8925 | 0.8048 | 0.8433 |

| P + SS + CCS | 0.9437 | 0.9260 | 0.8998 | 0.7901 | 0.8380 | 0.9458 | 0.9292 | 0.9047 | 0.7965 | 0.8443 |

| P + P2 | 0.9535 | 0.9276 | 0.8894 | 0.8371 | 0.8599 | 0.9524 | 0.9234 | 0.8808 | 0.8376 | 0.8554 |

| SS + P2 | 0.9191 | 0.9162 | 0.9120 | 0.6919 | 0.7841 | 0.9163 | 0.9195 | 0.9242 | 0.6818 | 0.7821 |

| CCS + SS + P2 | 0.9201 | 0.9203 | 0.9205 | 0.6949 | 0.7891 | 0.9105 | 0.9182 | 0.9295 | 0.6611 | 0.7705 |

| P + SS + CCS + P2 | 0.9534 | 0.9315 | 0.8992 | 0.8322 | 0.8618 | 0.9503 | 0.9262 | 0.8907 | 0.8242 | 0.8518 |

| Classifier | RF | XGBoost | ||||||||

| Feature Sets | OA | AA | Recall | Precision | f1 | OA | AA | Recall | Precision | f1 |

| P | 0.9658 | 0.9087 | 0.8248 | 0.9579 | 0.8824 | 0.9611 | 0.9161 | 0.8499 | 0.9078 | 0.8743 |

| SS | 0.9516 | 0.8771 | 0.7676 | 0.9209 | 0.8324 | 0.9466 | 0.8881 | 0.8020 | 0.8631 | 0.8267 |

| SS + CCS | 0.9505 | 0.8718 | 0.7560 | 0.9222 | 0.8274 | 0.9431 | 0.8798 | 0.7866 | 0.8538 | 0.8141 |

| P + CCS | 0.9670 | 0.9092 | 0.8242 | 0.9667 | 0.8876 | 0.9642 | 0.9214 | 0.8584 | 0.9167 | 0.8836 |

| P + SS | 0.9666 | 0.9064 | 0.8179 | 0.9711 | 0.8844 | 0.9646 | 0.9223 | 0.8600 | 0.9188 | 0.8850 |

| P + SS + CCS | 0.9659 | 0.9052 | 0.8158 | 0.9665 | 0.8819 | 0.9646 | 0.9224 | 0.8604 | 0.9186 | 0.8854 |

| P + P2 | 0.9716 | 0.9188 | 0.8409 | 0.9811 | 0.9027 | 0.9702 | 0.9336 | 0.8798 | 0.9342 | 0.9033 |

| SS + P2 | 0.9673 | 0.9139 | 0.8352 | 0.9585 | 0.8895 | 0.9583 | 0.9051 | 0.8269 | 0.9089 | 0.8621 |

| CCS + SS + P2 | 0.9664 | 0.9103 | 0.8277 | 0.9588 | 0.8849 | 0.9610 | 0.9137 | 0.8441 | 0.9108 | 0.8730 |

| P + SS + CCS + P2 | 0.9720 | 0.9201 | 0.8438 | 0.9789 | 0.9035 | 0.9683 | 0.9266 | 0.8652 | 0.9363 | 0.8961 |

| Method | Training Set | Acc | Recall | Types of Features | Usage |

|---|---|---|---|---|---|

| This paper (RF) | See Methods | 0.9624 | 0.9390 | Sequence | Used to predict conotoxins from non-toxic peptides |

| This paper (RF) | See Methods | 0.9677 | 0.9458 | Sequence and PTMs | Used to predict conotoxins from non-toxic peptides |

| TOXIFY [9] | Swiss-Prot-derived | 0.8600 | 0.7600 | Sequence | Used to predict if a peptide is toxic |

| ToxClassifier [10] | Swiss-Prot-derived | 0.7700 | 0.5600 | Sequence | Used to predict if a peptide is toxic |

| ClanTox [11] | Swiss-Prot-derived | 0.6800 | 0.5400 | Sequence | Used to predict if a peptide is toxic |

| ToxinPred [12] | Composite dataset | 0.9450 | 0.9380 | Sequence | Used to predict if a peptide is toxic |

| Pred-CSF [13] | S2 | 0.9065 | 0.8793 | Sequence | Used to predict conotoxin super families |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Monroe, L.K.; Truong, D.P.; Miner, J.C.; Adikari, S.H.; Sasiene, Z.J.; Fenimore, P.W.; Alexandrov, B.; Williams, R.F.; Nguyen, H.B. Conotoxin Prediction: New Features to Increase Prediction Accuracy. Toxins 2023, 15, 641. https://doi.org/10.3390/toxins15110641

Monroe LK, Truong DP, Miner JC, Adikari SH, Sasiene ZJ, Fenimore PW, Alexandrov B, Williams RF, Nguyen HB. Conotoxin Prediction: New Features to Increase Prediction Accuracy. Toxins. 2023; 15(11):641. https://doi.org/10.3390/toxins15110641

Chicago/Turabian StyleMonroe, Lyman K., Duc P. Truong, Jacob C. Miner, Samantha H. Adikari, Zachary J. Sasiene, Paul W. Fenimore, Boian Alexandrov, Robert F. Williams, and Hau B. Nguyen. 2023. "Conotoxin Prediction: New Features to Increase Prediction Accuracy" Toxins 15, no. 11: 641. https://doi.org/10.3390/toxins15110641