A Comprehensive Survey on SAR ATR in Deep-Learning Era

Abstract

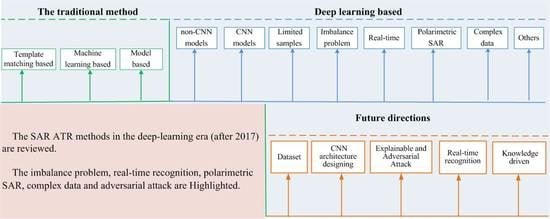

1. Introduction

2. Related Work

3. Datasets and Evaluation Metrics

3.1. Datasets

3.2. Evaluation Metrics

4. The Traditional Methods

4.1. Template-Matching-Based Methods

4.2. Machine-Learning-Based Methods

4.3. Model-Based Methods

5. The Deep-Learning-Based Methods

5.1. The Non-CNN Models

5.2. The CNN Models

5.2.1. Off-the-Shelf CNNs Borrowed from Computer Vision

5.2.2. Specialized CNN for SAR ATR

a. The Shallow CNN

b. The Deep CNN

5.2.3. Attention-Based CNN

5.2.4. Capsule Network

5.2.5. Others

5.3. Methods to Solve the Problem Raised by Limited Samples

5.3.1. Data Augmentation

5.3.2. GAN for Generating New Samples

5.3.3. Electromagnetic Simulation for Generating New Samples

5.3.4. Transfer Learning

5.3.5. Few-Shot Learning

5.3.6. Semi-Supervised Learning

5.3.7. Metric Learning

5.3.8. Adding Domain Knowledge

5.4. Imbalance across Classes

5.5. Real-Time Recognition

5.6. Polarimetric SAR

5.7. Complex Data

5.8. Others

5.8.1. The Use of Attributed Scattering Centers (ASC)

5.8.2. Combining the Traditional Features with CNN

5.8.3. Explainability

5.8.4. Adversarial Attack

6. Future Directions

6.1. The Dataset

6.2. CNN Architecture Design

6.3. Knowledge-Driven Dataset

6.4. Real-Time Recognition

6.5. Explainability and Adversarial Attacks

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43. [Google Scholar] [CrossRef] [Green Version]

- Reigber, A.; Scheiber, R.; Jager, M.; Prats-Iraola, P.; Hajnsek, I.; Jagdhuber, T.; Papathanassiou, K.P.; Nannini, M.; Aguilera, E.; Baumgartner, S.; et al. Very-high-resolution airborne synthetic aperture radar imaging: Signal processing and applications. Proc. IEEE 2013, 101, 759–783. [Google Scholar] [CrossRef] [Green Version]

- Ross, T.D.; Bradley, J.J.; Hudson, L.J. SAR ATR: So what’s the problem? An MSTAR perspective. In Proceedings of the SPIE 3721, Algorithms for Synthetic Aperture Radar Imagery VI, Orlando, FL, USA, 13 April 1999; SPIE: Bellingham, WA, USA, 1999; pp. 662–672. [Google Scholar] [CrossRef]

- Li, H.C.; Hong, W.; Wu, Y.R.; Fan, P.Z. An efficient and flexible statistical model based on generalized Gamma distribution for amplitude SAR images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2711–2722. [Google Scholar] [CrossRef]

- Achim, A.; Kuruoglu, E.E.; Zerubia, J. SAR image filtering based on the heavy-tailed Rayleigh model. IEEE Trans. Image Process. 2006, 15, 2686–2693. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kang, M.; Leng, X.; Lin, Z.; Ji, K. A modified faster R-CNN based on CFAR algorithm for SAR ship detection. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 18–21 May 2017; IEEE: New York, NY, USA, 2017. [Google Scholar] [CrossRef]

- Kreithen, D.E.; Halversen, S.D.; Owirka, G.J. Discriminating targets from clutter. Linc. Lab. J. 1993, 6, 25–52. [Google Scholar]

- Schwegmann, C.P.; Kleynhans, W.; Salmon, B.P.; Mdakane, L.W.; Meyer, R.G. Very deep learning for ship discrimination in Synthetic Aperture Radar imagery. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 104–107. [Google Scholar] [CrossRef]

- Li, Y.; Chang, Z.; Ning, W. A survey on feature extraction of SAR Images. In Proceedings of the 2010 International Conference on Computer Application and System Modeling (ICCASM 2010), Taiyuan, China, 22–24 October 2010; IEEE: New York, NY, USA, 2010; pp. V1-312–V1-317. [Google Scholar] [CrossRef]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Fei-Fei, L. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef] [Green Version]

- Fan, W.; Chao, W.; Bo, Z.; Hong, Z.; Xiaojuan, T. Study on Vessel Classification in SAR Imagery: A Survey. Remote Sens. Technol. Appl. 2014, 29, 1–8. [Google Scholar] [CrossRef]

- Wang, Z.; Xin, Z.; Huang, X.; Sun, Y.; Xuan, J. Overview of SAR Image Feature Extraction and Target Recognition. In 3D Imaging Technologies—Multi-Dimensional Signal Processing and Deep Learning; Jain, L.C., Kountchev, R., Shi, J., Eds.; Smart Innovation, Systems and Technologies; Springer: Singapore, 2021; Volume 234. [Google Scholar] [CrossRef]

- Kechagias-Stamatis, O.; Aouf, N. Automatic target recognition on synthetic aperture radar imagery: A survey. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 56–81. [Google Scholar] [CrossRef]

- El-Darymli, K.; Gill, E.W.; Mcguire, P.; Power, D.; Moloney, C. Automatic Target Recognition in Synthetic Aperture Radar Imagery: A State-of-the-Art Review. IEEE Access 2016, 4, 6014–6058. [Google Scholar] [CrossRef] [Green Version]

- Song, J.; Gao, S.; Zhu, Y.; Ma, C. A survey of remote sensing image classification based on CNNs. Big Earth Data 2019, 3, 232–254. [Google Scholar] [CrossRef]

- Ball, J.E.; Anderson, D.T.; Chan, C.S. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 042609. [Google Scholar] [CrossRef] [Green Version]

- Keydel, E.R.; Lee, S.W.; Moore, J.T. MSTAR extended operating conditions: A tutorial. In Proceedings of the 3rd SPIE Conference Algorithms SAR Imagery, Orlando, FL, USA, 10 June 1996; SPIE: Bellingham, WA, USA, 1996; Volume 2757, pp. 228–242. [Google Scholar] [CrossRef]

- Huang, L.; Liu, B.; Li, B.; Guo, W.; Yu, W.; Zhang, Z.; Yu, W. OpenSARShip: A dataset dedicated to Sentinel-1 ship interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 195–208. [Google Scholar] [CrossRef]

- Li, B.; Liu, B.; Huang, L.; Guo, W.; Zhang, Z.; Yu, W. OpenSARShip 2.0: A large-volume dataset for deeper interpretation of ship targets in Sentinel-1 imagery. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; IEEE: New York, NY, USA, 2017; pp. 1–5. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, Z.; Yao, W.; Datcu, M.; Xiong, H.; Yu, W. OpenSARUrban: A Sentinel-1 SAR image dataset for urban interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 187–203. [Google Scholar] [CrossRef]

- Hou, X.; Ao, W.; Song, Q.; Lai, J.; Wang, H.; Xu, F. FUSAR-Ship: Building a high-resolution SAR-AIS matchup dataset of Gaofen-3 for ship detection and recognition. Sci. China Inf. Sci. 2020, 63, 140303. [Google Scholar] [CrossRef] [Green Version]

- Sellers, S.R.; Collins, P.J.; Jackson, J.A. Augmenting simulations for SAR ATR neural network training. In Proceedings of the 2020 IEEE International Radar Conference (RADAR), Washington, DC, USA, 28–30 April 2020; pp. 309–314. [Google Scholar] [CrossRef]

- Ikeuchi, K.; Shakunaga, T.; Wheeler, M.D.; Yamazaki, T. Invariant histograms and deformable template matching for SAR target recognition. In Proceedings of the Proceedings CVPR IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 18–20 June 1996; IEEE: New York, NY, USA, 1996; pp. 100–105. [Google Scholar] [CrossRef]

- Fu, K.; Dou, F.Z.; Li, H.C.; Diao, W.H.; Sun, X.; Xu, G.L. Aircraft recognition in SAR images based on scattering structure feature and template matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4206–4217. [Google Scholar] [CrossRef]

- Meth, R.; Chellappa, R. Feature matching and target recognition in synthetic aperture radar imagery. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Phoenix, AZ, USA, 15–19 March 1999; IEEE: New York, NY, USA, 1999; pp. 3333–3336. [Google Scholar] [CrossRef]

- Nicoli, L.P.; Anagnostopoulos, G.C. Shape-based recognition of targets in synthetic aperture radar images using elliptical Fourier descriptors. In Automatic Target Recognition XVIII; SPIE: Bellingham, WA, USA, 2008; Volume 6967, pp. 148–159. [Google Scholar] [CrossRef]

- Park, J.I.; Park, S.H.; Kim, K.T. New discrimination features for SAR automatic target recognition. IEEE Geosci. Remote Sens. Lett. 2012, 10, 476–480. [Google Scholar] [CrossRef]

- Sun, Y.; Liu, Z.; Todorovic, S.; Li, J. Adaptive boosting for SAR automatic target recognition. IEEE Trans. Aerosp. Electron. Syst. 2007, 43, 112–125. [Google Scholar] [CrossRef]

- Diemunsch, J.R.; Wissinger, J. Moving and stationarytarget acquisition and recognition (MSTAR) model-basedautomatic target recognition: Search technology for a robustATR. In Proceedings of the SPIE 3370, Algorithms for Synthetic Aperture Radar Imagery V, Orlando, FL, USA, 14–17 April 1998; SPIE: Bellingham, WA, USA, 1998; pp. 481–492. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: New York, NY, USA, 2015; pp. 1–9. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778. [Google Scholar]

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 1492–1500. [Google Scholar]

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 2261–2269. [Google Scholar]

- Larochelle, H.; Mandel, M.; Pascanu, R.; Bengio, Y. Learning algorithms for the classification restricted Boltzmann machine. J. Mach. Learn. Res. 2012, 13, 643–669. [Google Scholar]

- Hua, Y.; Guo, J.; Zhao, H. Deep belief networks and deep learning. In Proceedings of the 2015 International Conference on Intelligent Computing and Internet of Things, Harbin, China, 17–18 January 2015; IEEE: New York, NY, USA, 2015; pp. 1–4. [Google Scholar]

- Zhao, Z.; Jiao, L.; Zhao, J.; Gu, J.; Zhao, J. Discriminant deep belief network for high-resolution SAR image classification. Pattern Recognit. 2017, 61, 686–701. [Google Scholar] [CrossRef]

- Sun, Z.; Xue, L.; Xu, Y. Recognition of SAR target based on multilayer auto-encoder and SNN. Int. J. Innov. Comput. Inf. Control 2013, 9, 4331–4341. [Google Scholar]

- Guo, J.; Wang, L.; Zhu, D.; Hu, C. Compact convolutional autoencoder for SAR target recognition. IET Radar Sonar Navig. 2020, 14, 967–972. [Google Scholar] [CrossRef]

- Li, X.; Li, C.; Wang, P.; Men, Z.; Xu, H. SAR ATR based on dividing CNN into CAE and SNN. In Proceedings of the 5th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Singapore, 1–4 September 2015; IEEE: New York, NY, USA, 2015; pp. 676–679. [Google Scholar] [CrossRef]

- Bentes, C.; Velotto, D.; Lehner, S. Target classification in oceanographic SAR images with deep neural networks: Architecture and initial results. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; IEEE: New York, NY, USA, 2015; pp. 3703–3706. [Google Scholar] [CrossRef]

- Shao, J.; Qu, C.; Li, J. A performance analysis of convolutional neural network models in SAR target recognition. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; IEEE: New York, NY, USA, 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Fu, Z.; Zhang, F.; Yin, Q.; Li, R.; Hu, W.; Li, W. Small Sample Learning Optimization for Resnet Based Sar Target Recognition. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 2330–2333. [Google Scholar] [CrossRef]

- Soldin, R.J. SAR Target Recognition with Deep Learning. In Proceedings of the 2018 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 9–11 October 2018; IEEE: New York, NY, USA, 2018; pp. 1–8. [Google Scholar] [CrossRef]

- Anas, H.; Majdoulayne, H.; Chaimae, A.; Nabil, S.M. Deep Learning for SAR Image Classification. In Intelligent Systems and Applications. IntelliSys 2019; Bi, Y., Bhatia, R., Kapoor, S., Eds.; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2019; Volume 1037. [Google Scholar] [CrossRef]

- Morgan, D.A.E. Deep convolutional neural networks for ATR from SAR imagery. In Proceedings of the SPIE 9475, Algorithms for Synthetic Aperture Radar Imagery XXII, 94750F, Baltimore, MD, USA, 13 May 2015. [Google Scholar] [CrossRef]

- Chen, S.; Wang, H.; Xu, F.; Jin, Y.Q. Target Classification Using the Deep Convolutional Networks for SAR Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817. [Google Scholar] [CrossRef]

- Xu, Y.; Liu, K.; Ying, Z.; Shang, L.; Liu, J.; Zhai, Y.; Piuri, V.; Scotti, F. SAR Automatic Target Recognition Based on Deep Convolutional Neural Network. In Image and Graphics. ICIG 2017; Zhao, Y., Kong, X., Taubman, D., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10668. [Google Scholar] [CrossRef]

- Li, Y.; Wang, J.; Xu, Y.; Li, H.; Miao, Z.; Zhang, Y. DeepSAR-Net: Deep convolutional neural networks for SAR target recognition. In Proceedings of the 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), Beijing, China, 10–12 March 2017; IEEE: New York, NY, USA, 2017; pp. 740–743. [Google Scholar] [CrossRef]

- Liu, Q.; Li, S.; Mei, S.; Jiang, R.; Li, J. Feature Learning for SAR Images Using Convolutional Neural Network. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 7003–7006. [Google Scholar] [CrossRef]

- Qiao, W.; Zhang, X.; Fen, G. An automatic target recognition algorithm for SAR image based on improved convolution neural network. In Proceedings of the 2017 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; IEEE: New York, NY, USA, 2017; pp. 551–555. [Google Scholar] [CrossRef]

- Zhou, F.; Wang, L.; Bai, X.; Hui, Y. SAR ATR of Ground Vehicles Based on LM-BN-CNN. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7282–7293. [Google Scholar] [CrossRef]

- Cho, J.H.; Park, C.G. Additional feature CNN based automatic target recognition in SAR image. In Proceedings of the 2017 Fourth Asian Conference on Defence Technology—Japan (ACDT), Tokyo, Japan, 29 November–1 December 2017; IEEE: New York, NY, USA, 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Zhao, P.; Liu, K.; Zou, H.; Zhen, X. Multi-stream convolutional neural network for SAR automatic target recognition. Remote Sens. 2018, 10, 1473. [Google Scholar] [CrossRef] [Green Version]

- Lang, P.; Fu, X.; Feng, C.; Dong, J.; Qin, R.; Martorella, M. LW-CMDANet: A Novel Attention Network for SAR Automatic Target Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6615–6630. [Google Scholar] [CrossRef]

- Zhai, Y.; Ma, H.; Cao, H.; Deng, W.; Liu, J.; Zhang, Z.; Guan, H.; Zhi, Y.; Wang, J.; Zhou, J. MF-SarNet: Effective CNN with data augmentation for SAR automatic target recognition. J. Eng. 2019, 2019, 5813–5818. [Google Scholar] [CrossRef]

- Xie, Y.; Dai, W.; Hu, Z.; Liu, Y.; Li, C.; Pu, X. A novel convolutional neural network architecture for SAR target recognition. J. Sens. 2019, 2019, 1246548. [Google Scholar] [CrossRef] [Green Version]

- Huang, G.; Liu, X.; Hui, J.; Wang, Z.; Zhang, Z. A novel group squeeze excitation sparsely connected convolutional networks for SAR target classification. Int. J. Remote Sens. 2019, 40, 4346–4360. [Google Scholar] [CrossRef]

- Dong, G.; Liu, H. Global Receptive-Based Neural Network for Target Recognition in SAR Images. IEEE Trans. Cybern. 2021, 51, 1954–1967. [Google Scholar] [CrossRef]

- Wang, W.; Zhang, C.; Tian, J.; Ou, J.; Li, J. A SAR Image Target Recognition Approach via Novel SSF-Net Models. Comput. Intell. Neurosci. 2020, 2020, 8859172. [Google Scholar] [CrossRef] [PubMed]

- Wang, B.; Jiang, Q.; Song, D.; Zhang, Q.; Sun, M.; Fu, X.; Wang, J. SAR vehicle recognition via scale-coupled Incep_Dense Network (IDNet). Int. J. Remote Sens. 2021, 42, 9109–9134. [Google Scholar] [CrossRef]

- Feng, B.; Yang, H.; Zhang, C.; Wang, J.; Li, G.; Gao, Y. SAR Image Target Recognition Algorithm Based on Convolutional Neural Network. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Industrial Design (AIID), Guangzhou, China, 28–30 May 2021; IEEE: New York, NY, USA, 2021; pp. 364–368. [Google Scholar] [CrossRef]

- Pei, J.; Wang, Z.; Sun, X.; Huo, W.; Zhang, Y.; Huang, Y.; Wu, J.; Yang, J. FEF-Net: A Deep Learning Approach to Multiview SAR Image Target Recognition. Remote Sens. 2021, 13, 3493. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, C.; Pei, J.; Huang, Y.; Zhang, Y.; Yang, H.; Xing, Z. Multi-View SAR Automatic Target Recognition Based on Deformable Convolutional Network. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: New York, NY, USA, 2021; pp. 3585–3588. [Google Scholar] [CrossRef]

- Shang, R.; Wang, J.; Jiao, L.; Stolkin, R.; Hou, B.; Li, Y. SAR Targets Classification Based on Deep Memory Convolution Neural Networks and Transfer Parameters. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2834–2846. [Google Scholar] [CrossRef]

- Lin, Z.; Ji, K.; Kang, M.; Leng, X.; Zou, H. Deep convolutional highway unit network for SAR target classification with limited labeled training data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1091–1095. [Google Scholar] [CrossRef]

- Wang, L.; Bai, X.; Zhou, F. SAR ATR of Ground Vehicles Based on ESENet. Remote Sens. 2019, 11, 1316. [Google Scholar] [CrossRef] [Green Version]

- Shi, B.; Zhang, Q.; Wang, D.; Li, Y. Synthetic Aperture Radar SAR Image Target Recognition Algorithm Based on Attention Mechanism. IEEE Access 2021, 9, 140512–140524. [Google Scholar] [CrossRef]

- Zhang, M.; An, J.; Yang, L.D.; Wu, L.; Lu, X.Q. Convolutional Neural Network with Attention Mechanism for SAR Automatic Target Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Li, R.; Wang, X.; Wang, J.; Song, Y.; Lei, L. SAR Target Recognition Based on Efficient Fully Convolutional Attention Block CNN. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Su, B.; Liu, J.; Su, X.; Luo, B.; Wang, Q. CFCANet: A Complete Frequency Channel Attention Network for SAR Image Scene Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11750–11763. [Google Scholar] [CrossRef]

- Wang, C.; Liu, X.; Pei, J.; Huang, Y.; Zhang, Y.; Yang, J. Multiview Attention CNN-LSTM Network for SAR Automatic Target Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 12504–12513. [Google Scholar] [CrossRef]

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. Adv. Neural Inf. Process. Syst. 2017, 30, 3859–3869. [Google Scholar]

- Shah, R.; Soni, A.; Mall, V.; Gadhiya, T.; Roy, A.K. Automatic Target Recognition from SAR Images Using Capsule Networks. In Pattern Recognition and Machine Intelligence. PReMI 2019; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11942. [Google Scholar] [CrossRef]

- Yang, Z.; Jing, S. SAR image classification method based on improved capsule network. J. Phys. Conf. Ser. IOP Publ. 2020, 1693, 012181. [Google Scholar] [CrossRef]

- Guo, Y.; Pan, Z.; Wang, M.; Wang, J.; Yang, W. Learning Capsules for SAR Target Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4663–4673. [Google Scholar] [CrossRef]

- Ren, H.; Yu, X.; Zou, L.; Zhou, Y.; Wang, X.; Bruzzone, L. Extended convolutional capsule network with application on SAR automatic target recognition. Signal Process. 2021, 183, 108021. [Google Scholar] [CrossRef]

- Feng, Q.; Peng, D.; Gu, Y. Research of regularization techniques for SAR target recognition using deep CNN models. In Proceedings of the SPIE 11069, Tenth International Conference on Graphics and Image Processing (ICGIP 2018), Chengdu, China, 6 May 2019; SPIE: Bellingham, WA, USA, 2019. 110693p. [Google Scholar] [CrossRef]

- Kuang, W.; Dong, W.; Dong, L. The Effect of Training Dataset Size on SAR Automatic Target Recognition Using Deep Learning. In Proceedings of the 2022 IEEE 12th International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 15–17 July 2022; IEEE: New York, NY, USA, 2022; pp. 13–16. [Google Scholar] [CrossRef]

- Wang, J.; Jiang, Y. A SAR Target Recognition Method via Combination of Multilevel Deep Features. Comput. Intell. Neurosci. 2021, 2021, 2392642. [Google Scholar] [CrossRef]

- Li, S.; Pan, Z.; Hu, Y. Multi-Aspect Convolutional-Transformer Network for SAR Automatic Target Recognition. Remote Sens. 2022, 14, 3924. [Google Scholar] [CrossRef]

- Zhao, P.; Huang, L. Multi-Aspect SAR Target Recognition Based on Efficientnet and GRU. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; IEEE: New York, NY, USA, 2020; pp. 1651–1654. [Google Scholar] [CrossRef]

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60. [Google Scholar] [CrossRef]

- Ding, J.; Chen, B.; Liu, H.; Huang, M. Convolutional neural network with data augmentation for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368. [Google Scholar] [CrossRef]

- Ding, B.; Wen, G.; Huang, X.; Ma, C.; Yang, X. Data Augmentation by Multilevel Reconstruction Using Attributed Scattering Center for SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2017, 14, 979–983. [Google Scholar] [CrossRef]

- Furukawa, H. Deep learning for target classification from SAR imagery: Data augmentation and translation invariance. arXiv 2017, arXiv:1708.07920. [Google Scholar]

- Jiang, T.; Cui, Z.; Zhou, Z.; Cao, Z. Data Augmentation with Gabor Filter in Deep Convolutional Neural Networks for Sar Target Recognition. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 689–692. [Google Scholar] [CrossRef]

- Lei, Y.; Xia, W.; Liu, Z. Synthetic Images Augmentation for Robust SAR Target Recognition. In Proceedings of the 2021 The 5th International Conference on Video and Image Processing, Hayward, CA, USA, 22–25 December 2021; IEEE: New York, NY, USA, 2021; pp. 19–25. [Google Scholar] [CrossRef]

- Ni, J.; Zhang, F.; Yin, Q.; Zhou, Y.; Li, H.C.; Hong, W. Random neighbor pixel-block-based deep recurrent learning for polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 7557–7569. [Google Scholar] [CrossRef]

- Lv, J.; Liu, Y. Data Augmentation Based on Attributed Scattering Centers to Train Robust CNN for SAR ATR. IEEE Access 2019, 7, 25459–25473. [Google Scholar] [CrossRef]

- Goodfellow, P.A.; Mirza, X.; Warde-Farley, O. Generative adversarial nets. In Proceedings of the International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; MIT Press: Cambridge, MA, USA, 2014; pp. 2672–2680. [Google Scholar] [CrossRef]

- Guo, J.; Lei, B.; Ding, C.; Zhang, Y. Synthetic aperture radar image synthesis by using generative adversarial nets. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1111–1115. [Google Scholar] [CrossRef]

- Bao, X.; Pan, Z.; Liu, L.; Lei, B. SAR image simulation by generative adversarial networks. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 9995–9998. [Google Scholar] [CrossRef]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434. [Google Scholar]

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875. [Google Scholar]

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of Wasserstein GANs. arXiv 2017, arXiv:1704.00028. [Google Scholar]

- Cui, Z.; Zhang, M.; Cao, Z.; Cao, C. Image data augmentation for SAR sensor via generative adversarial nets. IEEE Access 2019, 7, 42255–42268. [Google Scholar] [CrossRef]

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 2242–2251. [Google Scholar]

- Liu, L.; Pan, Z.; Qiu, X.; Peng, L. SAR target classification with CycleGAN transferred simulated samples. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 4411–4414. [Google Scholar] [CrossRef]

- Wagner, S.A. SAR ATR by a combination of convolutional neural network and support vector machines. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2861–2872. [Google Scholar] [CrossRef]

- Hwang, J.; Shin, Y. Image Data Augmentation for SAR Automatic Target Recognition Using TripleGAN. In Proceedings of the 2021 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 20–22 October 2021; IEEE: New York, NY, USA, 2021; pp. 312–314. [Google Scholar] [CrossRef]

- Luo, Z.; Jiang, X.; Liu, X. Synthetic minority class data by generative adversarial network for imbalanced sar target recognition. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; IEEE: New York, NY, USA, 2020; pp. 2459–2462. [Google Scholar] [CrossRef]

- Sun, Y.; Jiang, W.; Yang, J.; Li, W. SAR Target Recognition Using cGAN-Based SAR-to-Optical Image Translation. Remote Sens. 2022, 14, 1793. [Google Scholar] [CrossRef]

- Niu, S.; Qiu, X.; Peng, L.; Lei, B. Parameter prediction method of SAR target simulation based on convolutional neural networks. In Proceedings of the 12th European Conference on Synthetic Aperture Radar, Aachen, Germany, 4–7 June 2018; IEEE: New York, NY, USA, 2018; pp. 106–110. [Google Scholar]

- Malmgren-Hansen, D.; Kusk, A.; Dall, J.; Nielsen, A.A.; Engholm, R.; Skriver, H. Improving SAR Automatic Target Recognition Models with Transfer Learning from Simulated Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1484–1488. [Google Scholar] [CrossRef] [Green Version]

- Cha, M.; Majumdar, A.; Kung, H.T.; Barber, J. Improving Sar Automatic Target Recognition Using Simulated Images Under Deep Residual Refinements. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: New York, NY, USA, 2018; pp. 2606–2610. [Google Scholar] [CrossRef]

- Ahmadibeni, A.; Borooshak, L.; Jones, B.; Shirkhodaie, A. Aerial and ground vehicles synthetic SAR dataset generation for automatic target recognition. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery XXVII, Online, 24 April 2020; SPIE: Bellingham, WA, USA, 2020; Volume 11393, pp. 96–107. [Google Scholar] [CrossRef]

- Zhang, C.; Wang, Y.; Liu, H.; Sun, Y.; Hu, L. SAR Target Recognition Using Only Simulated Data for Training by Hierarchically Combining CNN and Image Similarity. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Kang, C.; He, C. SAR image classification based on the multi-layer network and transfer learning of mid-level representations. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; IEEE: New York, NY, USA, 2016; pp. 1146–1149. [Google Scholar] [CrossRef]

- Marmanis, D.; Yao, W.; Adam, F.; Datcu, M.; Reinartz, P.; Schindler, K.; Wegner, J.D.; Stilla, U. Artificial generation of big data for improving image classification: A generative adversarial network approach on SAR data. arXiv 2017, arXiv:1711.02010. [Google Scholar]

- Lu, C.; Li, W. Ship Classification in High-Resolution SAR Images via Transfer Learning with Small Training Dataset. Sensors 2019, 19, 63. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Zhai, Y.; Deng, W.; Xu, Y.; Ke, Q.; Gan, J.; Sun, B.; Zeng, J.; Piuri, V. Robust SAR Automatic Target Recognition Based on Transferred MS-CNN with L2-Regularization. Comput. Intell. Neurosci. 2019, 2019, 9140167. [Google Scholar] [CrossRef] [Green Version]

- Ying, Z.; Xuan, C.; Zhai, Y.; Sun, B.; Li, J.; Deng, W.; Mai, C.; Wang, F.; Labati, R.D.; Piuri, V.; et al. TAI-SARNET: Deep Transferred Atrous-Inception CNN for Small Samples SAR ATR. Sensors 2020, 20, 1724. [Google Scholar] [CrossRef] [Green Version]

- Song, Y.; Li, J.; Gao, P.; Li, L.; Tian, T.; Tian, J. Two-Stage Cross-Modality Transfer Learning Method for Military-Civilian SAR Ship Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Zhang, D.; Liu, J.; Heng, W.; Ren, K.; Song, J. Transfer learning with convolutional neural networks for SAR ship recognition. IOP Conf. Ser. Mater. Sci. Eng. 2018, 322, 072001.

- Huang, Z.; Dumitru, C.O.; Pan, Z.; Lei, B.; Datcu, M. Classification of large-scale high-resolution SAR images with deep transfer learning. IEEE Geosci. Remote Sens. Lett. 2020, 18, 107–111.

- Zhang, W.; Zhu, Y.; Fu, Q. Deep Transfer Learning Based on Generative Adversarial Networks for SAR Target Recognition with label limitation. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; IEEE: New York, NY, USA, 2019; pp. 1–5.

- He, Q.; Zhao, L.; Ji, K.; Kuang, G. SAR target recognition based on task-driven domain adaptation using simulated data. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Wang, Z.L.; Xu, X.H.; Zhang, L. Study of deep transfer learning for SAR ATR based on simulated SAR images. J. Univ. Chin. Acad. Sci. 2020, 37, 516–524.

- Wang, K.; Zhang, G.; Leung, H. SAR Target Recognition Based on Cross-Domain and Cross-Task Transfer Learning. IEEE Access 2019, 7, 153391–153399.

- Huang, Z.; Pan, Z.; Lei, B. Transfer Learning with Deep Convolutional Neural Network for SAR Target Classification with Limited Labeled Data. Remote Sens. 2017, 9, 907.

- Borgwardt, K.M.; Gretton, A.; Rasch, M.J.; Kriegel, H.P.; Schölkopf, B.; Smola, A.J. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 2006, 22, e49–e57.

- Huang, Z.; Pan, Z.; Lei, B. What, where and how to transfer in SAR target recognition based on deep CNNs. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2324–2336.

- Wang, L.; Bai, X.; Zhou, F. Few-Shot SAR ATR Based on Conv-BiLSTM Prototypical Networks. In Proceedings of the 2019 6th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Xiamen, China, 26–29 November 2019; IEEE: New York, NY, USA, 2019; pp. 1–5.

- Wang, K.; Zhang, G. SAR Target Recognition via Meta-Learning and Amortized Variational Inference. Sensors 2020, 20, 5966.

- Wang, L.; Bai, X.; Gong, C.; Zhou, F. Hybrid Inference Network for Few-Shot SAR Automatic Target Recognition. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9257–9269.

- Wang, K.; Zhang, G.; Xu, Y.; Leung, H. SAR Target Recognition Based on Probabilistic Meta-Learning. IEEE Geosci. Remote Sens. Lett. 2021, 18, 682–686.

- Wang, S.; Wang, Y.; Liu, H.; Sun, Y. Attribute-Guided Multi-Scale Prototypical Network for Few-Shot SAR Target Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 12224–12245.

- Li, L.; Liu, J.; Su, L.; Ma, C.; Li, B.; Yu, Y. A Novel Graph Metalearning Method for SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Fu, K.; Zhang, T.; Zhang, Y.; Wang, Z.; Sun, X. Few-Shot SAR Target Classification via Metalearning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved techniques for training GANs. In Proceedings of the NIPS, Barcelona, Spain, 5–10 December 2016; Curran Associates Inc.: Red Hook, NY, USA, 2016; pp. 2234–2242.

- Gao, F.; Yang, Y.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. A Deep Convolutional Generative Adversarial Networks (DCGANs)-Based Semi-Supervised Method for Object Recognition in Synthetic Aperture Radar (SAR) Images. Remote Sens. 2018, 10, 846.

- Zheng, C.; Jiang, X.; Liu, X. Semi-Supervised SAR ATR via Multi-Discriminator Generative Adversarial Network. IEEE Sens. J. 2019, 19, 7525–7533.

- Gao, F.; Ma, F.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. Semi-Supervised Generative Adversarial Nets with Multiple Generators for SAR Image Recognition. Sensors 2018, 18, 2706.

- El-Darymli, K.; McGuire, P.; Power, D.; Moloney, C. Target detection in synthetic aperture radar imagery: A state-of-the-art survey. J. Appl. Remote Sens. 2013, 7, 071598.

- Wang, C.; Shi, J.; Zhou, Y.; Yang, X.; Zhou, Z.; Wei, S.; Zhang, X. Semisupervised Learning-Based SAR ATR via Self-Consistent Augmentation. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4862–4873.

- Gao, F.; Shi, W.; Wang, J.; Hussain, A.; Zhou, H. A Semi-Supervised Synthetic Aperture Radar (SAR) Image Recognition Algorithm Based on an Attention Mechanism and Bias-Variance Decomposition. IEEE Access 2019, 7, 108617–108632.

- Gao, F.; Yue, Z.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. A novel active semisupervised convolutional neural network algorithm for SAR image recognition. Comput. Intell. Neurosci. 2017, 2017, 3105053.

- Zhang, Y.; Guo, X.; Ren, H.; Li, L. Multi-view classification with semi-supervised learning for SAR target recognition. Signal Process. 2021, 183, 108030.

- Tian, Y.; Sun, J.; Qi, P.; Yin, G.; Zhang, L. Multi-Block Mixed Sample Semi-Supervised Learning for SAR Target Recognition. Remote Sens. 2021, 13, 361.

- Chen, K.; Pan, Z.; Huang, Z.; Hu, Y.; Ding, C. Learning From Reliable Unlabeled Samples for Semi-Supervised SAR ATR. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Xu, Y.; Lang, H.; Chai, X.; Ma, L. Distance metric learning for ship classification in SAR images. In Proceedings of the SPIE 10789, Image and Signal Processing for Remote Sensing XXIV, 107891C, Berlin, Germany, 9 October 2018.

- Pan, Z.; Bao, X.; Zhang, Y.; Wang, B.; An, Q.; Lei, B. Siamese network based metric learning for SAR target classification. In Proceedings of the IGARSS, Yokohama, Japan, 28 July–2 August 2019; IEEE: New York, NY, USA, 2019; pp. 1342–1345.

- Wang, B.; Pan, Z.; Hu, Y.; Ma, W. SAR Target Recognition Based on Siamese CNN with Small Scale Dataset. Radar Sci. Technol. 2019, 17, 603–609.

- Li, Y.; Li, X.; Sun, Q.; Dong, Q. SAR Image Classification Using CNN Embeddings and Metric Learning. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Wang, C.; Gu, H.; Su, W. SAR Image Classification Using Contrastive Learning and Pseudo-Labels with Limited Data. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Zhang, L.; Leng, X.; Feng, S.; Ma, X.; Ji, K.; Kuang, G.; Liu, L. Domain Knowledge Powered Two-Stream Deep Network for Few-Shot SAR Vehicle Recognition. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Zhang, Y.; Guo, X.; Li, L.; Ansari, N. Deep knowledge integration of heterogeneous features for domain adaptive SAR target recognition. Pattern Recognit. 2022, 126, 108590.

- Shao, Q.; Qu, C.; Li, J.; Peng, S. CNN based ship target recognition of imbalanced SAR image. Electron. Opt. Control 2019, 26, 90–97.

- Cao, C.; Cui, Z.; Wang, L.; Wang, J.; Cao, Z.; Yang, J. Cost-Sensitive Awareness-Based SAR Automatic Target Recognition for Imbalanced Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Zhang, L.; Zhang, C.; Quan, S.; Xiao, H.; Kuang, G.; Liu, L. A Class Imbalance Loss for Imbalanced Object Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2778–2792.

- Yang, C.Y.; Hsu, H.M.; Cai, J.; Hwang, J.N. Long-tailed recognition of SAR aerial view objects by cascading and paralleling experts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual Conference, 19–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 142–148.

- Zhang, Y.; Lei, Z.; Zhuang, L.; Yu, H. A CNN Based Method to Solve Class Imbalance Problem in SAR Image Ship Target Recognition. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; IEEE: New York, NY, USA, 2021; pp. 229–233.

- Li, G.; Pan, L.; Qiu, L.; Tan, Z.; Xie, F.; Zhang, H. A Two-Stage Shake-Shake Network for Long-Tailed Recognition of SAR Aerial View Objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 249–256.

- Shao, J.; Qu, C.; Li, J.; Peng, S. A lightweight convolutional neural network based on visual attention for SAR image target classification. Sensors 2018, 18, 3039.

- Yu, J.; Zhou, G.; Zhou, S.; Yin, J. A Lightweight Fully Convolutional Neural Network for SAR Automatic Target Recognition. Remote Sens. 2021, 13, 3029.

- Zhang, F.; Liu, Y.; Zhou, Y.; Yin, Q.; Li, H.C. A lossless lightweight CNN design for SAR target recognition. Remote Sens. Lett. 2020, 11, 485–494.

- Chen, H.; Zhang, F.; Tang, B.; Yin, Q.; Sun, X. Slim and efficient neural network design for resource-constrained SAR target recognition. Remote Sens. 2018, 10, 1618.

- Min, R.; Lan, H.; Cao, Z.; Cui, Z. A gradually distilled CNN for SAR target recognition. IEEE Access 2019, 7, 42190–42200.

- Zhong, C.; Mu, X.; He, X.; Wang, J.; Zhu, M. SAR Target Image Classification Based on Transfer Learning and Model Compression. IEEE Geosci. Remote Sens. Lett. 2019, 16, 412–416.

- Wang, Z.; Du, L.; Li, Y. Boosting Lightweight CNNs Through Network Pruning and Knowledge Distillation for SAR Target Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8386–8397.

- Zhou, Y.; Wang, H.; Xu, F.; Jin, Y.Q. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1935–1939.

- Hou, B.; Kou, H.; Jiao, L. Classification of Polarimetric SAR Images Using Multilayer Autoencoders and Superpixels. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3072–3081.

- Liu, X.; Jiao, L.; Tang, X.; Sun, Q.; Zhang, D. Polarimetric Convolutional Network for PolSAR Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3040–3054.

- Mullissa, A.G.; Persello, C.; Stein, A. PolSARNet: A Deep Fully Convolutional Network for Polarimetric SAR Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 5300–5309.

- Hua, W.; Wang, S.; Xie, W.; Guo, Y.; Jin, X. Dual-Channel Convolutional Neural Network for Polarimetric SAR Images Classification. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Yokohama, Japan, 28 July–2 August 2019; IEEE: New York, NY, USA, 2019; pp. 3201–3204.

- Li, L.; Ma, L.; Jiao, L.; Liu, F.; Sun, Q.; Zhao, J. Complex Contourlet-CNN for Polarimetric SAR Image Classification. Pattern Recognit. 2020, 10, 107110.

- Xi, Y.; Xiong, G.; Yu, W. Feature-loss Double Fusion Siamese Network for Dual-polarized SAR Ship Classification. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; IEEE: New York, NY, USA, 2019; pp. 1–5.

- Shang, R.; He, J.; Wang, J.; Xu, K.; Jiao, L.; Stolkin, R. Dense connection and depthwise separable convolution based CNN for polarimetric SAR image classification. Knowl.-Based Syst. 2020, 194, 105542.

- Zhang, T.; Zhang, X. Squeeze-and-Excitation Laplacian Pyramid Network with Dual-Polarization Feature Fusion for Ship Classification in SAR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Zeng, L.; Zhu, Q.; Lu, D.; Zhang, T.; Wang, H.; Yin, J.; Yang, J. Dual-Polarized SAR Ship Grained Classification Based on CNN With Hybrid Channel Feature Loss. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Xiong, G.; Xi, Y.; Chen, D.; Yu, W. Dual-Polarization SAR Ship Target Recognition Based on Mini Hourglass Region Extraction and Dual-Channel Efficient Fusion Network. IEEE Access 2021, 9, 29078–29089.

- Zhang, T.; Zhang, X. A polarization fusion network with geometric feature embedding for SAR ship classification. Pattern Recognit. 2022, 123, 108365.

- Scarnati, T.; Lewis, B. Complex-Valued Neural Networks for Synthetic Aperture Radar Image Classification. In Proceedings of the 2021 IEEE Radar Conference (RadarConf21), Atlanta, GA, USA, 7–14 May 2021; IEEE: New York, NY, USA, 2021; pp. 1–6.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

- Sun, Z.; Xu, X.; Pan, Z. SAR ATR Using Complex-Valued CNN. In Proceedings of the 2020 Asia-Pacific Conference on Image Processing, Electronics and Computers (IPEC), Dalian, China, 14–16 April 2020; IEEE: New York, NY, USA, 2020; pp. 125–128.

- Wang, R.; Wang, Z.; Xia, K.; Zou, H.; Li, J. Target Recognition in Single-Channel SAR Images Based on the Complex-Valued Convolutional Neural Network with Data Augmentation. IEEE Trans. Aerosp. Electron. Syst. 2022, 1–8.

- Zeng, Z.; Sun, J.; Han, Z.; Hong, W. SAR Automatic Target Recognition Method Based on Multi-Stream Complex-Valued Networks. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

- Hou, B.; Wang, L.; Wu, Q.; Han, Q.; Jiao, L. Complex Gaussian–Bayesian Online Dictionary Learning for SAR Target Recognition with Limited Labeled Samples. IEEE Access 2019, 7, 120626–120637.

- Feng, S.; Ji, K.; Zhang, L.; Ma, X.; Kuang, G. SAR Target Classification Based on Integration of ASC Parts Model and Deep Learning Algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10213–10225.

- Liu, Z.; Wang, L.; Wen, Z.; Li, K.; Pan, Q. Multilevel Scattering Center and Deep Feature Fusion Learning Framework for SAR Target Recognition. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Li, C.; Du, L.; Li, Y.; Song, J. A Novel SAR Target Recognition Method Combining Electromagnetic Scattering Information and GCN. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Jiang, C.; Zhou, Y. Hierarchical Fusion of Convolutional Neural Networks and Attributed Scattering Centers with Application to Robust SAR ATR. Remote Sens. 2018, 10, 819.

- Li, T.; Du, L. SAR Automatic Target Recognition Based on Attribute Scattering Center Model and Discriminative Dictionary Learning. IEEE Sens. J. 2019, 19, 4598–4611.

- Zhang, J.; Xing, M.; Xie, Y. FEC: A Feature Fusion Framework for SAR Target Recognition Based on Electromagnetic Scattering Features and Deep CNN Features. IEEE Trans. Geosci. Remote Sens. 2021, 59, 2174–2187.

- Zhang, X. Noise-robust target recognition of SAR images based on attribute scattering center matching. Remote Sens. Lett. 2019, 10, 186–194.

- Zhang, T.; Zhang, X. Injection of Traditional Hand-Crafted Features into Modern CNN-Based Models for SAR Ship Classification: What, Why, Where, and How. Remote Sens. 2021, 13, 2091.

- Zhang, T.; Zhang, X. Integrate Traditional Hand-Crafted Features into Modern CNN-based Models to Further Improve SAR Ship Classification Accuracy. In Proceedings of the 2021 7th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Virtual Conference, 1–3 November 2021; IEEE: New York, NY, USA, 2021; pp. 1–6.

- Pannu, H.S.; Malhi, A. Deep learning-based explainable target classification for synthetic aperture radar images. In Proceedings of the 2020 13th International Conference on Human System Interaction (HSI), Tokyo, Japan, 6–8 June 2020; IEEE: New York, NY, USA, 2020; pp. 34–39.

- Guo, W.; Zhang, Z.; Yu, W.; Sun, X. Perspective on explainable SAR target recognition. J. Radars 2020, 9, 462–476.

- Feng, Z.; Zhu, M.; Stanković, L.; Ji, H. Self-Matching CAM: A Novel Accurate Visual Explanation of CNNs for SAR Image Interpretation. Remote Sens. 2021, 13, 1772.

- Li, P.; Feng, C.; Hu, X.; Tang, Z. SAR-BagNet: An Ante-hoc Interpretable Recognition Model Based on Deep Network for SAR Image. Remote Sens. 2022, 14, 2150.

- Huang, T.; Zhang, Q.; Liu, J.; Hou, R.; Wang, X.; Li, Y. Adversarial attacks on deep-learning-based SAR image target recognition. J. Netw. Comput. Appl. 2020, 162, 102632.

- Sun, H.; Xu, Y.; Kuang, G.; Chen, J. Adversarial Robustness Evaluation of Deep Convolutional Neural Network Based SAR ATR Algorithm. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: New York, NY, USA, 2021; pp. 5263–5266.

- Du, C.; Zhang, L. Adversarial Attack for SAR Target Recognition Based on UNet-Generative Adversarial Network. Remote Sens. 2021, 13, 4358.

- Zhang, F.; Meng, T.; Xiang, D.; Ma, F.; Sun, X.; Zhou, Y. Adversarial Deception Against SAR Target Recognition Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4507–4520.

- Peng, B.; Peng, B.; Zhou, J.; Xia, J.; Liu, L. Speckle-Variant Attack: Toward Transferable Adversarial Attack to SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

| Year | Before 2016 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|---|---|---|
| Traditional-method-based | 48 | 14 | 21 | 17 | 18 | 12 | 14 | 2 |
| Deep-learning-based | 6 | 8 | 21 | 40 | 45 | 56 | 76 | 31 |
| Percentage of deep-learning-based | 11.1% | 36.4% | 50.0% | 70.2% | 71.4% | 82.4% | 84.4% | 93.9% |
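The percentage row is consistent with dividing the deep-learning-based count by the total (traditional plus deep-learning-based) count in each column. As a quick check, the following Python sketch recomputes that row from the two count rows; the formula is an assumption inferred from the numbers, since the table does not state it explicitly, and the helper name `deep_share` is hypothetical.

```python
# Paper counts per period, copied from the table above.
periods = ["Before 2016", "2016", "2017", "2018", "2019", "2020", "2021", "2022"]
traditional = [48, 14, 21, 17, 18, 12, 14, 2]
deep = [6, 8, 21, 40, 45, 56, 76, 31]


def deep_share(trad: int, dl: int) -> float:
    """Assumed definition: deep-learning papers as a percentage of all papers."""
    return 100.0 * dl / (trad + dl)


# Round to one decimal place, matching the precision used in the table.
shares = [round(deep_share(t, d), 1) for t, d in zip(traditional, deep)]

for period, share in zip(periods, shares):
    print(f"{period}: {share}%")
```

Every recomputed value matches the published row (for example, 2018 gives 40 / 57 ≈ 70.2%), which supports reading the row as a per-period share rather than a share of the overall total.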

| Dataset | Number of samples | Source |
|---|---|---|
| OpenSARShip | 11,346 SAR ship chips | 41 Sentinel-1 images |
| OpenSARShip 2.0 | 34,528 ship chips | 87 Sentinel-1 images |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Yu, Z.; Yu, L.; Cheng, P.; Chen, J.; Chi, C. A Comprehensive Survey on SAR ATR in Deep-Learning Era. Remote Sens. 2023, 15, 1454. https://doi.org/10.3390/rs15051454
