Three-Dimensional Gridded Radar Echo Extrapolation for Convective Storm Nowcasting Based on 3D-ConvLSTM Model

Abstract

1. Introduction

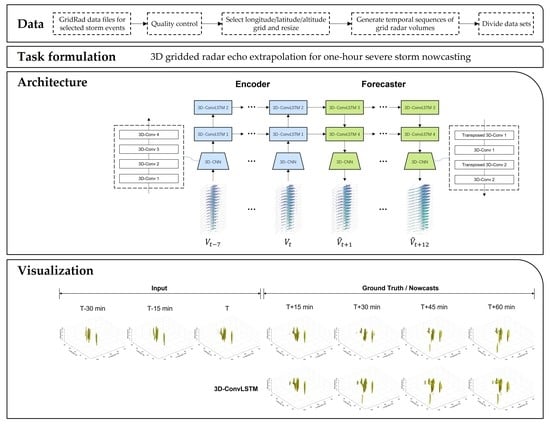

2. Data and Task Formulation

2.1. Data

2.2. Task Formulation

3. Proposed Method

3.1. The 3D-ConvLSTM Model

3.2. Loss

3.3. Metrics

4. Experiment

4.1. Experimental Settings

4.2. Evaluation of Nowcasts of Grid Radar Volumes on Test Set

4.3. Evaluation of Nowcasts for Selected Altitude Levels on Test Set

4.4. Comparative Verification of 2D and 3D REE Models for 1 km Altitude Level

4.5. Case Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, P.W.; Lai, E.S.T. Short-range quantitative precipitation forecasting in Hong Kong. J. Hydrol. 2004, 288, 189–209.

- Wilson, J.W.; Feng, Y.; Chen, M.; Roberts, R.D. Nowcasting challenges during the Beijing Olympics: Successes, failures, and implications for future nowcasting systems. Weather Forecast. 2010, 25, 1691–1714.

- Sun, J.; Xue, M.; Wilson, J.W.; Zawadzki, I.; Ballard, S.P.; Onvlee-Hooimeyer, J.; Joe, P.; Barker, D.M.; Li, P.W.; Golding, B.; et al. Use of NWP for nowcasting convective precipitation: Recent progress and challenges. Bull. Am. Meteorol. Soc. 2014, 95, 409–426.

- Dixon, M.; Wiener, G. TITAN: Thunderstorm identification, tracking, analysis, and nowcasting—A radar-based methodology. J. Atmos. Ocean. Technol. 1993, 10, 785–797.

- Johnson, J.T.; MacKeen, P.L.; Witt, A.; Mitchell, E.D.W.; Stumpf, G.J.; Eilts, M.D.; Thomas, K.W. The storm cell identification and tracking algorithm: An enhanced WSR-88D algorithm. Weather Forecast. 1998, 13, 263–276.

- Hering, A.; Morel, C.; Galli, G.; Sénési, S.; Ambrosetti, P.; Boscacci, M. Nowcasting thunderstorms in the Alpine region using a radar based adaptive thresholding scheme. In Proceedings of ERAD 2004, Visby, Sweden, 6–10 September 2004.

- Ayzel, G.; Heistermann, M.; Winterrath, T. Optical flow models as an open benchmark for radar-based precipitation nowcasting (rainymotion v0.1). Geosci. Model Dev. 2019, 12, 1387–1402.

- Pulkkinen, S.; Nerini, D.; Pérez Hortal, A.A.; Velasco-Forero, C.; Seed, A.; Germann, U.; Foresti, L. Pysteps: An open-source Python library for probabilistic precipitation nowcasting (v1.0). Geosci. Model Dev. 2019, 12, 4185–4219.

- Rinehart, R.E.; Garvey, E.T. Three-dimensional storm motion detection by conventional weather radar. Nature 1978, 273, 287–289.

- Li, L.; Schmid, W.; Joss, J. Nowcasting of motion and growth of precipitation with radar over a complex orography. J. Appl. Meteorol. Climatol. 1995, 34, 1286–1300.

- Bowler, N.E.; Pierce, C.E.; Seed, A.W. STEPS: A probabilistic precipitation forecasting scheme which merges an extrapolation nowcast with downscaled NWP. Q. J. R. Meteorol. Soc. A J. Atmos. Sci. Appl. Meteorol. Phys. Oceanogr. 2006, 132, 2127–2155.

- Pulkkinen, S.; Chandrasekar, V.; von Lerber, A.; Harri, A.M. Nowcasting of convective rainfall using volumetric radar observations. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7845–7859.

- Ravuri, S.; Lenc, K.; Willson, M.; Kangin, D.; Lam, R.; Mirowski, P.; Fitzsimons, M.; Athanassiadou, M.; Kashem, S.; Madge, S.; et al. Skilful precipitation nowcasting using deep generative models of radar. Nature 2021, 597, 672–677.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.-C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 802–810.

- Wang, Y.; Long, M.; Wang, J.; Gao, Z.; Yu, P.S. PredRNN: Recurrent neural networks for predictive learning using spatiotemporal LSTMs. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 879–888.

- Jing, J.; Li, Q.; Peng, X.; Ma, Q.; Tang, S. HPRNN: A hierarchical sequence prediction model for long-term weather radar echo extrapolation. In Proceedings of the ICASSP 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 4142–4146.

- Luo, C.; Li, X.; Wen, Y.; Ye, Y.; Zhang, X. A novel LSTM model with interaction dual attention for radar echo extrapolation. Remote Sens. 2021, 13, 164.

- Agrawal, S.; Barrington, L.; Bromberg, C.; Burge, J.; Gazen, C.; Hickey, J. Machine learning for precipitation nowcasting from radar images. arXiv 2019, arXiv:1912.12132.

- Han, L.; Liang, H.; Chen, H.; Zhang, W.; Ge, Y. Convective precipitation nowcasting using U-Net Model. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4103508.

- Che, H.; Niu, D.; Zang, Z.; Cao, Y.; Chen, X. ED-DRAP: Encoder–decoder deep residual attention prediction network for radar echoes. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1004705.

- Shi, X.; Gao, Z.; Lausen, L.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W.-C. Deep learning for precipitation nowcasting: A benchmark and a new model. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5618–5628.

- Wang, Y.; Zhang, J.; Zhu, H.; Long, M.; Wang, J.; Yu, P.S. Memory in memory: A predictive neural network for learning higher-order non-stationarity from spatiotemporal dynamics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9154–9162.

- Jing, J.; Li, Q.; Peng, X. MLC-LSTM: Exploiting the spatiotemporal correlation between multi-level weather radar echoes for echo sequence extrapolation. Sensors 2019, 19, 3988.

- Klein, B.; Wolf, L.; Afek, Y. A dynamic convolutional layer for short range weather prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4840–4848.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Trebing, K.; Stańczyk, T.; Mehrkanoon, S. SmaAt-UNet: Precipitation nowcasting using a small attention-UNet architecture. Pattern Recognit. Lett. 2021, 145, 178–186.

- Ayzel, G.; Scheffer, T.; Heistermann, M. RainNet v1.0: A convolutional neural network for radar-based precipitation nowcasting. Geosci. Model Dev. 2020, 13, 2631–2644.

- Veillette, M.; Samsi, S.; Mattioli, C. SEVIR: A storm event imagery dataset for deep learning applications in radar and satellite meteorology. Adv. Neural Inf. Process. Syst. 2020, 33, 22009–22019.

- Pan, X.; Lu, Y.; Zhao, K.; Huang, H.; Wang, M.; Chen, H. Improving nowcasting of convective development by incorporating polarimetric radar variables into a deep-learning model. Geophys. Res. Lett. 2021, 48, e2021GL095302.

- Wang, C.; Wang, P.; Wang, P.; Xue, B.; Wang, D. Using conditional generative adversarial 3-D convolutional neural network for precise radar extrapolation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5735–5749.

- Niu, D.; Huang, J.; Zang, Z.; Xu, L.; Che, H.; Tang, Y. Two-stage spatiotemporal context refinement network for precipitation nowcasting. Remote Sens. 2021, 13, 4285.

- Leinonen, J.; Hamann, U.; Germann, U.; Mecikalski, J.R. Nowcasting thunderstorm hazards using machine learning: The impact of data sources on performance. Nat. Hazards Earth Syst. Sci. 2022, 22, 577–597.

- Kim, D.-K.; Suezawa, T.; Mega, T.; Kikuchi, H.; Yoshikawa, E.; Baron, P.; Ushio, T. Improving precipitation nowcasting using a three-dimensional convolutional neural network model from Multi Parameter Phased Array Weather Radar observations. Atmos. Res. 2021, 262, 105774.

- Han, L.; Sun, J.; Zhang, W. Convolutional neural network for convective storm nowcasting using 3-D Doppler weather radar data. IEEE Trans. Geosci. Remote Sens. 2019, 58, 1487–1495.

- Otsuka, S.; Tuerhong, G.; Kikuchi, R.; Kitano, Y.; Taniguchi, Y.; Ruiz, J.J.; Satoh, S.; Ushio, T.; Miyoshi, T. Precipitation nowcasting with three-dimensional space–time extrapolation of dense and frequent phased-array weather radar observations. Weather Forecast. 2016, 31, 329–340.

- Tran, Q.-K.; Song, S.-K. Multi-channel weather radar echo extrapolation with convolutional recurrent neural networks. Remote Sens. 2019, 11, 2303.

- Heye, A.; Venkatesan, K.; Cain, J. Precipitation nowcasting: Leveraging deep recurrent convolutional neural networks. In Proceedings of the Cray User Group (CUG), Redmond, WA, USA, 6 May 2017.

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 424–432.

- School of Meteorology/University of Oklahoma. GridRad-Severe–Three-Dimensional Gridded NEXRAD WSR-88D Radar Data for Severe Events. Research Data Archive at the National Center for Atmospheric Research, Computational and Information Systems Laboratory. 2021. Available online: https://rda.ucar.edu/datasets/ds841.6/ (accessed on 9 April 2022).

- Homeyer, C.R.; Bowman, K.P. Algorithm Description Document for Version 4.2 of the Three-Dimensional Gridded NEXRAD WSR-88D Radar (GridRad) Dataset; Technical Report; University of Oklahoma: Norman, OK, USA; Texas A&M University: College Station, TX, USA, 2022.

- Mustafa, M.A. A Data-Driven Learning Approach to Image Registration. Ph.D. Thesis, University of Nottingham, Nottingham, UK, 2016.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

| Dataset | Period | Number of Sequences |
|---|---|---|
| Training | January 2013–May 2018 | 4905 |
| Validation | June 2018–December 2018 | 716 |
| Test | January 2019–December 2019 | 967 |
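
The split above is chronological: training, validation, and test sequences come from consecutive, non-overlapping periods, so no test storm is seen during training. A minimal Python sketch of that assignment follows. It assumes each sequence is keyed by the date of its first frame, which the source does not state; `assign_split` and `split_fractions` are hypothetical helpers, not the authors' code.

```python
from datetime import date
from typing import Dict, Optional

# Inclusive date ranges taken from the split table above.
# Assumption (not in the source): a sequence belongs to the split whose
# period contains the date of its first frame.
SPLITS = [
    ("training", date(2013, 1, 1), date(2018, 5, 31)),
    ("validation", date(2018, 6, 1), date(2018, 12, 31)),
    ("test", date(2019, 1, 1), date(2019, 12, 31)),
]

# Sequence counts reported in the table.
COUNTS = {"training": 4905, "validation": 716, "test": 967}


def assign_split(start: date) -> Optional[str]:
    """Return the split whose period contains `start`, or None if outside all periods."""
    for name, first, last in SPLITS:
        if first <= start <= last:
            return name
    return None


def split_fractions(counts: Dict[str, int]) -> Dict[str, float]:
    """Share of all sequences that falls in each split."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


print(assign_split(date(2018, 7, 15)))  # validation
print({k: round(v, 3) for k, v in split_fractions(COUNTS).items()})
```

With the counts in the table, the training set holds about 74% of the 6588 sequences, the validation set about 11%, and the test set about 15%.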

| Layer | Kernel/Stride | Output Size (D × H × W × C) |
|---|---|---|
| 3D-Conv 1 | 3 × 3 × 3/(1,1,1) | 16 × 120 × 120 × 32 |
| 3D-Conv 2 | 3 × 3 × 3/(2,2,2) | 8 × 60 × 60 × 64 |
| 3D-Conv 3 | 3 × 3 × 3/(1,1,1) | 8 × 60 × 60 × 64 |
| 3D-Conv 4 | 3 × 3 × 3/(2,1,1) | 4 × 60 × 60 × 64 |
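
The output sizes of the 3D-Conv 1–4 layers in the table above can be checked with simple shape arithmetic. The sketch below assumes "same" padding, so each size becomes ceil(size / stride) regardless of kernel size, and an input of 16 altitude levels on a 120 × 120 grid with a single reflectivity channel; both are inferred from the table rather than stated in it, and `conv3d_same_shape` and `trace` are hypothetical helpers.

```python
import math
from typing import List, Tuple

Shape = Tuple[int, int, int, int]  # (D, H, W, C)

# Encoder layers from the table: (name, stride (d, h, w), output channels).
# Assumption: "same" padding, so only the stride changes D, H and W.
ENCODER = [
    ("3D-Conv 1", (1, 1, 1), 32),
    ("3D-Conv 2", (2, 2, 2), 64),
    ("3D-Conv 3", (1, 1, 1), 64),
    ("3D-Conv 4", (2, 1, 1), 64),
]


def conv3d_same_shape(shape: Shape, stride: Tuple[int, int, int], channels: int) -> Shape:
    """Output shape of a 3D convolution with 'same' padding."""
    d, h, w, _ = shape
    sd, sh, sw = stride
    return (math.ceil(d / sd), math.ceil(h / sh), math.ceil(w / sw), channels)


def trace(shape: Shape, layers) -> List[Tuple[str, Shape]]:
    """Propagate a shape through the layer list, recording each output."""
    out = []
    for name, stride, channels in layers:
        shape = conv3d_same_shape(shape, stride, channels)
        out.append((name, shape))
    return out


# Assumed input: 16 altitude levels x 120 x 120 grid x 1 channel.
for name, shape in trace((16, 120, 120, 1), ENCODER):
    print(name, shape)
```

The stride (2,1,1) in 3D-Conv 4 halves only the vertical dimension, from 8 to 4 levels, while keeping the 60 × 60 horizontal grid.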

| Layer | Kernel/Stride | Output Size (D × H × W × C) |
|---|---|---|
| 3D-ConvLSTM 1/2/3/4 | 2 × 3 × 3/(1,1,1) | 4 × 60 × 60 × 64 |
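
The 3D-ConvLSTM row above lists only kernel, stride, and output size. For reference, the ConvLSTM update of Shi et al. (2015, listed in the references) is reproduced below with $*$ read as a 3D convolution over (D, H, W) and $\circ$ as the element-wise product. With a 2 × 3 × 3 kernel, unit stride, and same padding, every gate and state tensor keeps the 4 × 60 × 60 × 64 size. This is a sketch that assumes the 3D-ConvLSTM retains the original gate structure, including the peephole terms $W_{c\cdot} \circ \mathcal{C}$; the available text does not confirm that detail.

$$
\begin{aligned}
i_t &= \sigma\left(W_{xi} * \mathcal{X}_t + W_{hi} * \mathcal{H}_{t-1} + W_{ci} \circ \mathcal{C}_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{xf} * \mathcal{X}_t + W_{hf} * \mathcal{H}_{t-1} + W_{cf} \circ \mathcal{C}_{t-1} + b_f\right) \\
\mathcal{C}_t &= f_t \circ \mathcal{C}_{t-1} + i_t \circ \tanh\left(W_{xc} * \mathcal{X}_t + W_{hc} * \mathcal{H}_{t-1} + b_c\right) \\
o_t &= \sigma\left(W_{xo} * \mathcal{X}_t + W_{ho} * \mathcal{H}_{t-1} + W_{co} \circ \mathcal{C}_t + b_o\right) \\
\mathcal{H}_t &= o_t \circ \tanh\left(\mathcal{C}_t\right)
\end{aligned}
$$

Because $*$ acts on the altitude axis as well as the horizontal axes, each hidden state can carry information between neighbouring altitude levels, which a 2D ConvLSTM applied level by level cannot.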

| Layer | Kernel/Stride | Output Size (D × H × W × C) |
|---|---|---|
| Transposed 3D-Conv 1 | 3 × 3 × 3/(2,2,2) | 8 × 120 × 120 × 64 |
| 3D-Conv 1 | 1 × 3 × 3/(1,1,1) | 8 × 120 × 120 × 64 |
| Transposed 3D-Conv 2 | 3 × 3 × 3/(2,1,1) | 16 × 120 × 120 × 64 |
| 3D-Conv 2 | 1 × 1 × 1/(1,1,1) | 16 × 120 × 120 × 1 |
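
The layers in the table above (Transposed 3D-Conv 1 to 3D-Conv 2) mirror the encoder strides. Assuming "same" padding, a transposed 3D convolution multiplies each size by its stride, which restores the 4 × 60 × 60 × 64 recurrent output to a 16 × 120 × 120 grid, and the final 1 × 1 × 1 convolution reduces the channels to a single reflectivity field. A minimal sketch with hypothetical helper names:

```python
import math
from typing import List, Tuple

Shape = Tuple[int, int, int, int]  # (D, H, W, C)

# Decoder layers from the table: (name, kind, stride (d, h, w), output channels).
# Assumption: "same" padding for every layer.
DECODER = [
    ("Transposed 3D-Conv 1", "transposed", (2, 2, 2), 64),
    ("3D-Conv 1", "conv", (1, 1, 1), 64),
    ("Transposed 3D-Conv 2", "transposed", (2, 1, 1), 64),
    ("3D-Conv 2", "conv", (1, 1, 1), 1),
]


def layer_shape(shape: Shape, kind: str, stride: Tuple[int, int, int], channels: int) -> Shape:
    """Output shape of a (transposed) 3D convolution with 'same' padding."""
    d, h, w, _ = shape
    sd, sh, sw = stride
    if kind == "transposed":
        # Transposed conv with 'same' padding: size * stride.
        return (d * sd, h * sh, w * sw, channels)
    # Ordinary conv with 'same' padding: ceil(size / stride).
    return (math.ceil(d / sd), math.ceil(h / sh), math.ceil(w / sw), channels)


def trace(shape: Shape, layers) -> List[Tuple[str, Shape]]:
    """Propagate a shape through the layer list, recording each output."""
    out = []
    for name, kind, stride, channels in layers:
        shape = layer_shape(shape, kind, stride, channels)
        out.append((name, shape))
    return out


# Start from the 3D-ConvLSTM output size listed in the table.
for name, shape in trace((4, 60, 60, 64), DECODER):
    print(name, shape)
```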

| Will a storm occur? | Observation: Yes | Observation: No |
|---|---|---|
| Prediction: Yes | Hits (H) | False alarms (F) |
| Prediction: No | Misses (M) | Correct negatives |
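
The contingency table above defines the counts behind the critical success index, CSI = H / (H + M + F), which ignores correct negatives and so is not inflated by the many echo-free grid points. A minimal sketch, assuming forecast and observed volumes are flattened into aligned sequences of dBZ values and that an event means a value at or above the threshold (for example 35 or 45 dBZ). Returning NaN when H + M + F = 0 is a choice made here, not something the source specifies.

```python
from typing import Iterable, Tuple


def contingency(pred: Iterable[float], obs: Iterable[float], threshold: float) -> Tuple[int, int, int]:
    """Count hits, misses and false alarms after binarizing both fields at `threshold` dBZ."""
    hits = misses = false_alarms = 0
    for p, o in zip(pred, obs):
        p_yes, o_yes = p >= threshold, o >= threshold
        if p_yes and o_yes:
            hits += 1
        elif o_yes:  # observed but not predicted
            misses += 1
        elif p_yes:  # predicted but not observed
            false_alarms += 1
        # Correct negatives are not needed for CSI.
    return hits, misses, false_alarms


def csi(hits: int, misses: int, false_alarms: int) -> float:
    """Critical success index H / (H + M + F); NaN if no event was predicted or observed."""
    denom = hits + misses + false_alarms
    return hits / denom if denom else float("nan")


h, m, f = contingency([40, 30, 50, 20], [38, 36, 20, 10], threshold=35)
print(h, m, f, csi(h, m, f))
```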

| Model | aCSI35 | aCSI45 | twaCSI35 | twaCSI45 |
|---|---|---|---|---|
| Persistence | 0.1701 | 0.0410 | 0.1172 | 0.0161 |
| 3D-OF | 0.2466 | 0.0875 | 0.1868 | 0.0524 |
| 3D-UNet | 0.3505 | 0.1474 | 0.3079 | 0.1029 |
| PredRNN | 0.3882 | 0.1537 | 0.3335 | 0.1030 |
| ConvLSTM | 0.3963 | 0.1594 | 0.3463 | 0.1081 |
| 3D-ConvLSTM | 0.4171 | 0.1834 | 0.3657 | 0.1272 |
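
The margins of 3D-ConvLSTM over the strongest baseline in the table, ConvLSTM, are easier to compare as relative changes. The snippet below reuses only the numbers in the table above; `relative_gain` is a hypothetical helper.

```python
from typing import Dict

# Scores copied from the results table.
SCORES = {
    "ConvLSTM": {"aCSI35": 0.3963, "aCSI45": 0.1594, "twaCSI35": 0.3463, "twaCSI45": 0.1081},
    "3D-ConvLSTM": {"aCSI35": 0.4171, "aCSI45": 0.1834, "twaCSI35": 0.3657, "twaCSI45": 0.1272},
}


def relative_gain(model: str, baseline: str) -> Dict[str, float]:
    """Percent change of each score of `model` relative to `baseline`."""
    return {
        metric: 100.0 * (value - SCORES[baseline][metric]) / SCORES[baseline][metric]
        for metric, value in SCORES[model].items()
    }


for metric, gain in relative_gain("3D-ConvLSTM", "ConvLSTM").items():
    print(f"{metric}: {gain:+.1f}%")
```

This gives about +5.2% and +15.1% for aCSI35 and aCSI45, and about +5.6% and +17.7% for twaCSI35 and twaCSI45, so the relative improvement is roughly three times larger at the 45 dBZ threshold than at 35 dBZ.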

| Altitude Level (km) | Proportion ≥35 dBZ (%) | Proportion ≥45 dBZ (%) |
|---|---|---|
| 1 | 0.998 | 0.063 |
| 2 | 1.681 | 0.099 |
| 3 | 1.718 | 0.089 |
| 5 | 0.326 | 0.030 |
| 7 | 0.109 | 0.012 |
| 9 | 0.049 | 0.005 |
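
The proportions above show how rare strong echoes are: at 1 km only 0.063% of grid points reach 45 dBZ, about one point in 1,600. A minimal sketch of how such per-level exceedance percentages can be computed, assuming each altitude level is given as a flat sequence of dBZ values pooled over the evaluated volumes with missing values already removed (the exact sampling behind the table is not stated here); `exceedance_percent` is a hypothetical helper.

```python
from typing import Dict, Sequence


def exceedance_percent(volume: Dict[float, Sequence[float]], threshold: float) -> Dict[float, float]:
    """Percentage of grid points at or above `threshold` dBZ for each altitude level (km)."""
    out = {}
    for level, values in volume.items():
        values = list(values)
        count = sum(1 for v in values if v >= threshold)
        # NaN for an empty level, so it cannot be mistaken for 0%.
        out[level] = 100.0 * count / len(values) if values else float("nan")
    return out


# Toy data: two altitude levels with four grid points each.
toy = {1.0: [10, 36, 46, 20], 9.0: [5, 5, 5, 40]}
print(exceedance_percent(toy, 35))
print(exceedance_percent(toy, 45))
```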

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, N.; Zhou, Z.; Li, Q.; Jing, J. Three-Dimensional Gridded Radar Echo Extrapolation for Convective Storm Nowcasting Based on 3D-ConvLSTM Model. Remote Sens. 2022, 14, 4256. https://doi.org/10.3390/rs14174256

Sun N, Zhou Z, Li Q, Jing J. Three-Dimensional Gridded Radar Echo Extrapolation for Convective Storm Nowcasting Based on 3D-ConvLSTM Model. Remote Sensing. 2022; 14(17):4256. https://doi.org/10.3390/rs14174256

Chicago/Turabian Style: Sun, Nengli, Zeming Zhou, Qian Li, and Jinrui Jing. 2022. "Three-Dimensional Gridded Radar Echo Extrapolation for Convective Storm Nowcasting Based on 3D-ConvLSTM Model" Remote Sensing 14, no. 17: 4256. https://doi.org/10.3390/rs14174256