1. Introduction

Apple picking is a labor-intensive and time-consuming task. To save labor and to promote the automation of agricultural production, apple-picking robots are being employed to replace manual fruit picking. In the past few years, several researchers have extensively studied fruit- and vegetable-picking robots [1,2,3,4,5]. In a natural unstructured environment, sunlight and obstruction by branches and leaves cause light and dark spots to appear on the apple’s surface [6]. These spots are a special kind of noise that distorts the information of the target area in the image, complicates image processing, increases the difficulty of recognition and segmentation, and hinders the precise positioning of the fruit target and the execution of the picking task [7].

In the vision systems of existing fruit-harvesting robots, the influence of sunlight is reduced either by changing the imaging conditions before acquisition or by optimizing the image after acquisition. Before acquisition, optical filters on camera lenses or large shades with artificial auxiliary light sources are applied to improve the imaging conditions [1]. Such manual intervention and auxiliary lighting can simplify subsequent processing by yielding light-independent images. However, the practical utility of this approach is limited. The frequent replacement of the filter’s color absorber and the power consumption of the auxiliary light source increase the system’s cost, which is not conducive to its promotion and application [8]. Besides, most fruit and vegetable images need to be captured in natural light. Under extremely poor lighting conditions and without an auxiliary light source, segmentation results are not ideal in terms of robustness and processing speed [9,10,11].

Another solution is to optimize the image processing algorithm to reduce or eliminate the influence of light (and shadow) on the target area of the image. Ji et al. [12] first selected a batch of pixels that may belong to apples, then used the Euclidean distance between pixels in RGB space as a measure of similarity, and combined color and texture features with the region growing method and a support vector machine (SVM) to complete the apple segmentation. Lü et al. [13] combined the red-green (R-G) color difference with the Otsu dynamic threshold method to realize fast segmentation of apples. Liu et al. [14] proposed a nighttime apple image segmentation method based on pixel color and location. Based on the RGB and HSI values of each pixel, a back-propagation neural network was used for classification. The apple was then segmented again according to the relative position and color difference of the pixels around the segmented fruit. Lv et al. [15] proposed a red-blue (R-B) color difference map segmentation method, which extracts the main colors in the image, reconstructs the image, and then segments the original image according to a threshold to obtain the apple target. Although these algorithms segment well, they cannot adapt to conditions with varying illumination [16]. A segmentation method that works under the varying illumination of a natural orchard is yet to be demonstrated, and the segmentation effect under alternating lighting and shadows remains to be verified.

Besides the above segmentation methods based on color difference and thresholds, segmentation algorithms that account for the effect of illumination and shadows have also been proposed. Tu et al. [17] applied the principle of illumination invariance to the recognition of apple targets. First, a median filter was used to eliminate noise in the image. Then the illumination-invariant image was extracted from the processed color image to eliminate the influence of variation in light. Finally, the Otsu threshold segmentation method was used to extract the target fruit. However, the work does not discuss the algorithm’s performance on apple targets that are affected by shadow. Huang and He [18] segmented apple targets and the background based on the fuzzy 2-partition entropy algorithm in Lab color space, and used an exhaustive search algorithm to find the optimal threshold for image segmentation. However, this method can only work under a specific light condition. Song et al. [19] used the illumination-invariant image principle to obtain the illumination-invariant image of the shadowed apple image. They then extracted the red component of the original image, added it to the illumination-invariant image, and performed adaptive threshold segmentation on the summed image to remove shadows. However, shadows in the image background had a greater impact on the segmentation results, resulting in a high false segmentation rate. Song et al. [20] proposed a method for removing shadows on the apple surface using fuzzy set theory. In this algorithm, the image is viewed as a fuzzy matrix. The membership function is used for de-blurring the image to enhance its quality and to minimize the influence of shadow on apple segmentation. The applicability of this method needs further research and discussion. Sun et al. [6] proposed an improved visual attention mechanism named the GrabCut model, combined it with the normalized cut (Ncut) algorithm to identify green apples in the orchard under varying illumination, and achieved good segmentation accuracy. The algorithm improves the accuracy of target segmentation by removing the influence of shadows and lighting. However, the images were processed in a pixel-wise fashion, and the inherent spatial information between pixels was ignored. This is not ideal for processing images in a natural orchard environment [21].

Superpixel segmentation fully considers the spatial relationship between adjacent pixels, which effectively avoids segmentation errors caused by single-pixel mutations. Liu et al. [16] divided the entire image into several superpixel units, extracted the color and texture features of the superpixels, and used an SVM to classify the superpixels and segment the apples. However, the running speed was slow. Xu et al. [22] combined group pixels and edge probability maps to generate an apple image with superpixel blocks, and removed the effect of shadows by re-illumination. This method can effectively remove shadows from the apple’s surface in the image. However, the divided superpixels ultimately affect the segmentation accuracy. Xie et al. [23] proposed sparse representation and dictionary learning methods for the classification of hyperspectral images, using pixel blocks to improve classification accuracy. However, dictionary learning is used to represent images with complex structures, and the learning process is time-consuming and labor-intensive.

Recently, deep convolutional neural networks (DCNNs) have dominated many fields of computer vision, such as image recognition [24] and object detection [25]. DCNNs have also dominated image semantic segmentation, with examples such as the fully convolutional network (FCN) [26] and an improved deep neural network, DaSNet-v2, which can perform detection and instance segmentation on fruits [27]. However, these methods require large labelled training data sets and considerable computing power before a reliable result can be obtained. Therefore, new segmentation methods in color space, based on the apple’s characteristics, are needed so that apples can be identified in real time in the natural scene of the orchard.

The segmentation process becomes easier when the difference between the fruit and the background is large. However, the interference of natural light and other factors in the orchard environment reduces this difference and increases the difficulty of identification [28]. In this study, we propose a method to segment apple images based on gray-centered RGB color space. In this color space, we present a novel color feature selection method that accounts for the influence of halation and shadow in apple images. By exploring both color features and local variation in apple images, we propose an efficient patch-based segmentation algorithm that is a generalization of the K-means clustering algorithm. Extensive experiments demonstrate the effectiveness and superiority of the proposed method.

2. Materials and Methods

2.1. Apple Image Acquisition

The variety of apple tested in this study was Fuji, which is the most popular variety in China. Experiments were carried out in the Baishui Apple experimental demonstration station of Northwest A & F University, located in Baishui County, Shaanxi Province (109°E, 35.12°N). It is in the transitional zone between the Guanzhong Plain and the Northern Shaanxi Plateau.

The image data used in this paper were collected from the apple orchards during cloudy and sunny weather conditions. All the apples in the orchard had reached maturity. The images were collected between 8 a.m. and 5 p.m. so as to cover both weak-light and strong-light environments. A database was built with 300 apple images taken under natural light conditions in the orchard. One hundred and eighty images were randomly selected to test the performance of the algorithm. Of these 180 images, 60 had shadows on the apples to varying degrees, 60 had halation to varying degrees (at the edge of the apple or inside it), and 60 had both shadows and halation to varying degrees. The images were acquired using a Canon PowerShot G16 camera and an Intel RealSense D435 depth camera, with a resolution of 4000 × 3000 pixels. The shots were taken from a distance of 30–60 cm and were saved in JPEG format as 24-bit RGB color images. The proposed algorithms were evaluated using MATLAB (R2018b, © 1994–2020 The MathWorks, Inc., Natick, MA, USA). The computations were performed on an Intel Core i9-9880H CPU with 8 GB of memory.

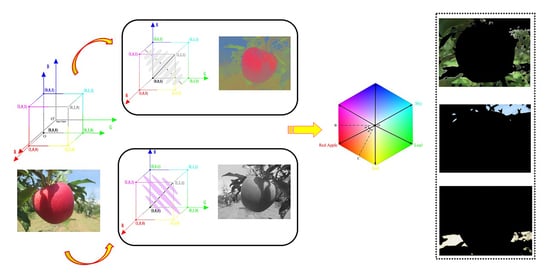

2.2. Gray-Centered RGB Color Space

In this paper, we work in gray-centered RGB color space (i.e., the origin of the RGB color space is placed at the center of the color cube). For 24-bit color images, the translation is achieved by simply subtracting (127.5, 127.5, 127.5) from each pixel value in RGB space. By doing so, all pixels along the same direction from mid-gray have the same hue [29]. This translation shifts every pixel of the apple image by half the range of each channel in RGB color space, forming a new coordinate system with mid-gray as the origin. The conversion of this space coordinate system is shown in Figure 1.
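The translation described above can be illustrated with a minimal Python sketch. The function names `to_gray_centered` and `from_gray_centered` are hypothetical and chosen only for this illustration; the sketch works on single pixels given as (R, G, B) tuples rather than on whole images.

```python
# Sketch: move 24-bit RGB pixels into gray-centered coordinates and back.
# Function names are illustrative assumptions, not taken from the paper.
GRAY_CENTER = 127.5  # midpoint of the 0..255 range on every channel


def to_gray_centered(pixel):
    """Translate an (R, G, B) triple so that mid-gray becomes the origin."""
    return tuple(channel - GRAY_CENTER for channel in pixel)


def from_gray_centered(vector):
    """Inverse translation back to ordinary RGB coordinates."""
    return tuple(component + GRAY_CENTER for component in vector)


pure_red = to_gray_centered((255, 0, 0))
print(pure_red)                      # (127.5, -127.5, -127.5)
print(from_gray_centered(pure_red))  # (255.0, 0.0, 0.0)
```

Pure red maps to (127.5, −127.5, −127.5), the same vector that is later chosen as the color of interest.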

2.3. Color Features Extraction

2.3.1. Quaternion

Quaternion algebra, the first discovered hypercomplex algebra, is a mathematical tool to realize the reconstruction of a three-dimensional color image signal [30,31,32]. The quaternion-based method imitates human perception of the visual environment and processes RGB channel information in parallel [33].

A quaternion has four parts and can be written as $q = a + bi + cj + dk$, where $a, b, c, d \in \mathbb{R}$, and where $i$, $j$, $k$ satisfy the condition $i^2 = j^2 = k^2 = ijk = -1$. For the apple image, the RGB color triple is represented as a purely imaginary quaternion, and can be written as $q = Ri + Gj + Bk$ [34,35,36].

We assume that two pure quaternions $P$ and $Q$ are multiplied together as shown in Equation (1).

Given a unit pure quaternion, $P$ can be decomposed into components parallel and perpendicular to that unit quaternion, as shown in Equation (2).
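For reference, the standard quaternion identities that a decomposition of this kind relies on can be written as below. The notation is an assumption made here for illustration ($\mu$ for the unit pure quaternion, $\cdot$ and $\times$ for the vector dot and cross products, $P_{\parallel}$ and $P_{\perp}$ for the two components) and may differ from the typesetting of Equations (1) and (2).

```latex
\begin{aligned}
PQ &= -\,P \cdot Q + P \times Q, \\
P_{\parallel} &= \tfrac{1}{2}\left(P - \mu P \mu\right), \\
P_{\perp} &= \tfrac{1}{2}\left(P + \mu P \mu\right), \qquad P = P_{\parallel} + P_{\perp}.
\end{aligned}
```

The last two lines follow from $\mu^2 = -1$: conjugation by $\mu$ flips the sign of the part of $P$ along $\mu$ and leaves the part perpendicular to $\mu$ unchanged, so $\mu P \mu = -P_{\parallel} + P_{\perp}$.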

Let $C$ denote the chosen color of interest (COI), expressed as a unit pure quaternion. Given a pure quaternion $U$ and the unit pure quaternion $C$, $U$ may be decomposed into components that are parallel and perpendicular to $C$, as shown in Equation (3).
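As a rough illustration of such a decomposition, the following Python sketch splits a pure quaternion into parts parallel and perpendicular to a unit pure quaternion using the standard identities ½(U − CUC) and ½(U + CUC). Quaternions are stored as (w, x, y, z) tuples; the helper names `qmul`, `unit_pure`, and `decompose` are hypothetical, and the example decomposes gray-centered red about the gray direction (1, 1, 1) purely as a demonstration.

```python
import math


def qmul(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z) tuples."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def unit_pure(vector):
    """Normalize a 3-vector and return it as a unit pure quaternion."""
    x, y, z = vector
    norm = math.sqrt(x * x + y * y + z * z)
    return (0.0, x / norm, y / norm, z / norm)


def decompose(u, c):
    """Split pure quaternion u into parts parallel/perpendicular to unit pure c."""
    cuc = qmul(qmul(c, u), c)  # equals -parallel + perpendicular
    parallel = tuple(0.5 * (a - b) for a, b in zip(u, cuc))
    perpendicular = tuple(0.5 * (a + b) for a, b in zip(u, cuc))
    return parallel, perpendicular


gray_axis = unit_pure((1.0, 1.0, 1.0))
red = (0.0, 127.5, -127.5, -127.5)  # gray-centered pure red as a pure quaternion
parallel, perpendicular = decompose(red, gray_axis)
print(parallel)
print(perpendicular)
```

Up to floating-point rounding, the parallel part has equal components on all three axes (it is achromatic), and the two parts sum back to the input.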

2.3.2. Color Features Decomposition of the Apple Image

Using the quaternion algebra and the COI characteristic, vector decompositions of an image that are parallel and perpendicular to the “grayscale” direction (1, 1, 1) are obtained. The work is carried out in the gray-centered RGB color space, in which the origin of the RGB color space is shifted to the center of the color cube. The image is first converted from the original RGB color space to gray-centered RGB color space. Thus, all the pixels along the same direction from mid-gray have the same hue. Subsequently, the algebraic operations of the quaternion are used to complete the vector decomposition of the image. Finally, all pixels are shifted back to the origin of RGB color space. The decomposition diagram is shown in Figure 2.

For apple images, the components resolved parallel to the “grayscale” direction (1, 1, 1) carry the intensity (brightness) of the image, while those resolved perpendicular to the “grayscale” direction (1, 1, 1) carry the color information.

Figure 3 shows the decomposition results of four images from the apple dataset based on the quaternion algebra. These four images represent four kinds of apple images, (a) those without any intense light irradiation and without any shadow, (b) those having shadows but without any intense light irradiation, (c) those having intense light irradiation but without any shadows, and (d) those having both shadows and intense light irradiation.

In this paper, apple image features are obtained from the gray-center color space. In the gray-center color space, vector decompositions parallel/perpendicular to the gray direction of the apple image are still color images. The perpendicular components to the “grayscale” direction (1, 1, 1) mainly show the images’ color. The difference between the apple and the background is more prominent in these images, which weakens the influence of shadow and halation on the apple surface to a certain extent. Therefore, in this paper, we search for features on these perpendicular components.

2.3.3. Choice of COI and Features

In the gray-center RGB space, the red vector (127.5, −127.5, −127.5) is selected as the COI in this study, since red is the prominent color of an apple. As mentioned above, in gray-center RGB color space, all pixels along this direction have the same hue. Although red is the prominent color, mature apples also contain other color components, so the COI alone does not represent all the pixels of the apple. Therefore, in the perpendicular space, the angle between the COI and the vector from the gray center to each pixel is used to indicate how far the pixel differs from the apple. In this paper, the cosine of this angle is used as the feature for the whole apple image. Pixels whose vectors lie within 30° of the selected COI are defined as belonging to the apple, and all pixels within this range constitute the apple features constructed in this paper.
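The cosine feature and the 30° criterion can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the cosine is computed directly on the gray-centered pixel vector (the same function could equally be applied to a perpendicular component), and the names `cosine_to_coi` and `is_apple_like` are hypothetical.

```python
import math

GRAY_CENTER = 127.5
COI = (127.5, -127.5, -127.5)             # red, seen from the gray center
COS_LIMIT = math.cos(math.radians(30.0))  # 30 degree cone around the COI


def cosine_to_coi(vector):
    """Cosine of the angle between a gray-centered vector and the COI."""
    dot = sum(v * c for v, c in zip(vector, COI))
    norm = math.sqrt(sum(v * v for v in vector)) * math.sqrt(sum(c * c for c in COI))
    # A zero-length vector (exact mid-gray) has no direction; treat it as non-apple.
    return dot / norm if norm > 0.0 else 0.0


def is_apple_like(rgb):
    """True when the pixel vector lies within 30 degrees of the COI."""
    centered = tuple(channel - GRAY_CENTER for channel in rgb)
    return cosine_to_coi(centered) >= COS_LIMIT


print(is_apple_like((200, 40, 50)))   # a reddish pixel
print(is_apple_like((60, 160, 50)))   # a greenish, leaf-like pixel
```

Because the cosine depends only on direction, a dark red and a bright red pixel along the same direction from mid-gray receive the same feature value, which is what makes the feature less sensitive to shading.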

2.4. A Patch-Based Feature Segmentation Algorithm

In the 1960s, MacQueen proposed the classic K-means clustering algorithm [37]. It is an unsupervised method whose main idea is to divide objects into $K$ clusters based on their distance from the cluster centers. If $x_k$ are data samples and $c_i$ are cluster centers, then the mean deviation of clustering is expressed as shown in Equation (4). With each iteration, the energy in Equation (4) is reduced.
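For orientation, the K-means objective is commonly written in the following form. The set symbol $S_i$ (the samples currently assigned to cluster $i$) is an assumption introduced here, and the normalization used in Equation (4) may differ.

```latex
J = \sum_{i=1}^{K} \sum_{x_k \in S_i} \lVert x_k - c_i \rVert^{2},
\qquad
c_i = \frac{1}{\lvert S_i \rvert} \sum_{x_k \in S_i} x_k .
```

Alternating between assigning each sample to its nearest center and recomputing each center as the mean of its samples never increases $J$, which is why the energy decreases from one iteration to the next.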

In image processing, the data samples are pixels, which can be divided into $K$ clusters to minimize Equation (4). However, this calculation ignores the spatial relationship between pixels in the image, leading to poor segmentation results for complex images. In particular, the local variations of apple images cannot be described effectively by pixel-based methods. In this study, the proposed clustering segmentation model is therefore based on pixel patches, as shown in Equation (5):

where $f_j(x)$ is the $j$th color feature of the original apple image $f(x)$, and the remaining terms are the patch vector, the clustering center $C_{ij}$, and the label function, whose value can be 0 or 1.

As can be seen from Equation (5), both color features and local contents of apple images are considered in our model, which makes it robust to halation, shadows, and local variations in apple images.

A superscript $k$ denotes the $k$th iteration. Each iteration is solved in two steps. In the first step, one set of unknowns in Equation (5) (the clustering centers or the label function) is held fixed while the other is updated, which gives the optimization problem in Equation (6). Differentiating yields Equation (7). In the second step, the roles are exchanged, which gives the optimization problem in Equation (8) and results in Equation (9).

The overall procedure of the segmentation model is as follows (Algorithm 1).

| Algorithm 1. K-means clustering algorithm based on pixel patches |
| --- |
| Input: Original apple images |
| Segmentation region |
| Initialization: Randomly initialize |
| Iteration: According to Equation (6), calculate |
| According to Equation (7), calculate |
| Until |
| Output: |
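The general idea of clustering pixels by their surrounding patches can be sketched in Python as below. This is not the model of Equation (5): it applies plain K-means to flattened 3 × 3 patch vectors of a single feature map, replicates edge pixels at the border, and uses a deterministic initialization (patches with the smallest and largest sums) instead of the random initialization in Algorithm 1, all of which are assumptions made for a small reproducible example. The names `extract_patches` and `patch_kmeans` are hypothetical.

```python
def extract_patches(feature, radius=1):
    """One flattened (2r+1) x (2r+1) patch per pixel, replicating edge pixels."""
    height, width = len(feature), len(feature[0])
    patches = []
    for y in range(height):
        for x in range(width):
            patch = []
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    patch.append(feature[yy][xx])
            patches.append(patch)
    return patches


def sq_dist(a, b):
    """Squared Euclidean distance between two patch vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def patch_kmeans(feature, k=2, radius=1, max_iter=50):
    """Cluster pixels by their patches; returns a label map (k must be >= 2)."""
    patches = extract_patches(feature, radius)
    # Assumed deterministic initialization: evenly spaced patches after sorting by sum.
    ordered = sorted(patches, key=sum)
    centers = [list(ordered[round(j * (len(ordered) - 1) / (k - 1))]) for j in range(k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: centers fixed, each patch goes to its nearest center.
        new_labels = [min(range(k), key=lambda i: sq_dist(p, centers[i])) for p in patches]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: labels fixed, each center becomes the mean of its patches.
        for i in range(k):
            members = [p for p, lab in zip(patches, labels) if lab == i]
            if members:
                centers[i] = [sum(col) / len(members) for col in zip(*members)]
    width = len(feature[0])
    return [labels[r * width:(r + 1) * width] for r in range(len(feature))]


# Toy feature map: low values on the left (background), high on the right (fruit).
toy = [[0.0] * 3 + [1.0] * 3 for _ in range(6)]
for row in patch_kmeans(toy):
    print(row)
```

Because each pixel is represented by its neighborhood rather than by its own value, an isolated outlier pixel changes only one of the nine entries in its patch vector and is less likely to flip the label on its own.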

2.5. Criteria Methods

The performance of an image segmentation algorithm can be evaluated by comparing the segmented image with the ground truth pixel by pixel [38]. Using LabelMe, the apple target area in each test image is marked as ground truth [39].

For binary classification, the actual results and the predicted results are compared. Figure 4 shows the confusion matrix composed of four cases, each corresponding to a different result: (1) true positives (TP): the number of pixels that are correctly segmented as belonging to the apple; (2) false negatives (FN): the number of pixels belonging to the apple that are incorrectly segmented as the background; (3) false positives (FP): the number of pixels belonging to the background that are incorrectly classified as belonging to the apple; (4) true negatives (TN): the number of pixels that are correctly segmented as belonging to the background.

Recall rate, precision rate, false positive rate (FPR), and false negative rate (FNR) can be obtained from the confusion matrix. These indexes can be used to evaluate the proposed algorithm’s segmentation performance [40]. In Equations (10) and (11), recall rate and precision measure the ability of the algorithm to identify the apple correctly. In Equation (12), FPR gives the percentage of pixels that belong to the background but are classified as the target. In Equation (13), FNR gives the percentage of pixels belonging to the target that are incorrectly classified as the background.
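A minimal Python sketch of these four indexes is given below, using their usual definitions: recall = TP/(TP + FN), precision = TP/(TP + FP), FPR = FP/(FP + TN), and FNR = FN/(TP + FN). Masks are nested lists of 0/1 values with 1 marking apple pixels; the function names and the choice to return NaN for an empty denominator are assumptions of this illustration.

```python
def confusion_counts(predicted, truth):
    """Count TP, FP, FN, TN between two binary masks of equal shape."""
    tp = fp = fn = tn = 0
    for pred_row, true_row in zip(predicted, truth):
        for pred, true in zip(pred_row, true_row):
            if pred and true:
                tp += 1
            elif pred and not true:
                fp += 1
            elif true:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def ratio(numerator, denominator):
    """Safe division; NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def segmentation_metrics(predicted, truth):
    """Recall, precision, FPR and FNR as fractions in [0, 1]."""
    tp, fp, fn, tn = confusion_counts(predicted, truth)
    return {
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
        "fpr": ratio(fp, fp + tn),
        "fnr": ratio(fn, tp + fn),
    }


truth = [[1, 1, 0, 0],
         [1, 1, 0, 0]]
predicted = [[1, 0, 0, 0],
             [1, 1, 1, 0]]
print(segmentation_metrics(predicted, truth))
```

With these definitions recall and FNR share the same denominator and sum to 1 for any single image.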

3. Experimental Results and Analysis

The apple test images used in this paper were collected during the ripening season. In order to test the performance of the proposed algorithm in a comprehensive range, a test set of 180 images was selected that includes images with varying degrees of halation and shadows.

3.1. Visualization of Segmentation Results

The test data shown in Figure 5a have light and heavy shadows on the surface of the apple. The test data shown in Figure 5b have small and large areas that are strongly illuminated. The test data shown in Figure 5c have both shadows and strong light to varying degrees.

The original images and the segmentation results are shown in Figure 5. The segmentation is based on the 3 × 3 pixel block clustering in the gray-center color space proposed in this paper. The number of clusters is set to 2, that is, one for the apples and another for the background (branches and leaves, sky, ground). The blue line marks the edge of the apple. The segmentation result has two colors, black and white. The results show that most of the background area has been removed.

3.2. Comparison and Quantitative Analysis of the Results of Segmentation

In this subsection, we present a quantitative analysis of the proposed method and compare it with existing segmentation methods. Each image in the data set has 12 million pixels (4000 × 3000). To reduce the processing time, the original image is downscaled using bilinear interpolation without affecting the clustering result. Reducing the number of pixels to 750,000 (1000 × 750) saves image processing time and improves the practicality of the algorithm. We compare our method with the fuzzy 2-partition entropy method [18], which, like ours, is based on color feature selection and uses a color histogram threshold in Lab space to segment images. We also compare our method with the superpixel-based fast fuzzy C-means clustering method (SFFCM) for image segmentation [41]. This method defines a multiscale morphological gradient reconstruction operation to obtain a superpixel image with accurate contours, and uses the color histogram in the fuzzy clustering objective function to achieve binary segmentation of color images. This method provides good results for images with normal lighting and shadows. In addition, we compare our method with the mask region-based convolutional neural network (Mask R-CNN) algorithm, a popular deep learning-based method that has been verified as a state-of-the-art method [42].

Four apple images with varying degrees of halation and shadows are shown in the first row of Figure 6. The second row shows the segmentation results of the fuzzy 2-partition entropy method. As can be seen, when there is strong light on the surface of the apple and the background (branches, leaves) is bright, or when the apple surface has shadows and the background is dark, similar parts are easily classified into one category. The third row of Figure 6 shows that the superpixel-based fast fuzzy C-means clustering method provides good results for images with normal lighting and shadows. However, it is unable to provide complete segmentation for images with strong lighting and strong shadow areas. As the fourth row of Figure 6 shows, the Mask R-CNN algorithm also achieves outstanding segmentation results, but it requires a large amount of training data, whereas our approach does not require any training data. In addition, if branches and leaves do not appear in the backgrounds of the training images, they may be segmented as apple regions when they are present. The fifth row of Figure 6 shows the segmentation results of the proposed algorithm. As the proposed method is designed based on the apple characteristics in the gray-centered color space, it is more robust to different degrees of shadow and halation. Thus, a complete apple target can be obtained.

To quantitatively analyze the performance difference between the proposed algorithm and those in the literature, the fruit area in the test images is manually marked with the LabelMe software, and the marked result is recorded as ground truth. The segmented image and the labeled image are then compared pixel by pixel. Each pixel is assigned to one of four classes: true positive (apple segmented as apple), false positive (background segmented as apple), false negative (apple segmented as background), or true negative (background segmented as background). Using the confusion matrix, the recall, precision, FNR, and FPR are calculated for each of these algorithms, and the results are given in Table 1.

The average computation times of the four segmentation methods are compared in

Figure 7. From the figure, our proposed algorithm is just slightly lower than that of fuzzy C-means, but it has a higher efficiency. The average time required for image processing was 1.37 s, which indicates that the proposed algorithm can be implemented in real-time.

The average recall, precision, FPR, and FNR of the proposed algorithm are 98.69%, 99.26%, 0.06%, and 1.44%, respectively. For the threshold segmentation algorithm based on fuzzy 2-partition entropy, they are 87.75%, 84.87%, 9.36%, and 12.44%; for the superpixel-based fast fuzzy C-means clustering, 94.34%, 96.87%, 1.37%, and 2.97%; and for the Mask R-CNN instance segmentation algorithm, 97.02%, 98.16%, 0.47%, and 2.54%. Compared with the fuzzy 2-partition entropy algorithm, the average recall and precision of the proposed algorithm are therefore higher by 10.94 and 14.39 percentage points, respectively, and the average FPR and FNR are lower by 9.30 and 11.00 percentage points. Compared with the superpixel-based fast fuzzy C-means clustering, recall and precision are higher by 4.35 and 2.39 percentage points, and FPR and FNR are lower by 1.31 and 1.53 percentage points. Compared with the Mask R-CNN instance segmentation algorithm, recall and precision are higher by 1.67 and 1.10 percentage points, and FPR and FNR are lower by 0.41 and 1.10 percentage points.
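The differences quoted above are plain subtractions of the reported averages, which a few lines of Python can reproduce. The dictionary keys and the function name `gaps` are hypothetical labels for this illustration; the numbers are the averages reported in the text.

```python
# Reported averages in percent: (recall, precision, FPR, FNR).
reported = {
    "proposed": (98.69, 99.26, 0.06, 1.44),
    "fuzzy 2-partition entropy": (87.75, 84.87, 9.36, 12.44),
    "SFFCM": (94.34, 96.87, 1.37, 2.97),
    "Mask R-CNN": (97.02, 98.16, 0.47, 2.54),
}


def gaps(baseline):
    """Differences to a baseline, in percentage points.

    Returns (recall gain, precision gain, FPR reduction, FNR reduction).
    """
    ours = reported["proposed"]
    theirs = reported[baseline]
    return (
        round(ours[0] - theirs[0], 2),
        round(ours[1] - theirs[1], 2),
        round(theirs[2] - ours[2], 2),
        round(theirs[3] - ours[3], 2),
    )


for name in ("fuzzy 2-partition entropy", "SFFCM", "Mask R-CNN"):
    print(name, gaps(name))
```

These are absolute differences between percentages (percentage points), not relative improvements.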

3.3. Double and Multi-Fruit Split Results

To develop an eye-in-hand vision servo-picking robot, a distance of 30–40 cm was used for capturing the images having only one apple target. However, apple growth is complicated in unstructured orchards, and shots from a distance of 40–60 cm are unavoidable, resulting in the images having multiple fruit targets.

Figure 8 shows the recognition results of the proposed algorithm for multiple targets; Figure 8a–c show the segmentation results for images with multiple fruits.

5. Conclusions

In this study, a patch-based segmentation algorithm in gray-centered color space was proposed, realizing stable apple segmentation in a natural apple orchard environment (shadow, light, and background). Specifically, quaternion algebra was used to obtain the parallel/perpendicular decomposition of the apple image. Then, a vector pointing from the gray center to red in the resulting perpendicular image was selected as the COI, and the cosine of the angle between the COI and the vector pointing from the gray center to each pixel, which represents the color information of the image, was used as the decision condition for extracting the features of an apple. Finally, both color features and local contents of apple images were used to segment the target area.

The proposed algorithm has several characteristics compared with traditional clustering algorithms and deep learning instance segmentation algorithms: (i) the chromatic content of each pixel lies perpendicular to the gray direction of RGB color space, and pixels lying along the same direction from the gray center have the same hue; (ii) the color information of the original image is better reflected by the perpendicular decomposition; and (iii) the geometrical shape of the segmented target is well maintained, and the segmentation error is significantly reduced, by the proposed patch-based segmentation algorithm.

The experimental results showed that the recall and precision of the proposed segmentation method were 10.94 and 14.39 percentage points higher, respectively, than those of the modified threshold segmentation method, while FPR and FNR were 9.30 and 11.00 percentage points lower. Compared with the modified clustering algorithm, recall and precision increased by 4.35 and 2.39 percentage points, respectively, and FPR and FNR decreased by 1.31 and 1.53 percentage points. Finally, compared with the deep learning segmentation algorithm, the recall and precision of the proposed segmentation method were 1.67 and 1.10 percentage points higher, and FPR and FNR were 0.41 and 1.10 percentage points lower.

As shown in the experiments above, our method can deal with the problems of illumination and shadow. Future research will focus on applying the proposed method to specific targets in more complex environments. Our method may be used to segment other fruit targets such as bananas, grapes, and pears. Future work also includes the segmentation of unmanned aerial vehicle (UAV)-based remote sensing images using the methods of this research. However, color feature selection and the characteristics of different images need to be studied in more detail.