Aircraft Detection in High Spatial Resolution Remote Sensing Images Combining Multi-Angle Features Driven and Majority Voting CNN

Abstract

1. Introduction

2. Materials and Methods

2.1. Related Work

2.1.1. One-Stage Target Detection Algorithm Based on Convolution Neural Network

2.1.2. Two-Stage Target Detection Algorithm Based on Convolution Neural Network

2.1.3. Improvement on Mature Target Detection Networks

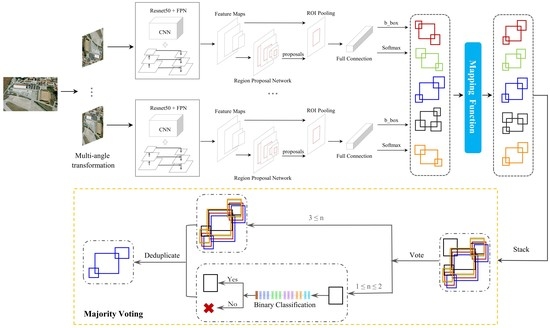

2.2. Methods

2.2.1. Aircraft Detection Based on Multi-Angle Features Driven Strategy

2.2.2. Detection Boxes Processing Based on Majority Voting Strategy

2.2.3. The Binary Classification Network

2.2.4. Comprehensive Accuracy Evaluation Method

3. Experiments

3.1. Datasets Description

3.1.1. Object Detection Datasets

3.1.2. Binary Classification Network Datasets for “Inferiority Box” Discrimination

3.2. Training and Parameters Setting for Network

3.2.1. Training of the Network

3.2.2. Parameters Setting

3.3. Experimental Results

4. Discussion

4.1. Comparison with the Advanced Models

4.2. Ablation Experiment

4.2.1. The Effectiveness of Multi-Angle Features Driven Strategy

4.2.2. The Effectiveness of Majority Voting Strategy

4.3. The Limitation of the Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wu, Z.-Z.; Weise, T.; Wang, Y.; Wang, Y. Convolutional neural network based weakly supervised learning for aircraft detection from remote sensing image. IEEE Access 2020, 8, 158097–158106.

- Wu, Z.-Z.; Wan, S.-H.; Wang, X.-F.; Tan, M.; Zou, L.; Li, X.-L.; Chen, Y. A benchmark data set for aircraft type recognition from remote sensing images. Appl. Soft Comput. 2020, 89, 106132.

- Zhao, A.; Fu, K.; Wang, S.; Zuo, J.; Zhang, Y.; Hu, Y.; Wang, H. Aircraft recognition based on landmark detection in remote sensing images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1413–1417.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Fu, Z.; Chen, Y.; Yong, H.; Jiang, R.; Zhang, L.; Hua, X.-S. Foreground gating and background refining network for surveillance object detection. IEEE Trans. Image Process. 2019, 28, 6077–6090.

- Dai, X. HybridNet: A fast vehicle detection system for autonomous driving. Signal Process. Image Commun. 2019, 70, 79–88.

- Zhang, X.; Wang, H.; Xu, C.; Lv, Y.; Fu, C.; Xiao, H.; He, Y. A lightweight feature optimizing network for ship detection in SAR image. IEEE Access 2019, 7, 141662–141678.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chang, D.; Ding, Y.; Xie, J.; Bhunia, A.K.; Li, X.; Ma, Z.; Wu, M.; Guo, J.; Song, Y.-Z. The devil is in the channels: Mutual-channel loss for fine-grained image classification. IEEE Trans. Image Process. 2020, 29, 4683–4695.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected CRFs. arXiv 2014, arXiv:1412.7062.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Hu, G.; Yang, Z.; Han, J.; Huang, L.; Gong, J.; Xiong, N. Aircraft detection in remote sensing images based on saliency and convolution neural network. EURASIP J. Wirel. Commun. Netw. 2018, 2018, 1–16.

- Shi, L.; Tang, Z.; Wang, T.; Xu, X.; Liu, J.; Zhang, J. Aircraft detection in remote sensing images based on deconvolution and position attention. Int. J. Remote Sens. 2021, 42, 4241–4260.

- Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Zhang, Z.; Jiang, R.; Mei, S.; Zhang, S.; Zhang, Y. Rotation-invariant feature learning for object detection in VHR optical remote sensing images by double-net. IEEE Access 2019, 8, 20818–20827.

- Wu, Y.; Ma, W.; Gong, M.; Bai, Z.; Zhao, W.; Guo, Q.; Chen, X.; Miao, Q. A coarse-to-fine network for ship detection in optical remote sensing images. Remote Sens. 2020, 12, 246.

- Zhu, M.; Xu, Y.; Ma, S.; Li, S.; Ma, H.; Han, Y. Effective airplane detection in remote sensing images based on multilayer feature fusion and improved nonmaximal suppression algorithm. Remote Sens. 2019, 11, 1062.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Chen, F.; Ren, R.; Van de Voorde, T.; Xu, W.; Zhou, G.; Zhou, Y. Fast automatic airport detection in remote sensing images using convolutional neural networks. Remote Sens. 2018, 10, 443.

- Guo, H.; Bai, H.; Zhou, Y.; Li, W. DF-SSD: A deep convolutional neural network-based embedded lightweight object detection framework for remote sensing imagery. J. Appl. Remote Sens. 2020, 14, 014521.

- Xie, Y.; Cai, J.; Bhojwani, R.; Shekhar, S.; Knight, J. A locally-constrained YOLO framework for detecting small and densely-distributed building footprints. Int. J. Geogr. Inf. Sci. 2020, 34, 777–801.

- Chen, K.; Li, J.; Lin, W.; See, J.; Wang, J.; Duan, L.; Chen, Z.; He, C.; Zou, J. Towards accurate one-stage object detection with AP-loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5119–5127.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Fu, C.-Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Wu, H.; Zhang, H.; Zhang, J.; Xu, F. Typical target detection in satellite images based on convolutional neural networks. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 2956–2961.

- Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate object localization in remote sensing images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498.

- Liu, Q.; Xiang, X.; Wang, Y.; Luo, Z.; Fang, F. Aircraft detection in remote sensing image based on corner clustering and deep learning. Eng. Appl. Artif. Intell. 2020, 87, 103333.

- Feng, Y.; Wang, L.; Zhang, M. A multi-scale target detection method for optical remote sensing images. Multimed. Tools Appl. 2019, 78, 8751–8766.

- Li, Y.; Zhang, S.; Zhao, J.; Tan, W. Aircraft detection in remote sensing images based on deep convolutional neural network. In Proceedings of the 2017 International Conference on Computer Technology, Electronics and Communication (ICCTEC), Dalian, China, 20–21 October 2018; pp. 942–945.

- Fu, K.; Chang, Z.; Zhang, Y.; Xu, G.; Zhang, K.; Sun, X. Rotation-aware and multi-scale convolutional neural network for object detection in remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 161, 294–308.

- Bao, S.; Zhong, X.; Zhu, R.; Zhang, X.; Li, Z.; Li, M. Single shot anchor refinement network for oriented object detection in optical remote sensing imagery. IEEE Access 2019, 7, 87150–87161.

- Qu, J.; Su, C.; Zhang, Z.; Razi, A. Dilated convolution and feature fusion SSD network for small object detection in remote sensing images. IEEE Access 2020, 8, 82832–82843.

- Yin, R.; Zhao, W.; Fan, X.; Yin, Y. AF-SSD: An accurate and fast single shot detector for high spatial remote sensing imagery. Sensors 2020, 20, 6530.

- Pham, M.-T.; Courtrai, L.; Friguet, C.; Lefèvre, S.; Baussard, A. YOLO-Fine: One-stage detector of small objects under various backgrounds in remote sensing images. Remote Sens. 2020, 12, 2501.

- Long, Z.; Suyuan, W.; Zhongma, C.; Jiaqi, F.; Xiaoting, Y.; Wei, D. Lira-YOLO: A lightweight model for ship detection in radar images. J. Syst. Eng. Electron. 2020, 31, 950–956.

- Honari, S.; Yosinski, J.; Vincent, P.; Pal, C. Recombinator networks: Learning coarse-to-fine feature aggregation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5743–5752.

- Perez, L.; Wang, J. The effectiveness of data augmentation in image classification using deep learning. arXiv 2017, arXiv:1712.04621.

- Zhou, Y.; Liu, X.; Zhao, J.; Ma, D.; Yao, R.; Liu, B.; Zheng, Y. Remote sensing scene classification based on rotation-invariant feature learning and joint decision making. EURASIP J. Image Video Process. 2019, 2019, 1–11.

- Yan, H. Aircraft detection in remote sensing images using centre-based proposal regions and invariant features. Remote Sens. Lett. 2020, 11, 787–796.

- Li, L.; Zhang, S.; Wu, J. Efficient object detection framework and hardware architecture for remote sensing images. Remote Sens. 2019, 11, 2376.

- Xiao, Z.; Liu, Q.; Tang, G.; Zhai, X. Elliptic Fourier transformation-based histograms of oriented gradients for rotationally invariant object detection in remote-sensing images. Int. J. Remote Sens. 2015, 36, 618–644.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

| Baseline | Paper | Characteristic | Advantage | Disadvantage |
|---|---|---|---|---|
| Faster R-CNN | Chen et al. [22] | Add a constraint to sieve low quality positive samples | High precision | Low utilization of image information |
| Faster R-CNN | Feng et al. [36] | Optimize the generation method of foreground samples, reduce the ineffective foreground samples | High precision | Relatively high time consumption |
| Faster R-CNN | Li et al. [37] | Modify the anchor box size, scale and loss function of the network for specific target | High precision and less time-consuming | Only for small-scale images |
| Faster R-CNN | Fu et al. [38] | Add a rotation-aware object detector to solve the problem of inconsistent target orientation in remote sensing images | High precision | Complex structure and large amount of calculation |
| SSD | Bao et al. [39] | Determine the detection boxes and target category through two consecutive regressions | Real-time and high precision | Low utilization of image information |
| SSD | Guo et al. [23] | The DepthFire module is added, which reduces the amount of calculation and improves processing efficiency | Real-time | Compared with two-stage target detection, the overall accuracy is low |
| SSD | Qu et al. [40] | Combination of dilated convolution and feature fusion | Real-time | Compared with two-stage target detection, the overall accuracy is low |
| SSD | Yin et al. [41] | Design encoding-decoding module to detect small objects | Real-time and high precision | Low utilization of image information |
| YOLO-V1 | Xie et al. [24] | Designed a Locally-Constrained module to improve the detection performance for cluster small targets | High accuracy of small target detection | Only for small objects |
| YOLO-V3 | Pham et al. [42] | Replace the large-scale factors in YOLO-V3 with (very) small-scale factors for small target detection | Real-time and high accuracy of small target detection | Only for small objects |
| YOLO-V3 | Zhou et al. [43] | Combine the idea of dense connections, residual connections and group convolution | Lightweight | Universality to be investigated |

| Number | Dataset | Resolution (m) | Image Size (pixels) | Number of Images | Number of Aircraft | Purpose |
|---|---|---|---|---|---|---|
| I | RSOD | 0.5–2.0 | 1000 × 900 | 446 | 4993 | train |
| II | DIOR (part) | 0.5–30 | 800 × 800 | 300 | 3943 | test |
| III | Private Dataset | 0.6–1.2 | 1400 × 900–3000 × 2500 | 25 | 1064 | test |

| Dataset | Number of Images | Number of Aircraft | AP (%) | Average Time (s) |
|---|---|---|---|---|
| II | 300 | 3943 | 94.82 | 0.49 |
| III | 25 | 1064 | 95.25 | 0.63 |

| Model | Backbone | AP (%) | Average Time (s) |
|---|---|---|---|
| SSD300 | VGG16 | 87.20 | 0.07 |
| YOLOv4 | Darknet | 93.91 | 0.09 |
| Faster R-CNN (with FPN) | ResNet50 | 88.01 | 0.26 |
| Ours | ResNet50 | 94.82 | 0.49 |

| Model | Backbone | AP (%) | Average Time (s) |
|---|---|---|---|
| SSD300 | VGG16 | 40.92 | 0.30 |
| YOLOv4 | Darknet | 69.33 | 0.28 |
| Faster R-CNN (with FPN) | ResNet50 | 86.27 | 0.38 |
| Ours | ResNet50 | 95.25 | 0.63 |

| Model | AP (%) | Average Time (s) |
|---|---|---|
| Faster R-CNN (with FPN) | 88.01 | 0.26 |
| Ours (with FPN and Multi-Angle) | 93.09 | 0.28 |

| Model | AP (%) | Average Time (s) |
|---|---|---|
| Faster R-CNN (with FPN) | 86.27 | 0.38 |
| Ours (with FPN and Multi-Angle) | 94.51 | 0.40 |

| Model | AP (%) | Average Time (s) |
|---|---|---|
| Ours (Without Voting) | 93.09 | 0.28 |
| Ours | 94.82 | 0.49 |

| Model | AP (%) | Average Time (s) |
|---|---|---|
| Ours (Without Voting) | 94.51 | 0.40 |
| Ours | 95.25 | 0.63 |
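The ablation tables can be read as a per-stage trade-off between AP gain and added inference time. The following is a minimal sketch of that arithmetic, not part of the original paper. It assumes that the first table of each ablation pair reports Dataset II and the second Dataset III, which is consistent with the AP and time values in the per-dataset results table. The names `results` and `stage_deltas` are hypothetical.

```python
# (AP %, average time s) copied from the ablation tables.
# Assumption: first table of each pair = Dataset II, second = Dataset III.
results = {
    "II": {
        "baseline": (88.01, 0.26),     # Faster R-CNN (with FPN)
        "multi_angle": (93.09, 0.28),  # Ours (with FPN and Multi-Angle), without voting
        "full": (94.82, 0.49),         # Ours (with majority voting)
    },
    "III": {
        "baseline": (86.27, 0.38),
        "multi_angle": (94.51, 0.40),
        "full": (95.25, 0.63),
    },
}


def stage_deltas(rows):
    """Return (AP gain, added time) of each stage relative to the previous one."""
    order = ["baseline", "multi_angle", "full"]
    deltas = {}
    for prev, cur in zip(order, order[1:]):
        ap_gain = round(rows[cur][0] - rows[prev][0], 2)
        extra_time = round(rows[cur][1] - rows[prev][1], 2)
        deltas[cur] = (ap_gain, extra_time)
    return deltas


if __name__ == "__main__":
    for name, rows in results.items():
        for stage, (ap_gain, extra_time) in stage_deltas(rows).items():
            print(f"Dataset {name} {stage}: +{ap_gain} AP, +{extra_time} s")
```

Under these assumptions, the multi-angle stage accounts for most of the AP gain at a small time cost, while the voting stage adds a smaller AP gain and most of the extra time.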

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ji, F.; Ming, D.; Zeng, B.; Yu, J.; Qing, Y.; Du, T.; Zhang, X. Aircraft Detection in High Spatial Resolution Remote Sensing Images Combining Multi-Angle Features Driven and Majority Voting CNN. Remote Sens. 2021, 13, 2207. https://doi.org/10.3390/rs13112207