Semantic-Guided Attention Refinement Network for Salient Object Detection in Optical Remote Sensing Images

Abstract

1. Introduction

- (1)

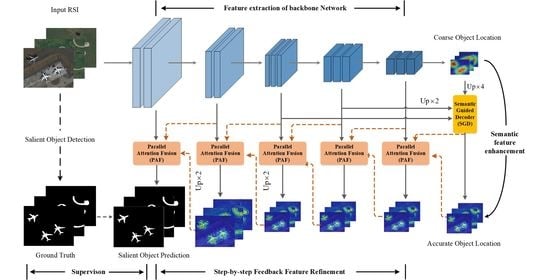

- We design a novel semantic-guided attention refinement network (SARNet) for SOD in optical RSI. The network has better robustness and generalization through the high-level semantic information guidance and top-down boundary refinement strategy to improve scale-varying objects’ saliency detection performance;

- (2) The proposed semantic guided decoder (SGD) module combines several high-level feature representations to enhance semantic feature differences over long spatial ranges. Simultaneously, the accurate location information of the salient area is used to guide the subsequent multi-level feature fusion;

- (3) The proposed parallel attention fusion (PAF) module models global semantic information and cross-level features to bridge the gaps between different levels of visual representation and gradually restore the edge details of salient objects;

- (4) We compare the proposed method with 14 SOTA approaches on two challenging optical RSI datasets and on additional NSI datasets. Without bells and whistles, our method achieves the best performance under five evaluation metrics. In addition, the model runs at a real-time inference speed of 47.3 FPS on a single GPU. The code will be available at https://github.com/laoyezi/SARNet (accessed on 29 May 2021).

2. Related Works

2.1. Saliency Detection for NSI

2.2. Saliency Detection for RSI

2.3. Attention Mechanism in SOD

3. Approach

3.1. Overall Network Architecture

3.2. Semantic Guided Decoder (SGD)

3.3. Parallel Attention Fusion (PAF) Module

3.4. Loss Function

4. Experiments

4.1. Experimental Settings

4.1.1. Datasets

4.1.2. Evaluation Metrics

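- (1) MAE evaluates the average difference between all corresponding pixels of the normalized predicted saliency map and the GT map. We compute the score by:

  $$\mathcal{M} = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \left| S(x, y) - G(x, y) \right|,$$

  where $S$ and $G$ are the predicted saliency map and the GT map, and $W$ and $H$ are the width and height of the evaluated map.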

- (2) Mean F-measure ($mF_\beta$) and weighted F-measure ($wF_\beta$) alleviate the interpolation, dependency, and equal-importance problems that lead to inaccurate estimates of MAE and the original F-measure values [60]. The F-measure is calculated as follows:

  $$F_\beta = \frac{(1 + \beta^2)\,\mathrm{Precision} \times \mathrm{Recall}}{\beta^2\,\mathrm{Precision} + \mathrm{Recall}},$$

  where different values of the weight $\beta^2$ are set to emphasize the importance of recall or precision. It is customary to set $\beta^2$ to 0.5 to treat them equally or to 0.3 to emphasize precision over recall in previous works [29,46,51,59].

- (3) Mean E-measure ($mE_\phi$) is an evaluation metric based on cognitive vision that combines local pixel values with the image-level mean to capture both global statistical information and local pixel-matching information. It is computed as:

  $$E_\phi = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \phi(x, y),$$

  where $\phi$ denotes the enhanced alignment matrix, which is derived from the alignment matrix [61]. To make a fair comparison, we report the mean score of this metric in the experiments.

- (4) S-measure evaluates the structural information of the image by combining region-aware and object-aware structural similarity. $S_\xi$ is calculated as follows:

  $$S_\xi = \alpha S_o + (1 - \alpha) S_r,$$

  where $S_o$ and $S_r$ are the object-aware and region-aware structural similarities and $\alpha$ is a trade-off parameter, usually set to 0.5.
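To make the first three metrics concrete, the following is a minimal sketch in plain Python; it is not the evaluation code used in the paper. It assumes that maps are nested lists of floats in [0, 1] and that the prediction is binarized at a single fixed threshold `thresh`, which is a simplification of the mean scores reported here. The names `mae`, `binarize`, `f_measure`, and `e_measure` are hypothetical, and the alignment formula in `e_measure` is written after the definition in [61] and should be checked against a reference implementation before use. S-measure is omitted because its region-aware and object-aware terms need considerably more code.

```python
from typing import Sequence

Map = Sequence[Sequence[float]]  # H x W map with values in [0, 1]


def mae(pred: Map, gt: Map) -> float:
    """Mean absolute error over all W x H pixels."""
    h, w = len(gt), len(gt[0])
    total = sum(abs(pred[y][x] - gt[y][x]) for y in range(h) for x in range(w))
    return total / (w * h)


def binarize(m: Map, thresh: float) -> list:
    """Turn a soft map into a 0/1 map (thresh is an assumed fixed cut-off)."""
    return [[1.0 if v >= thresh else 0.0 for v in row] for row in m]


def f_measure(pred: Map, gt: Map, beta2: float = 0.3, thresh: float = 0.5) -> float:
    """F-measure of the binarized prediction; beta2 plays the role of beta^2."""
    p_bin, g_bin = binarize(pred, thresh), binarize(gt, 0.5)
    pairs = [(p, g) for pr, gr in zip(p_bin, g_bin) for p, g in zip(pr, gr)]
    tp = sum(1 for p, g in pairs if p and g)
    fp = sum(1 for p, g in pairs if p and not g)
    fn = sum(1 for p, g in pairs if g and not p)
    if tp == 0:
        return 0.0  # no true positives: report zero instead of dividing by zero
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return (1 + beta2) * precision * recall / (beta2 * precision + recall)


def e_measure(pred: Map, gt: Map, thresh: float = 0.5, eps: float = 1e-12) -> float:
    """Enhanced-alignment measure of the binarized prediction, after [61]."""
    p_bin, g_bin = binarize(pred, thresh), binarize(gt, 0.5)
    n = len(gt) * len(gt[0])
    mu_p = sum(map(sum, p_bin)) / n
    mu_g = sum(map(sum, g_bin)) / n
    total = 0.0
    for pr, gr in zip(p_bin, g_bin):
        for p, g in zip(pr, gr):
            if mu_g == 0.0:      # empty GT: reward background predictions
                total += 1.0 - p
            elif mu_g == 1.0:    # all-foreground GT: reward foreground predictions
                total += p
            else:
                a, b = p - mu_p, g - mu_g                  # mean-removed values
                align = 2 * a * b / (a * a + b * b + eps)  # alignment matrix entry
                total += (align + 1) ** 2 / 4              # enhanced alignment entry
    return total / n


# Toy 2 x 2 example: half of the predicted foreground overlaps the GT.
pred = [[1.0, 1.0], [0.0, 0.0]]
gt = [[1.0, 0.0], [1.0, 0.0]]
print(mae(pred, gt), f_measure(pred, gt), e_measure(pred, gt))
# each value is 0.5 up to floating-point error
```

In the toy example, two of four pixels disagree, so MAE is 0.5; precision and recall are both 0.5, so the F-measure is 0.5 for any `beta2`; and the alignment entries are +1 on the agreeing pixels and -1 on the others, which gives an E-measure of 0.5. A benchmark evaluation would average such scores over all images of a dataset.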

4.1.3. Implementation Details

4.2. Comparison with SOTAs

4.2.1. Quantitative Comparison

4.2.2. Visual Comparison

- (1) Accurate object localization. The proposed model perceives and accurately locates salient objects of varied scales and shapes in various scenes and has excellent background suppression ability. In the small-object scenes in Figure 8a, most methods miss the aircraft and ships. For the river areas (i.e., the second row of (b) and the first row of (d) in Figure 8), LVNet [18], EGNet [22], and SMD [31] can only roughly discover the potential location of the object.

- (2) Sharp object edges. Obtaining clear object edges has always been a hot issue in the field of SOD. For all the challenging scenarios in Figure 8, the competitors can hardly produce a saliency map with sharp edges. In contrast, the proposed method obtains precise and reliable object edges, especially for small objects and low-contrast scenes.

- (3) Internal integrity of the object. The second image of (b) and the two images of (d) in Figure 8 show that most models, such as LVNet [18], F3Net [50], GateNet [59], and MINet [19], cannot maintain the integrity of the object in scenes containing slender and large targets. In comparison, our SARNet obtains internally consistent saliency maps.

4.2.3. Extension Experiment on NSI Datasets

4.3. Ablation Study

- Scalability. As shown in Table 1, the benchmark results on two RSI datasets show that the performance of SARNet can be effectively improved by using a better backbone, which demonstrates the scalability of the proposed network architecture. As shown by the extension experiments on NSI datasets in Table 2, the proposed model also achieves highly competitive detection performance on multiple natural scene datasets, which further shows the compatibility and robustness of our SARNet.

- Aggregation strategy. Table 3 quantitatively shows the interaction and contribution of the proposed semantic guidance and cascade refinement mechanism on two RSI datasets. “” and “” respectively involve only three low-level features () and three high-level features (). “” and “”, respectively, refer to only the combination of and in the semantic guidance stage. “” and “”, respectively, use the combination of and in the parallel feature fusion stage. It can be seen from the metric scores in Table 3 that the proposed model benefits from the additional global semantic features and the feature aggregation strategy adopted.

- Module. We evaluated the effectiveness of the proposed modules. The baseline model (BM) is a network with an FPN structure. We assembled the SGD and PAF modules on the BM during the experiment, and the scores are shown in Table 4. Note that, in these experiments, simple concatenation or addition operations replace the proposed feature aggregation modules when integrating multi-level features. From the experimental results on the two datasets, we can see that both the SGD and PAF modules improve the model’s performance to varying degrees. The PAF module contributes more to the network than the SGD module. With the combination of the two modules, the proposed model achieves the best performance.

4.4. Complexity Analysis

4.5. Failure Case

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chen, Z.; Zhang, T.; Ouyang, C. End-to-end airplane detection using transfer learning in remote sensing images. Remote Sens. 2018, 10, 139.

- Nie, T.; Han, X.; He, B.; Li, X.; Liu, H.; Bi, G. Ship detection in panchromatic optical remote sensing images based on visual saliency and multi-dimensional feature description. Remote Sens. 2020, 12, 152.

- Liu, Z.; Zhao, D.; Shi, Z.; Jiang, Z. Unsupervised Saliency Model with Color Markov Chain for Oil Tank Detection. Remote Sens. 2019, 11, 1089.

- Li, C.; Luo, B.; Hong, H.; Su, X.; Wang, Y.; Liu, J.; Wang, C.; Zhang, J.; Wei, L. Object detection based on global-local saliency constraint in aerial images. Remote Sens. 2020, 12, 1435.

- Wang, W.; Lai, Q.; Fu, H.; Shen, J.; Ling, H.; Yang, R. Salient object detection in the deep learning era: An in-depth survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

- Cong, R.; Lei, J.; Fu, H.; Cheng, M.M.; Lin, W.; Huang, Q. Review of visual saliency detection with comprehensive information. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2941–2959.

- Gao, Y.; Shi, M.; Tao, D.; Xu, C. Database saliency for fast image retrieval. IEEE Trans. Multimed. 2015, 17, 359–369.

- Ma, C.; Miao, Z.; Zhang, X.P.; Li, M. A saliency prior context model for real-time object tracking. IEEE Trans. Multimed. 2017, 19, 2415–2424.

- Zeng, Y.; Zhuge, Y.; Lu, H.; Zhang, L. Joint learning of saliency detection and weakly supervised semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7223–7233.

- Fan, D.P.; Ji, G.P.; Zhou, T.; Chen, G.; Fu, H.; Shen, J.; Shao, L. Pranet: Parallel reverse attention network for polyp segmentation. In Proceedings of the 23rd International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 263–273.

- Fan, D.P.; Ji, G.P.; Sun, G.; Cheng, M.M.; Shen, J.; Shao, L. Camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2777–2787.

- Zhao, D.; Ma, Y.; Jiang, Z.; Shi, Z. Multiresolution airport detection via hierarchical reinforcement learning saliency model. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 2855–2866.

- Hou, B.; Wang, Y.; Liu, Q. A saliency guided semi-supervised building change detection method for high resolution remote sensing images. Sensors 2016, 16, 1377.

- Feng, W.; Sui, H.; Tu, J.; Huang, W.; Sun, K. A novel change detection approach based on visual saliency and random forest from multi-temporal high-resolution remote-sensing images. Int. J. Remote Sens. 2018, 39, 7998–8021.

- Peng, Y.; Zhang, Z.; He, G.; Wei, M. An improved grabcut method based on a visual attention model for rare-earth ore mining area recognition with high-resolution remote sensing images. Remote Sens. 2019, 11, 987.

- Zhang, L.; Liu, Y.; Zhang, J. Saliency detection based on self-adaptive multiple feature fusion for remote sensing images. Int. J. Remote Sens. 2019, 40, 8270–8297.

- Li, C.; Cong, R.; Guo, C.; Li, H.; Zhang, C.; Zheng, F.; Zhao, Y. A parallel down-up fusion network for salient object detection in optical remote sensing images. Neurocomputing 2020, 415, 411–420.

- Li, C.; Cong, R.; Hou, J.; Zhang, S.; Qian, Y.; Kwong, S. Nested network with two-stream pyramid for salient object detection in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9156–9166.

- Pang, Y.; Zhao, X.; Zhang, L.; Lu, H. Multi-Scale Interactive Network for Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 9413–9422.

- Zhang, Q.; Cong, R.; Li, C.; Cheng, M.M.; Fang, Y.; Cao, X.; Zhao, Y.; Kwong, S. Dense Attention Fluid Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Image Process. 2020, 30, 1305–1317.

- Hou, Q.; Cheng, M.; Hu, X.; Borji, A.; Tu, Z.; Torr, P. Deeply Supervised Salient Object Detection with Short Connections. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 815.

- Zhao, J.X.; Liu, J.J.; Fan, D.P.; Cao, Y.; Yang, J.; Cheng, M.M. EGNet: Edge guidance network for salient object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8779–8788.

- Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 385–400.

- Zhao, T.; Wu, X. Pyramid feature attention network for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3085–3094.

- Zhao, J.X.; Cao, Y.; Fan, D.P.; Cheng, M.M.; Li, X.Y.; Zhang, L. Contrast prior and fluid pyramid integration for RGBD salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3927–3936.

- Fan, D.P.; Zhai, Y.; Borji, A.; Yang, J.; Shao, L. BBS-Net: RGB-D salient object detection with a bifurcated backbone strategy network. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 275–292.

- Yang, M.; Yu, K.; Zhang, C.; Li, Z.; Yang, K. Denseaspp for semantic segmentation in street scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3684–3692.

- Kim, J.; Han, D.; Tai, Y.W.; Kim, J. Salient region detection via high-dimensional color transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 883–890.

- Huang, Z.; Chen, H.X.; Zhou, T.; Yang, Y.Z.; Wang, C.Y.; Liu, B.Y. Contrast-weighted dictionary learning based saliency detection for VHR optical remote sensing images. Pattern Recognit. 2020, 113, 107757.

- Huang, Z.; Chen, H.; Liu, B. Deep Convolutional Sparse Coding Network for Salient Object Detection in VHR Remote Sensing Images. In Proceedings of the 17th International Computer Conference on Wavelet Active Media Technology and Information Processing, Chengdu, China, 18–20 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 361–365.

- Peng, H.; Li, B.; Ling, H.; Hu, W.; Xiong, W.; Maybank, S.J. Salient object detection via structured matrix decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 818–832.

- Zhou, L.; Yang, Z.; Zhou, Z.; Hu, D. Salient region detection using diffusion process on a two-layer sparse graph. IEEE Trans. Image Process. 2017, 26, 5882–5894.

- Huang, Z.; Chen, H.X.; Zhou, T.; Yang, Y.Z.; Wang, C.Y. Multi-level Cross-modal Interaction Network for RGB-D Salient Object Detection. Neurocomputing 2021, 452, 200–211.

- Li, G.; Yu, Y. Visual saliency based on multiscale deep features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 5455–5463.

- Zhao, R.; Ouyang, W.; Li, H.; Wang, X. Saliency detection by multi-context deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1265–1274.

- Deng, Z.; Hu, X.; Zhu, L.; Xu, X.; Qin, J.; Han, G.; Heng, P.A. R3net: Recurrent residual refinement network for saliency detection. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; AAAI Press: Menlo Park, CA, USA, 2018; pp. 684–690.

- Yao, X.; Han, J.; Guo, L.; Bu, S.; Liu, Z. A coarse-to-fine model for airport detection from remote sensing images using target-oriented visual saliency and CRF. Neurocomputing 2015, 164, 162–172.

- Chaudhari, S.; Polatkan, G.; Ramanath, R.; Mithal, V. An attentive survey of attention models. arXiv 2019, arXiv:1904.02874.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 7132–7141.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. arXiv 2015, arXiv:1506.02025.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3156–3164.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. Ccnet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 603–612.

- Kuen, J.; Wang, Z.; Wang, G. Recurrent attentional networks for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3668–3677.

- Chen, S.; Tan, X.; Wang, B.; Hu, X. Reverse attention for salient object detection. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 234–250.

- Wang, W.; Zhao, S.; Shen, J.; Hoi, S.C.; Borji, A. Salient object detection with pyramid attention and salient edges. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1448–1457.

- Fan, D.P.; Lin, Z.; Zhang, Z.; Zhu, M.; Cheng, M.M. Rethinking RGB-D Salient Object Detection: Models, Data Sets, and Large-Scale Benchmarks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 2075–2089.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778.

- Gao, S.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P.H. Res2net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662.

- Wei, J.; Wang, S.; Huang, Q. F3Net: Fusion, Feedback and Focus for Salient Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI Press: Menlo Park, CA, USA, 2020; pp. 12321–12328.

- Chen, Z.; Xu, Q.; Cong, R.; Huang, Q. Global context-aware progressive aggregation network for salient object detection. arXiv 2020, arXiv:2003.00651.

- Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Wang, L.; Lu, H.; Wang, Y.; Feng, M.; Wang, D.; Yin, B.; Ruan, X. Learning to detect salient objects with image-level supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 136–145.

- Yang, C.; Zhang, L.; Lu, H.; Ruan, X.; Yang, M.H. Saliency detection via graph-based manifold ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3166–3173.

- Chen, Z.; Xu, Q.; Cong, R.; Huang, Q. Global Context-Aware Progressive Aggregation Network for Salient Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI Press: Menlo Park, CA, USA, 2020; pp. 10599–10606.

- Liu, J.J.; Hou, Q.; Cheng, M.M.; Feng, J.; Jiang, J. A simple pooling-based design for real-time salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3917–3926.

- Zhao, X.; Pang, Y.; Zhang, L.; Lu, H.; Zhang, L. Suppress and balance: A simple gated network for salient object detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 35–51.

- Margolin, R.; Zelnik-Manor, L.; Tal, A. How to evaluate foreground maps? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 248–255.

- Fan, D.P.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.M.; Borji, A. Enhanced-alignment measure for binary foreground map evaluation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; AAAI Press: Menlo Park, CA, USA, 2018; pp. 698–704.

- Fan, D.P.; Cheng, M.M.; Liu, Y.; Li, T.; Borji, A. Structure-measure: A new way to evaluate foreground maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4548–4557.

- Zhang, L.; Dai, J.; Lu, H.; He, Y.; Wang, G. A bi-directional message passing model for salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1741–1750.

- Liu, N.; Han, J.; Yang, M.H. Picanet: Learning pixel-wise contextual attention for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3089–3098.

- Feng, M.; Lu, H.; Ding, E. Attentive feedback network for boundary-aware salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1623–1632.

- Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. Basnet: Boundary-aware salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7479–7489.

- Zhou, T.; Fan, D.P.; Cheng, M.M.; Shen, J.; Shao, L. RGB-D salient object detection: A survey. Comput. Vis. Media 2021, 7, 37–69.

Table 1. Quantitative comparison on the two RSI datasets (ORSSD and EORSSD).

| Models | ORSSD [18] | | | | | EORSSD [20] | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | Sξ ↑ | mEϕ ↑ | mFβ ↑ | wFβ ↑ | MAE ↓ | Sξ ↑ | mEϕ ↑ | mFβ ↑ | wFβ ↑ | MAE ↓ |
| HDCT [28] | 0.620 | 0.650 | 0.424 | 0.372 | 0.131 | 0.597 | 0.639 | 0.403 | 0.266 | 0.109 |
| DSG [32] | 0.719 | 0.734 | 0.575 | 0.566 | 0.104 | 0.643 | 0.661 | 0.462 | 0.402 | 0.125 |
| SMD [31] | 0.764 | 0.775 | 0.621 | 0.557 | 0.072 | 0.711 | 0.731 | 0.550 | 0.409 | 0.077 |
| R3Net † [36] | 0.814 | 0.868 | 0.738 | 0.738 | 0.040 | 0.819 | 0.831 | 0.632 | 0.418 | 0.017 |
| DSS † [21] | 0.826 | 0.836 | 0.696 | 0.621 | 0.036 | 0.787 | 0.764 | 0.582 | 0.461 | 0.019 |
| PFA † [24] | 0.861 | 0.855 | 0.731 | 0.672 | 0.024 | 0.836 | 0.866 | 0.679 | 0.549 | 0.016 |
| EGNet † [22] | 0.872 | 0.901 | 0.750 | 0.645 | 0.022 | 0.860 | 0.877 | 0.697 | 0.538 | 0.011 |
| MINet † [19] | 0.849 | 0.894 | 0.779 | 0.709 | 0.028 | 0.858 | 0.915 | 0.772 | 0.694 | 0.013 |
| GateNet † [59] | 0.893 | 0.927 | 0.827 | 0.763 | 0.015 | 0.880 | 0.904 | 0.770 | 0.643 | 0.011 |
| F3Net † [50] | 0.908 | 0.947 | 0.827 | 0.763 | 0.015 | 0.907 | 0.944 | 0.810 | 0.769 | 0.010 |
| SMFF ‡ [16] | 0.531 | 0.568 | 0.268 | 0.250 | 0.185 | 0.540 | 0.521 | 0.301 | 0.209 | 0.143 |
| CMC ‡ [3] | 0.603 | 0.642 | 0.345 | 0.311 | 0.127 | 0.580 | 0.590 | 0.270 | 0.201 | 0.106 |
| LVNet †‡ [18] | 0.882 | 0.926 | 0.800 | 0.751 | 0.021 | 0.864 | 0.883 | 0.736 | 0.631 | 0.015 |
| DAFNet †‡ [20] | 0.919 | 0.954 | 0.844 | 0.756 | 0.011 | 0.918 | 0.938 | 0.798 | 0.652 | 0.005 |
| SARNet-VGG16 | 0.913 | 0.948 | 0.862 | 0.851 | 0.019 | 0.924 | 0.955 | 0.854 | 0.830 | 0.010 |
| SARNet-ResNet50 | 0.921 | 0.952 | 0.867 | 0.853 | 0.014 | 0.926 | 0.955 | 0.849 | 0.832 | 0.008 |
| SARNet-Res2Net50 | 0.935 | 0.966 | 0.887 | 0.872 | 0.010 | 0.929 | 0.961 | 0.857 | 0.824 | 0.008 |

Table 2. Extension experiment on three NSI datasets.

| Year | Models | DUT-OMRON [56] | | | | DUTS-TE [55] | | | | HKU-IS [34] | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | Sξ ↑ | mEϕ ↑ | wFβ ↑ | MAE ↓ | Sξ ↑ | mEϕ ↑ | wFβ ↑ | MAE ↓ | Sξ ↑ | mEϕ ↑ | wFβ ↑ | MAE ↓ |
| 2018 | BMPM [63] | 0.809 | 0.837 | 0.681 | 0.064 | 0.862 | 0.860 | 0.761 | 0.049 | 0.907 | 0.937 | 0.859 | 0.039 |
| 2018 | PiCA [64] | 0.832 | 0.841 | 0.695 | 0.065 | 0.869 | 0.862 | 0.754 | 0.043 | 0.904 | 0.936 | 0.840 | 0.042 |
| 2018 | RAS [44] | 0.814 | 0.846 | 0.695 | 0.062 | 0.839 | 0.861 | 0.740 | 0.059 | 0.887 | 0.929 | 0.843 | 0.045 |
| 2019 | PAGE [45] | 0.824 | 0.853 | 0.722 | 0.062 | 0.854 | 0.869 | 0.769 | 0.052 | 0.903 | 0.940 | 0.866 | 0.037 |
| 2019 | AFNet [65] | 0.826 | 0.853 | 0.717 | 0.057 | 0.867 | 0.879 | 0.785 | 0.046 | 0.905 | 0.942 | 0.869 | 0.036 |
| 2019 | BASNet [66] | 0.836 | 0.869 | 0.751 | 0.056 | 0.866 | 0.884 | 0.803 | 0.048 | 0.909 | 0.946 | 0.889 | 0.032 |
| 2020 | F3Net [50] | 0.838 | 0.870 | 0.747 | 0.053 | 0.888 | 0.902 | 0.835 | 0.035 | 0.917 | 0.953 | 0.900 | 0.028 |
| 2020 | GateNet [59] | 0.838 | 0.862 | 0.729 | 0.055 | 0.885 | 0.889 | 0.809 | 0.040 | 0.915 | 0.949 | 0.880 | 0.033 |
| 2020 | MINet [19] | 0.833 | 0.865 | 0.738 | 0.055 | 0.884 | 0.898 | 0.825 | 0.037 | 0.919 | 0.953 | 0.897 | 0.029 |
| | SARNet | 0.843 | 0.873 | 0.773 | 0.058 | 0.890 | 0.904 | 0.827 | 0.037 | 0.920 | 0.956 | 0.914 | 0.028 |

Table 3. Ablation study of the aggregation strategy on the two RSI datasets.

| Settings | ORSSD [18] | | | | | EORSSD [20] | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | Sξ ↑ | mEϕ ↑ | mFβ ↑ | wFβ ↑ | MAE ↓ | Sξ ↑ | mEϕ ↑ | mFβ ↑ | wFβ ↑ | MAE ↓ |
| | 0.875 | 0.831 | 0.742 | 0.682 | 0.032 | 0.843 | 0.825 | 0.732 | 0.688 | 0.031 |
| | 0.889 | 0.862 | 0.778 | 0.691 | 0.022 | 0.881 | 0.846 | 0.755 | 0.681 | 0.026 |
| | 0.904 | 0.918 | 0.833 | 0.792 | 0.019 | 0.894 | 0.901 | 0.812 | 0.773 | 0.023 |
| | 0.918 | 0.931 | 0.866 | 0.849 | 0.014 | 0.902 | 0.928 | 0.838 | 0.792 | 0.017 |
| | 0.924 | 0.938 | 0.873 | 0.864 | 0.012 | 0.920 | 0.946 | 0.845 | 0.816 | 0.011 |
| | 0.917 | 0.927 | 0.868 | 0.853 | 0.014 | 0.916 | 0.937 | 0.841 | 0.810 | 0.013 |
| SARNet | 0.935 | 0.966 | 0.887 | 0.872 | 0.010 | 0.929 | 0.961 | 0.857 | 0.824 | 0.008 |

Table 4. Ablation study of the proposed modules on the two RSI datasets.

| Settings | | | ORSSD [18] | | | | | EORSSD [20] | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BM | SGD | PAF | Sξ ↑ | mEϕ ↑ | mFβ ↑ | wFβ ↑ | MAE ↓ | Sξ ↑ | mEϕ ↑ | mFβ ↑ | wFβ ↑ | MAE ↓ |
| ✔ | | | 0.807 | 0.855 | 0.727 | 0.742 | 0.047 | 0.796 | 0.832 | 0.711 | 0.724 | 0.045 |
| ✔ | ✔ | | 0.868 | 0.896 | 0.812 | 0.800 | 0.028 | 0.857 | 0.894 | 0.811 | 0.793 | 0.024 |
| ✔ | | ✔ | 0.876 | 0.923 | 0.834 | 0.815 | 0.018 | 0.867 | 0.927 | 0.835 | 0.807 | 0.017 |
| ✔ | ✔ | ✔ | 0.935 | 0.966 | 0.887 | 0.872 | 0.010 | 0.929 | 0.961 | 0.857 | 0.824 | 0.008 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, Z.; Chen, H.; Liu, B.; Wang, Z. Semantic-Guided Attention Refinement Network for Salient Object Detection in Optical Remote Sensing Images. Remote Sens. 2021, 13, 2163. https://doi.org/10.3390/rs13112163
