1. Introduction

Compared with visible-light and infrared remote sensing, microwave remote sensing can capture images regardless of weather and illumination conditions [1], penetrate clouds and vegetation, and detect ground targets. Microwave image data therefore provide information outside the infrared and visible range and play an important role in weather monitoring and disaster prediction. The microwave radiometer is one of the most important microwave remote sensing sensors. Because of limitations on antenna size, system noise, and scanning mode, the image data obtained by microwave radiometers have low spatial resolution, which does not satisfy current needs. For example, the inversion of soil moisture [2] requires the low-frequency data of microwave imagers, whose spatial resolution is particularly low. Additionally, some geophysical parameters require the combination of brightness temperature data from different bands [3,4,5,6,7]. Therefore, improving the resolution of microwave radiometer data through deconvolution is important.

The deconvolution of microwave radiometer data involves reconstructing an estimate of the brightness temperature image from the antenna temperature image. Two degradation factors are considered in the imaging process of microwave radiometers: the diffraction effect of the antenna pattern, which arises because the antenna size is finite, and the overlap of footprints in the sampling process [8].

Many algorithms have been proposed for radiometer image deconvolution, such as the reconstruction technique in Banach spaces [9], the Backus–Gilbert (BG) inversion method [10], and scatterometer image reconstruction (SIR) [11]. These algorithms were introduced to increase the spatial resolution of microwave radiometer image data. The reconstruction technique in Banach spaces enhances the spatial resolution by generalizing the gradient method. This approach allows the reduction of over-smoothing effects and oscillations without any reduction in the numerical complexity. The BG method uses redundant information from the footprint overlap region and prior knowledge of the antenna pattern to eliminate the overlap blur effect with an inverse matrix. The estimate of a brightness temperature image can then be obtained from the antenna temperature image using a previously reported procedure [12,13,14,15]. The SIR algorithm, a form of the multiplicative algebraic reconstruction technique (MART), obtains the optimal estimate of brightness temperature through an iterative process [16,17]. Although these methods can achieve good performance, they are limited because noise is also amplified when the resolution is increased too much. Another deconvolution method, based on Wiener filtering, was proposed to remove the diffraction blur due to the low-pass filtering effect of the antenna pattern. Wiener filtering reconstructs the brightness temperature [18,19] by using Fourier transform and filter theory to invert the convolution that occurs during measurement. However, serious ringing artifacts occur when the resolution is increased past a certain point.

The image deconvolution of radiometer data is a challenging inverse problem, and the key is to obtain an optimal solution given the complex degradation conditions. Wiener filtering can eliminate the influence of the diffraction blur of the radiometer data using a deconvolution operation, but the noise is also amplified during this process [18]. Furthermore, due to the influence of the curvature of the Earth and many degradation factors, the deconvolution technology based on Wiener filtering needs further improvement [8]. Overall, Wiener filtering cannot achieve accurate reconstruction results.

In this paper, a deconvolution method based on deep learning is proposed to remove the diffraction blur caused by the antenna pattern. Other degradation factors in the imaging process of the radiometer are handled within the same general learning-based framework. Unlike traditional Wiener filtering, which designs a filter model in the Fourier transform domain, the deconvolution process is treated here as a nonlinear regression problem in the spatial domain. A convolutional neural network (CNN), a deep learning model, is used to learn the deconvolution operation directly. In this procedure, the estimate of brightness temperature is obtained through feature extraction and multiple feature transformations. Prior knowledge of the antenna pattern of the microwave radiometer is required for this process.

This work presents a significant step toward the practical use of microwave radiometer brightness temperature data. Existing algorithms have shown limited performance on measured data. The CNN deconvolution method obtains a more accurate estimate of brightness temperature images using a large number of data and prior knowledge, compared to the Wiener filtering method. It is essential that microwave radiometer data can be used effectively.

The outline of this paper is as follows. Section 2 describes the background of the proposed algorithm. The data used and related work are introduced in Section 3. The method for image deconvolution using a CNN is described in Section 4. Experiments and results are described in Sections 5 and 6, respectively, followed by a discussion and conclusions in Sections 7 and 8, respectively.

2. Background

Deep learning [20] techniques are driving development in a variety of fields. For classical computer vision tasks such as image classification [21], image object detection [22], and image segmentation [23], deep learning methods have achieved very good results. Researchers have also proposed the use of deep learning techniques to improve the quality of natural images. Dong et al. [24] proposed deep learning for image super-resolution, presenting a CNN model that directly learns an end-to-end mapping between low- and high-resolution images. CNN-based image super-resolution has been studied further [25,26]. In Dietrich et al. [27], the convolutional neural network model was applied to image deblurring. CNNs have also achieved good results in image denoising [28,29].

Deep learning uses a multi-layer neural network to learn the internal features of an image and solves a variety of visual problems [30]. A universal remote sensing image quality improvement method using deep learning was reported [31]. In Ducournau and Fablet [32], a deep learning-based super-resolution algorithm was applied to ocean surface brightness temperature data. Research on deep learning techniques has demonstrated their potential for image restoration. However, little effort has been focused on remote sensing images, especially microwave remote sensing images. In our work, deep learning is proposed to improve the image quality of microwave radiometer brightness temperature data, addressing the degradation caused by the finite size of the antenna.

The microwave radiometer data are affected by many degradation factors, but existing deconvolution algorithms have some deficiencies. For example, the Backus–Gilbert (BG) algorithm is limited by noise amplification when reconstructing data. Wiener filtering needs different point spread functions (PSFs) to be designed to adapt to the change in the Earth’s curvature. These algorithms tend to solve one major degradation factor, whereas other factors require further processing. The degradation factors (e.g., the Earth’s curvature, system noise, and deformation caused by the antenna reflection surface) are relatively complicated during the actual working of the radiometer. Hence, in this case, the complexity of the deconvolution model increases and the reconstruction accuracy cannot be guaranteed.

For complex degradation during the imaging process, a CNN is expected to be able to accurately perform deconvolution operations on the radiometer data. During this process, the CNN simultaneously considers many kinds of degradation factors and then obtains accurate reconstruction results through multiple feature transformations. The contributions of this paper are as follows. (1) Aimed at the complex degradation of radiometer data, a deep learning-based general framework is proposed to address the degradation problem caused by a multitude of factors. (2) The CNN was found to be able to achieve higher reconstruction accuracy for radiometer data using multiple feature space transformation. (3) A flexible dataset creation method is proposed. By using the characteristics of the multiple radiometer frequency bands, the high-resolution channel data were selected as the ground truth image of the dataset to train the model.

4. Method

4.1. Radiometer Image Degradation

Image degradation is caused by several factors, including the antenna pattern, system noise, and geometric deformation. As a priori information, these factors were included in the dataset through the image degradation process. For feature learning in our study, the 89 GHz antenna temperature image was used as the 18.7 GHz simulated apparent temperature. The 18.7 GHz simulated antenna temperature was obtained from the 18.7 GHz simulated apparent temperature and prior information based on Equation (3). The CNN model trained on this dataset directly estimates the apparent temperature image. In the reconstruction process, the CNN considers a variety of degradation factors and is able to handle the connections among these factors. The PSF, which includes the geometric deformation and system noise in Equation (3), is described in detail below.

The 18.7 GHz channel antenna pattern was selected as the blur kernel, and the degradation image was obtained from the convolution between the 18.7 GHz simulated scene apparent temperature and the blur kernel. During deconvolution, the PSF was used to perform the convolution process, instead of directly using the antenna pattern function. The antenna pattern of the 18.7 GHz channel was determined by the half power beam width of the antenna, which is shown in Table 1. The PSF was simulated from the known 18.7 GHz antenna pattern by using a Gaussian function.
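As a rough illustration of simulating a PSF from a half power beam width with a Gaussian function, the sketch below builds a normalized two-dimensional kernel. The conversion from half-power width to standard deviation is the standard Gaussian full-width-at-half-maximum relation; the widths in pixels, the kernel size, and the function name `gaussian_psf` are assumptions made for illustration and are not values from Table 1.

```python
import math

def gaussian_psf(hpbw_x, hpbw_y, size):
    """Return a size x size Gaussian kernel (list of rows) that sums to 1.

    hpbw_x, hpbw_y: half-power widths in pixels along each axis (assumed units).
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a center pixel")
    # Full width at half maximum -> standard deviation of a Gaussian.
    k = 2.0 * math.sqrt(2.0 * math.log(2.0))
    sx, sy = hpbw_x / k, hpbw_y / k
    c = size // 2
    kernel = [[math.exp(-0.5 * (((x - c) / sx) ** 2 + ((y - c) / sy) ** 2))
               for x in range(size)] for y in range(size)]
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]

# Hypothetical elliptical footprint: 4 pixels wide, 6 pixels tall.
psf = gaussian_psf(hpbw_x=4.0, hpbw_y=6.0, size=15)
```

With these assumed widths, the kernel falls to half of its peak value 2 pixels from the center along x and 3 pixels along y, which is what a half-power width of 4 and 6 pixels means.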

In the MWRI scanning process, the geometric parameters of the data along the same track direction are consistent, so only the initial scan line was considered in the PSF design. To simulate the effects of the geometric deformation of the Earth on the data, 254 PSFs were used to degrade the entire image, which aligns the degradation process more closely with the actual imaging situation. The final reconstructed image is assembled by taking one line from each reconstructed image. The reconstruction is expressed as

$$\hat{T}_B(x,y) = \sum_{i=1}^{254} w_i(x,y)\,\mathcal{F}^{-1}\left\{ Q\big(H_i(u,v)\big)\,\mathcal{F}\{T_A\}(u,v) \right\}$$

where $w_i(x,y)$ is a weighting function, $\hat{T}_B$ is the estimate of apparent temperature, $T_A$ is the antenna temperature, and $H_i(u,v)$ is the two-dimensional Fourier transform of the $i$th PSF. $Q$ is the deconvolution function of $H_i(u,v)$. The weighting function, which chooses the right row in the image, is the Kronecker delta function. That is,

$$w_i(x,y) = \delta_{i,y}$$

The PSF design contributes to image deconvolution and effectively enhances the edge. During Wiener filtering deconvolution [8], the calculation of each image requires many deconvolution operations that are time-consuming and highly complex. In our algorithm, the geometric deformation was seen as a priori information, and the PSF design was only executed in the degradation process of creating the dataset. The prior information was learned by the CNN model using a trained model, reducing the time requirement and complexity of the model.
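The row selection performed by the Kronecker delta weighting can be sketched as follows. This is a minimal illustration, assuming that one full reconstructed image is available per PSF and that the image has as many rows as there are PSFs; the function name `assemble_rows` and the toy data are hypothetical.

```python
def assemble_rows(per_psf_images):
    """Build the final image by taking row i from the i-th reconstruction.

    per_psf_images[i] is the full image reconstructed with the i-th PSF;
    every image must have one row per PSF.
    """
    n = len(per_psf_images)
    for img in per_psf_images:
        if len(img) != n:
            raise ValueError("each image needs one row per PSF")
    # The Kronecker delta weight keeps only row i of image i.
    return [list(per_psf_images[i][i]) for i in range(n)]

# Toy example with 3 PSFs: image i is filled with the constant i.
images = [[[i] * 4 for _ in range(3)] for i in range(3)]
final = assemble_rows(images)
print(final)  # [[0, 0, 0, 0], [1, 1, 1, 1], [2, 2, 2, 2]]
```

The sketch also makes the cost visible: a full deconvolution is computed for every PSF, yet only one row of each result is kept.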

The system noise was simulated by randomly generating Gaussian white noise. The noise standard deviation of each channel is its NEΔT, which is shown in Table 1. The NEΔT of the 18.7 GHz channel is 0.5 K, and noise at this level was added after the convolution operation in the image degradation process.

The image degradation model was constructed with the PSF design, including the Earth’s geometric deformation and the system noise in the 18.7 GHz channel. The model can be implemented in the Fourier transform domain. The dataset was composed of the simulated scene apparent temperature and the degraded image obtained by the degradation model. Since the MWRI data range is 0–340 K, the data can be normalized to [0, 1] to achieve a faster convergence rate [38]. The normalized result was used to prepare the dataset for the deep neural network and model training:

$$T_{\mathrm{norm}} = \frac{T - T_{\min}}{T_{\max} - T_{\min}}$$

where $T$ is the raw antenna temperature data, $T_{\mathrm{norm}}$ is the normalized data, $T_{\min}$ is 0, and $T_{\max}$ is 340.

In this section, a degradation model with multiple degradation factors is established. High-resolution 89 GHz channel data as the 18.7 GHz simulated apparent temperature was used to obtain the 18.7 GHz simulated antenna temperature by this degradation model. The 18.7 GHz simulated apparent temperature data and the 18.7 GHz simulated antenna temperature data constitute a dataset that contains a variety of prior information to train the model. In future work, this flexible method can build a real degradation model according to the actual situation.
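The degradation chain summarized above (blur with a PSF, add Gaussian white noise with a standard deviation of 0.5 K, normalize from 0–340 K to [0, 1]) can be sketched in a few lines. This is only an illustration under stated assumptions: a single PSF is used for the whole image instead of 254 row-dependent PSFs, the convolution is done in the spatial domain with edge replication, and the names `convolve_same` and `degrade` are hypothetical.

```python
import random

def convolve_same(image, kernel):
    """2-D convolution with edge replication; output has the input's shape."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j in range(kh):
                for i in range(kw):
                    # Flip the kernel (true convolution) and clamp at borders.
                    yy = min(max(y + oy - j, 0), h - 1)
                    xx = min(max(x + ox - i, 0), w - 1)
                    acc += kernel[j][i] * image[yy][xx]
            out[y][x] = acc
    return out

def degrade(scene, psf, noise_std=0.5, t_min=0.0, t_max=340.0, rng=None):
    """Blur, add Gaussian white noise (in kelvin), then scale to [0, 1]."""
    rng = rng or random.Random(0)  # fixed seed only for reproducibility
    blurred = convolve_same(scene, psf)
    noisy = [[v + rng.gauss(0.0, noise_std) for v in row] for row in blurred]
    return [[(v - t_min) / (t_max - t_min) for v in row] for row in noisy]

# Toy scene: a uniform 170 K field blurred with a 3 x 3 box kernel.
scene = [[170.0] * 5 for _ in range(4)]
box = [[1.0 / 9.0] * 3 for _ in range(3)]
clean = degrade(scene, box, noise_std=0.0)  # every pixel is close to 0.5
noisy = degrade(scene, box)                 # same, with 0.5 K noise added
```

A uniform 170 K scene maps to 0.5 after normalization, which gives a quick sanity check that the kernel is normalized and the scaling is applied after the noise.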

4.2. Microwave Radiometer Image Deconvolution

The aim of radiometer image deconvolution is to obtain the optimal estimate of the original scene apparent temperature image $\hat{T}_B$ from the antenna temperature image $T_A$. As mentioned in the previous section, a diffraction blur occurs in the antenna temperature image $T_A$ due to the low-pass filtering effect of the antenna pattern. The deconvolution process is the inverse of the radiation measurement in Equation (3), where $T_B$ is obtained by assuming that $T_A$, $H$, and $N$ are known. As shown in Equation (8), the linear Wiener filter is defined in the Fourier domain to obtain the optimal apparent temperature estimate:

$$\hat{T}_B(u,v) = \frac{H^{*}(u,v)}{|H(u,v)|^{2} + P_N(u,v)/P_{T_B}(u,v)}\,T_A(u,v) \quad (8)$$

where $H^{*}$ is the complex conjugate of $H$, and $P_N$ and $P_{T_B}$ are the power spectra of the noise and of the scene, respectively.
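For comparison with the learning-based approach, a one-dimensional Wiener deconvolution can be sketched as below. It is a simplified illustration, assuming circular convolution, a constant noise-to-signal power ratio `nsr` in place of the full power spectra, and a naive discrete Fourier transform so that the example needs no external libraries; the function names and the toy signal are hypothetical.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (adequate for short rows)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * f * t / n)
               for t in range(n)) for f in range(n)]
    return [v / n for v in out] if inverse else out

def wiener_deconvolve(observed, psf, nsr):
    """Deconvolve a circularly blurred 1-D signal.

    nsr: assumed constant noise-to-signal power ratio (acts as a regularizer).
    """
    H = dft(psf)
    G = dft(observed)
    F = [h.conjugate() / (abs(h) ** 2 + nsr) * g for h, g in zip(H, G)]
    return [v.real for v in dft(F, inverse=True)]

# Toy row: a narrow warm feature blurred by a symmetric three-tap kernel.
signal = [0.0, 0.0, 1.0, 4.0, 2.0, 0.0, 0.0, 0.0]
psf = [0.6, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]
n = len(signal)
observed = [sum(psf[k] * signal[(t - k) % n] for k in range(n)) for t in range(n)]
restored = wiener_deconvolve(observed, psf, nsr=1e-12)
```

In this noise-free toy case a very small `nsr` recovers the signal almost exactly. With real noise, a larger `nsr` is needed, which trades sharpness for stability.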

In our algorithm, the microwave radiometer image deconvolution problem is defined as a regression problem in the spatial domain. Within the Bayesian inference framework, maximum a posteriori (MAP) estimation finds the optimal scene apparent temperature $\hat{T}_B$:

$$\hat{T}_B = \arg\max_{T_B}\; \log P(T_A \mid T_B) + \log P(T_B) \quad (9)$$

where $\log P(T_A \mid T_B)$ is the log-likelihood of the antenna temperature $T_A$, and $\log P(T_B)$ corresponds to the prior distribution of $T_B$. Equation (9) can be transformed to represent the loss function:

$$\hat{T}_B = \arg\min_{T_B}\; \frac{1}{2}\,\| T_A - H * T_B \|^{2} + \lambda\,\Phi(T_B) \quad (10)$$

where $\hat{T}_B$ can be obtained by minimizing the fidelity term $\frac{1}{2}\| T_A - H * T_B \|^{2}$, and the regularization term $\lambda\,\Phi(T_B)$ restricts the learning ability of the model on the dataset so that the trained model generalizes better to new data. The larger the value of the parameter $\lambda$, the greater the penalty for the model.
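In training terms, one common way to realize a loss with a fidelity term and a penalty term is a mean squared error between the network output and the ground truth plus an L2 penalty on the weights. The sketch below shows that reading; the choice of an L2 penalty, the flat-list data layout, and the name `regularized_loss` are assumptions for illustration rather than the formulation used in the paper.

```python
def regularized_loss(predicted, target, weights, lam):
    """Half mean squared error plus an L2 penalty on the weights."""
    n = len(predicted)
    if n == 0 or n != len(target):
        raise ValueError("predicted and target must be non-empty and equal length")
    fidelity = 0.5 * sum((p - t) ** 2 for p, t in zip(predicted, target)) / n
    penalty = lam * sum(w * w for w in weights)
    return fidelity + penalty

# The same prediction error costs more as the penalty weight grows.
no_penalty = regularized_loss([1.0, 2.0], [1.0, 4.0], [1.0, -1.0], lam=0.0)
with_penalty = regularized_loss([1.0, 2.0], [1.0, 4.0], [1.0, -1.0], lam=0.1)
```

Raising `lam` increases the cost of large weights, which is the sense in which a larger parameter penalizes the model more heavily.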

From the deep learning point of view, the CNN looks for a mapping from $T_A$ to $T_B$. The radiometric measurement information is included in the antenna temperature image. By knowing the detailed radiometric measurements, the hidden information can be found to estimate the apparent temperature image.

The proposed algorithm was implemented in two main steps. The first step was to establish the image degradation model by using the radiation measurement in Equation (3). For the known simulated apparent temperature, the observed antenna temperature image was obtained using the image degradation model. In the second step, deep learning was used to establish a CNN model that learns the mapping between $T_A$ and $T_B$, and the estimates $\hat{T}_B$ of the images were then obtained. As a priori information, the antenna pattern H and the system noise N are required by the image degradation model. Unlike the design of a Wiener filter, the a priori information is not considered when establishing the CNN model; it is only used in the first step to obtain $T_A$.

4.3. Network Architecture

Convolutional neural networks (CNNs) are feed-forward artificial neural networks with strong feature extraction and mapping ability. For MWRI image deconvolution, the CNN model is adopted to learn the mapping that yields the estimate of the apparent temperature image. The network architecture of the proposed CNN is shown in Figure 2. In this CNN structure, three convolution layers are used: two layers for feature extraction and one for the reconstruction.

In each convolution layer, several convolution kernels are defined, which are used to carry the information from the real apparent temperature image to the antenna temperature image. In the convolution process, the input is a two-dimensional pixel matrix, and the convolution kernel moves over the entire image to produce the final output image, called the feature map. Each convolution layer has many convolution kernels, and each convolution kernel produces a corresponding feature map on the input image.

The rectified linear unit (ReLU) was chosen as the activation function for the first two convolution layers, since it has a faster convergence rate than the sigmoid activation unit [39]. Equation (11) is the ReLU function, where the output $f$ is obtained from the input $x$. Equation (12) is the activation function for the third convolution layer, which reconstructs the final output.

$$f(x) = \max(0, x) \quad (11)$$

$$f(x) = x \quad (12)$$

Each convolutional layer plays a different role in the CNN model. Feature extraction and representation can be implemented in the first layer. This operation extracts overlapping patches from the antenna temperature image and represents each patch as a high-dimension vector. The second layer implements non-linear mapping. This operation nonlinearly maps each high-dimensional vector onto another high-dimensional vector. The final reconstruction process is completed in the last layer. This operation aggregates these high-resolution patch-wise representations to generate the final reconstructed temperature image. This image is expected to be similar to the ground truth apparent temperature.

During the training process, the inputs of the CNN model are the apparent temperature image and the antenna temperature image, the latter obtained by degrading the apparent temperature image. The main aim of training is to obtain the optimal weight parameters of the CNN model. Once the model is trained, the antenna temperature image alone is used as the input to obtain the expected apparent temperature image. In this process, no preprocessing of the image is necessary.

5. Experiment

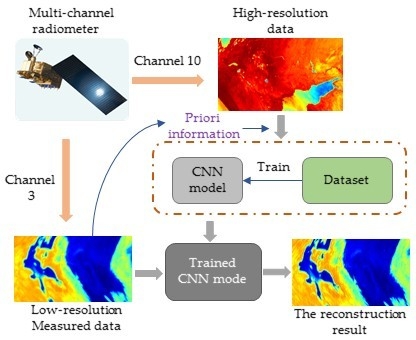

In this paper, the MWRI image deconvolution regression task was implemented by a supervised CNN model that could reconstruct the 18.7 GHz real scene apparent temperature from the observed antenna temperature images. The image deconvolution process is shown in Figure 3. Channels 1 to 10 correspond to the low-to-high-frequency bands in Table 1, respectively, so that channel 10 is 89 GHz with H polarization, and channel 4 is 18.7 GHz with H polarization.

The process contains three major steps: image degradation and dataset creation, training and testing, and reconstruction of both the degraded images in the dataset and the real antenna temperature image. Image degradation is an important step: it simulates the generation of the observed images and produces the dataset. The dataset is then used to train the CNN model. The trained CNN model reconstructs the degraded images in the dataset and the 18.7 GHz measured image data.

In Section 4, the CNN framework was defined as a three-layer network architecture. Dong et al. [24] determined a typical set of parameters: the first convolution layer had 64 convolution kernels, and the second contained 32. However, the targets observed by MWRI are the Earth’s land, oceans, and other geographical objects, and the edge changes of temperature images are less complex than those of natural images. An alternative set of network parameters was therefore used in our network structure, with 20 convolution kernels in the first layer and 10 in the second. The experimental results showed that fewer convolution kernels still achieved good results, with a gain of about 5 dB in peak signal-to-noise ratio (PSNR) compared to the Wiener filtering deconvolution method.

To obtain better features, the antenna temperature image data of the 89 GHz channel, comprising 60 images from January to July 2017, were selected as the 18.7 GHz apparent temperature image dataset. The ground resolution of the selected images was 9 × 15 km, which is the highest resolution of all MWRI channels, meaning the temperature features can be effectively extracted from these images. In addition to the apparent temperature images, the dataset contained the degraded images obtained by image degradation. Ten images were randomly selected as the testing set, and the remaining 50 images served as the training set. In the training process, the images in the dataset were cropped into patches of size (33,33). The input image size of the CNN model was (33,33), and the size of each convolution kernel in the first layer was (9,9), so the output feature size of the first layer was (33−9+1, 33−9+1, 20), which is (25,25,20). The second layer had 10 kernels of size (5,5), so the size of its output feature was (25−5+1, 25−5+1, 10), which is (21,21,10). In the final layer, a single (5,5) convolution kernel was used to output the reconstructed image through the convolution operator. The size of the final output image was (17,17).
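The layer sizes quoted above follow from the rule that a stride-1 convolution without padding shrinks each spatial dimension by the kernel size minus one. The short sketch below reproduces that arithmetic and also counts trainable parameters; the presence of one bias per output channel is an assumption, since the text does not state whether biases were used.

```python
def valid_output_size(n, k):
    """Spatial size after a stride-1 convolution without padding."""
    return n - k + 1

# (kernel size, input channels, output channels) for the three layers.
layers = [(9, 1, 20), (5, 20, 10), (5, 10, 1)]

size = 33
shapes = []
n_params = 0
for k, c_in, c_out in layers:
    size = valid_output_size(size, k)
    shapes.append((size, size, c_out))
    # Weights plus one bias per output channel (biases are an assumption).
    n_params += k * k * c_in * c_out + c_out

print(shapes)    # [(25, 25, 20), (21, 21, 10), (17, 17, 1)]
print(n_params)  # 6901
```

Because the output is 17 × 17 while the input patch is 33 × 33, a training target in such a setup would presumably be the central 17 × 17 region of the ground truth patch, that is, the patch with an 8-pixel border removed.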

After the parameters were determined, backpropagation and stochastic gradient descent (SGD) were used for training [40]. The training samples were divided into minibatches of 128 samples each. During training, the weights $w$ were updated with Equations (13) and (14):

$$v_{i+1} = \mu\, v_i - \lambda\,\epsilon\, w_i - \epsilon\, \left.\frac{\partial L}{\partial w}\right|_{w_i} \quad (13)$$

$$w_{i+1} = w_i + v_{i+1} \quad (14)$$

where $w_{i+1}$ is the weight of the next iteration after the $i$th iteration, $v$ is the momentum variable, and $\left.\frac{\partial L}{\partial w}\right|_{w_i}$ is the gradient of the cost function with respect to the weights. In Equation (13), the base learning rate $\epsilon$ is 0.0001, the momentum $\mu$ is 0.9, and the weight decay $\lambda$ is 0.0001. The weights in each layer were initialized from a Gaussian distribution with standard deviation 0.001.
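A momentum update with weight decay of the kind described here can be written compactly. The sketch below is an illustration that assumes the common form in which the decay term is added to the gradient before scaling by the learning rate; the default values mirror the learning rate, momentum, and weight decay quoted in the text, while the function name and the list-based layout are hypothetical.

```python
def sgd_momentum_step(w, v, grad, lr=1e-4, momentum=0.9, weight_decay=1e-4):
    """One SGD update with momentum and L2 weight decay.

    w, v, grad are equal-length lists; returns (new_w, new_v).
    """
    new_v = [momentum * vi - lr * (gi + weight_decay * wi)
             for wi, vi, gi in zip(w, v, grad)]
    new_w = [wi + nvi for wi, nvi in zip(w, new_v)]
    return new_w, new_v

# Two steps on a single weight with a constant gradient (toy values).
w, v = [1.0], [0.0]
w, v = sgd_momentum_step(w, v, [2.0], lr=0.1, momentum=0.9, weight_decay=0.0)
w, v = sgd_momentum_step(w, v, [2.0], lr=0.1, momentum=0.9, weight_decay=0.0)
```

In the toy run the second step is larger than the first even though the gradient is unchanged, because the velocity carries over 90% of the previous update.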

The parameters of the CNN model were obtained by training with Caffe [41], an open-source deep learning package. After the training procedure was finished, the optimal CNN model parameters were obtained. The estimate of the apparent temperature image was obtained from the input image using the trained CNN model. In the image degradation process, the PSF of the 18.7 GHz channel was selected as the degradation function for training; the CNN model then reconstructed the degraded images in the dataset as well as the real measured images of the 18.7 GHz channel.

Figure 4 shows the internal reconstruction process used to estimate the apparent temperature image with the CNN model.

The reconstruction of this experiment contained two parts. The first part used the degraded images to reconstruct the corresponding estimate of apparent temperature images and evaluated the model. The second part input the 18.7 GHz real antenna temperature data into the trained CNN model to obtain the estimate of apparent temperature image.

The performance of the trained CNN model in the dataset is of key importance. The effect of the CNN model on the dataset must be monitored. However, the effect of reconstruction in the 18.7 GHz real measured data was also considered in our experiments, as it has more important engineering values for data usage. The goal of this work was for the trained model to ideally reconstruct both the measured data and the dataset.

6. Experimental Results

This part shows the experimental results that include the reconstruction results in the dataset and the real measured data in the 18.7 GHz channel. Before the results are displayed, the evaluation index of this work is outlined.

The image quality needs to be quantitatively described after the reconstruction of the dataset. Each pixel in the reconstruction image must be compared to that on the apparent temperature image, which served as a baseline image. The peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) were chosen to evaluate the quality of the images and the effect of the trained model [42].

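$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\big(I_1(i,j) - I_2(i,j)\big)^{2} \quad (15)$$

$$\mathrm{PSNR} = 10\,\log_{10}\!\left(\frac{\mathrm{MAX}^{2}}{\mathrm{MSE}}\right) \quad (16)$$

where $I_1(i,j)$ and $I_2(i,j)$ represent the temperature values of the baseline image and the image under evaluation at the same location, respectively, $M \times N$ is the image size, and $\mathrm{MAX}$ is the maximum possible pixel value. Equation (15) shows the mean square error (MSE), which represents the difference between the reconstruction image and the high-resolution image. The PSNR calculation is shown in Equation (16), which provides specific values to assess the quality of the reconstruction image. A larger value represents a better result.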
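The two error measures can be computed directly from their definitions. The sketch below is a minimal version, assuming images stored as lists of rows and a caller-supplied peak value (for example 1.0 for normalized data); the peak value actually used in the experiments is not stated in the text.

```python
import math

def mse(reference, estimate):
    """Mean squared error between two equally sized 2-D images."""
    diffs = [(r - e) ** 2
             for row_r, row_e in zip(reference, estimate)
             for r, e in zip(row_r, row_e)]
    return sum(diffs) / len(diffs)

def psnr(reference, estimate, peak):
    """PSNR in dB; returns infinity for identical images."""
    err = mse(reference, estimate)
    return math.inf if err == 0 else 10.0 * math.log10(peak ** 2 / err)

# Toy case: every pixel is off by 1 with an assumed peak of 10.
ref = [[0.0, 0.0], [0.0, 0.0]]
est = [[1.0, 1.0], [1.0, 1.0]]
value = psnr(ref, est, peak=10.0)  # MSE = 1, so PSNR = 10*log10(100) = 20 dB
```

Because PSNR is logarithmic in the MSE, a gain of about 5 dB corresponds to reducing the mean squared error by roughly a factor of three.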

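SSIM measures the similarity of the structure between the two images, and the formula is shown in Equation (17):

$$\mathrm{SSIM}(I_1, I_2) = \frac{(2\mu_1\mu_2 + C_1)(2\sigma_{12} + C_2)}{(\mu_1^{2} + \mu_2^{2} + C_1)(\sigma_1^{2} + \sigma_2^{2} + C_2)} \quad (17)$$

where $\mu_1$ and $\mu_2$ are the means of $I_1$ and $I_2$, $\sigma_1$ and $\sigma_2$ are their standard deviations, and $\sigma_{12}$ is the covariance of $I_1$ and $I_2$. $C_1$ and $C_2$ are constants used to ensure the stability of the formula. The SSIM value is between 0 and 1, and a value close to 1 represents a good image deconvolution effect.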
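A simplified SSIM can be sketched by computing the statistics once over the whole image. This is an assumption made for brevity: SSIM is normally averaged over local sliding windows, so the values below will differ from a windowed implementation. The constants follow the conventional choice $C_1 = (0.01\,L)^2$ and $C_2 = (0.03\,L)^2$ for dynamic range $L$; the function name `global_ssim` is hypothetical.

```python
def global_ssim(img1, img2, peak=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed over the whole image (simplified)."""
    x = [v for row in img1 for v in row]
    y = [v for row in img2 for v in row]
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))

a = [[0.1, 0.2], [0.3, 0.4]]
b = [[0.4, 0.3], [0.2, 0.1]]   # same values, reversed structure
same = global_ssim(a, a)       # identical images give a value of 1
different = global_ssim(a, b)  # reversed structure gives a lower value
```

The toy pair shares the same mean and variance but has opposite structure, which shows that the covariance term is what separates SSIM from a purely intensity-based measure.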

The test images contained 10 scenes of 254 × 1000 pixels. The evaluation index is shown in Table 2, and the Wiener filtering deconvolution results are displayed as a comparison for the CNN model. As shown in Table 2, the PSNR results of the CNN model achieved an average increase of 5.63 dB compared to Wiener filtering. The average SSIM also improved from 0.90 using Wiener filtering to 0.96 using the CNN model. Furthermore, the CNN model was faster than Wiener filtering, because the geometric deformation is simulated in the degradation process and fewer calculations are required in the reconstruction procedure. The average run time was 1.23 s using the CNN model and 8.36 s using Wiener filtering on an Intel i5 computer (the computer is from Lenovo Company in Beijing, China). The run time of the CNN model is only related to the number of parameters in the model. With Wiener filtering, however, the deconvolution operation requires multiple PSFs when the curvature of the Earth is considered, so the run time is influenced by the design of the PSF function. For example, for MWRI data, the deconvolution function contained 254 PSFs when considering the curvature of the Earth.

Figure 5 shows the Caspian Sea area in test image 10. Figure 5b is the degraded image obtained from the convolution between Figure 5a and the 18.7 GHz antenna pattern. Details and edges of the area of Caspian Sea become unclear due to the effect of the antenna pattern. Figure 5c displays the result using the Wiener filtering method, showing that the contours of the Aral Sea became more apparent, but some details are missing. The CNN model reconstructed a better result than the Wiener filtering. The edges and contours of the lake and coastline were closer to the original image (Figure 5a) after the CNN reconstruction, suggesting that the diffraction blur caused by the antenna pattern can be further eliminated and more information can be obtained using this proposed method.

Figure 6 and Figure 7 show the results for local areas in test images 9 and 7, respectively. The experimental results demonstrate that defining MWRI image deconvolution as a regression problem in the spatial domain and solving it with deep learning is effective.

In previous experiments, the CNN model was used and evaluated in the dataset. However, the validity of the CNN model for MWRI real measured data is still unknown. Figure 8 shows the experimental results for the MWRI 18.7 GHz real measured data in the Baltic Sea area. Figure 8b is the 36.5 GHz channel real measured data, and the black square in the image contains Saaremaa Island and Hiiumaa Island. Their contour is visible due to the high ground resolution (18 × 30 km). However, the edge of these islands is not visible in Figure 8a due to the low ground resolution (30 × 50 km) of the 18.7 GHz channel. Figure 8c shows that the improvements due to Wiener filtering are very limited, though this method has long been proposed to solve the diffraction blur problem. Therefore, this result is insufficient for practical applications. A better result, as processed by the CNN model, is displayed in Figure 8d. The contour of Saaremaa Island is visible after the CNN model reconstruction. Although the reconstruction effect for Hiiumaa Island is less obvious, the reconstruction results of the CNN model were much better than the 18.7 GHz antenna temperature image (Figure 8a) and the reconstruction image (Figure 8c) with Wiener filtering.

In Figure 9, the 140th row of data from Figure 8 is displayed. The region near the 50th point of this row is the Saaremaa Island area. Due to low resolution, only one peak exists in this area of the 18.7 GHz data distribution curve, so distinguishing the two islands is impossible. This area is also indistinguishable in the distribution curve obtained with Wiener filtering. However, the CNN reconstructed image has two peaks, like the 36.5 GHz image, so the two islands can be distinguished. This also shows that the CNN reconstructed image is closer to the real ground scene and achieves a more significant effect on the real measured data than Wiener filtering.

7. Discussion

The ground truth data used in our experiments to train the CNN model was derived from the high-frequency band MWRI data. Because the 18.7 GHz ground apparent temperature is difficult to obtain for feature learning, the 89 GHz antenna temperature image was used as the 18.7 GHz simulated scene apparent temperature to learn the feature. This is a flexible approach that incorporates the multi-band radiometer data and provides a considerable amount of training data, which is also beneficial for CNN extraction features. Using this dataset, the CNN can be trained to obtain optimal model parameters. The experiment shows that the reconstruction results of this method were much better than Wiener filtering.

To obtain better training effects, we increased the number of images in the dataset, up to a maximum of 150 images. However, we found that more data did not significantly improve the performance of the model. A good model was obtained using a dataset with 60 images, and continually increasing the number of images only increased the training time. This result suggests that the existing dataset covers enough temperature image features, so further increasing the number of images did not significantly contribute to the training of the model. In addition, since the 18.7 GHz ground truth apparent temperature was not available, we selected one row of data from the same position in each of the four plots in Figure 8 to evaluate the performance of the proposed method. However, this is not an accurate evaluation method, which is a limitation of this experiment. This problem should be addressed in future work. In the design of the CNN model, the hyperparameter set is one of the most important factors to consider, including the number of kernels, the size of kernels, and other training parameter settings. When experimentally tuning the model hyperparameters, different kernel sizes were selected, and we found that the kernel size affected the reconstructed image. For example, using a kernel of size nine in the first layer achieved good results because a large kernel can be more effective in extracting features, but increasing the kernel size also increases the number of parameters in the model. Therefore, the choice of network parameters is a trade-off between performance and speed.