DXN: Dynamic AI-Based Analysis and Optimisation of IoT Networks’ Connectivity and Sensor Nodes’ Performance

Abstract

1. Introduction

2. Literature Review

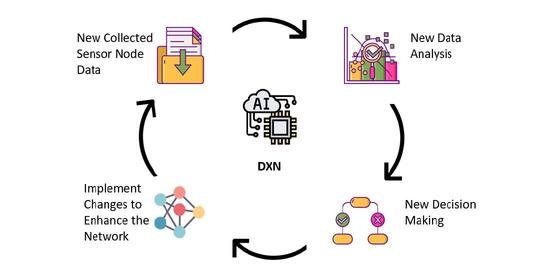

3. Design, Implementation and Test of the DXN Engine

3.1. Why DXN

- When more than one middle/bridging sensor node (SN) is available in the vicinity of an SN that is either blocked or out of gateway (GW) reach, DXN will decide which of these middle/bridging SNs is used to forward the blocked node's messages. This balances connectivity amongst the middle/bridging nodes, so that no bridging SN is used more heavily than the others.

- By analysing the transmission configuration used by each SN (for example, its Spreading Factor (SF) or LoRaWAN frequency channel), DXN can change these settings, or update the frequency channel list, of any SN affected by a harsh signal environment in order to prevent jitter or packet loss.

- To maintain a healthy IoT network with minimal duty cycle restrictions, DXN can adjust the sleep/active times of each SN in the network based on its current transmission/relevance status or on the state of the environment. In some networks, the rhythm of SN activity may change over time or with the environment, for example in agricultural applications.

- Further to the previous point, by analysing trends in the collected data, the activity of SNs could be predicted; “activity” would then become an effective factor in SN clustering, supporting better network management and load balancing on any chosen middle/bridging node.

- The effectiveness of the AI engine could be increased further by including simple additional information in the SN packets as inputs, which could have a significant impact. However, the choice of such extra data should be based on careful study, balancing the additional effort required of the SNs against the resulting improvement in the connectivity of the whole network. Examples of such data are:

- Security: security details to help detect or prevent certain attacks.

- Priority: priority details to help decide the response/processing time relative to other SNs.

- Type: the SN type in hybrid deployments, to help utilise each SN type efficiently.

- Location: location details to help make decisions suited to particular areas, such as deploying more or fewer SNs.
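The load-balancing idea in the first point above can be illustrated with a minimal sketch. It assumes, purely for illustration, that DXN tracks how many packets each candidate MN has already forwarded and whether the blocked SN can reach it; `Bridge` and `pick_bridge` are hypothetical names, and the simple least-loaded rule stands in for whatever criterion the DXN engine actually applies.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Bridge:
    """Hypothetical view of a candidate middle/bridging node (MN)."""
    node_id: str
    forwarded: int   # packets already relayed for blocked SNs (assumed load metric)
    in_range: bool   # whether the blocked SN can reach this bridge


def pick_bridge(candidates: Sequence[Bridge]) -> Optional[Bridge]:
    """Return the least-loaded reachable bridge, or None if none is reachable.

    Ties are broken by node_id so that the choice is deterministic.
    """
    reachable = [b for b in candidates if b.in_range]
    if not reachable:
        return None
    return min(reachable, key=lambda b: (b.forwarded, b.node_id))


if __name__ == "__main__":
    pool = [Bridge("MN1", 40, True), Bridge("MN2", 12, True), Bridge("MN3", 0, False)]
    chosen = pick_bridge(pool)
    print(chosen.node_id)  # MN2: MN3 is idle but out of range
    chosen.forwarded += 1  # record the new load so later choices stay balanced
```

Updating `forwarded` after every relay is what spreads repeated choices across bridges; a fuller policy could also weigh link quality or battery level, which this sketch leaves out.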

3.2. The Problem Statement

3.2.1. DXN Features

- Bridging optimisation: balance network usage by dynamically identifying new routes (i.e., alternative MNs) for any blocked SN, where available.

- Activity optimisation: balance the activity of the network by adjusting the SNs’ Tx intervals based on their activity in each geographical region.

- Transmission scheduling: schedule the transmission time of each blocked SN in the network to minimise its listening (wake-up) time and thus save battery.
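The transmission scheduling feature can be sketched under stated assumptions: each blocked SN is served by one MN whose uplinks (and therefore rescue messages) recur at a fixed Tx interval from a known start time, and a symmetric guard time absorbs clock drift. The names `next_rescue_time`, `plan_wakeup` and `guard_s` are hypothetical; the sketch only shows why aligning the wake-up with the MN's schedule shortens Class C listening compared with listening continuously until the next rescue message.

```python
from dataclasses import dataclass


def next_rescue_time(now_s: int, mn_first_tx_s: int, mn_interval_s: int) -> int:
    """Earliest MN uplink (and hence rescue message) at or after now_s."""
    if now_s <= mn_first_tx_s:
        return mn_first_tx_s
    periods = -(-(now_s - mn_first_tx_s) // mn_interval_s)  # ceiling division
    return mn_first_tx_s + periods * mn_interval_s


@dataclass
class WakePlan:
    wake_s: int                # when the blocked SN should switch its receiver on
    listen_s: int              # scheduled Class C listening time
    unscheduled_listen_s: int  # listening time if it waited from now_s instead


def plan_wakeup(now_s: int, mn_first_tx_s: int, mn_interval_s: int, guard_s: int) -> WakePlan:
    rescue_s = next_rescue_time(now_s, mn_first_tx_s, mn_interval_s)
    wake_s = max(now_s, rescue_s - guard_s)
    end_s = rescue_s + guard_s  # keep listening briefly after the expected rescue
    return WakePlan(wake_s, end_s - wake_s, end_s - now_s)


if __name__ == "__main__":
    # Example values only: the MN transmits hourly from t = 0, with a 5 s guard.
    print(plan_wakeup(now_s=100, mn_first_tx_s=0, mn_interval_s=3600, guard_s=5))
```

With these example values the blocked SN listens for 10 s instead of 3505 s; the saving shrinks as the SN becomes blocked closer to the MN's next uplink.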

3.2.2. Bridging Algorithm

1. Every SN will wake up as scheduled and try to send its message to the GW in normal Class A mode (observing the normal Class A frequencies and duty cycle restrictions). If it is unsuccessful after three tries (i.e., no acknowledgement message is received), it will switch itself to Class C (listening) mode. We label such a blocked SN as an EN.

2. If an SN succeeds in reaching the GW then, after transmitting its own message in Class A mode and receiving the corresponding acknowledgement message from the GW, it will transmit a “rescue message” on the Class C frequency, in the hope that a nearby EN will receive it and reply within its normal Class C single receive window. We label such a node as an MN. The rescue message will include a chosen Class A frequency on which the EN is expected to transmit its message, and to which the MN will listen during the receive window.

3. Once steps 1 and 2 are complete, if an EN receives a rescue message on the Class C frequency, it will transmit its message immediately, within the normal receive window timing, on the frequency stated in the rescue message. It will then stay in listening mode on this frequency until it receives an acknowledgement message via the MN. Otherwise, if nothing is received before a user-defined timer expires (set according to the network transmission intervals), or if the MN is permanently removed from the network, the EN will start again from step 1 (i.e., it reverts to being an SN operating in Class A), as this MN is considered an unreliable bridge.

4. If the MN receives an EN message within the receive window, it will forward it to the GW as a normal Class A message and, once the GW's acknowledgement arrives, forward that acknowledgement back to the EN on the pre-agreed frequency stated in the rescue message.

5. Both the MN and the EN will then go back to sleep and wake up in Class A at their next scheduled time, as in step 1.
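The EN side of these steps can be sketched as a small state machine. This is a minimal illustration, not the authors' implementation: radio operations are abstracted as callables, the MN side (steps 2 and 4) is omitted, and the class, method and mode names are hypothetical. The three-try limit and the acknowledgement timer come from the steps above; the 60 s timeout and the 868.3 MHz frequency used below are example values only.

```python
from enum import Enum, auto
from typing import Callable, Optional


class Mode(Enum):
    CLASS_A = auto()            # normal SN behaviour (asleep between scheduled uplinks)
    CLASS_C_LISTEN = auto()     # blocked: listening for a rescue message (node is an EN)
    AWAIT_RELAYED_ACK = auto()  # message sent via an MN, waiting for the forwarded ack


class EndNode:
    """Hypothetical EN-side sketch of steps 1, 3 and 5; radio I/O is passed in as callables."""

    def __init__(self, ack_timeout_s: float, max_tries: int = 3) -> None:
        self.mode = Mode.CLASS_A
        self.max_tries = max_tries          # the text uses three Class A tries
        self.ack_timeout_s = ack_timeout_s  # the user-defined timer from step 3
        self.relay_freq_hz: Optional[int] = None
        self.ack_deadline_s: Optional[float] = None

    def scheduled_uplink(self, send_to_gw: Callable[[], bool]) -> None:
        """Step 1: up to max_tries Class A attempts; send_to_gw() returns True if acked."""
        self.mode = Mode.CLASS_A
        for _ in range(self.max_tries):
            if send_to_gw():
                return  # delivered directly: stay in Class A and sleep
        self.mode = Mode.CLASS_C_LISTEN  # blocked: this SN now acts as an EN

    def on_rescue(self, freq_hz: int, now_s: float, send_on: Callable[[int], None]) -> None:
        """Step 3: answer a rescue message on the frequency it nominates."""
        if self.mode is not Mode.CLASS_C_LISTEN:
            return
        self.relay_freq_hz = freq_hz
        send_on(freq_hz)
        self.mode = Mode.AWAIT_RELAYED_ACK
        self.ack_deadline_s = now_s + self.ack_timeout_s

    def on_relayed_ack(self) -> None:
        """Steps 4-5: the MN forwarded the GW ack; sleep and restart in Class A."""
        if self.mode is Mode.AWAIT_RELAYED_ACK:
            self._reset()

    def tick(self, now_s: float) -> None:
        """Step 3 fallback: no ack before the timer expires means the MN is unreliable."""
        if self.mode is Mode.AWAIT_RELAYED_ACK and now_s > self.ack_deadline_s:
            self._reset()

    def _reset(self) -> None:
        self.mode = Mode.CLASS_A
        self.relay_freq_hz = None
        self.ack_deadline_s = None


if __name__ == "__main__":
    en = EndNode(ack_timeout_s=60)
    en.scheduled_uplink(lambda: False)  # GW unreachable on all three tries
    en.on_rescue(868_300_000, now_s=0.0, send_on=lambda f: None)
    en.tick(now_s=61.0)                 # no relayed ack within 60 s: back to Class A
    print(en.mode)
```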

3.3. DXN Algorithms and Suitable AI Engine Implementation

3.4. DXN Tests Scenarios and Experiments

3.4.1. Network Load-Based Optimisation Algorithm

3.4.2. Network Activity-Based Optimisation Algorithm

3.4.3. Nodes Transmission Scheduling Optimisation Algorithm

3.4.4. Overall Evaluation of DXN

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| EN# | Total Tx Packets | Without DXN: Packets through MN1 | Without DXN: Packets through MN2 | Without DXN: Successfully Delivered Packets | Without DXN: PDR | Without DXN: Avg. Overall Tx Time (s) | With DXN: Packets through MN1 | With DXN: Packets through MN2 | With DXN: Successfully Delivered Packets | With DXN: PDR | With DXN: Avg. Overall Tx Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| EN3 | 1152 | 569 | 583 | 1152 | 100% | 4.1 | - | 1152 | 1151 | 99.9% | 4.8 |
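The PDR entries for EN3 follow from the packet counts as delivered packets divided by transmitted packets. A short check, assuming PDR is expressed as a percentage rounded to one decimal place as in the table:

```python
def pdr_percent(delivered: int, transmitted: int) -> float:
    """Packet delivery ratio as a percentage, rounded to one decimal place."""
    return round(100 * delivered / transmitted, 1)


# EN3 figures from the table above.
total_tx = 1152
without_dxn = {"MN1": 569, "MN2": 583}
assert sum(without_dxn.values()) == total_tx  # every packet took one of the two bridges

print(pdr_percent(1152, total_tx))  # 100.0 (without DXN)
print(pdr_percent(1151, total_tx))  # 99.9 (with DXN: a single packet was lost)
```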

| MN# | Without DXN: Packets Sent to GW | Without DXN: Packets Forwarded to GW | Without DXN: Total Packets Sent | Without DXN: Capacity Percentage | With DXN: Packets Sent to GW | With DXN: Packets Forwarded to GW | With DXN: Total Packets Sent | With DXN: Capacity Percentage | Score |
|---|---|---|---|---|---|---|---|---|---|
| MN1 | 1152 | 2873 | 4025 | 349% | 1152 | 2304 | 3456 | 300% | 59 |
| MN2 | 1152 | 2887 | 4039 | 350% | 1152 | 3456 | 4608 | 400% | 82 |
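The Capacity Percentage column appears to equal each MN's total packets sent divided by its own packets sent to the GW, truncated to a whole percent. This reading is inferred from the numbers rather than stated in the text, and the Score column is not reproduced because its formula is not given here. The check below also shows that the forwarded totals are identical with and without DXN (5760 = 5 × 1152), which is consistent with, but not confirmed as, five ENs each sending 1152 packets.

```python
# (packets sent to GW, packets forwarded to GW) per MN, from the table above.
without_dxn = {"MN1": (1152, 2873), "MN2": (1152, 2887)}
with_dxn = {"MN1": (1152, 2304), "MN2": (1152, 3456)}


def capacity_percent(own: int, forwarded: int) -> int:
    """Assumed definition: total sent as a percentage of own packets, truncated."""
    return (100 * (own + forwarded)) // own


for label, table in (("without DXN", without_dxn), ("with DXN", with_dxn)):
    for mn, (own, fwd) in table.items():
        print(label, mn, own + fwd, f"{capacity_percent(own, fwd)}%")

forwarded_without = sum(fwd for _, fwd in without_dxn.values())
forwarded_with = sum(fwd for _, fwd in with_dxn.values())
print(forwarded_without, forwarded_with, forwarded_with // 1152)
```

Note that rounding to the nearest percent would give 351% for MN2 without DXN, which is why truncation is assumed.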

| Group# | Nodes | Tx Interval (Hours) | MN Score | New Tx Interval (Hours) | New MN Score |
|---|---|---|---|---|---|
| Group1 | MN1 | 12 | 59 | 8 | 55 |
| | EN1 | 3 | | | |
| | EN2 | 10 | | | |
| Group2 | MN2 | 6 | 82 | 6 | 82 |
| | EN3 | 11 | | | |
| | EN4 | 5 | | | |
| | EN5 | 4 | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lami, I.; Abdulkhudhur, A. DXN: Dynamic AI-Based Analysis and Optimisation of IoT Networks’ Connectivity and Sensor Nodes’ Performance. Signals 2021, 2, 570-585. https://doi.org/10.3390/signals2030035
