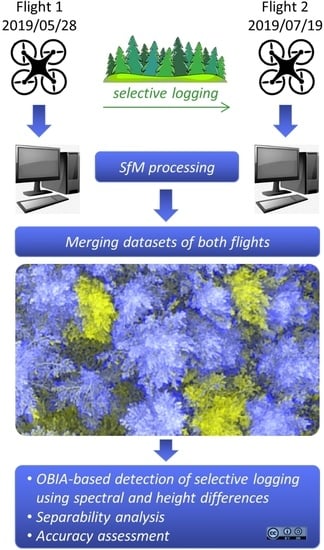

Monitoring Selective Logging in a Pine-Dominated Forest in Central Germany with Repeated Drone Flights Utilizing A Low Cost RTK Quadcopter

Abstract

1. Introduction

1.1. Relevant Methodological and Technical Background

1.1.1. Structure from Motion Processing Sequence

1.1.2. The 3D Positional Accuracy of SfM Models

1.2. UAS SfM Data-Based Tree Detection

1.3. Laser Scanner-Based Tree Detection

1.3.1. LiDAR-Based Tree Detection

1.3.2. Terrestrial Laser Scanner (TLS)-Based Tree Detection

1.4. Organization of The Paper

2. Materials and Methods

2.1. The Site “Roda Forest”

2.2. Field Work: Acquisition of UAS Data and Check Points

2.3. UAS Data Processing

2.3.1. SfM-Based Generation of Orthomosaics and Point Clouds

2.3.2. Computation of The Spectral Difference Images

2.3.3. Computation of Canopy Height Models (CHM) and CHM Differences

2.4. Collection of Reference Data for Accuracy Assessment and Samples for Separability Analysis

2.5. Automatic Detection of Felled Trees

2.5.1. Segmentation and Classification of Felled Trees Based on Spectral Differences

2.5.2. Classification of Felled Trees based on the ∆CHM

2.5.3. Integration of Spectral Difference- and Height Difference-Based Classifications

2.6. Separability and Accuracy Analysis

3. Results

3.1. Separability Analysis

3.2. Accuracy Analysis

4. Discussion

4.1. Discussion of Impacts on Accuracy

4.2. Related Work

4.3. Outlook

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Milas, A.S.; Cracknell, A.P.; Warner, T.A. Drones-the third generation source of remote sensing data. Int. J. Remote Sens. 2018, 39, 7125–7137.

2. Yao, H.; Qin, R.J.; Chen, X.Y. Unmanned Aerial Vehicle for Remote Sensing Applications-A Review. Remote Sens. 2019, 11, 22.

3. Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 4104–4113.

4. Padua, L.; Vanko, J.; Hruska, J.; Adao, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

5. Tsouros, D.C.; Triantafyllou, A.; Bibi, S.; Sarigannidis, P.G. Data acquisition and analysis methods in UAV-based applications for Precision Agriculture. In 2019 15th International Conference on Distributed Computing in Sensor Systems; IEEE: New York, NY, USA, 2019; pp. 377–384.

6. Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

7. Zachariah, D.F.; Terry, L.P. An orientation based correction method for SfM-MVS point clouds-Implications for field geology. J. Struct. Geol. 2018, 113, 76–89.

8. Tscharf, A.; Rumpler, M.; Fraundorfer, F.; Mayer, G.; Bischof, H. On The Use of UAVs In Mining and Archaeology - Geo-Accurate 3D Reconstructions using Various Platforms and Terrestrial Views. ISPRS UAV-G2015 2015, 15–22.

9. Kersten, J.; Rodehorst, V.; Hallermann, N.; Debus, P.; Morgenthal, G. Potentials of autonomous UAS and automated image analysis for structural health monitoring. In Proceedings of the 40th IABSE Symposium, Nantes, France, 19–21 September 2018.

10. James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV topographic surveys processed with structure-from-motion: Ground control quality, quantity and bundle adjustment. Geomorphology 2017, 280, 51–66.

11. Förstner, W. A framework for low level feature extraction. In Proceedings of the European Conference on Computer Vision, Stockholm, Sweden, 2–6 May 1994; pp. 383–394.

12. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

13. Zhuo, X.Y.; Koch, T.; Kurz, F.; Fraundorfer, F.; Reinartz, P. Automatic UAV Image Geo-Registration by Matching UAV Images to Georeferenced Image Data. Remote Sens. 2017, 9, 25.

14. Nister, D. An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–770.

15. Chum, O.; Matas, J.; Obdrzalek, S. Enhancing ransac by generalized model optimization. In Proceedings of the ACCV, Jeju, Korea, 27–30 January 2004; pp. 812–817.

16. Triggs, B.; McLauchlan, P.; Hartley, R.I.; Fitzgibbon, A. Bundle Adjustment – A Modern Synthesis. In Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; pp. 298–372.

17. Tippetts, B.; Lee, D.J.; Lillywhite, K.; Archibald, J. Review of stereo vision algorithms and their suitability for resource-limited systems. J. Real-Time Image Process. 2016, 11, 5–25.

18. James, M.R.; Robson, S. Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Process. Landf. 2014, 39, 1413–1420.

19. Griffiths, D.; Burningham, H. Comparison of pre- and self-calibrated camera calibration models for UAS-derived nadir imagery for a SfM application. Prog. Phys. Geogr.-Earth Environ. 2019, 43, 215–235.

20. Wallace, L.; Lucieer, A.; Malenovsky, Z.; Turner, D.; Vopenka, P. Assessment of Forest Structure Using Two UAV Techniques: A Comparison of Airborne Laser Scanning and Structure from Motion (SfM) Point Clouds. Forests 2016, 7, 16.

21. Lisein, J.; Pierrot-Deseilligny, M.; Bonnet, S.; Lejeune, P. A Photogrammetric Workflow for the Creation of a Forest Canopy Height Model from Small Unmanned Aerial System Imagery. Forests 2013, 4, 922–944.

22. Tomastik, J.; Mokros, M.; Salon, S.; Chudy, F.; Tunak, D. Accuracy of Photogrammetric UAV-Based Point Clouds under Conditions of Partially-Open Forest Canopy. Forests 2017, 8, 16.

23. Goodbody, T.R.H.; Coops, N.C.; Tompalski, P.; Crawford, P.; Day, K.J.K. Updating residual stem volume estimates using ALS-and UAV-acquired stereo-photogrammetric point clouds. Int. J. Remote Sens. 2017, 38, 2938–2953.

24. Yancho, J.M.M.; Coops, N.C.; Tompalski, P.; Goodbody, T.R.H.; Plowright, A. Fine-Scale Spatial and Spectral Clustering of UAV-Acquired Digital Aerial Photogrammetric (DAP) Point Clouds for Individual Tree Crown Detection and Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4131–4148.

25. Mohan, M.; Silva, C.A.; Klauberg, C.; Jat, P.; Catts, G.; Cardil, A.; Hudak, A.T.; Dia, M. Individual Tree Detection from Unmanned Aerial Vehicle (UAV) Derived Canopy Height Model in an Open Canopy Mixed Conifer Forest. Forests 2017, 8, 17.

26. Li, D.; Guo, H.D.; Wang, C.; Li, W.; Chen, H.Y.; Zuo, Z.L. Individual Tree Delineation in Windbreaks Using Airborne-Laser-Scanning Data and Unmanned Aerial Vehicle Stereo Images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1330–1334.

27. Hernandez-Clemente, R.; Navarro-Cerrillo, R.M.; Ramirez, F.J.R.; Hornero, A.; Zarco-Tejada, P.J. A Novel Methodology to Estimate Single-Tree Biophysical Parameters from 3D Digital Imagery Compared to Aerial Laser Scanner Data. Remote Sens. 2014, 6, 11627–11648.

28. Panagiotidis, D.; Abdollahnejad, A.; Surovy, P.; Chiteculo, V. Determining tree height and crown diameter from high-resolution UAV imagery. Int. J. Remote Sens. 2017, 38, 2392–2410.

29. Kang, J.; Wang, L.; Chen, F.; Niu, Z. Identifying tree crown areas in undulating eucalyptus plantations using JSEG multi-scale segmentation and unmanned aerial vehicle near-infrared imagery. Int. J. Remote Sens. 2017, 38, 2296–2312.

30. Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.W.; Hyyppa, J.; Saari, H.; Polonen, I.; Imai, N.N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 34.

31. Guerra-Hernandez, J.; Gonzalez-Ferreiro, E.; Monleon, V.J.; Faias, S.P.; Tome, M.; Diaz-Varela, R.A. Use of Multi-Temporal UAV-Derived Imagery for Estimating Individual Tree Growth in Pinus pinea Stands. Forests 2017, 8, 19.

32. Thiel, C.; Baade, J.; Schmullius, C. Comparison of UAV photograph-based and airborne lidar-based point clouds over forest from a forestry application perspective. Int. J. Remote Sens. 2017, 38, 4765.

33. Zhen, Z.; Quackenbush, L.J.; Zhang, L.J. Trends in Automatic Individual Tree Crown Detection and Delineation-Evolution of LiDAR Data. Remote Sens. 2016, 8, 26.

34. Wang, Y.S.; Hyyppa, J.; Liang, X.L.; Kaartinen, H.; Yu, X.W.; Lindberg, E.; Holmgren, J.; Qin, Y.C.; Mallet, C.; Ferraz, A.; et al. International Benchmarking of the Individual Tree Detection Methods for Modeling 3-D Canopy Structure for Silviculture and Forest Ecology Using Airborne Laser Scanning. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5011–5027.

35. Liang, X.L.; Kankare, V.; Hyyppa, J.; Wang, Y.S.; Kukko, A.; Haggren, H.; Yu, X.W.; Kaartinen, H.; Jaakkola, A.; Guan, F.Y.; et al. Terrestrial laser scanning in forest inventories. ISPRS J. Photogramm. Remote Sens. 2016, 115, 63–77.

36. Jakubowski, M.K.; Li, W.K.; Guo, Q.H.; Kelly, M. Delineating Individual Trees from Lidar Data: A Comparison of Vector- and Raster-based Segmentation Approaches. Remote Sens. 2013, 5, 4163–4186.

37. Bienert, A.; Georgi, L.; Kunz, M.; Maas, H.G.; von Oheimb, G. Comparison and Combination of Mobile and Terrestrial Laser Scanning for Natural Forest Inventories. Forests 2018, 9, 25.

38. Jaakkola, A.; Hyyppa, J.; Yu, X.W.; Kukko, A.; Kaartinen, H.; Liang, X.L.; Hyyppa, H.; Wang, Y.S. Autonomous Collection of Forest Field Reference-The Outlook and a First Step with UAV Laser Scanning. Remote Sens. 2017, 9, 12.

39. Brede, B.; Calders, K.; Lau, A.; Raumonen, P.; Bartholomeus, H.M.; Herold, M.; Kooistra, L. Non-destructive tree volume estimation through quantitative structure modelling: Comparing UAV laser scanning with terrestrial LIDAR. Remote Sens. Environ. 2019, 233, 14.

40. Wieser, M.; Mandlburger, G.; Hollaus, M.; Otepka, J.; Glira, P.; Pfeifer, N. A Case Study of UAS Borne Laser Scanning for Measurement of Tree Stem Diameter. Remote Sens. 2017, 9, 11.

41. Marinelli, D.; Paris, C.; Bruzzone, L. A Novel Approach to 3-D Change Detection in Multitemporal LiDAR Data Acquired in Forest Areas. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3030–3046.

42. Marinelli, D.; Paris, C.; Bruzzone, L. An Approach to Tree Detection Based on the Fusion of Multitemporal LiDAR Data. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1771–1775.

43. Lu, X.C.; Guo, Q.H.; Li, W.K.; Flanagan, J. A bottom-up approach to segment individual deciduous trees using leaf-off lidar point cloud data. ISPRS J. Photogramm. Remote Sens. 2014, 94, 1–12.

44. Mongus, D.; Zalik, B. An efficient approach to 3D single tree-crown delineation in LiDAR data. ISPRS J. Photogramm. Remote Sens. 2015, 108, 219–233.

45. Hu, X.B.; Chen, W.; Xu, W.Y. Adaptive Mean Shift-Based Identification of Individual Trees Using Airborne LiDAR Data. Remote Sens. 2017, 9, 23.

46. Liang, X.L.; Litkey, P.; Hyyppa, J.; Kaartinen, H.; Vastaranta, M.; Holopainen, M. Automatic Stem Mapping Using Single-Scan Terrestrial Laser Scanning. IEEE Trans. Geosci. Remote Sens. 2012, 50, 661–670.

47. Xia, S.B.; Wang, C.; Pan, F.F.; Xi, X.H.; Zeng, H.C.; Liu, H. Detecting Stems in Dense and Homogeneous Forest Using Single-Scan TLS. Forests 2015, 6, 3923–3945.

48. Oveland, I.; Hauglin, M.; Gobakken, T.; Naesset, E.; Maalen-Johansen, I. Automatic Estimation of Tree Position and Stem Diameter Using a Moving Terrestrial Laser Scanner. Remote Sens. 2017, 9, 15.

49. Maas, H.G.; Bienert, A.; Scheller, S.; Keane, E. Automatic forest inventory parameter determination from terrestrial laser scanner data. Int. J. Remote Sens. 2008, 29, 1579–1593.

50. DJI. DJI Phantom 4 RTK User Manual v1.4. 2018.

51. PPM. 10xx GNSS Sensor. Available online: http://www.ppmgmbh.com/ppm_design/10xx-GNSS-Sensor.html (accessed on 5 November 2019).

52. Conrady, A.E. Lens-systems, decentered. Mon. Not. R. Astron. Soc. 1919, 79, 384–390.

53. Khosravipour, A.; Skidmore, A.K.; Isenburg, M. Generating spike-free digital surface models using LiDAR raw point clouds: A new approach for forestry applications. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 104–114.

| UAS | DJI Phantom 4 RTK |
|---|---|
| Frequencies used for RTK | GPS: L1/L2; GLONASS: L1/L2; BeiDou: B1/B2; Galileo: E1/E5a |
| Positioning accuracy | Horizontal: 1 cm + 1 ppm; Vertical: 2 cm + 1 ppm |
| Image sensor | DJI FC6310R (Bayer), 1″ CMOS; focal length 24 mm (35 mm equivalent) |
| No. of pixels/pixel size | 5472 × 3648/2.41 µm × 2.41 µm |
| Field of view | 84° |
| Mechanical shutter | 8 s to 1/2000 s |
| Data format | JPEG, EXIF with 3D RTK CDGNSS location |

| Acquisition Dates | 28 May 2019 | 19 July 2019 |
|---|---|---|
| Time (UTC+2) of first shot | 1:45 pm | 1:40 pm |
| Wind speed | 0.5–1.0 m s⁻¹ | 1.5–2.5 m s⁻¹ |
| Clouds | overcast (8/8) | overcast (8/8) |
| Mission duration | 35 min (2 batteries) | 35 min (2 batteries) |
| No. of images | 541 | 542 |
| Image overlap (front/side) | 90%/80% | 90%/80% |
| Flight speed | 4 m s⁻¹ | 4 m s⁻¹ |
| Shutter priority | yes (1/320 s) | yes (1/320 s) |
| Distortion correction | yes | yes |
| Gimbal angle | −90° (nadir) | −90° (nadir) |
| Flight altitude over canopy | 100 m | 100 m |
| ISO sensitivity | ISO200 | ISO200 |
| Aperture | F/3.5–F/4.0 (exposure value −0.3) | F/3.0–F/4.5 (exposure value −1.0) |
| Geometric resolution (ground) | 3.12 cm | 3.12 cm |
| Area covered by UAS mission | 0.465 km² | 0.467 km² |

| Acquisition Dates | 28 May 2019 | 19 July 2019 |
|---|---|---|
| Photo alignment accuracy | High (full resolution) | High (full resolution) |
| Image preselection | Generic/Reference | Generic/Reference |
| Key point limit | 40,000 | 40,000 |
| Tie point limit | 10,000 | 10,000 |
| Adaptive camera model fitting | Off | Off |
| Camera positional accuracy | 0.02 m | 0.02 m |
| Tie point accuracy | 1 pix | 1 pix |
| Optimize camera alignment | Yes | Yes |
| Adapted camera parameters | f, b1, b2, cx, cy, k1–k3, p1, p2 | Camera model from 28 May 2019 |
| Dense cloud quality | Medium | Medium |
| Depth filtering | Mild | Mild |
| 2.5D mesh | High | High |
| Orthomosaic blending mode | Mosaic | Mosaic |
| Orthomosaic hole filling | Yes | Yes |
| Orthomosaic pixel spacing | 5 cm × 5 cm | 5 cm × 5 cm |

| Parameter | 28 May 2019 | 19 July 2019 |
|---|---|---|
| No. of tie points | 444,946 | 449,662 |
| Effective reprojection error | 0.90762 pix | 0.73758 pix |
| No. of points (dense cloud) | 66,228,345 | 66,229,154 |
| No. of faces | 13,178,528 | 13,178,688 |
| f | 3632.89 | |
| b1, b2 | 0.536603, 0.528095 | |
| cx, cy | 12.8635, 21.3182 | |
| k1, k2, k3 | −0.00318397, −0.00736057, 0.00563009 | |
| p1, p2 | 0.000432895, 0.00112522 | |
| Average error of camera pos. (x, y, z), mm | 1.30, 1.64, 3.58 | 4.20, 3.45, 5.34 |
| RMSE of check points (x, y, z), mm | 5.83, 16.56, 16.41 | - |
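The calibrated focal length in this table can be related to the ground resolution listed in the flight-parameter table. The following is a minimal sketch, assuming a simple pinhole camera, a nadir view, and that `f` is expressed in pixels (as Agisoft Metashape reports it); the function names are hypothetical. Under these assumptions, 100 m camera-to-surface distance corresponds to roughly 2.75 cm per pixel at canopy level, while the reported 3.12 cm ground resolution corresponds to a camera-to-ground distance of roughly 113 m. The latter distance is only implied by the arithmetic; it is not stated in the source.

```python
# Pinhole-camera relation between object distance and ground sampling
# distance (GSD). Assumption: focal length f is given in pixels.

F_PX = 3632.89  # calibrated focal length from the table above (pixels)


def gsd_from_distance(distance_m: float, focal_px: float = F_PX) -> float:
    """Return the footprint of one pixel (metres) at the given distance."""
    return distance_m / focal_px


def distance_from_gsd(gsd_m: float, focal_px: float = F_PX) -> float:
    """Return the camera-to-surface distance (metres) implied by a GSD."""
    return gsd_m * focal_px


if __name__ == "__main__":
    # 100 m above the canopy (value from the flight-parameter table)
    print(f"GSD at 100 m: {gsd_from_distance(100.0) * 100:.2f} cm")
    # 3.12 cm ground resolution (value from the flight-parameter table)
    print(f"Distance for 3.12 cm GSD: {distance_from_gsd(0.0312):.1f} m")
```

The same relation can be used to check how much the pixel footprint varies between canopy top and forest floor within a single image.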

| Command | Parameter | Value |
|---|---|---|
| LAS2DEM | CPU64 dem | |
| | spike_free | 1.0 |
| | step | 0.05 |
| | kill | 3.0 |
| | cores | 8 |

| Method | Parameter | Value |
|---|---|---|
| Multiresolution Segmentation | Scope | Pixel level |
| | Condition | --- |
| | Map | From parent |
| | Overwrite existing level | Yes |
| | Level name | Level1 |
| | Compatibility mode | Latest version |
| | Image layer weights | 1 |
| | Scale parameter | 150 |
| | Shape | 0.3 |
| | Compactness | 0.5 |
| Spectral difference segmentation | Scope | Image object level |
| | Level | Level2 |
| | Class filter | None |
| | Condition | --- |
| | Map | From parent |
| | Region | From parent |
| | Max. number of objects | All |
| | Level usage | Use current |
| | Maximum spectral difference | 10 |
| | Image layer weights | 1 |
| Hierarchical classification | Scope | Image object level |
| | Level | Level2 |
| | Class filter | None |
| | Condition | --- |
| | Map | From parent |
| | Region | From parent |
| | Used object features | Area, Roundness, Mean difference to neighbors, Mean difference to darker neighbors |
| | Active classes | All |
| | Use class-related features | Yes |

| Method | tp | fn | fn [%] | fp | fp [%] | Precision [%] | Recall [%] |
|---|---|---|---|---|---|---|---|
| Spectral | 349 | 31 | 8.2 | 22 | 5.8 | 94.1 | 91.8 |
| Spectral + Height | 348 | 32 | 8.5 | 9 | 2.4 | 97.5 | 91.6 |
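The precision and recall columns follow from the tp, fn and fp counts. A minimal sketch, assuming the standard definitions precision = tp / (tp + fp) and recall = tp / (tp + fn); the function name is hypothetical and the counts are taken from the table above:

```python
# Recompute precision and recall (in percent) from detection counts.
# Assumption: standard definitions; tp + fn is the number of reference trees.


def precision_recall(tp: int, fn: int, fp: int) -> tuple[float, float]:
    """Return (precision, recall) in percent, rounded to one decimal."""
    precision = 100.0 * tp / (tp + fp)
    recall = 100.0 * tp / (tp + fn)
    return round(precision, 1), round(recall, 1)


if __name__ == "__main__":
    counts = {
        "Spectral": (349, 31, 22),
        "Spectral + Height": (348, 32, 9),
    }
    for method, (tp, fn, fp) in counts.items():
        p, r = precision_recall(tp, fn, fp)
        print(f"{method}: precision = {p}%, recall = {r}%")
```

Adding the height information removes 13 false positives at the cost of one additional missed tree, which is why precision rises while recall stays nearly unchanged.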

| Authors | Data | Site/Forest type | Accuracy |
|---|---|---|---|
| LiDAR-based selective logging detection | | | |
| Marinelli et al. [41,42] | Bi-temporal LiDAR for change detection, 10–50 pls/m² | Italy, Southern Alps/needle-leaved forest | tp = 97.7%, fp = 1.7%, fn = 2.3% |
| UAS SfM-based individual tree detection | | | |
| Mohan et al. [25] | SfM point clouds | USA, Wyoming/mixed conifer forest | tp = 85% |
| Thiel et al. [32] | SfM point clouds | Germany, Thuringia/mixed conifer forest | tp = 93% |
| Nevalainen et al. [30] | SfM point clouds and hyperspectral images | Southern Finland/pine, spruce, birch, larch | tp = 64%–96% |
| Li et al. [26] | SfM point clouds and imagery | China, Huailai area/aspen | tp = 47%–67% |
| LiDAR-based individual tree detection | | | |
| Lu et al. [43] | LiDAR 10 pls/m² | USA, Pennsylvania/deciduous species (leaf-off) | tp = 84% |
| Mongus and Zalik [44] | LiDAR 26–97 pls/m² | Slovenia, Alps/mixed conifer forest | av. precision = 0.75 |
| Hu et al. [45] | LiDAR 15 pt/m² | Southern China/multi-layered evergreen broad-leaved forest | av. precision = 0.92 |
| Terrestrial laser scanner (TLS)-based individual tree (stem) detection | | | |
| Liang et al. [46] | Single scan TLS | Finland, Evo/pine, spruce, birch, larch | tp = 73% |
| Xia et al. [47] | Single scan TLS | China, Sichuan Giant Panda Sanctuaries/dense bamboo forest | tp = 88% |
| Oveland et al. [48] | Single scan (low cost) TLS | Norway, Gran municipality (southeastern Norway)/spruce and Scots pine | tp = 78%, fn = 22% |
| Maas et al. [49] | Multiple scan TLS | Austria, Ireland/conifer forest, broad-leaved forest | tp = 97% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thiel, C.; Müller, M.M.; Berger, C.; Cremer, F.; Dubois, C.; Hese, S.; Baade, J.; Klan, F.; Pathe, C. Monitoring Selective Logging in a Pine-Dominated Forest in Central Germany with Repeated Drone Flights Utilizing A Low Cost RTK Quadcopter. Drones 2020, 4, 11. https://doi.org/10.3390/drones4020011