Enhanced Wearable Force-Feedback Mechanism for Free-Range Haptic Experience Extended by Pass-Through Mixed Reality

Abstract

1. Introduction

2. Materials and Methods

2.1. Problem Description

2.2. Antecedent Prototype Description

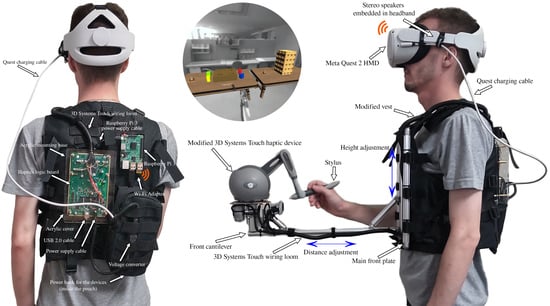

2.2.1. Hardware Overview

2.2.2. Software Overview

2.2.3. Pilot Experience Overview

2.3. Preliminary Validation

2.3.1. Summary of a Pilot Experiment

2.3.2. Summary of a Performance Experiment

2.4. Identified Deficiencies

3. Resulting Enhancements

3.1. Weight Reduction

3.2. Wireless Connectivity

3.3. Power Delivery

3.4. Haptic Feedback Intensity

3.5. Stylus-to-Avatar Alignment and Reset Function

3.6. Transition between Stylus Use and Hand-Tracking

3.7. Mixed Reality Pass-Through

4. Enhancements Validation

4.1. Conditions: Virtual Reality and Mixed Reality

4.1.1. Procedure and Controls

4.1.2. Participants

4.1.3. Data Acquisition and Composition

4.1.4. Results

5. Discussion

Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | Three-dimensional |
| API | Application programming interface |
| AR | Augmented reality |
| CAD | Computer-aided design |
| DLL | Dynamic-link library |
| GTDD | Geomagic touch device drivers |
| HDAPI | Haptic device API |
| HLAPI | Haptic library API |
| LAN | Local area network |
| HMD | Head-mounted display |
| MRTK | Mixed reality toolkit |
| SDK | Software development kit |
| USB | Universal serial bus |
| VR | Virtual reality |
| XR | Extended reality |
| MR | Mixed reality |

| Statement | Mean ± SD |
|---|---|
| The VR mode was immersive. | 5.5 ± 0.92 |
| The MR mode was immersive. | 6.1 ± 0.83 |
| The wearable harness was comfortable. | 5.9 ± 1.22 |
| The harness was heavy. | 2.5 ± 0.81 |
| The tethered connection bothered me. | 2.7 ± 1.27 |
| The intensity of simulated force-feedback was adequate. | 5.7 ± 1.27 |
| The stylus and hand-tracking were adequately precise and reliable. | 5.1 ± 1.51 |
| The transition between stylus use and hand-tracking was seamless. | 5.5 ± 1.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kudry, P.; Cohen, M. Enhanced Wearable Force-Feedback Mechanism for Free-Range Haptic Experience Extended by Pass-Through Mixed Reality. Electronics 2023, 12, 3659. https://doi.org/10.3390/electronics12173659
