User-Aware Audio Marker Using Low Frequency Ultrasonic Object Detection and Communication for Augmented Reality

Abstract

1. Introduction

2. Review of Low Frequency Ultrasonic Communication-based Audio Markers

2.1. Audio Marker Transmitter

2.2. Audio Marker Receiver

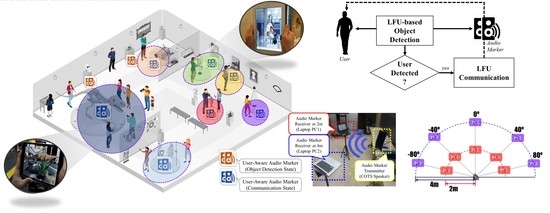

3. Proposed User-Aware Audio Marker System Using Low Frequency Ultrasonic-based Object Detection and Communication

3.1. LFU-Based Object Detection

3.2. LFU Communication for Audio Marker Transmission

4. Performance Evaluation and Discussion

4.1. LFU-Based Object Detection Performance

4.2. LFU Communication Performance

4.3. Robustness to Ambient Noise

4.4. Comparison with Other Positioning Systems for AR applications

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Azuma, R.; Baillot, Y.; Behringer, R.; Feiner, S.; Julier, S.; MacIntyre, B. Recent advances in augmented reality. IEEE Comput. Graph. Appl. 2001, 21, 34–47.

2. Rubino, I.; Xhembulla, J.; Martina, A.; Bottino, A.; Malnati, G. MusA: Using indoor positioning and navigation to enhance cultural experiences in a museum. Sensors 2013, 13, 17445–17471.

3. Lee, C.-W.; Chondro, P.; Ruan, S.-J.; Christen, O.; Naroska, E. Improving mobility for the visually impaired: A wearable indoor positioning system based on visual markers. IEEE Consum. Electron. Mag. 2018, 7, 12–20.

4. Kawai, N.; Yamasaki, M.; Sato, T.; Yokoya, N. AR marker hiding based on image inpainting and reflection of illumination changes. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 293–294.

5. Lee, T.; Hollerer, T. Handy AR: Markerless inspection of augmented reality objects using fingertip tracking. In Proceedings of the 11th IEEE International Symposium on Wearable Computers, Boston, MA, USA, 11–13 October 2007; pp. 83–90.

6. Mihara, S.; Sakamoto, A.; Shimada, H.; Sato, K. Augmented reality marker for operating home appliances. In Proceedings of the IFIP 9th International Conference on Embedded and Ubiquitous Computing, Melbourne, Australia, 24–26 October 2011; pp. 372–377.

7. Tian, Y.; Guan, T.; Wang, C. Real-time occlusion handling in augmented reality based on an object tracking approach. Sensors 2010, 10, 2885–2900.

8. Lee, S.; Hong, H. Use of gradient based shadow detection for estimating environmental illumination distribution. Appl. Sci. 2018, 8, 2255.

9. Rauschnabel, P.A.; Felix, R.; Hinsch, C. Augmented reality marketing: How mobile AR-apps can improve brands through inspiration. J. Retail. Consum. Serv. 2019, 49, 43–53.

10. Yang, X.; Cheng, K.-T. LDB: An ultra-fast feature for scalable augmented reality on mobile devices. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 49–57.

11. Behringer, R. Registration for outdoor augmented reality applications using computer vision techniques and hybrid sensors. In Proceedings of the IEEE Virtual Reality, Houston, TX, USA, 13–17 March 1999; pp. 244–251.

12. Chaves-Diéguez, D.; Pellitero-Rivero, A.; García-Coego, D.; González-Castaño, F.J.; Rodríguez-Hernández, P.S.; Piñeiro-Gómez, Ó.; Gil-Castiñeira, F.; Costa-Montenegro, E. Providing IoT services in smart cities through dynamic augmented reality markers. Sensors 2015, 15, 16083–16104.

13. Lifton, J.; Laibowitz, M.; Harry, D.; Gong, N.-G.; Mittal, M.; Paradiso, J.A. Metaphor and manifestation cross-reality with ubiquitous sensor/actuator networks. IEEE Pervasive Comput. 2009, 8, 24–33.

14. Jeon, K.M.; Chun, C.J.; Kim, H.K.; Lee, M.J. Application of low frequency ultrasonic communication to audio marker for augmented reality. In Proceedings of the IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 8–10 January 2017; pp. 139–140.

15. Jeon, K.M.; Kim, H.K.; Lee, M.J. Non-coherent low-frequency ultrasonic communication system with optimum symbol length. Int. J. Distrib. Sens. Netw. 2016, 12, 9713180.

16. Zhang, S.; Wang, C.; Chan, S.-C.; Wei, X.; Ho, C.-H. New object detection, tracking, and recognition approaches for video surveillance over camera network. IEEE Sens. J. 2015, 15, 2679–2691.

17. Lifton, J.; Paradiso, J.A. Dual reality: Merging the real and virtual. In Proceedings of the International Conference on Facets of Virtual Environments, Berlin, Germany, 27–29 July 2009; pp. 12–28.

18. McHugh, J.M.; Konrad, J.; Saligrama, V.; Jodoin, P.-M. Foreground-adaptive background subtraction. IEEE Signal Process. Lett. 2009, 16, 390–393.

19. Elia, M. Algebraic decoding of the (23,12,7) Golay code. IEEE Trans. Inf. Theory 1987, 33, 150–151.

20. Sklar, B. Digital Communications: Fundamentals and Applications; Prentice Hall: Upper Saddle River, NJ, USA, 2001.

21. Lee, H.; Kim, T.H.; Choi, J.W.; Choi, S. Chirp signal based aerial acoustic communication for smart devices. In Proceedings of the IEEE Conference on Computer Communications, Kowloon, Hong Kong, 26 April–1 May 2015; pp. 2407–2415.

22. Sobral, A.; Vacavant, A. A comprehensive review of background subtraction algorithms evaluated with synthetic and real videos. Comput. Vis. Image Underst. 2014, 122, 4–21.

23. Sohn, J.; Kim, N.S.; Sung, W. A statistical model based voice activity detection. IEEE Signal Process. Lett. 1999, 6, 1–3.

24. Murrian, M.J.; Gonzalez, C.W.; Humphreys, T.E.; Novlan, T.D. A dense reference network for mass-market centimeter-accurate positioning. In Proceedings of the IEEE/ION Position, Location and Navigation Symposium (PLANS), Savannah, GA, USA, 11–14 April 2016; pp. 243–254.

25. Oliveira, L.C.; Andrade, A.O.; Oliveira, E.C.; Soares, A.; Cardoso, A.; Lamounier, E. Indoor navigation with mobile augmented reality and beacon technology for wheelchair users. In Proceedings of the IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Orlando, FL, USA, 16–19 February 2017; pp. 37–40.

| Azimuth \ Distance | 1 m | 2 m | 4 m |
|---|---|---|---|
| 80° | 100.0 | 96.6 | 86.6 |
| 40° | 100.0 | 100.0 | 93.3 |
| 0° | 100.0 | 100.0 | 100.0 |
| −40° | 100.0 | 100.0 | 93.3 |
| −80° | 100.0 | 93.3 | 80.0 |

| Method | False Negative Rate Inside of MTZ (%) | False Positive Rate Outside of MTZ (%) |
|---|---|---|
| Conventional audio marker [14] | 2.24 | 93.28 |
| Proposed audio marker | 2.08 | 14.28 |
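
The gap between the two methods in the table above can be made concrete with a short calculation. The sketch below is illustrative only: the helper name `rate_reduction` is hypothetical and not part of the paper, and the inputs are the false positive rates outside the MTZ copied from the table.

```python
def rate_reduction(baseline_pct, proposed_pct):
    """Return (absolute reduction in percentage points, relative reduction in %).

    Hypothetical helper for illustration; not taken from the paper.
    """
    absolute = baseline_pct - proposed_pct
    relative = 100.0 * absolute / baseline_pct
    return absolute, relative


# False positive rates outside the MTZ (%), copied from the table:
# conventional audio marker [14] = 93.28, proposed audio marker = 14.28.
absolute, relative = rate_reduction(93.28, 14.28)
print(f"absolute: {absolute:.2f} points, relative: {relative:.1f}%")
# prints "absolute: 79.00 points, relative: 84.7%"
```

By the same calculation, the false negative rate inside the MTZ changes only slightly (2.24% to 2.08%), so the main difference between the two methods in this table is in false positives outside the MTZ.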

| Attribute | GNSS-based Dense Reference Network [24] | Bluetooth Beacon [25] | Proposed Audio Marker |
|---|---|---|---|
| Range | <20 km | <70 m | <4 m |
| Resolution | ~10 cm | ~5 cm | ~30 cm |
| Sensor type | Antenna station | Bluetooth beacon, modem | COTS speaker, microphone |
| Application | Outdoor positioning | Indoor positioning | Location-specific broadcasting |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jeon, K.M.; Chun, C.J.; Kim, H.K.; Lee, M.J. User-Aware Audio Marker Using Low Frequency Ultrasonic Object Detection and Communication for Augmented Reality. Appl. Sci. 2019, 9, 2004. https://doi.org/10.3390/app9102004