Dynamic Characteristic Monitoring of Wind Turbine Structure Using Smartphone and Optical Flow Method

Abstract

1. Introduction

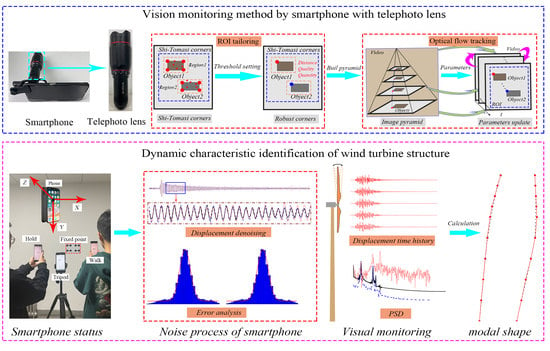

2. Dynamic Displacement Monitoring Based on Computer Vision

2.1. Camera Calibration

2.2. Target Tracking Principle Based on Optical Flow Method

2.3. Target-Free Feature Extraction Based on Optical Flow Method

2.4. Smartphone Jitter Processing

2.5. Displacement Calculation

3. Smartphone Performance Test

3.1. Smartphone Lens Distortion Test

3.2. Smartphone Displacement Monitoring

3.3. Performance Test of Smartphones in Different States

3.4. Structural Displacement Monitoring Using Smartphone in Different States

3.5. Structural Displacement Monitoring of Smartphone Assembled with Long Focus Lens

4. Dynamic Characteristic Monitoring of Wind Turbine Structure

4.1. Experimental Equipment

4.2. Natural Frequency Identification of Wind Turbine Structure

4.3. Shaking Table Test of Wind Turbine Structure

5. Conclusions

- (1) The proposed optical-flow-based method monitors the dynamic characteristics of wind turbine structures without attached targets. Tests against simulated simple and complex backgrounds show that it identifies the tracked features reliably. In addition, by combining a smartphone with the vision algorithm and ROI clipping, the spatial displacement of the entire blade can be monitored simultaneously.

- (2) High-pass filtering combined with an adaptive scaling factor effectively eliminates the displacement drift caused by the two handheld shooting states, standing and walking slightly. The error analysis shows that the final error is less than 2 mm, which meets the requirements of structural dynamic characteristic monitoring. Fitting the smartphone with a telephoto lens to monitor structural displacement further extends the applicability of smartphone-based monitoring of structural dynamic characteristics.

- (3) The proposed method for monitoring the dynamic characteristics of wind turbine structures performs well when used with smartphones. The shaking table test results show that smartphone-based monitoring of the dynamic characteristics of wind turbine structures achieves high accuracy in both the time and frequency domains.
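The drift removal and frequency-domain identification summarized in the conclusions can be illustrated with a minimal sketch. It is not the authors' implementation: the first-order RC high-pass filter, the constant scale factor `scale_mm_per_px`, the 60 fps frame rate, the 0.5 Hz cutoff, and the synthetic 2 Hz signal with linear drift are all assumptions chosen for illustration, and a plain discrete Fourier transform stands in for whatever spectral estimator was actually used.

```python
import cmath
import math


def high_pass(signal, fs, cutoff_hz):
    """First-order RC high-pass filter (illustrative stand-in for the paper's filter)."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs
    alpha = rc / (rc + dt)
    out = [0.0]
    for i in range(1, len(signal)):
        # Standard discrete RC high-pass recursion.
        out.append(alpha * (out[-1] + signal[i] - signal[i - 1]))
    return out


def dominant_frequency(signal, fs):
    """Frequency (Hz) of the largest DFT magnitude, skipping the DC bin."""
    n = len(signal)
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal)
        )
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * fs / n


# Hypothetical test signal: 10 s of tracked pixel motion at an assumed 60 fps.
fs = 60.0
n = 600
true_freq = 2.0          # simulated structural frequency (Hz), assumption
scale_mm_per_px = 0.5    # hypothetical pixel-to-millimetre scale factor

# Vibration plus a linear drift that mimics handheld camera motion.
pixels = [
    10.0 * math.sin(2.0 * math.pi * true_freq * i / fs) + 0.2 * i
    for i in range(n)
]
displacement_mm = [scale_mm_per_px * p for p in pixels]

filtered = high_pass(displacement_mm, fs, cutoff_hz=0.5)
peak_hz = dominant_frequency(filtered, fs)
raw_peak_hz = dominant_frequency(displacement_mm, fs)

print("dominant frequency before filtering (Hz):", raw_peak_hz)
print("dominant frequency after filtering (Hz):", peak_hz)
```

In this synthetic case the drift dominates the unfiltered spectrum at the lowest frequency bin, while the filtered signal peaks at the simulated vibration frequency. A first-order filter leaves a small constant offset for a linear drift, so a higher-order filter would likely be preferable in practice.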

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Smartphone Category | Frame Rate | Max Pixels | Pixel Density | Zoom Multiple | Aperture |
|---|---|---|---|---|---|
| iPhone 12 | 30/60 fps | 12 million | 460 ppi | 5 | f/2.4 |
| Honor X10 | 30/60 fps | 40 million | 397 ppi | 10 | f/1.8 |

| Motion State | X-Direction (pixel) | Y-Direction (pixel) | Z-Direction (pixel) |
|---|---|---|---|
| Mounted on tripod | 0.015 | 0.034 | 0.018 |
| Standing shooting | 95.025 | 72.183 | 6.254 |
| Walking slightly | 150.641 | 282.944 | 61.239 |

| Shooting Method | RMSE | R² | |
|---|---|---|---|
| Standing shooting | 0.6219 | 0.8254 | 0.8763 |
| Walking slightly | 0.7342 | 0.7513 | 0.7925 |
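For reference, the RMSE and R² metrics reported in the table above can be computed as in the sketch below. It uses the standard textbook definitions (root mean square error, and the coefficient of determination as one minus the ratio of residual to total sum of squares); whether the paper uses exactly these definitions is an assumption, and the sample values are hypothetical, not data from the experiments.

```python
import math


def rmse(reference, measured):
    """Root mean square error between a reference and a measured series."""
    n = len(reference)
    return math.sqrt(sum((r - m) ** 2 for r, m in zip(reference, measured)) / n)


def r_squared(reference, measured):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_ref = sum(reference) / len(reference)
    ss_res = sum((r - m) ** 2 for r, m in zip(reference, measured))
    ss_tot = sum((r - mean_ref) ** 2 for r in reference)
    return 1.0 - ss_res / ss_tot


# Hypothetical displacement samples (mm): reference sensor vs. smartphone.
reference_mm = [0.0, 1.0, 2.0, 3.0, 4.0]
smartphone_mm = [0.1, 0.9, 2.2, 2.8, 4.0]

error = rmse(reference_mm, smartphone_mm)
fit = r_squared(reference_mm, smartphone_mm)
print(f"RMSE = {error:.4f} mm, R^2 = {fit:.4f}")
```

A lower RMSE and an R² closer to 1 both indicate closer agreement between the smartphone measurement and the reference.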

| Measuring Point | Accelerometer (mm/s²) | Smartphone (mm) | Measuring Point | Accelerometer (mm/s²) | Smartphone (mm) |
|---|---|---|---|---|---|
| P0 | 5.6341 × 10⁻⁴ | 20.6585 | P5 | | 8.3780 |
| P1 | | 18.1829 | P6 | | 6.8293 |
| P2 | 2.3902 × 10⁻⁴ | 15.0244 | P7 | 1.6911 × 10⁻⁴ | 5.4341 |
| P3 | | 12.0122 | P8 | | 4.2276 |
| P4 | 6.5732 × 10⁻⁵ | 10.1504 | P9 | 1.0098 × 10⁻⁴ | 3.4472 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, W.; Li, W.; Fan, B.; Du, Y. Dynamic Characteristic Monitoring of Wind Turbine Structure Using Smartphone and Optical Flow Method. Buildings 2022, 12, 2021. https://doi.org/10.3390/buildings12112021
