1. Introduction

Learning and planning without an accurate dynamics model pose a great challenge for complex robotic control. Given the daunting difficulty of physical control, reinforcement learning (RL) [1] instead treats the environment dynamics as a black box and implicitly learns optimal behaviors from interactions with the environment in pursuit of the maximal accumulated reward. Combined with deep neural networks, deep RL has achieved great success in both simulated and real-world robotic control tasks, such as dexterous manipulation [2,3,4,5,6], motion control [7,8,9,10], navigation [11,12,13] and so on [14,15,16]. In the ideal case, the reward function for each task should be an indicator for task solving [2,6]. Nevertheless, most algorithms rely on a delicately designed and appropriately shaped task-specific reward function that reflects the domain knowledge [17,18]. For example, Ref. [6] incorporates the distance from the gripper to the handle as well as the difference between the current and desired door pose into the reward function for a door-opening task. However, it is impractical to engineer rewards for all tasks, especially for high-dimensional continuous control tasks with enormous state–action spaces (as shown in Figure 1). As such tasks grow tougher and require considerably more exploration, the ability to efficiently learn robotic control with unshaped task-solving rewards is indispensable.

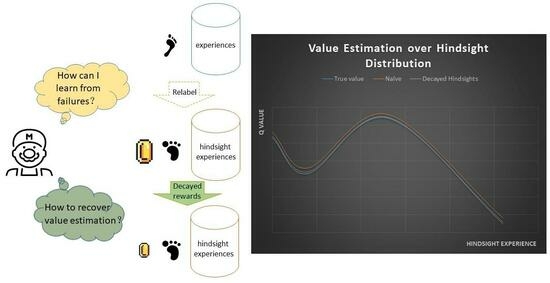

Hindsight experience replay (HER) [19] proposes to replay past experiences with pseudo goals (abstracted from states indicating task solving), which enriches pseudo task-solving signals and enables learning from failures. Incorporated with HER, goal-conditioned RL [20,21,22], where agents pursue various goals in an open-ended environment, learns to achieve and generalize across different goals with sparse task-solving rewards. Recent advances in experience replay with hindsights have been shown to greatly benefit goal-conditioned RL in various scenarios [23,24,25,26,27,28,29,30]. However, replaying experience in this way introduces a well-known cognitive bias, the overestimation of one's foresight after acquiring the outcomes of executing certain actions, which is called hindsight bias [31] and impedes learning in sequential decision-making tasks [32,33]. Concretely, pseudo rewards from the relabeled experience may give the agent unreasonable confidence in reaching past achieved goals, neglecting the actual outcomes of its policy. For value-based learning, the bias leads to overestimation of the value function.

To counter the bias of regenerating past experiences with original goals and hindsight goals, existing work either increases the likelihood of sampled actions by assigning aggressive rewards for relabeled experiences [34], or reduces changes in the likelihood of sampled trajectories [35]. However, hindsight replay changes the distribution of replayed goals, yet most of the goals will not be further explored. Thus, assigning excessive hindsight rewards results in unreliable generalization for goal-conditioned RL, which assumes that behaviors for one goal can generalize to another similar one. As hindsight goals progressively spread across the whole goal space in an unprincipled manner, the value function approximation may collapse. Therefore, it is of great significance to restrict excessive hindsights on goals with high uncertainties under the current policy.

To address this problem, we analyze the biased value function and consider stabilizing it with decayed hindsight rewards, namely decayed hindsight (DH). Compared to vanilla HER, if the current policy cannot regenerate the past experience with a hindsight goal very well, the hindsight reward assigned to the experience will be decreased. Embedding DH into HER yields a consistent experience replay, Decayed-HER. For one thing, the inevitable hindsight bias will be adaptively reduced, according to the distribution dissimilarity with replayed goals. For another, it maintains a balance between hindsight replay and task-solving indication. In both online and offline settings, we evaluate our proposed Decayed-HER on various goal-conditioned manipulation tasks using a simulated physics engine [36]. Experiments demonstrate that it achieves the best average performance among state-of-the-art multi-goal replay strategies in online learning and improves the performance in offline learning.

Our contributions can be summarized as follows:

- As far as we know, this manuscript is the first to propose a surrogate objective over hindsight experiences after analyzing the hindsight bias in HER, which is the foundation of reliable and unbiased multi-goal value estimation.

- We propose a tractable implementation of the decayed hindsight and successfully embed it into online multi-goal replay and offline RL. Compared to existing works, the proposed method is effective and easy to implement, with the potential to avoid low sample efficiency and heavy computational burden.

- We conduct a series of experiments and show promising results on high-dimensional continuous control tasks with sparse rewards. Especially for offline RL, the proposed method shows promise for learning effectively from experiences and datasets without exploring the environment.

2. Related Works

Reward shaping. Reward shaping [17,18] mostly aims at designing a per-step reward that heuristically reflects domain knowledge, such as the likelihood of achieving the desired goal. However, as mentioned in [19], HER with shaped rewards [17] performs worse than with sparse rewards. The reason is that dense shaped rewards are more likely to collapse the value function approximation. We emphasize that manipulating hindsight rewards to counter bias is fundamentally different from reward shaping. In general, bias countering cares about the likelihood of regenerating past experiences by the current policy instead of providing per-step rewards.

Beyond task-solving rewards, recent works construct various signals to encourage exploration. Ref. [37] proposes self-balancing shaped rewards, which reward sibling rollouts to reconstruct a self-balancing optimum. Ref. [38] applies an asymmetric reward relabeling strategy to induce competition between a pair of agents. Both [37,38] encourage exploration beyond the original tasks.

Self-imitation. Self-imitation [39] shares a similar idea with HER and can be extended to goal-conditioned RL [40,41,42,43]. These works imitate achieved trajectories with goal-conditioned policies, just like replaying past experiences with the achieved goals in HER. For self-imitation, the hindsight bias may also lead to unstable policy updates. We will explore it in future work.

3. Materials and Methods

In this section, we review the value-based goal-conditioned RL framework and its universal value function approximators, then analyze experience replay with the hindsight bias mentioned before, and finally derive Decayed-HER.

3.1. Goal-Conditioned RL and Universal Value Function Approximators

Goal-conditioned RL extends typical RL to goal-conditioned behaviors. Consider a discounted Markov decision process (MDP), $(\mathcal{S}, \mathcal{A}, \mathcal{G}, P, r, \gamma)$, where $\mathcal{S}$, $\mathcal{A}$, $\mathcal{G}$ each represent a set of states, actions and goals, $P$ and $r$ are the transition probability distribution and reward function, and $\gamma$ is a discount factor. At the beginning of each episode, the RL agent obtains the desired goal $g \in \mathcal{G}$. Then at time step $t$, it observes a state $s_t$, executes an action $a_t$

and receives a reward

at the next time step that indicates task solving. Concretely,

where

defines a tractable mapping from states to goals, and

is a pre-defined task-specific tolerance threshold [22].
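The sparse task-solving reward described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the Euclidean distance, the default tolerance, the reward values 0 and -1, and the names `sparse_goal_reward` and `state_to_goal` are all hypothetical choices.

```python
import numpy as np


def sparse_goal_reward(next_state, goal, state_to_goal, tolerance=0.05,
                       success_reward=0.0, failure_reward=-1.0):
    """Return a sparse reward indicating whether the goal is reached.

    state_to_goal plays the role of the mapping from states to goals.
    The distance metric and the two reward values are assumptions.
    """
    achieved = np.asarray(state_to_goal(next_state), dtype=float)
    distance = np.linalg.norm(achieved - np.asarray(goal, dtype=float))
    return success_reward if distance <= tolerance else failure_reward


# Hypothetical mapping: the first two state dimensions are the position.
position_of = lambda state: np.asarray(state)[:2]
near = sparse_goal_reward([0.50, 0.51, 9.0], [0.5, 0.5], position_of)
far = sparse_goal_reward([0.00, 0.00, 9.0], [0.5, 0.5], position_of)
```

Here `near` receives the success value and `far` the failure value; any dense distance information is deliberately discarded.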

Let

denote a trajectory, and

denote its accumulated discounted return at

. Let

denote a universal policy,

and

denote its universal value function. Combined with neural networks, universal value function approximators (UVFA) [21] are expected to generalize the value estimation over various goals. With experience

, it optimizes

via performing policy improvement with the expectation

In general, HER variants concatenate states and goals together as joint inputs for the Q-value function, as in [19,23,44,45].
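To illustrate the joint input, the sketch below concatenates state, action and goal into a single vector before evaluating a goal-conditioned Q-function. The linear model, its random initialization and the names `joint_input` and `LinearGoalQ` are assumptions made for brevity; a practical UVFA would use a neural network.

```python
import numpy as np


def joint_input(state, action, goal):
    """Concatenate state, action and goal along the last axis."""
    return np.concatenate([state, action, goal], axis=-1)


class LinearGoalQ:
    """Toy goal-conditioned Q-function: a linear map over the joint input."""

    def __init__(self, state_dim, action_dim, goal_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.weights = rng.normal(scale=0.1,
                                  size=state_dim + action_dim + goal_dim)
        self.bias = 0.0

    def __call__(self, state, action, goal):
        return joint_input(state, action, goal) @ self.weights + self.bias


# A batch of 4 transitions with 3-d states, 2-d actions and 2-d goals.
states = np.zeros((4, 3))
actions = np.ones((4, 2))
goals = np.full((4, 2), 0.5)
q_values = LinearGoalQ(3, 2, 2)(states, actions, goals)
```

Because the goal is just another part of the input, the same parameters serve every goal, which is what allows value estimates to generalize across goals.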

3.2. Hindsight Bias

To deal with sparse task-solving rewards, HER uses hindsight (the observation that a pseudo goal will be achieved in the near future) to learn from failures. Concretely, it relabels experience

with a pseudo goal

and enables learning from the relabeled experience

with a hindsight reward

. Since the pseudo goal is randomly sampled from the same trajectory as the original experience [19], the hindsight reward thus yields a higher probability of goal reaching than $r$. Formally, the current policy

is updated with Equation (1) via

which plays a key role in remembering and reusing multi-goal experiences.

However, HER neglects the fact that without loss of generality, we have

for most of the states and policies. It introduces hindsight bias indicating that HER inevitably changes the sample distribution of experiences. For experience

e, we regard the original distribution of experience as

, and the distribution of hindsight experience as

. The vanilla HER proposes hindsight goals in a random manner, which makes an unpredictable distribution shift from

to

and skews the value estimation over the distribution from

to

. Notice that

Importance sampling seems natural for canceling the sampling bias but is non-trivial in practice and may result in policy updates with high variance [22,46].

As the environment dynamics are independent of goals, a goal-conditioned policy is able to regenerate the transition

of

$e$ with any input goal as long as, with some probability, it can generate the action $a$ at the state $s$. Without loss of generality, replaying experience with hindsights equals adding diverse behavior policies in advance. Thus we can assume that

for most of sampled hindsight experiences but

progressively approaches

, as the pseudo goal approaches the original goal. Informally, we assume that

for almost every replayed $e$ and some constant $c$.

In order to verify that this theoretical overestimation does exist in practice, we plot the true return and the value estimation of TD3 [47], which already addresses function approximation error in actor-critic methods, on a goal-conditioned continuous control task named parking-v0 [48], in which the ego-vehicle must park in a given space with the appropriate heading. For every epoch during training, we obtain the values with the current policy. Concretely, we randomly sample a

pair from the replay buffer and take its

-value as value estimation under the current policy

. Then we take the true discounted returns over 100 rollouts that start with the pair and follow

as the return. In Figure 2a, we plot the averaged value estimations and returns over 50 pairs at each epoch. We can see that there is a clear overestimation during the training process, which may be small but cannot be neglected. For one thing, the skewed value function leads to sub-optimal policies. For another, the overestimation may result in a more significant bias for unexplored goals, given the iterated Bellman updates. Countering the effect of hindsight bias is therefore essential.
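The comparison between value estimates and true returns can be sketched as below. This is only an illustration of the measurement procedure described above; the function names and the nested-list data layout are assumptions, and the reward sequences would in practice come from rollouts of the current policy.

```python
import numpy as np


def discounted_return(rewards, gamma):
    """Accumulate a reward sequence backwards into a discounted return."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def overestimation_gap(q_estimates, rollout_rewards, gamma):
    """Mean value estimate minus mean Monte Carlo return.

    q_estimates: one value estimate per sampled pair.
    rollout_rewards: for each pair, a list of reward sequences, one per
        rollout started from that pair under the current policy.
    A positive result indicates overestimation.
    """
    mc_returns = [
        np.mean([discounted_return(rewards, gamma) for rewards in rollouts])
        for rollouts in rollout_rewards
    ]
    return float(np.mean(q_estimates) - np.mean(mc_returns))


# One sampled pair, one rollout, rewards 1, 1, 1 with gamma = 0.5.
gap = overestimation_gap([2.0], [[[1.0, 1.0, 1.0]]], gamma=0.5)
```

In the setting described above, each pair would have 100 rollouts and the gap would be averaged over 50 pairs per epoch.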

3.3. Decayed Hindsights

In view of Equation (2), we consider the skewed objective

for relabeled experience

. Noticing that importance sampling is non-trivial for hindsight experience, we instead directly learn to approximate

in the derived MDP

with reward

where

describes the distribution dissimilarity and we assume that

holds for every sampled

e.

Theorem 1. Under mild conditions, is a surrogate estimate for .

Proof. By appending

into the derived MDP, we can trivially obtain

which indicates that we can approximately estimate the true value

over

f by self-bootstrapped estimating

over

h with

. □

The new MDP inherits the convergence from the original MDP as long as the reward is bounded, i.e., for any experience

, bounded

r and some constant

, we have

It is evident that if

is bounded, the reward is bounded. According to the previous analysis, we can assume that with probability

,

holds. Then it converges and

is in principle positive for goal-reaching hindsight experience and optimal policy.

On the basis of this observation, we make the following statements:

- 1.

As the difference grows with , the hindsight reward is in principle decreased with the distribution dissimilarity. We call the reconstructed hindsight rewards decayed hindsights.

- 2.

Nevertheless, obtaining

is non-trivial, as the estimation of

can be intractable for hindsight experience

. As most of the pseudo goals will not be further explored, we instead consider the ability of the current policy

to reproduce the sampled trajectory containing transition

, given the original goal

and the hindsight goal

. Under mild conditions, we have

where

is the sampled trajectory, and both the probability of sample

with

f and the probability of sample

with

h are omitted. (For simplicity, we denote

as

.) Concretely, we take

For fear of high variance, we constrain in the implementation. In future work, we may pay attention to further easing the computation burden.
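The idea of decreasing a hindsight reward when the current policy reproduces the sampled trajectory poorly under the hindsight goal can be sketched as follows. This is a hypothetical illustration, not the authors' exact expression: the log-likelihood gap used as the dissimilarity, the clipping range, the coefficient `coef` and the function name are all assumptions.

```python
import numpy as np


def decayed_hindsight_reward(hindsight_reward, logp_original_goal,
                             logp_hindsight_goal, coef=0.1, max_gap=5.0):
    """Subtract a clipped dissimilarity term from a hindsight reward.

    logp_original_goal / logp_hindsight_goal: per-step log-probabilities
    of the sampled actions under the current policy, conditioned on the
    original goal and on the hindsight goal respectively.
    The dissimilarity below (a clipped log-likelihood gap) is an
    assumption chosen for illustration.
    """
    gap = float(np.sum(logp_original_goal) - np.sum(logp_hindsight_goal))
    # Clipping bounds the penalty and limits variance.
    dissimilarity = float(np.clip(gap, 0.0, max_gap))
    return hindsight_reward - coef * dissimilarity


# The trajectory is less likely under the hindsight goal: reward decays.
decayed = decayed_hindsight_reward(1.0, [-1.0, -1.0], [-2.0, -2.0])
# The trajectory is more likely under the hindsight goal: no decay.
unchanged = decayed_hindsight_reward(1.0, [-2.0, -2.0], [-1.0, -1.0])
```

Clipping keeps the modified reward bounded, in line with the boundedness requirement discussed above.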

After obtaining

and appending

in the value updates, we modify the vanilla algorithm for solving parking-v0 into TD3 with DH. In Figure 2b, we also plot the averaged value estimations and returns over 50 pairs at each epoch. We can see that the overestimation progressively disappears. As the learning becomes stable, the value estimations match well with the returns. Compared to Figure 2a, the returns are higher than those obtained by TD3 without decayed hindsights.

Figure 2 shows that the hindsight bias does exist and can be decreased by our proposed decayed hindsights.

3.4. Decayed-HER

Figure 3 presents the overall framework for Decayed-HER.

As shown in the framework, the training process runs along each module in a loop:

Given the desired goal g at the start of each episode, AGENT interacts with ENV with a goal-conditioned policy and stores the generated trajectory into BUFFER.

Sample a trajectory from BUFFER and RELABEL experiences with hindsight goals and the resulting hindsight rewards. Here, we show a simple strategy for sampling replay goals: first, we randomly sample a state and a future state that follows it along the same trajectory. Then, we take the goal representation of the future state as the hindsight goal and store the relabeled experience. If the decayed hindsights module is enabled, the hindsight rewards will be modified.

OPTIMIZER samples experiences (that may be a mixed batch of original experiences and hindsight experiences) from BUFFER to update the policy of AGENT.
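The RELABEL step of the loop above can be sketched as follows. This is a minimal illustration assuming that a trajectory is stored as a list of dictionaries; the key names, `relabel_future`, `state_to_goal` and `reward_fn` are hypothetical, and the decayed hindsights module is left out.

```python
import numpy as np


def relabel_future(trajectory, state_to_goal, reward_fn, rng):
    """Relabel one transition with a goal taken from a future state.

    trajectory: list of dicts with keys 'state', 'action', 'next_state'
        and 'goal' (an assumed storage layout).
    state_to_goal: mapping from a state to its goal representation.
    reward_fn: sparse reward computed from (next_state, goal).
    """
    t = rng.integers(len(trajectory))
    # The future index is drawn from the same trajectory, at or after t.
    future = rng.integers(t, len(trajectory))
    hindsight_goal = state_to_goal(trajectory[future]["next_state"])
    step = trajectory[t]
    return {
        "state": step["state"],
        "action": step["action"],
        "next_state": step["next_state"],
        "goal": hindsight_goal,
        "reward": reward_fn(step["next_state"], hindsight_goal),
    }


# Toy 1-d trajectory moving right by one unit per step.
trajectory = [
    {"state": np.array([float(i)]), "action": np.array([1.0]),
     "next_state": np.array([float(i + 1)]), "goal": np.array([10.0])}
    for i in range(4)
]
reached = lambda state, goal: 0.0 if np.linalg.norm(state - goal) <= 0.05 else -1.0
relabeled = relabel_future(trajectory, lambda s: s.copy(), reached,
                           np.random.default_rng(0))
```

The original goal of 10.0 is never reached in this toy trajectory, while the relabeled goal is reached by construction at the sampled future step.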

We apply DH to the decayed hindsights module, which is significantly different from other HER algorithms and is indispensable to the loop for unbiased objective

. Subsequently, we present the resulting Decayed-HER, which can incorporate decayed hindsights with any goal-conditioned off-policy RL algorithm, as shown in Algorithm 1.

Algorithm 1. Decayed hindsight experience replay.

3.5. Offline Decayed-HER

While Algorithm 1 works for online multi-goal tasks directly, we consider the possibility of applying it in offline settings, where it learns from a fixed dataset without further exploring the environment. Since there is no explicit goal in the locomotion tasks, we take the final states of expert trajectories with high returns as goals.

The offline dataset can be regarded as a static replay buffer with trajectories pre-generated by diverse behavior policies. Without loss of generality, let

denote a universal behavior policy. In off-policy RL, the target policy

can learn from experiences generated by any behavior policy as long as if

, we have

for each

. Conversely, if any state-action pair is unavailable for any behavior policy, there will be an approximation error in the estimation of

. One of the great challenges for offline RL is to address the problem of potentially learning from unavailable state-action pairs, i.e., out-of-distribution (OOD) data. Most existing offline RL algorithms adopt the conventional value-based framework but regularize the policy learning to match the behavioral data to mitigate this problem. One stream of works [49,50,51] avoids OOD state–action value evaluation by constraining the target policy within the distribution or support of the behavioral data. In general, these offline RL algorithms define the penalized value function as

where

D is a divergence function between distributions over actions (such as KL divergence),

is the penalization factor. It requires a pre-estimated cloned policy

since we do not have access to any

.
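A behavior-regularized penalty of this kind can be sketched as below. This is a minimal illustration assuming diagonal Gaussian policies, KL divergence as the divergence function, and a penalty subtracted from the per-step reward; the function names and the per-step form are assumptions rather than the authors' definition.

```python
import numpy as np


def gaussian_kl(mean_p, std_p, mean_q, std_q):
    """KL(p || q) between diagonal Gaussians, summed over action dims."""
    mean_p, std_p = np.asarray(mean_p, float), np.asarray(std_p, float)
    mean_q, std_q = np.asarray(mean_q, float), np.asarray(std_q, float)
    per_dim = (np.log(std_q / std_p)
               + (std_p ** 2 + (mean_p - mean_q) ** 2) / (2.0 * std_q ** 2)
               - 0.5)
    return float(np.sum(per_dim))


def penalized_reward(reward, divergence, alpha):
    """Subtract the scaled divergence from the reward (assumed form)."""
    return reward - alpha * divergence


# Target policy N(0, 1) against a cloned behavior policy N(1, 1).
divergence = gaussian_kl([0.0], [1.0], [1.0], [1.0])
penalized = penalized_reward(1.0, divergence, alpha=0.2)
```

The cloned policy stands in for the unknown behavior policy; the closer the target policy stays to it, the smaller the penalty.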

Based on the realization of

, we provide a practical realization of

D in terms of a

T-step trajectory

. Concretely, we take

for hindsight experience

e. The first term in

shows that we decrease the original

r with the dissimilarity. The second term represents the punishment proportional to

, which gets rid of the estimation of

.

5. Discussion and Conclusions

In recent years, the rapid development of neural networks has made it possible to efficiently solve high-dimensional continuous control tasks. The approximation errors introduced by the neural networks come from different sources. In value-based RL, function approximation errors lead to overestimated value estimates and sub-optimal policies [47]. In our work, we focus on another approximation error, which arises from the abuse of hindsights and widely exists in HER [19]. While existing works on hindsight bias neglect the effect of distribution shift on rewards, we instead derive a surrogate objective with modified rewards, i.e., decayed hindsights.

In this paper, we propose decayed hindsights to ease the overestimation caused by regenerating hindsight experience. We analyze the hindsight bias in vanilla HER and approximate an unbiased MDP with decayed rewards. This leads to an efficient, bias-reduced and consistent multi-goal hindsight replay, namely Decayed-HER. With its help, continuous control with sparse rewards in high dimensions can be consistently and effectively solved via multi-goal RL. We incorporate decayed hindsights with both online multi-goal replay and offline behavior-regularized policies. Experiments show that it achieves high performance and stabilizes learning from sparse rewards. By framing typical offline RL as goal-conditioned RL, we are able to apply goal-conditioned policies to tasks without explicit goals.

In future work, we will further explore the hindsight bias in goal-conditioned self-imitation methods, which adopt a similar idea to hindsight replay but can be realized in a policy-based manner [40,41,42,43]. The hindsight bias, which reflects the difference between the estimated value and the actual return, may lead to unstable and uncontrollable policy updates. It is essential to counter the bias directly, in addition to applying trust region policy optimization [9,55].