Analyzing and Improving the Performance of a Particulate Matter Low Cost Air Quality Monitoring Device

Abstract

1. Introduction

2. Experiments

2.1. Experimental Setup

2.2. Exploratory Data Analysis and Preprocessing

2.3. Advanced Data Analysis: Self-Organizing Maps (SOMs)

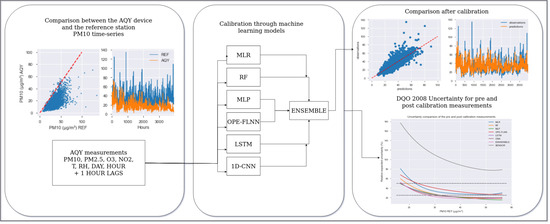

2.4. Statistical Machine Learning Algorithms

2.4.1. Multiple Linear Regression (MLR)

2.4.2. Random Forest (RF)

2.4.3. Multilayer Perceptron (MLP)

2.4.4. Convolutional Neural Networks for Time Series (CNNs)

2.4.5. Long Short-Term Memory Neural Networks (LSTMs)

2.4.6. Orthogonal Polynomial Expanded, Functional Link, Neural Networks (OPE-FLNNs)

2.4.7. Averaging Ensemble (ENSEMBLE)

2.5. Metrics

2.6. Target Diagram

2.7. Relative Expanded Uncertainty

3. Results

3.1. Exploratory Data Analysis (EDA)

3.2. LCAQMD Evaluation

3.3. Self-Organizing Maps

3.4. Computational Intelligence Calibration

3.5. Relative Expanded Uncertainty and Target Diagram

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Metric | Symbol | Formula |
|---|---|---|
| Coefficient of determination | R2 | |
| Mean absolute error | MAE | |
| Root mean square error | RMSE | |
| Unbiased root mean squared distance | uRMSD | |
| Bias | B | |
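
The Formula column of the metrics table did not survive extraction. As a minimal sketch, the metrics can be computed with their conventional textbook definitions, which are an assumption here and may differ in detail from the paper's own formulation (for example in the sign convention of the bias). The function name `evaluation_metrics` and the sample values are hypothetical.

```python
import math


def evaluation_metrics(obs, pred):
    """Conventional skill metrics for paired reference (obs) and sensor (pred) values.

    Assumed definitions (not taken from the paper):
      R2    = 1 - SS_res / SS_tot
      R     = Pearson correlation coefficient
      MAE   = mean(|pred - obs|)
      RMSE  = sqrt(mean((pred - obs)^2))
      uRMSD = sqrt(mean(((pred - mean(pred)) - (obs - mean(obs)))^2))
      bias  = mean(pred) - mean(obs)
    """
    n = len(obs)
    if n != len(pred) or n < 2:
        raise ValueError("obs and pred must have the same length (at least 2)")

    mean_o = sum(obs) / n
    mean_p = sum(pred) / n
    err = [p - o for o, p in zip(obs, pred)]

    ss_res = sum(e * e for e in err)                 # residual sum of squares
    ss_tot = sum((o - mean_o) ** 2 for o in obs)     # spread of the reference
    ss_prd = sum((p - mean_p) ** 2 for p in pred)    # spread of the sensor
    cov = sum((o - mean_o) * (p - mean_p) for o, p in zip(obs, pred))

    return {
        "R2": 1.0 - ss_res / ss_tot,
        "R": cov / math.sqrt(ss_tot * ss_prd),
        "MAE": sum(abs(e) for e in err) / n,
        "RMSE": math.sqrt(ss_res / n),
        # RMSD after removing the mean of each series
        "uRMSD": math.sqrt(
            sum(((p - mean_p) - (o - mean_o)) ** 2 for o, p in zip(obs, pred)) / n
        ),
        "bias": mean_p - mean_o,
    }


# Hypothetical data: a sensor that tracks the reference perfectly
# but reads 25 units too high.
metrics = evaluation_metrics([10, 20, 30, 40], [35, 45, 55, 65])
```

With these definitions RMSE² = bias² + uRMSD², which is the decomposition a target diagram plots. In the hypothetical example the sensor is perfectly correlated with the reference (R = 1) yet R2 = −4, because the constant offset makes the residuals larger than the variance of the reference. Under this assumed definition, a negative R2 alongside a positive R, as reported for PM10 in the evaluation table, is therefore not contradictory.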

| | NO2 REF | NO2 AQY | O3 REF | O3 AQY | PM2.5 AQY | PM10 REF | PM10 AQY | TEMP | RH |
|---|---|---|---|---|---|---|---|---|---|
| NO2 REF | 1 | 0.04 | −0.65 | −0.42 | 0.20 | 0.43 | 0.26 | 0 | −0.01 |
| NO2 AQY | 0.04 | 1 | 0.35 | −0.05 | −0.08 | 0.03 | −0.10 | 0.61 | −0.45 |
| O3 REF | −0.65 | 0.35 | 1 | 0.62 | −0.21 | −0.29 | −0.25 | 0.30 | −0.42 |
| O3 AQY | −0.42 | −0.05 | 0.62 | 1 | −0.08 | −0.36 | −0.12 | −0.21 | −0.27 |
| PM2.5 AQY | 0.20 | −0.08 | −0.21 | −0.08 | 1 | 0.49 | 0.92 | −0.31 | 0.21 |
| PM10 REF | 0.43 | 0.03 | −0.29 | −0.36 | 0.49 | 1 | 0.64 | 0.03 | 0.06 |
| PM10 AQY | 0.26 | −0.10 | −0.25 | −0.12 | 0.92 | 0.64 | 1 | −0.32 | 0.17 |
| TEMP | 0 | 0.61 | 0.30 | −0.21 | −0.31 | 0.03 | −0.32 | 1 | −0.49 |
| RH | −0.01 | −0.45 | −0.42 | −0.27 | 0.21 | 0.06 | 0.17 | −0.49 | 1 |

| | R2 | R | MAE | RMSE | R2 | R | MAE | RMSE | R2 | R | MAE | RMSE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NO2 | −3.02 | 0.04 | 16.76 | 19.84 | −3.32 | 0.14 | 17.49 | 20.03 | −2.71 | 0.0 | 15.96 | 19.58 |
| O3 | 0.20 | 0.62 | 18.16 | 22.17 | 0.11 | 0.77 | 20.71 | 24.35 | 0.31 | 0.61 | 15.73 | 19.84 |
| PM10 | −1.28 | 0.64 | 15.39 | 17.77 | −0.31 | 0.79 | 12.55 | 14.65 | −2.68 | 0.50 | 18.22 | 20.41 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bagkis, E.; Kassandros, T.; Karteris, M.; Karteris, A.; Karatzas, K. Analyzing and Improving the Performance of a Particulate Matter Low Cost Air Quality Monitoring Device. Atmosphere 2021, 12, 251. https://doi.org/10.3390/atmos12020251