1. Introduction

In recent years, medical data mining has gained recognition, and the development of class prediction models in particular has attracted great interest. The classification of medical datasets arises in many applications, such as medical diagnostic tests for diseases and gene expression tests. Medical diagnosis identifies a patient's disease from the given symptoms and physical examinations. Gene expression tests predict the probability of disease based on the genes associated with a phenotype or disease. For diseases such as liver cancer, lung cancer, breast cancer, and gastric cancer, early diagnosis or prediction is vital, because it can prevent or stop the disease from developing and save precious time. Hence, developing a powerful prediction model is considered a primary task in medical data mining. However, medical datasets often suffer from an imbalanced class distribution: positive outcomes are rare compared with negative outcomes, and the class of interest is the minority class rather than the majority class.

Imbalanced datasets are considered a critical issue in data mining and machine learning. Conventional classifiers generally predict the majority class well but fail to detect the minority class, because they are designed to maximize overall accuracy and assume that misclassification costs are equal.

The support vector machine (SVM) is one of the most popular classification algorithms and has been reported to outperform other classification methods on high-dimensional datasets with numerical features [1]. Because it can handle nonlinear and high-dimensional problems, it performs well on many different datasets. The standard SVM is formulated as follows:

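Primal problem:

$$\min_{w,\,b,\,\xi}\ \frac{1}{2}\|w\|^{2}+C\sum_{i=1}^{n}\xi_i \quad \text{subject to}\quad y_i\left(w^{T}\phi(x_i)+b\right)\ge 1-\xi_i,\quad \xi_i\ge 0,\quad i=1,\dots,n,$$

where $w$ is an orthogonal vector to the hyperplane $w^{T}\phi(x)+b=0$, $C$ is the cost of misclassification, $b$ is the bias, $\phi$ is a mapping function, and $\xi_i$ is a slack variable. Slack variables measure the error incurred by data points on the wrong side of the hyperplane or within the margin. Hence, the classifier can be written as $f(x)=w^{T}\phi(x)+b$, and the predicted label is $\hat{y}=\operatorname{sign}(f(x))$.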

Because it is difficult to solve the primal problem directly when the mapping function is not the identity function, we need to convert the primal problem into the dual problem:

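Dual problem:

$$\max_{\alpha}\ \sum_{i=1}^{n}\alpha_i-\frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j y_i y_j K(x_i,x_j) \quad \text{subject to}\quad \sum_{i=1}^{n}\alpha_i y_i=0,\quad 0\le\alpha_i\le C,\quad i=1,\dots,n,$$

where $\alpha_i$ is a Lagrange multiplier, and $K(x_i,x_j)=\phi(x_i)^{T}\phi(x_j)$ is a kernel function. Common choices for kernel functions are the linear function $K(x_i,x_j)=x_i^{T}x_j$, the polynomial function $K(x_i,x_j)=(\gamma x_i^{T}x_j+r)^{d}$ with offset $r$ and degree $d$, and the Gaussian radial basis function $K(x_i,x_j)=\exp\left(-\gamma\|x_i-x_j\|^{2}\right)$. In particular, the Gaussian radial basis function is very popular in the SVM. In general, $K(x_i,x_j)$ can be considered a similarity measure between two data points $x_i$ and $x_j$. In the dual standard SVM, the classifier can be rewritten as $f(x)=\sum_{i=1}^{n}\alpha_i y_i K(x_i,x)+b$.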
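As a rough illustration of the dual-form classifier, the sketch below evaluates a decision value as a kernel-weighted sum over support vectors. It assumes the common parametrization of the Gaussian kernel, exp(-gamma * ||x - z||^2); the function names and the toy support vectors, multipliers, and bias are hypothetical and do not come from a trained model.

```python
import math

def rbf_kernel(x, z, gamma):
    """Gaussian RBF kernel, assuming the form exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def decision_value(x, support_vectors, alphas, labels, b, gamma):
    """Dual-form decision value: sum_i alpha_i * y_i * K(x_i, x) + b."""
    return sum(
        a * y * rbf_kernel(sv, x, gamma)
        for sv, a, y in zip(support_vectors, alphas, labels)
    ) + b

def predict(x, support_vectors, alphas, labels, b, gamma):
    """Predicted label is the sign of the decision value (ties go to +1)."""
    return 1 if decision_value(x, support_vectors, alphas, labels, b, gamma) >= 0 else -1

# Hand-picked toy values for illustration only (not a fitted model).
support_vectors = [(0.0, 0.0), (2.0, 2.0)]
alphas = [1.0, 1.0]
labels = [-1, 1]
b = 0.0
gamma = 0.5

pred_near_pos = predict((2.0, 2.0), support_vectors, alphas, labels, b, gamma)
pred_near_neg = predict((0.0, 0.0), support_vectors, alphas, labels, b, gamma)
```

Each support vector pulls the decision value toward its own label, with a weight that decays as the query point moves away from it.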

Although the standard SVM is a powerful tool for classification, it still has some drawbacks:

The hyperplane used in the SVM algorithm will skew toward the minority class if the training dataset is imbalanced. The objective of the conventional SVM is to maximize the overall accuracy and an equal misclassification cost is assumed in the classifiers.

The performance of the SVM highly depends on the parameter selection and its kernel selection. In general, it can be very time consuming to optimize its parameters by using a grid search.

The SVM is based on structural risk minimization (SRM) and aims to maximize the margin while minimizing the misclassification error. As a consequence, to lower the misclassification error, the hyperplane skews toward the minority class on an imbalanced dataset, so the SVM tends to misclassify new observations as the majority class. So far, the common solutions to this problem are re-sampling, cost-sensitive learning, and threshold adjustment.

Re-sampling modifies the dataset to improve its balance and falls into two groups: under-sampling (such as one-sided selection [2]) and over-sampling (such as SMOTE [3] and borderline SMOTE [4]). Although re-sampling is the easiest way to improve performance, it has drawbacks: under-sampling may discard valuable information, and over-sampling increases the completion time.
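To make the over-sampling idea concrete, here is a minimal sketch of the interpolation step used by SMOTE-style methods: a synthetic minority point is placed on the segment between a minority sample and one of its nearest minority neighbours. The function name, the neighbour count `k`, and the toy points are assumptions for illustration; this is not the reference SMOTE implementation.

```python
import random

def smote_like_oversample(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # Rank the other minority points by squared distance to x.
        others = [p for p in minority if p is not x]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(x, p)))
        neighbour = rng.choice(others[:k])
        # Interpolate at a random position u in [0, 1) along the segment.
        u = rng.random()
        synthetic.append(tuple(a + u * (b - a) for a, b in zip(x, neighbour)))
    return synthetic

# Toy minority class (hypothetical points on the unit square).
minority_points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_points = smote_like_oversample(minority_points, n_new=6)
```

Because every synthetic point must be stored and used in training, the training set grows with `n_new`, which is the source of the extra completion time mentioned above.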

Cost-sensitive learning [5] adjusts the misclassification costs of the majority class and the minority class. The ratio of the two costs can be determined by the inverse of the imbalance ratio (IR), defined as the ratio of the number of samples in the majority class to that in the minority class [6], by the PSO algorithm [7], or by information entropy [8]. However, some researchers do not recommend cost-sensitive learning. They argue that the improvement is limited because the Karush–Kuhn–Tucker (KKT) conditions take the penalty constants as the upper bounds of the misclassification costs [9].
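The inverse-IR rule can be illustrated with a short sketch. It assumes a binary problem and keeps the majority-class cost at a base value, so that the majority-to-minority cost ratio equals 1/IR; the function name and the `base_cost` parameter are hypothetical.

```python
from collections import Counter

def inverse_ir_costs(labels, minority_label, base_cost=1.0):
    """Per-class misclassification costs with cost ratio (majority / minority) = 1 / IR."""
    counts = Counter(labels)
    n_min = counts[minority_label]
    n_maj = len(labels) - n_min  # binary problem assumed
    ir = n_maj / n_min
    # Errors on the minority class are penalized IR times more heavily.
    return {"IR": ir, "C_majority": base_cost, "C_minority": base_cost * ir}

# Toy labels: 90 majority (-1) and 10 minority (+1) samples.
toy_labels = [-1] * 90 + [1] * 10
costs = inverse_ir_costs(toy_labels, minority_label=1)
```

With 90 majority and 10 minority samples, IR is 9, so an error on a minority sample costs nine times as much as an error on a majority sample.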

Threshold adjustment modifies the threshold or decision value. Existing methods are based on a rule of thumb [10], Fisher’s discriminant analysis [11], the midpoint between two-class data points using ensemble learning [12], and the

score of the k-fold cross validation [13]. To evaluate the efficiency of classification, we consider the most time-consuming method, proposed by Brank et al. (2003) [13], but we adjust the threshold based on the G-mean of five-fold cross validation and rename it the CV-THR SVM.
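A G-mean-based threshold adjustment can be sketched as follows. The sketch assumes that decision values for held-out samples are already available (for example, collected across cross-validation folds) and simply scans the observed values as candidate thresholds; the function names and toy scores are hypothetical and this is not the exact CV-THR procedure.

```python
import math

def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of sensitivity and specificity."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    sens = tp / (tp + fn) if tp + fn else 0.0
    spec = tn / (tn + fp) if tn + fp else 0.0
    return math.sqrt(sens * spec)

def best_threshold(scores, y_true):
    """Pick the threshold t (predict +1 when score >= t) that maximizes the G-mean."""
    best_t, best_g = 0.0, -1.0
    for t in sorted(set(scores)):
        y_pred = [1 if s >= t else -1 for s in scores]
        g = g_mean(y_true, y_pred)
        if g > best_g:  # strict comparison keeps the smallest maximizing threshold
            best_t, best_g = t, g
    return best_t, best_g

# Toy held-out decision values: two minority (+1) samples fall below the default threshold 0.
toy_scores = [-2.0, -1.5, -1.0, -0.8, -0.5, -0.3, 0.2]
toy_truth = [-1, -1, -1, -1, 1, 1, 1]
threshold, score = best_threshold(toy_scores, toy_truth)
```

In this toy case the default threshold of 0 detects only one of the three minority samples, whereas moving the threshold to -0.5 separates the two classes.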

Tuning the parameters is one of the most critical steps in training the model, and a grid search is the simplest method. However, optimizing the parameters of a nonlinear SVM by grid search is time consuming [14,15]. Recently, many optimization algorithms have been proposed to reduce the completion time, such as particle swarm optimization (PSO) [16,17,18], the genetic algorithm (GA) [15,16,18], a linear search [14], and others [19,20,21]. These optimization algorithms are all based on k-fold cross validation, with different metrics used as the evaluation criteria. Using k-fold cross validation as the fitness function may avoid overfitting, but it is computationally expensive.

In this paper, we consider the SVM with a Gaussian radial basis kernel and

. To address the two problems above, imbalanced datasets and parameter selection, we propose a fast and simple method based on threshold adjustment, called b-SVM, to improve classification performance on imbalanced datasets. We also propose an approach, called Min-max gamma selection, to optimize the parameter $\gamma$ of SVMs without carrying out an extensive k-fold cross validation. The remaining part of this paper is organized as follows:

Section 2 describes our new methods, materials, and flowcharts.

Section 3 presents the results of the experiments and compares our approaches with other methods.

Section 4 presents the discussion and conclusions.

2. Materials and Methods

2.1. b-SVM

To deal with the high rate of false negatives, we examine why the standard SVM decision function easily becomes negative on an imbalanced dataset. First, we decompose and analyze its structure:

where

are the support vectors whose

values are less than

C and greater than 0,

are the

of minority class,

are the

of majority class, and tuning factor

:

is directly related to the . In general, the number of support vectors in the majority class is larger than in the minority class, which implies that the more imbalanced the dataset is, the more negative is. Because results in the skewness of hyperplane, we correct the hyperplane by eliminating , . Finally, b-SVM is defined as follows:

2.2. Min-Max Gamma Selection

The two parameters, $C$ and $\gamma$, play an important role in the radial basis function kernel (Gaussian kernel), and there are no exact ranges for their values. The radial basis function kernel is defined as

$$K(x_i,x_j)=\exp\left(-\gamma\|x_i-x_j\|^{2}\right),$$

where $\gamma$ is a parameter that sets how far the searching radius of the training dataset reaches. Many researchers prefer to use the G-mean from k-fold cross validation, rather than the G-mean on the training dataset, as the fitness value to avoid overfitting or underfitting, even though this requires excessive completion time. In contrast, if we take the G-mean on the training dataset as the fitness value, the selected $\gamma$ is usually larger than the optimal $\gamma$. Although this prevents underfitting and lowers the completion time, an overly large value of $\gamma$ can easily cause overfitting.

To overcome this problem, we propose a new method, called Min-max gamma selection, which selects an appropriate $\gamma$ value without carrying out k-fold cross validation. We select $\gamma$ from the set

and calculate the G-mean on the training dataset for each $\gamma$. In particular, we choose the smallest value of $\gamma$ that attains the largest training G-mean as the optimal $\gamma$, to avoid both overfitting and underfitting. The Min-max gamma selection procedure is formally presented as Algorithm 1:

| Algorithm 1: Min-max gamma selection |

![Genes 14 00583 i001]() |

It is worth mentioning that the C value affects the optimal $\gamma$ value, and based on our previous experiments, we suggest setting or for Min-max gamma selection.
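The selection rule described in the text (take the smallest gamma among those that reach the largest training G-mean) can be sketched as below. This follows only the prose description, not the original Algorithm 1 listing. The function name, the tolerance `tol`, the candidate grid, and the toy G-mean values are all hypothetical; `train_g_mean` stands for any callable that fits an RBF SVM with the given gamma and returns its G-mean on the training data.

```python
def min_max_gamma(candidate_gammas, train_g_mean, tol=1e-12):
    """Return the smallest gamma whose training G-mean ties the maximum."""
    scores = {gamma: train_g_mean(gamma) for gamma in candidate_gammas}
    best_score = max(scores.values())
    # Among all gammas reaching the best score, prefer the smallest one,
    # since larger gamma values are more prone to overfitting.
    return min(g for g, s in scores.items() if s >= best_score - tol)

# Hypothetical training G-means on an illustrative grid (not values from the paper).
toy_train_scores = {0.01: 0.70, 0.1: 0.92, 1.0: 1.0, 10.0: 1.0}
selected_gamma = min_max_gamma(toy_train_scores.keys(), toy_train_scores.get)
```

Here both 1.0 and 10.0 reach the maximal training G-mean, and the rule keeps 1.0. Each candidate requires a single model fit, rather than k fits per candidate as in k-fold cross validation.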

2.3. Performance Measures

Accuracy can be a misleading measure when evaluating classifiers on imbalanced datasets. Therefore, we consider an alternative measure, the G-mean, which measures the ability of a classifier to balance sensitivity and specificity and has been widely used for imbalanced datasets, where G-mean $=\sqrt{\text{sensitivity}\times\text{specificity}}$, sensitivity $=\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})$, and specificity $=\mathrm{TN}/(\mathrm{TN}+\mathrm{FP})$ (TP = true positive; FN = false negative; TN = true negative; FP = false positive). To evaluate whether the performance between two methods is significantly different, the paired t-test with significance level of is conducted for comparing paired classification results.
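A small worked example shows why accuracy can mislead on imbalanced data. The sketch below computes accuracy, sensitivity, specificity, and G-mean from confusion counts; the function names and the toy labels are illustrative assumptions.

```python
import math

def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FN, TN, FP) with `positive` as the minority/positive label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    return tp, fn, tn, fp

def imbalance_report(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, specificity, and G-mean for a binary problem."""
    tp, fn, tn, fp = confusion_counts(y_true, y_pred, positive)
    sens = tp / (tp + fn) if tp + fn else 0.0
    spec = tn / (tn + fp) if tn + fp else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": sens,
        "specificity": spec,
        "g_mean": math.sqrt(sens * spec),
    }

# Toy case: 95 negatives, 5 positives, and a classifier that always predicts negative.
truth = [-1] * 95 + [1] * 5
always_negative = [-1] * 100
report = imbalance_report(truth, always_negative)
```

The always-negative classifier reaches 95% accuracy while detecting none of the positive cases, so its G-mean is 0.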

2.4. Simulation Study

We generate low-dimensional and high-dimensional datasets to evaluate the classification performance of SVMs. Low/(high)-dimensional datasets are generated as follows: Each observation has 30 (1000) features. Among all the features, 20 (900) are non-informative features, each following independently for both classes. The remaining 10 (100) are informative features following k-dimensional multivariate normal distributions and for the majority class and the minority class, respectively, where k is the number of informative features, , , and . In this simulation, we assume that informative features are equi-correlated with correlation and the degree of imbalance is quantified using the imbalance ratio (IR), which is represented as the ratio between the number of samples in the majority and minority classes. For each simulation experiment, we generate the training dataset of 60 and 200 samples, respectively, and the testing dataset of 2000 samples, with different . In the end, we have 48 datasets, and each simulation is repeated 50 times. We use testing datasets to evaluate the classification performance of SVMs and ensure good statistical behavior.
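The data-generating scheme can be sketched in a few lines. Because the distribution parameters are not recoverable from the text here, every numeric value below is a placeholder assumption: standard normal non-informative features, unit-variance informative features, a mean shift `shift` for the minority class, and correlation `rho`. Equi-correlation is obtained through a shared latent factor, which is one standard construction and not necessarily the one used in the study.

```python
import random

def simulate(n, ir, n_informative=10, n_noise=20, shift=1.0, rho=0.5, seed=0):
    """Generate an imbalanced two-class dataset with equi-correlated informative features.

    shift and rho are hypothetical values chosen for illustration only.
    """
    rng = random.Random(seed)
    n_min = max(1, round(n / (ir + 1)))  # minority size implied by the imbalance ratio
    n_maj = n - n_min
    X, y = [], []
    for label, count, mean in ((-1, n_maj, 0.0), (1, n_min, shift)):
        for _ in range(count):
            shared = rng.gauss(0, 1)  # latent factor shared by all informative features
            informative = [
                mean + rho ** 0.5 * shared + (1 - rho) ** 0.5 * rng.gauss(0, 1)
                for _ in range(n_informative)
            ]
            noise = [rng.gauss(0, 1) for _ in range(n_noise)]  # same law in both classes
            X.append(informative + noise)
            y.append(label)
    return X, y

# Low-dimensional training set of 60 samples with an illustrative IR of 5.
X_train, y_train = simulate(n=60, ir=5)
```

With the shared-factor construction, each informative feature has unit variance and any two informative features have correlation `rho`, provided `rho` lies between 0 and 1.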

2.5. Real Datasets

Six benchmark datasets are used to assess the performance of SVMs; the first two are low-dimensional, and the rest are high-dimensional.

Table 1 shows the summary of these real datasets. We use ten-fold cross validation and repeat this process 30 times to evaluate the classification performance.

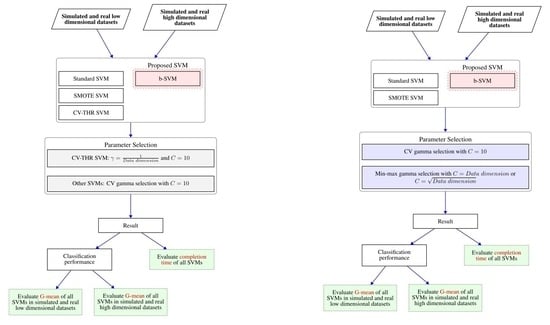

2.6. Flow Chart for Experiments

First, we compare our proposed method, b-SVM, with three conventional SVMs (standard SVM, SMOTE SVM, and CV-THR SVM) in a simulation study and on real datasets. For parameter selection, we employ the default $C$ and $\gamma$ for CV-THR SVM without carrying out parameter selection, because CV-THR SVM is too time-consuming. For the remaining SVMs, we employ the default $C$ and the optimal $\gamma$ based on five-fold cross validation (CV gamma selection). CV gamma selection is the most common method for determining this parameter, even though it requires significant computation time. Second, with respect to gamma selection, we consider only three SVMs (standard SVM, SMOTE SVM, and b-SVM) and compare our approach, Min-max gamma selection (Algorithm 1), with the common CV gamma selection method. The flow chart is shown in Figure 1.