Extraction of Tobacco Planting Information Based on UAV High-Resolution Remote Sensing Images

Abstract

1. Introduction

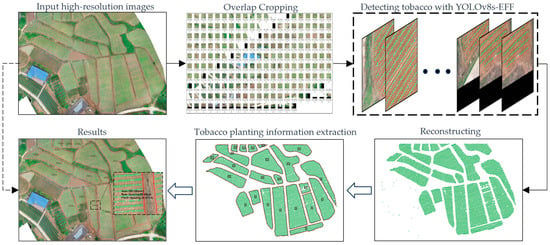

- For large-area monitoring of tobacco planting, we propose a UAV image-based method for extracting tobacco planting information. The method identifies and locates small, densely planted tobacco plants and simultaneously obtains the field area, row spacing, and plant spacing.

- To detect small, densely planted tobacco, we built YOLOv8s-EFF, a tobacco detection model based on YOLOv8s. YOLOv8s-EFF integrates SENet and ASFF to learn finer-grained features from tobacco images, which improves tobacco detection performance.

- We propose a planting distance extraction algorithm that computes plant spacing and row spacing from the coordinates of detected tobacco plants. A quantile method with a specific threshold is applied to detect outliers, which mitigates the effect of over- and under-detection in densely planted fields or fields of small plants and improves the accuracy of the results.

- In real environments, tobacco fields vary in shape because of planting methods, topography, and other factors. We construct an algorithm that extracts tobacco field area based on expanding contours; it accurately extracts the contours and areas of tobacco fields of different shapes from the discrete coordinates of detected tobacco plants.
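The SE block of Hu et al. [28] recalibrates feature channels in three steps: squeeze (global average pooling), excitation (two fully connected layers with ReLU and sigmoid), and scale. The NumPy sketch below shows that computation for a single (C, H, W) feature map. It is an illustration under stated assumptions, not the YOLOv8s-EFF code: the random weights, the reduction ratio r = 4, and the function name `se_block` are hypothetical, and this section does not say where the block is placed in the network.

```python
import numpy as np

def se_block(x: np.ndarray, w1: np.ndarray, b1: np.ndarray,
             w2: np.ndarray, b2: np.ndarray) -> np.ndarray:
    """Squeeze-and-Excitation recalibration of a (C, H, W) feature map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r); they are the two
    fully connected layers of the excitation step.
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = x.mean(axis=(1, 2))                       # (C,)
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a gate in (0, 1) per channel.
    s = np.maximum(w1 @ z + b1, 0.0)              # (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))      # (C,)
    # Scale: multiply every channel of the input by its gate.
    return x * s[:, None, None]

# Hypothetical example: 16 channels, 8 x 8 map, reduction ratio r = 4.
rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4
x = rng.standard_normal((C, H, W))
w1, b1 = rng.standard_normal((C // r, C)) * 0.1, np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)) * 0.1, np.zeros(C)
y = se_block(x, w1, b1, w2, b2)
print(y.shape)  # (16, 8, 8)
```

Each channel is scaled by a single learned factor, so the block can emphasize channels that respond to small tobacco plants without changing the spatial layout of the feature map.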
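ASFF (Liu et al. [29]) fuses pyramid levels with learned per-pixel weights instead of a fixed sum. The NumPy sketch below is simplified under stated assumptions. The three levels are assumed to be resized already to a common (C, H, W) shape; ASFF does this with up- or down-sampling layers. A single 1×1 projection per level produces the weight logits. The helper names and random weights are hypothetical.

```python
import numpy as np

def softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    e = np.exp(logits - logits.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def asff_fuse(levels: list[np.ndarray],
              weight_vectors: list[np.ndarray]) -> tuple[np.ndarray, np.ndarray]:
    """Adaptively fuse L feature maps that share the shape (C, H, W).

    weight_vectors[l] (shape (C,)) acts as a 1x1 convolution mapping level l
    to a single-channel map of weight logits.
    """
    # A 1x1 convolution C -> 1 is a dot product over channels at every pixel.
    logits = np.stack([np.einsum("c,chw->hw", w, f)
                       for w, f in zip(weight_vectors, levels)])   # (L, H, W)
    # Softmax over levels: at each pixel the L weights are positive and sum to 1.
    alpha = softmax(logits, axis=0)
    # Per-pixel weighted sum of the levels.
    fused = np.einsum("lhw,lchw->chw", alpha, np.stack(levels))    # (C, H, W)
    return fused, alpha

# Hypothetical example: three levels already resized to 8 x 20 x 20.
rng = np.random.default_rng(0)
C, H, W = 8, 20, 20
levels = [rng.standard_normal((C, H, W)) for _ in range(3)]
weights = [rng.standard_normal(C) for _ in range(3)]
fused, alpha = asff_fuse(levels, weights)
```

Because the weights at each pixel sum to 1, fusing three identical maps returns that map unchanged, which is a quick sanity check. The per-pixel weighting lets a location dominated by small plants draw mostly on the finer level.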

2. Materials and Methods

2.1. Experimental Area

2.2. Data and Preprocessing

2.3. Methods

2.3.1. Overall Process for Extracting Tobacco Planting Information

2.3.2. Object Detection Model and Tobacco Field Region Division

2.3.3. Planting Distance Extraction Algorithm and Outlier Detection

2.3.4. Extracting Tobacco Field Area Based on Expanding Contour

- Traverse the Delaunay triangulation, compute the circumradius of each triangle, and eliminate the triangles whose circumradius is greater than the alpha value.

- Among the remaining triangles, retain the edges that appear only once and connect them end to end in counterclockwise order to obtain the initial contour of the tobacco field.
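The two steps above can be sketched in plain Python, followed by a shoelace-formula area of the resulting contour. The sketch takes the triangulation as index triples; in practice these could come from `scipy.spatial.Delaunay(points).simplices`. It assumes the retained edges form a single simple outline with no holes or separate patches. It does not reproduce the paper's subsequent contour expansion. The function names and the example triangulation are hypothetical.

```python
import math
from collections import Counter, defaultdict

Point = tuple[float, float]
Triangle = tuple[int, int, int]

def circumradius(a: Point, b: Point, c: Point) -> float:
    """Circumscribed-circle radius R = |ab| * |bc| * |ca| / (4 * area)."""
    twice_area = abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))
    if twice_area == 0.0:
        return math.inf  # collinear points: treat as an infinitely large circle
    return math.dist(a, b) * math.dist(b, c) * math.dist(c, a) / (2.0 * twice_area)

def boundary_edges(points: list[Point], triangles: list[Triangle],
                   alpha: float) -> list[tuple[int, int]]:
    """Drop triangles with circumradius > alpha; keep edges used exactly once."""
    counts: Counter = Counter()
    for tri in triangles:
        if circumradius(*(points[i] for i in tri)) > alpha:
            continue
        i, j, k = tri
        for edge in ((i, j), (j, k), (k, i)):
            counts[tuple(sorted(edge))] += 1
    return [edge for edge, n in counts.items() if n == 1]

def chain_loop(edges: list[tuple[int, int]]) -> list[int]:
    """Walk boundary edges into one closed vertex loop (single simple outline)."""
    neighbours = defaultdict(list)
    for i, j in edges:
        neighbours[i].append(j)
        neighbours[j].append(i)
    start = edges[0][0]
    loop, prev, cur = [start], None, start
    while True:
        a, b = neighbours[cur]          # every outline vertex has two neighbours
        nxt = b if a == prev else a     # continue away from where we came from
        if nxt == start:
            return loop
        loop.append(nxt)
        prev, cur = cur, nxt

def signed_area(poly: list[Point]) -> float:
    """Shoelace formula; positive when vertices are in counterclockwise order."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0

def field_outline(points: list[Point], triangles: list[Triangle],
                  alpha: float) -> tuple[list[Point], float]:
    poly = [points[i] for i in chain_loop(boundary_edges(points, triangles, alpha))]
    if signed_area(poly) < 0:
        poly.reverse()  # enforce counterclockwise order
    return poly, signed_area(poly)

# Hypothetical input: a 3 x 3 grid of plants (1 m apart) plus a stray point.
points = [(float(c), float(r)) for r in range(3) for c in range(3)] + [(10.0, 0.0)]
triangles = []
for r in range(2):
    for c in range(2):
        p = 3 * r + c
        triangles += [(p, p + 1, p + 4), (p, p + 4, p + 3)]
triangles += [(2, 9, 5), (5, 9, 8)]  # long triangles reaching the stray point 9
outline, area = field_outline(points, triangles, alpha=1.0)
print(area)  # 4.0
```

The stray point at (10, 0) belongs only to long triangles with circumradius above 4, so with alpha = 1.0 it is excluded. The outline is then the 2 × 2 square of area 4.0. With coordinates in metres, the area is in m².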

3. Results and Discussion

3.1. Tobacco Detection

3.2. Tobacco Planting Information Extraction

3.2.1. Isolated Tobacco Field

3.2.2. Composite Tobacco Fields

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned Aerial Vehicles in Agriculture: A Review of Perspective of Platform, Control, and Applications. IEEE Access 2019, 7, 105100–105115.

2. Javaid, M.; Haleem, A.; Khan, I.H.; Suman, R. Understanding the potential applications of Artificial Intelligence in Agriculture Sector. Adv. Agrochem 2023, 2, 15–30.

3. Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning–Method overview and review of use for fruit detection and yield estimation. Comput. Electron. Agric. 2019, 162, 219–234.

4. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.

5. Maktab Dar Oghaz, M.; Razaak, M.; Kerdegari, H.; Argyriou, V.; Remagnino, P. Scene and Environment Monitoring Using Aerial Imagery and Deep Learning. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 362–369.

6. Li, Z.; Mao, J.; Cao, P. Digital transformation: An inevitable choice for innovation and development of tobacco science and technology in China. Tob. Sci. Technol. 2022, 55, 1–8.

7. Shao, X.; Jiang, S.; Yan, S.; Mei, Y.; Li, X.; Pu, E.; Hou, Q.; Wang, X.; Shan, S.; Guo, J.; et al. Exploration of the Construction of Smart Tobacco Agriculture. Tob. Regul. Sci. (TRS) 2022, 1, 1652–1662.

8. Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

9. Huang, S.; Wu, S.; Sun, C.; Ma, X.; Jiang, Y.; Qi, L. Deep localization model for intra-row crop detection in paddy field. Comput. Electron. Agric. 2020, 169, 105203.

10. Zhao, J.; Zhang, X.; Yan, J.; Qiu, X.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W. A Wheat Spike Detection Method in UAV Images Based on Improved YOLOv5. Remote Sens. 2021, 13, 3095.

11. Lu, S.; Liu, X.; He, Z.; Zhang, X.; Liu, W.; Karkee, M. Swin-Transformer-YOLOv5 for Real-Time Wine Grape Bunch Detection. Remote Sens. 2022, 14, 5853.

12. Jin, X.; Sun, Y.; Che, J.; Bagavathiannan, M.; Yu, J.; Chen, Y. A novel deep learning-based method for detection of weeds in vegetables. Pest Manag. Sci. 2022, 78, 1861–1869.

13. Zhu, X.; Xiao, G.; Wen, P.; Zhang, J.; Hou, C. Mapping Tobacco Fields Using UAV RGB Images. Sensors 2019, 19, 1791.

14. Fang, H. Rice crop area estimation of an administrative division in China using remote sensing data. Int. J. Remote Sens. 1998, 19, 3411–3419.

15. Wu, B.; Li, Q. Crop planting and type proportion method for crop acreage estimation of complex agricultural landscapes. Int. J. Appl. Earth Obs. Geoinf. 2012, 16, 101–112.

16. Du, Z.; Yang, J.; Ou, C.; Zhang, T. Smallholder Crop Area Mapped with a Semantic Segmentation Deep Learning Method. Remote Sens. 2019, 11, 888.

17. De Macedo, M.M.G.; Mattos, A.B.; Oliveira, D.A.B. Generalization of Convolutional LSTM Models for Crop Area Estimation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1134–1142.

18. Huang, L.; Wu, X.; Peng, Q.; Yu, X. Depth Semantic Segmentation of Tobacco Planting Areas from Unmanned Aerial Vehicle Remote Sensing Images in Plateau Mountains. J. Spectrosc. 2021, 2021, 6687799.

19. Wang, W.; Yang, N.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A review of road extraction from remote sensing images. J. Traffic Transp. Eng. (Engl. Ed.) 2016, 3, 271–282.

20. Ayala, C.; Sesma, R.; Aranda, C.; Galar, M. A Deep Learning Approach to an Enhanced Building Footprint and Road Detection in High-Resolution Satellite Imagery. Remote Sens. 2021, 13, 3135.

21. Ghandorh, H.; Boulila, W.; Masood, S.; Koubaa, A.; Ahmed, F.; Ahmad, J. Semantic Segmentation and Edge Detection—Approach to Road Detection in Very High Resolution Satellite Images. Remote Sens. 2022, 14, 613.

22. Hoque, M.A.-A.; Phinn, S.; Roelfsema, C.; Childs, I. Tropical cyclone disaster management using remote sensing and spatial analysis: A review. Int. J. Disaster Risk Reduct. 2017, 22, 345–354.

23. Liu, Y.; Wu, L. Geological Disaster Recognition on Optical Remote Sensing Images Using Deep Learning. Procedia Comput. Sci. 2016, 91, 566–575.

24. Mallupattu, P.K.; Sreenivasula Reddy, J.R. Analysis of Land Use/Land Cover Changes Using Remote Sensing Data and GIS at an Urban Area, Tirupati, India. Sci. World J. 2013, 2013, 268623.

25. Wiatkowska, B.; Słodczyk, J.; Stokowska, A. Spatial-Temporal Land Use and Land Cover Changes in Urban Areas Using Remote Sensing Images and GIS Analysis: The Case Study of Opole, Poland. Geosciences 2021, 11, 312.

26. Gupta, P.; Christopher, S.A.; Wang, J.; Gehrig, R.; Lee, Y.; Kumar, N. Satellite remote sensing of particulate matter and air quality assessment over global cities. Atmos. Environ. 2006, 40, 5880–5892.

27. Scheibenreif, L.; Mommert, M.; Borth, D. Estimation of Air Pollution with Remote Sensing Data: Revealing Greenhouse Gas Emissions from Space. arXiv 2021, arXiv:2108.13902.

28. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

29. Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

30. Jocher, G. YOLOv8. Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 15 April 2023).

31. Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; Volume 76, pp. 226–231.

32. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 936–944.

33. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

34. Delaunay, B. Sur la sphère vide. Izv. Akad. Nauk SSSR, Otd. Mat. i Estestv. Nauk 1934, 7, 1–2.

35. Lee, D.T.; Schachter, B.J. Two algorithms for constructing a Delaunay triangulation. Int. J. Comput. Inf. Sci. 1980, 9, 219–242.

36. Jocher, G. YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 15 April 2023).

37. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

38. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

39. Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312v3.

| Experimental Areas | Original Data | Augmented Data (1×) | Training Set | Test Set |
|---|---|---|---|---|
| Chongzhou | 272 | 272 | 544 | 68 |
| Dazhai | 290 | 290 | 580 | 73 |
| Jiange | 278 | 278 | 556 | 70 |
| Shifang | 263 | 263 | 526 | 66 |
| Total | 1103 | 1103 | 2206 | 277 |

| Methods | AP0.5:0.95 | AP0.5 | AP0.75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| YOLOv8s [30] | 0.628 | 0.831 | 0.771 | 0.483 | 0.627 | 0.767 |
| YOLOv8s + SEBlock | 0.633 | 0.831 | 0.783 | 0.481 | 0.636 | 0.776 |
| YOLOv8s + ASFF | 0.642 | 0.831 | 0.787 | 0.492 | 0.638 | 0.789 |
| YOLOv8s-EFF (ours) | 0.644 | 0.831 | 0.789 | 0.498 | 0.642 | 0.785 |
| YOLOv5s [36] | 0.534 | 0.827 | 0.611 | 0.354 | 0.628 | 0.635 |
| RetinaNet-R101 [37] | 0.537 | 0.825 | 0.604 | 0.340 | 0.625 | 0.684 |
| Faster R-CNN-R101 [38] | 0.508 | 0.822 | 0.514 | 0.296 | 0.586 | 0.650 |
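The column names follow the COCO evaluation convention (see [39]). AP0.5 and AP0.75 count a detection as correct when its IoU with a ground-truth box reaches 0.5 or 0.75. AP0.5:0.95 averages AP over the ten thresholds 0.50, 0.55, ..., 0.95. APS, APM, and APL restrict evaluation to small (below 32² pixels), medium, and large (above 96² pixels) objects. The minimal sketch below shows only the IoU test and the size buckets, not full AP computation, and its names are illustrative. In the table, AP0.5 is 0.831 for all four YOLOv8s variants while AP0.75 and AP0.5:0.95 rise, which suggests that the gains appear mainly at stricter IoU thresholds, that is, from tighter boxes.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

# Thresholds averaged by AP0.5:0.95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

def size_class(box: Box) -> str:
    """COCO-style size bucket behind APS / APM / APL (area in pixels)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"

pred, truth = (1.0, 0.0, 11.0, 10.0), (0.0, 0.0, 10.0, 10.0)
overlap = iou(pred, truth)                          # 90 / 110
hits = [t for t in IOU_THRESHOLDS if overlap >= t]  # true positive at 0.50 ... 0.80
print(f"IoU={overlap:.3f}, TP at {len(hits)} of 10 thresholds, size={size_class(truth)}")
```

A box that is off by a single pixel on a 10-pixel plant already fails the stricter thresholds, which is one reason APS is lower than APM and APL for every model in the table.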

| Tobacco Field | Metric | Area | Row Spacing | Plant Spacing |
|---|---|---|---|---|
| CZ | MV | 528.12 m² | 107.3 cm | 68.9 cm |
| | CV | 533.20 m² | 107.02 cm | 67.55 cm |
| | ACC | 99.04% | 99.74% | 98.04% |
| DZ | MV | 3330.44 m² | 109.5 cm | 55.2 cm |
| | CV | 3414.06 m² | 114.72 cm | 55.67 cm |
| | ACC | 97.49% | 95.23% | 99.15% |
| JG | MV | 487.53 m² | 86.9 cm | 37.7 cm |
| | CV | 497.18 m² | 95.52 cm | 39.70 cm |
| | ACC | 98.02% | 90.08% | 94.69% |
| SF | MV | 1618.21 m² | 101.6 cm | 37.9 cm |
| | CV | 1674.62 m² | 100.09 cm | 38.47 cm |
| | ACC | 96.51% | 98.51% | 98.5% |
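The ACC rows are consistent with a relative-error definition, ACC = (1 - |CV - MV| / MV) × 100%, where MV is the reference value and CV is the value calculated by the method. This section does not state the formula or expand the abbreviations, so that reading is an inference from the numbers. The short sketch recomputes every ACC entry from the MV and CV values in the table; the names `accuracy` and `TABLE` are illustrative.

```python
def accuracy(mv: float, cv: float) -> float:
    """Relative accuracy in percent, with MV taken as the reference value."""
    return (1.0 - abs(cv - mv) / mv) * 100.0

# (field, quantity): (MV, CV, reported ACC in %), copied from the table above.
TABLE = {
    ("CZ", "area"): (528.12, 533.20, 99.04),
    ("CZ", "row"): (107.3, 107.02, 99.74),
    ("CZ", "plant"): (68.9, 67.55, 98.04),
    ("DZ", "area"): (3330.44, 3414.06, 97.49),
    ("DZ", "row"): (109.5, 114.72, 95.23),
    ("DZ", "plant"): (55.2, 55.67, 99.15),
    ("JG", "area"): (487.53, 497.18, 98.02),
    ("JG", "row"): (86.9, 95.52, 90.08),
    ("JG", "plant"): (37.7, 39.70, 94.69),
    ("SF", "area"): (1618.21, 1674.62, 96.51),
    ("SF", "row"): (101.6, 100.09, 98.51),
    ("SF", "plant"): (37.9, 38.47, 98.5),
}

for (field, quantity), (mv, cv, reported) in TABLE.items():
    print(f"{field} {quantity:<5} computed {accuracy(mv, cv):6.2f}%  reported {reported}%")
```

All twelve recomputed values round to the reported percentages. Because the error is normalized by MV, the same absolute miss weighs more on the narrow JG spacings than on the wider CZ or DZ spacings.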

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, L.; Liao, K.; Li, Y.; Li, B.; Zhang, J.; Wang, Y.; Lu, L.; Jian, S.; Qin, R.; Fu, X. Extraction of Tobacco Planting Information Based on UAV High-Resolution Remote Sensing Images. Remote Sens. 2024, 16, 359. https://doi.org/10.3390/rs16020359

He L, Liao K, Li Y, Li B, Zhang J, Wang Y, Lu L, Jian S, Qin R, Fu X. Extraction of Tobacco Planting Information Based on UAV High-Resolution Remote Sensing Images. Remote Sensing. 2024; 16(2):359. https://doi.org/10.3390/rs16020359

Chicago/Turabian Style: He, Lei, Kunwei Liao, Yuxia Li, Bin Li, Jinglin Zhang, Yong Wang, Liming Lu, Sichun Jian, Rui Qin, and Xinjun Fu. 2024. "Extraction of Tobacco Planting Information Based on UAV High-Resolution Remote Sensing Images." Remote Sensing 16, no. 2: 359. https://doi.org/10.3390/rs16020359