Identifying Winter Wheat Using Landsat Data Based on Deep Learning Algorithms in the North China Plain

Abstract

1. Introduction

| Reference | Data | Method | Application |
|---|---|---|---|
| Chu et al., 2016 [23] | MODIS | Vegetation index | Crop mapping |
| Teluguntla et al., 2017 [24] | MODIS | Quantitative spectral matching technique (QSMT) + rule-based automated cropland classification algorithm (ACCA) | Crop mapping |
| Van Tricht et al., 2018 [28] | Sentinel-1, Sentinel-2 | Random forest (RF) | Crop mapping |
| You et al., 2016 [30] | GF-1 | Spectral analysis | Crop area extraction |
| Ma et al., 2016 [31] | GF-1 | Image interpretation + GIS analysis | Crop area extraction |
| Zhang et al., 2018 [32] | GF-2 | Hybrid structure convolutional neural network (HSCNN) | Crop mapping |
| Liu et al., 2016 [35] | MODIS | Fuzzy decision tree classifier + vegetation index | Crop classification |
| Chen et al., 2012 [36] | MODIS | Spectral analysis | Crop classification |
| Sisodia et al., 2014 [39] | Landsat ETM+ | Supervised maximum likelihood classification (MLC) | Land cover classification |
| Zheng et al., 2015 [42] | Landsat OLI | Support vector machine (SVM) | Crop classification |
| Wang et al., 2022 [43] | WHU-Hi dataset | Feature transform combined with random forest (RF) | Crop classification |
| Sang et al., 2019 [44] | Landsat TM, OLI | Classification and regression trees (CART) | Land use change |

2. Materials and Methods

2.1. Study Region

2.2. Data

2.2.1. Landsat Imagery

2.2.2. Data Pre-Processing

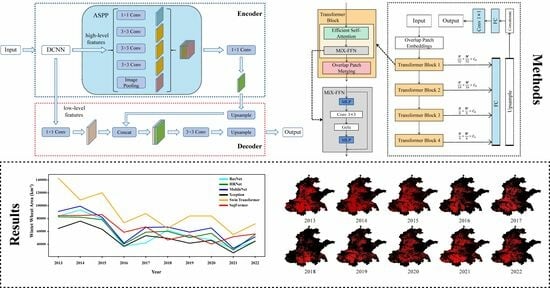

2.3. Methods

2.3.1. Random Forest Classifier

2.3.2. DeepLabv3+ and Its Improvement

2.3.3. SegFormer

- SegFormer Encoder

- SegFormer Decoder

2.4. Evaluation Metrics

2.5. Experimental Setup

3. Results

3.1. Training Efficiencies

3.2. Winter Wheat Crop Identification

3.3. Temporal and Spatial Variation Characteristics of Winter Wheat in the North China Plain

4. Discussion

- (1)

- With rapid socioeconomic development, China is undergoing rapid urbanization, and much of the farmland on the outskirts of cities has been converted to other uses; this may be another reason for the gradual decline in winter wheat area [88].

- (2)

- (3)

- The decline in winter wheat cultivation around settlements is also associated with adjustments to the cropping structure: much arable land, particularly near towns, has been repurposed for higher-value cash crops such as vegetables, flowers and medicinal herbs [91].

5. Conclusions

- (1)

- In the winter wheat identification task, both the DL methods and the RF method save time and labor compared with statistical methods. Benefiting from their deep architectures and strong feature-learning capabilities, all DL methods in our study significantly outperform the traditional RF method (mIoU of 0.6612 to 0.8194, versus 0.4962 for RF). However, performance also differs considerably among the DL methods.

- (2)

- SegFormer outperforms the other methods, achieving an mIoU of 0.8194 and an F1 score of 0.8459. It effectively distinguishes winter wheat fields from buildings and water bodies and has a particular advantage in handling edge details. SegFormer is therefore the recommended method for mapping the spatial distribution of winter wheat in the NCP from 2013 to 2022.

- (3)

- The trends in NCP winter wheat area from 2013 to 2022 differ somewhat among the DL methods, but all of them show a general downward trend. Timely knowledge of changes in winter wheat area is of great practical value to the government departments responsible for guiding agricultural production, estimating yields and adjusting agricultural structure, and helps safeguard food security.
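Area trends of this kind are derived from the classified maps. As a minimal sketch, assuming square 30 m pixels (the OLI multispectral resolution) and an ordinary least-squares slope as the measure of trend, the winter wheat area and its yearly change could be computed as below. The function names `wheat_area_km2` and `linear_trend` are hypothetical, the exact area-estimation procedure of the study is not described in this section, and the yearly values in the example are synthetic, not results from the paper.

```python
import numpy as np

def wheat_area_km2(mask, pixel_size_m=30.0):
    """Area of pixels labelled 1 (winter wheat) in a classified mask, in km^2.

    Assumption: square pixels of side `pixel_size_m`; map-projection
    distortion is ignored.
    """
    n_pixels = int(np.count_nonzero(np.asarray(mask) == 1))
    return n_pixels * pixel_size_m ** 2 / 1e6

def linear_trend(years, areas):
    """Slope (area units per year) of an ordinary least-squares line."""
    slope, _intercept = np.polyfit(np.asarray(years, dtype=float),
                                   np.asarray(areas, dtype=float), 1)
    return slope

# Synthetic 1000 x 1000 mask with roughly 40% wheat pixels.
rng = np.random.default_rng(0)
mask = (rng.random((1000, 1000)) < 0.4).astype(np.uint8)
print(f"wheat area: {wheat_area_km2(mask):.1f} km^2")

# Synthetic annual areas (illustrative only, not values from this study).
years = [2013, 2014, 2015, 2016, 2017]
areas = [100.0, 98.0, 97.0, 95.0, 94.0]
print(f"trend: {linear_trend(years, areas):.2f} km^2 per year")  # negative means decline
```

A negative slope corresponds to the "downward trend" described above; a rank-based test (e.g. Mann-Kendall) would be an alternative if a significance level is needed.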

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhou, K.; Zhang, Z.; Liu, L.; Miao, R.; Yang, Y.; Ren, T.; Yue, M. Research on SUnet Winter Wheat Identification Method Based on GF-2. Remote Sens. 2023, 15, 3094.

- Li, S.; Li, F.; Gao, M.; Li, Z.; Leng, P.; Duan, S.; Ren, J. A new method for winter wheat mapping based on spectral reconstruction technology. Remote Sens. 2021, 13, 1810.

- Announcement of the National Statistics Bureau on Grain Output in 2019. Available online: https://www.gov.cn/xinwen/2019-12/07/content_5459250.htm (accessed on 7 December 2019).

- Announcement of the National Statistics Bureau on Grain Output in 2020. Available online: http://www.gov.cn/xinwen/2020-12/10/content_5568623.htm (accessed on 10 December 2020).

- Announcement of the National Statistics Bureau on Grain Output in 2021. Available online: http://www.gov.cn/xinwen/2021-12/06/content_5656247.htm (accessed on 6 December 2021).

- Announcement of the National Statistics Bureau on Grain Output in 2022. Available online: http://www.gov.cn/xinwen/2022-12/12/content_5731454.htm (accessed on 12 December 2022).

- Wang, L.; Zheng, Y.; Duan, L.; Wang, M.; Wang, H.; Li, H.; Li, R.; Zhang, H. Artificial selection trend of wheat varieties released in Huang-Huai-Hai region in China evaluated using DUS testing characteristics. Front. Plant Sci. 2022, 13, 898102.

- Calzadilla, A.; Rehdanz, K.; Betts, R.; Falloon, P.; Wiltshire, A.; Tol, R.S.J. Climate change impacts on global agriculture. Clim. Change 2013, 120, 357–374.

- Fróna, D.; Szenderák, J.; Harangi-Rákos, M. Economic effects of climate change on global agricultural production. Nat. Conserv. 2021, 44, 117–139.

- Samiullah, M.A.K.; Rahman, A.-U.; Mahmood, S. Evaluation of urban encroachment on farmland. Erdkunde 2019, 73, 127–142. Available online: https://www.jstor.org/stable/26663996 (accessed on 27 May 2019).

- Li, K.; Yang, X.; Liu, Z.; Zhang, T.; Lu, S.; Liu, Y. Low yield gap of winter wheat in the North China Plain. Eur. J. Agron. 2014, 59, 1–12.

- Mo, X.-G.; Hu, S.; Lin, Z.-H.; Liu, S.-X.; Xia, J. Impacts of climate change on agricultural water resources and adaptation on the North China Plain. Adv. Clim. Change Res. 2017, 8, 93–98.

- Gleeson, T.; Wada, Y.; Bierkens, M.F.P.; van Beek, L.P.H. Water balance of global aquifers revealed by groundwater footprint. Nature 2012, 488, 197.

- de Graaf, I.E.M.; van Beek, L.P.H.; Wada, Y.; Bierkens, M.F.P. Dynamic attribution of global water demand to surface water and groundwater resources: Effects of abstractions and return flows on river discharges. Adv. Water Resour. 2014, 64, 21–33.

- Grogan, D.S.; Zhang, F.; Prusevich, A.; Lammers, R.B.; Wisser, D.; Glidden, S.; Li, C.; Frolking, S. Quantifying the link between crop production and mined groundwater irrigation in China. Sci. Total Environ. 2015, 511, 161–175.

- Sun, H.; Shen, Y.; Yu, Q.; Flerchinger, G.N.; Zhang, Y.; Liu, C.; Zhang, X. Effect of precipitation change on water balance and WUE of the winter wheat–summer maize rotation in the North China Plain. Agric. Water Manag. 2010, 97, 1139–1145.

- Wu, M.; Yang, L.; Yu, B.; Wang, Y.; Zhao, X.; Niu, Z.; Wang, C. Mapping crops acreages based on remote sensing and sampling investigation by multivariate probability proportional to size. Trans. Chin. Soc. Agric. Eng. 2014, 30, 146–152.

- Ma, L.; Gu, X.; Xu, X.; Huang, W.; Jia, J. Remote sensing measurement of corn planting area based on field-data. Trans. Chin. Soc. Agric. Eng. 2009, 25, 147–151.

- Kang, Y.; Hu, X.; Meng, Q.; Zou, Y.; Zhang, L.; Liu, M.; Zhao, M. Land cover and crop classification based on red edge indices features of GF-6 WFV time series data. Remote Sens. 2021, 13, 4522.

- Zou, J.; Huang, Y.; Chen, L.; Chen, S. Remote Sensing-Based Extraction and Analysis of Temporal and Spatial Variations of Winter Wheat Planting Areas in the Henan Province of China. Open Life Sci. 2018, 13, 533–543.

- Wang, X.; Li, X.; Tan, M.; Xin, L. Remote sensing monitoring of changes in winter wheat area in North China Plain from 2001 to 2011. Trans. Chin. Soc. Agric. Eng. 2015, 31, 190–199.

- Bai, Y.; Zhao, Y.; Shao, Y.; Zhang, X.; Yuan, X. Deep learning in different remote sensing image categories and applications: Status and prospects. Int. J. Remote Sens. 2022, 43, 1800–1847.

- Chu, L.; Liu, Q.; Huang, C.; Liu, G. Monitoring of winter wheat distribution and phenological phases based on MODIS time-series: A case study in the Yellow River Delta, China. J. Integr. Agric. 2016, 15, 2403–2416.

- Teluguntla, P.; Thenkabail, P.S.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Oliphant, A.; Poehnelt, J.; Yadav, K.; Rao, M.; Massey, R. Spectral matching techniques (SMTs) and automated cropland classification algorithms (ACCAs) for mapping croplands of Australia using MODIS 250-m time-series (2000–2015) data. Int. J. Digit. Earth 2017, 10, 944–977.

- Xiao, X.; Boles, S.; Frolking, S.; Li, C.; Moore, B. Mapping paddy rice agriculture in South and Southeast Asia using multi-temporal MODIS images. Remote Sens. Environ. 2006, 100, 95–113.

- Xu, Q.; Yang, G.; Long, H.; Wang, C.; Li, X.; Huang, D. Crop information identification based on MODIS NDVI time-series data. Trans. Chin. Soc. Agric. Eng. 2014, 30, 134–144.

- Wei, P.; Chai, D.; Lin, T.; Tang, C.; Du, M.; Huang, J. Large-scale rice mapping under different years based on time-series Sentinel-1 images using deep semantic segmentation model. ISPRS J. Photogramm. Remote Sens. 2021, 174, 198–214.

- Van Tricht, K.; Gobin, A.; Gilliams, S.; Piccard, I. Synergistic use of radar Sentinel-1 and optical Sentinel-2 imagery for crop mapping: A case study for Belgium. Remote Sens. 2018, 10, 1642.

- Yao, J.; Wu, J.; Xiao, C.; Zhang, Z.; Li, J. The classification method study of crops remote sensing with deep learning, machine learning, and Google Earth engine. Remote Sens. 2022, 14, 2758.

- You, J.; Pei, Z.; Wang, F.; Wu, Q.; Guo, L. Area extraction of winter wheat at county scale based on modified multivariate texture and GF-1 satellite images. Trans. Chin. Soc. Agric. Eng. 2016, 32, 131–139.

- Ma, S.; Yi, X.; You, J.; Guo, L.; Lou, J. Winter wheat cultivated area estimation and implementation evaluation of grain direct subsidy policy based on GF-1 imagery. Trans. Chin. Soc. Agric. Eng. 2016, 32, 169–174.

- Zhang, C.; Gao, S.; Yang, X.; Li, F.; Yue, M.; Han, Y.; Zhao, H.; Zhang, Y.; Fan, K. Convolutional neural network-based remote sensing images segmentation method for extracting winter wheat spatial distribution. Appl. Sci. 2018, 8, 1981.

- Conrad, C.; Fritsch, S.; Zeidler, J.; Rücker, G.; Dech, S. Per-Field Irrigated Crop Classification in Arid Central Asia Using SPOT and ASTER Data. Remote Sens. 2010, 2, 1035–1056.

- Esch, T.; Metz, A.; Marconcini, M.; Keil, M. Combined use of multi-seasonal high and medium resolution satellite imagery for parcel-related mapping of cropland and grassland. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 230–237.

- Liu, J.; Huffman, T.; Shang, J.; Qian, B.; Dong, T.; Zhang, Y. Identifying major crop types in Eastern Canada using a fuzzy decision tree classifier and phenological indicators derived from time series MODIS data. Can. J. Remote Sens. 2016, 42, 259–273.

- Chen, S.; Zhao, Y.; Shen, S. Crop classification by remote sensing based on spectral analysis. Trans. Chin. Soc. Agric. Eng. 2012, 28, 154–160.

- Li, D.; Zhang, L.; Xia, G. Automatic Analysis and Mining of Remote Sensing Big Data. Acta Geod. Cartogr. Sin. 2014, 43, 1211–1216.

- Mammone, A.; Turchi, M.; Cristianini, N. Support vector machines. Wiley Interdiscip. Rev. Comput. Stat. 2009, 1, 283–289.

- Sisodia, P.S.; Tiwari, V.; Kumar, A. Analysis of supervised maximum likelihood classification for remote sensing image. In Proceedings of the International Conference on Recent Advances and Innovations in Engineering (ICRAIE-2014), Jaipur, India, 9–11 May 2014; pp. 1–4.

- Tatsumi, K.; Yamashiki, Y.; Torres, M.A.C.; Taipe, C.L.R. Crop classification of upland fields using Random forest of time-series Landsat 7 ETM+ data. Comput. Electron. Agric. 2015, 115, 171–179.

- Kotsiantis, S.B. Decision trees: A recent overview. Artif. Intell. Rev. 2013, 39, 261–283.

- Zheng, B.; Myint, S.W.; Thenkabail, P.S.; Aggarwal, R.M. A support vector machine to identify irrigated crop types using time-series Landsat NDVI data. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 103–112.

- Wang, Z.; Zhao, Z.; Yin, C. Fine crop classification based on UAV hyperspectral images and random forest. ISPRS Int. J. Geo-Inf. 2022, 11, 252.

- Sang, X.; Guo, Q.; Wu, X.; Fu, Y.; Xie, T.; He, C.; Zang, J. Intensity and stationarity analysis of land use change based on CART algorithm. Sci. Rep. 2019, 9, 12279.

- Gao, Q.; Lim, S.; Jia, X. Hyperspectral image classification using convolutional neural networks and multiple feature learning. Remote Sens. 2018, 10, 299.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional neural networks for large-scale remote-sensing image classification. IEEE Trans. Geosci. Remote Sens. 2016, 55, 645–657.

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep Learning-Based Classification of Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- Geng, J.; Fan, J.; Wang, H.; Ma, X.; Li, B.; Chen, F. High-Resolution SAR Image Classification via Deep Convolutional Autoencoders. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2351–2355.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Xu, Y.; Xie, Z.; Feng, Y.; Chen, Z. Road extraction from high-resolution remote sensing imagery using deep learning. Remote Sens. 2018, 10, 1461.

- Zeng, Y.; Guo, Y.; Li, J. Recognition and extraction of high-resolution satellite remote sensing image buildings based on deep learning. Neural Comput. Appl. 2022, 34, 2691–2706.

- Dong, Z.; Wang, G.; Amankwah, S.O.Y.; Wei, X.; Feng, A. Monitoring the summer flooding in the Poyang Lake area of China in 2020 based on Sentinel-1 data and multiple convolutional neural networks. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102400.

- Zhang, L.; Liu, Z.; Ren, T.; Liu, D.; Ma, Z.; Tong, L.; Zhang, C.; Zhou, T.; Zhang, X.; Li, S. Identification of seed maize fields with high spatial resolution and multiple spectral remote sensing using random forest classifier. Remote Sens. 2020, 12, 362.

- Li, Y.; Hao, Z.; Lei, H. Survey of convolutional neural network. J. Comput. Appl. 2016, 36, 2508–2515.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Bhosle, K.; Musande, V. Evaluation of deep learning CNN model for land use land cover classification and crop identification using hyperspectral remote sensing images. J. Indian Soc. Remote Sens. 2019, 47, 1949–1958.

- Xie, B.; Zhang, H.K.; Xue, J. Deep convolutional neural network for mapping smallholder agriculture using high spatial resolution satellite image. Sensors 2019, 19, 2398.

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Houlsby, N. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Feng, D.; Zhang, Z.; Yan, K. A semantic segmentation method for remote sensing images based on the Swin transformer fusion Gabor filter. IEEE Access 2022, 10, 77432–77451.

- Mo, X.; Liu, S.; Lin, Z.; Guo, R. Regional crop yield, water consumption and water use efficiency and their responses to climate change in the North China Plain. Agric. Ecosyst. Environ. 2009, 134, 67–78.

- Meng, J.; Wu, B.; Zhang, M. Estimating regional winter wheat leaf N concentration with MERIS by integrating a field observation-based model and histogram matching. Trans. ASABE 2013, 56, 1589–1598.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Huang, F.; Xia, X.; Huang, Y.; Lv, S.; Chen, Q.; Pan, Y.; Zhu, X. Comparison of winter wheat extraction methods based on different time series of vegetation indices in the Northeastern margin of the Qinghai–Tibet Plateau: A case study of Minhe, China. Remote Sens. 2022, 14, 343.

- Mo, Y.; Zhong, R.; Cao, S. Orbita hyperspectral satellite image for land cover classification using random forest classifier. J. Appl. Remote Sens. 2021, 15, 014519.

- Guo, Q.; Zhang, J.; Guo, S.; Ye, Z.; Deng, H.; Hou, X.; Zhang, H. Urban tree classification based on object-oriented approach and random forest algorithm using unmanned aerial vehicle (UAV) multispectral imagery. Remote Sens. 2022, 14, 3885.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Chen, Y.; Yi, H.; Liao, C.; Huang, P.; Chen, Q. Visual measurement of milling surface roughness based on Xception model with convolutional neural network. Measurement 2021, 186, 110217.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Wang, C.; Zhang, H.; Wu, X.; Yang, W.; Shen, Y.; Lu, B.; Wang, J. AUTS: A Novel Approach to Mapping Winter Wheat by Automatically Updating Training Samples Based on NDVI Time Series. Agriculture 2022, 12, 817.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

- Wu, D.; Yin, X.; Jiang, B.; Jiang, M.; Li, Z.; Song, H. Detection of the respiratory rate of standing cows by combining the Deeplab V3+ semantic segmentation model with the phase-based video magnification algorithm. Biosyst. Eng. 2020, 192, 72–89.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 7–10 December 2021; pp. 12077–12090.

- Islam, M.A.; Jia, S.; Bruce, N.D. How much position information do convolutional neural networks encode? arXiv 2020, arXiv:2001.08248.

- Wang, W.; Su, C. Automatic concrete crack segmentation model based on transformer. Autom. Constr. 2022, 139, 104275.

- Tang, X.; Tu, Z.; Wang, Y.; Liu, M.; Li, D.; Fan, X. Automatic detection of coseismic landslides using a new transformer method. Remote Sens. 2022, 14, 2884.

- Li, H.; Wang, G.; Dong, Z.; Wei, X.; Wu, M.; Song, H.; Amankwah, S.O.Y. Identifying cotton fields from remote sensing images using multiple deep learning networks. Agronomy 2021, 11, 174.

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. Int. J. Mach. Learn. Technol. 2011, 2, 37–63.

- Xu, H.; Tian, Z.; He, X.; Wang, J.; Sun, L.; Fischer, G.; Fan, D.; Zhong, H.; Wu, W.; Pope, E. Future increases in irrigation water requirement challenge the water-food nexus in the northeast farming region of China. Agric. Water Manag. 2019, 213, 594–604.

- Ni, R.; Tian, J.; Li, X.; Yin, D.; Li, J.; Gong, H.; Zhang, J.; Zhu, L.; Wu, D. An enhanced pixel-based phenological feature for accurate paddy rice mapping with Sentinel-2 imagery in Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2021, 178, 282–296.

- Lin, T.; Wang, Y.; Liu, X.; Qiu, X. A Survey of Transformers. AI Open 2021, 3, 111–132.

- Song, W.; Feng, A.; Wang, G.; Zhang, Q.; Dai, W.; Wei, X.; Hu, Y.; Amankwah, S.O.Y.; Zhou, F.; Liu, Y. Bi-Objective Crop Mapping from Sentinel-2 Images Based on Multiple Deep Learning Networks. Remote Sens. 2023, 15, 3417.

- Hao, P.; Di, L.; Zhang, C.; Guo, L. Transfer Learning for Crop classification with Cropland Data Layer data (CDL) as training samples. Sci. Total Environ. 2020, 733, 138869.

- Song, W.; Deng, X. Effects of urbanization-induced cultivated land loss on ecosystem services in the North China Plain. Energies 2015, 8, 5678–5693.

- Sun, H.-Y.; Liu, C.-M.; Zhang, X.-Y.; Shen, Y.-J.; Zhang, Y.-Q. Effects of irrigation on water balance, yield and WUE of winter wheat in the North China Plain. Agric. Water Manag. 2006, 85, 211–218.

- Xu, X.; Zhang, M.; Li, J.; Liu, Z.; Zhao, Z.; Zhang, Y.; Zhou, S.; Wang, Z. Improving water use efficiency and grain yield of winter wheat by optimizing irrigations in the North China Plain. Field Crops Res. 2018, 221, 219–227.

- Feifei, L.; Jun, T.; Xianjun, G.; Yuanwei, Y.; Yangying, Z. Analysis of Climate Change Effects on Winter Wheat Sowing Area and Yield in Northern Henan Based on GEE. J. Henan Agric. Sci. 2022, 51, 150.

- Lei, H.; Yang, D. Combining the crop coefficient of winter wheat and summer maize with a remotely sensed vegetation index for estimating evapotranspiration in the North China plain. J. Hydrol. Eng. 2014, 19, 243–251.

- Dong, J.; Liu, W.; Han, W.; Xiang, K.; Lei, T.; Yuan, W. A phenology-based method for identifying the planting fraction of winter wheat using moderate-resolution satellite data. Int. J. Remote Sens. 2020, 41, 6892–6913.

| Satellite/Sensor | WRS-2 Path-Row | Acquisition Date | Multispectral Resolution | Panchromatic Resolution |
|---|---|---|---|---|
| Landsat 8 OLI | 124-033 | 17 April 2013 | 30 m | 15 m |
| Landsat 8 OLI | 122-034 | 22 April 2014 | 30 m | 15 m |
| Landsat 8 OLI | 123-035 | 18 May 2015 | 30 m | 15 m |
| Landsat 8 OLI | 123-035 | 18 April 2016 | 30 m | 15 m |
| Landsat 8 OLI | 123-035 | 7 May 2017 | 30 m | 15 m |
| Landsat 8 OLI | 123-035 | 8 April 2018 | 30 m | 15 m |
| Landsat 8 OLI | 122-037 | 17 April 2019 | 30 m | 15 m |
| Landsat 8 OLI | 120-036 | 22 April 2019 | 30 m | 15 m |
| Landsat 8 OLI | 124-033 | 18 April 2019 | 30 m | 15 m |
| Landsat 8 OLI | 124-037 | 18 April 2019 | 30 m | 15 m |
| Landsat 8 OLI | 123-035 | 29 April 2020 | 30 m | 15 m |
| Landsat 8 OLI | 124-037 | 9 May 2021 | 30 m | 15 m |
| Landsat 9 OLI-2 | 122-034 | 20 April 2022 | 30 m | 15 m |

| Method | Training Time (s) |
|---|---|
| ResNet | 89,764 |
| HRNet | 109,321 |
| MobileNet | 35,280 |
| Xception | 48,247 |
| Swin Transformer | 146,523 |
| SegFormer | 71,048 |
| RF | 3,396 |
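To make the training times easier to compare, the short sketch below converts the reported seconds to hours and expresses each one relative to RF. It only re-expresses the numbers in the table; the dictionary and helper names are illustrative.

```python
# Training times in seconds, copied from the table above.
TRAINING_TIME_S = {
    "ResNet": 89_764,
    "HRNet": 109_321,
    "MobileNet": 35_280,
    "Xception": 48_247,
    "Swin Transformer": 146_523,
    "SegFormer": 71_048,
    "RF": 3_396,
}

def to_hours(seconds):
    """Convert seconds to hours."""
    return seconds / 3600

def relative_to(times, baseline):
    """Ratio of each training time to that of the `baseline` method."""
    base = times[baseline]
    return {name: t / base for name, t in times.items()}

ratios = relative_to(TRAINING_TIME_S, "RF")
for name, seconds in sorted(TRAINING_TIME_S.items(), key=lambda kv: kv[1]):
    print(f"{name:17s} {to_hours(seconds):6.2f} h  {ratios[name]:5.1f}x RF")
```

Read this way, SegFormer (about 19.7 h) trains faster than ResNet, HRNet and Swin Transformer, while MobileNet (9.8 h) is the fastest DL model and RF (under 1 h) is the fastest overall.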

| Method | Accuracy | Precision | mIoU | Recall | F1 |
|---|---|---|---|---|---|
| ResNet | 0.8960 | 0.7826 | 0.7592 | 0.7885 | 0.7855 |
| HRNet | 0.9005 | 0.8051 | 0.7698 | 0.7901 | 0.7975 |
| MobileNet | 0.8911 | 0.7933 | 0.7522 | 0.7671 | 0.7800 |
| Xception | 0.8693 | 0.7426 | 0.7104 | 0.7264 | 0.7344 |
| Swin Transformer | 0.8484 | 0.6260 | 0.6612 | 0.7155 | 0.6678 |
| SegFormer | 0.9252 | 0.8382 | 0.8194 | 0.8538 | 0.8459 |
| RF | 0.6732 | 0.5809 | 0.4962 | 0.4304 | 0.4945 |
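The metrics in the table above can be computed from a pixel-level confusion matrix. The sketch below assumes a binary task (winter wheat vs. background), that Precision, Recall and F1 refer to the winter wheat class, and that mIoU averages the IoU of the two classes; the study's exact averaging convention is not stated in this section, and `binary_metrics` and `f1_from_pr` are hypothetical names. As a consistency check, every F1 value in the table agrees with the harmonic mean 2PR/(P+R) of the reported Precision and Recall to within rounding.

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """Pixel-level metrics for a binary mask (1 = winter wheat, 0 = background).

    Assumption: Precision, Recall and F1 refer to the wheat class, and mIoU is
    the mean of the wheat and background IoU. Empty classes are not guarded
    against (numpy then returns nan with a RuntimeWarning).
    """
    t = np.asarray(y_true).ravel().astype(bool)
    p = np.asarray(y_pred).ravel().astype(bool)
    tp = np.sum(t & p)    # wheat predicted as wheat
    fp = np.sum(~t & p)   # background predicted as wheat
    fn = np.sum(t & ~p)   # wheat predicted as background
    tn = np.sum(~t & ~p)  # background predicted as background

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "miou": (tp / (tp + fp + fn) + tn / (tn + fp + fn)) / 2,
    }

def f1_from_pr(precision, recall):
    """F1 score as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# (Precision, Recall, F1) copied from the table above.
REPORTED = {
    "ResNet": (0.7826, 0.7885, 0.7855),
    "HRNet": (0.8051, 0.7901, 0.7975),
    "MobileNet": (0.7933, 0.7671, 0.7800),
    "Xception": (0.7426, 0.7264, 0.7344),
    "Swin Transformer": (0.6260, 0.7155, 0.6678),
    "SegFormer": (0.8382, 0.8538, 0.8459),
    "RF": (0.5809, 0.4304, 0.4945),
}

for name, (p, r, f1) in REPORTED.items():
    print(f"{name:17s} reported F1 = {f1:.4f}, 2PR/(P+R) = {f1_from_pr(p, r):.4f}")
```

Because F1 is a harmonic mean, it is pulled toward the weaker of Precision and Recall, which is why RF's low Recall (0.4304) drags its F1 below 0.5 despite a Precision of 0.5809.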

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Q.; Wang, G.; Wang, G.; Song, W.; Wei, X.; Hu, Y. Identifying Winter Wheat Using Landsat Data Based on Deep Learning Algorithms in the North China Plain. Remote Sens. 2023, 15, 5121. https://doi.org/10.3390/rs15215121

Zhang Q, Wang G, Wang G, Song W, Wei X, Hu Y. Identifying Winter Wheat Using Landsat Data Based on Deep Learning Algorithms in the North China Plain. Remote Sensing. 2023; 15(21):5121. https://doi.org/10.3390/rs15215121

Chicago/Turabian Style: Zhang, Qixia, Guofu Wang, Guojie Wang, Weicheng Song, Xikun Wei, and Yifan Hu. 2023. "Identifying Winter Wheat Using Landsat Data Based on Deep Learning Algorithms in the North China Plain" Remote Sensing 15, no. 21: 5121. https://doi.org/10.3390/rs15215121