The basic idea of this article is to integrate sparse representation denoising into non-local means filtering, in both theory and algorithm structure. In this section, the model of speckle noise in SAR images is first analyzed, followed by an introduction to sparse representation theory and related image-denoising algorithms. Finally, the motivation for applying sparse representation theory to non-local filtering in the new algorithm is explained in detail.

2.1. Noise Model Analysis

In optical images, noise usually exists in additive form. In contrast, speckle noise follows a multiplicative model under the assumption of full development. A noise-free optical image and the same image after adding multiplicative noise are shown in Figure 1. Assuming that the coherent speckle noise of the SAR image is fully developed, the observed SAR image I can be represented by the following model [17]:

$$I = x \cdot v$$

where x is the true speckle-free SAR image and v is the coherent speckle noise. The noise v obeys a Gamma distribution with mean 1 and variance 1/L, where L is the number of looks of the image. Common denoising algorithms designed for additive noise cannot be applied to SAR images directly. As a result, a logarithmic conversion of the SAR image is required before despeckling, which converts the multiplicative model into the following additive model:

$$\log I = \log x + \log v$$

A SAR image can be regarded as the superposition of valid information and speckle noise. The valid information is the part of the image that describes the observed scene, such as terrain, buildings, and maneuvering targets. In the SAR-BM3D algorithm, speckle noise appears as disordered, random high-intensity pixels in the similar block-matching step. When the speckle intensity is too high, two blocks of noisy pixels that are similar only by chance may be matched into the same similar-block group. The subsequent joint filtering then enhances these noise pixels, producing strong point diffusion and even false targets. The key to solving this problem is to enable the algorithm to distinguish whether a pixel belongs to valid information or to speckle noise. The valid information in a SAR image tends to have a certain structure and texture, while the speckle noise is disordered and random. It is because of this difference in sparsity between valid information and speckle noise that the human eye can tell them apart in the image. This difference in sparsity is the key to solving the problem of strong scatterer diffusion.
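The multiplicative model and its logarithmic conversion can be illustrated with a minimal NumPy sketch. It assumes the usual unit-mean Gamma speckle for L-look intensity data; the flat test scene, the number of looks, and the names `add_speckle` and `observed` are hypothetical and chosen only for illustration.

```python
import numpy as np

def add_speckle(x, looks, rng):
    """Multiply a clean intensity image by simulated fully developed speckle."""
    # Assumption: Gamma(shape=L, scale=1/L), which has mean 1 and variance 1/L.
    v = rng.gamma(shape=looks, scale=1.0 / looks, size=x.shape)
    return x * v, v

rng = np.random.default_rng(0)
x = np.full((256, 256), 10.0)          # hypothetical flat, speckle-free scene
observed, v = add_speckle(x, looks=4, rng=rng)

# The logarithm turns the product x * v into the sum log x + log v.
log_observed = np.log(observed)
additive_form = np.log(x) + np.log(v)

print(v.mean(), v.var())               # sample mean and variance of the speckle
```

In the log domain the noise term no longer scales with the scene intensity, which is why algorithms designed for additive noise become applicable after the conversion.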

2.2. Sparse Representation

Transform dictionaries are usually divided into general dictionaries and learned dictionaries. Common general dictionaries take a fixed form in a specific transform domain, such as the Fourier transform (FT) dictionary, the discrete cosine transform (DCT) dictionary, the wavelet transform (WT) dictionary, and the contourlet dictionary. A learned dictionary is a special dictionary generated by training on the image to be processed. Each column of the dictionary is called an atom, and each atom contains structural features of the image. Sparse representation theory assumes that a signal can be represented by a linear combination of a finite number of atoms in a predefined dictionary. The coefficients of these atoms are the combination coefficients, most of which are approximately zero. The matrix formed by the combination coefficients is the coefficient matrix, which is therefore sparse. After the sparse linear model is constructed from the training samples, the atoms carry the structural features of the valid information, so the valid information in the image can be obtained as a linear combination of a finite number of atoms weighted by the sparse coefficient matrix.

As illustrated in Figure 2, given an image X, valid information such as terrain information in X can be expressed as the product of a dictionary D and a sparse coefficient matrix α, i.e., X = Dα. The randomness and unstructured nature of the invalid information prevent it from being represented sparsely by the dictionary [18]. Therefore, when a noisy image X is reconstructed from the dictionary and the sparse coefficient matrix, the noise in X is not sparsely represented. The reconstructed image $\hat{X} = D\alpha$ contains only the valid information in the original image X, and the noise is discarded as the residual $(X - \hat{X})$. This is the basic principle by which sparse representation denoises an image.
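The principle that structured content is captured by a few atoms while noise ends up in the residual can be sketched on a one-dimensional toy signal. This is a minimal illustration, not the method of the paper: the orthonormal DCT dictionary, the additive Gaussian noise standing in for log-domain speckle, the chosen atoms, and the helper names `dct_dictionary` and `omp` are all assumptions.

```python
import numpy as np

def dct_dictionary(n):
    """Orthonormal DCT-II dictionary; column k is the k-th atom."""
    i = np.arange(n)
    D = np.cos(np.pi * (i[:, None] + 0.5) * i[None, :] / n)
    D[:, 0] /= np.sqrt(2.0)
    return D * np.sqrt(2.0 / n)

def omp(D, y, n_nonzero):
    """Greedy orthogonal matching pursuit (atoms assumed unit-norm)."""
    support, coef, residual = [], np.zeros(0), y.copy()
    for _ in range(n_nonzero):
        k = int(np.argmax(np.abs(D.T @ residual)))   # best-matching atom
        if k in support:
            break
        support.append(k)
        # Refit all selected atoms jointly by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    alpha = np.zeros(D.shape[1])
    alpha[support] = coef
    return alpha

rng = np.random.default_rng(0)
D = dct_dictionary(64)
clean = 3.0 * D[:, 2] - 2.0 * D[:, 7] + 1.5 * D[:, 11]   # structured signal
noisy = clean + 0.1 * rng.standard_normal(64)            # unstructured noise

alpha = omp(D, noisy, n_nonzero=3)
x_hat = D @ alpha              # reconstruction from three atoms
residual = noisy - x_hat       # discarded part, dominated by noise

print(np.flatnonzero(alpha))
print(np.linalg.norm(noisy - clean), np.linalg.norm(x_hat - clean))
```

The second printed pair compares the error of the noisy signal with the error of the sparse reconstruction, both measured against the clean signal.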

Conventional sparse representation filtering in a specific transform domain thresholds the coefficient matrix with a specific shrinkage method. Elements of the coefficient matrix below the threshold are set to zero, so the coefficient terms that contain noise are discarded. Denoising is thus accomplished by exploiting the sparsity of the coefficient matrix in this transform domain. In the most classical form, hard threshold shrinkage, the threshold is set manually and the elements below it are treated as zero, so that the coefficient matrix can be regarded as sparse. This filtering extracts a finite number of atoms from the orthogonal dictionary of the transform domain, and the denoised result is then reconstructed by the inverse transform. The coefficient terms containing noise information are discarded as reconstruction residuals in this process. This idea has led to the well-known wavelet shrinkage algorithm and to several customized multi-scale and directional redundant transforms, such as [19,20]. These algorithms are easy to understand and operate.
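Hard threshold shrinkage in a fixed orthogonal transform domain can be sketched as follows. The example uses a 2-D DCT rather than wavelets purely to stay within NumPy; the test patch, the noise level `sigma`, and the threshold of three times `sigma` are illustrative assumptions, not values from the paper.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k is the k-th basis function."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (i + 0.5) * k / n)
    C[0] /= np.sqrt(2.0)
    return C

def hard_threshold_denoise(patch, threshold):
    """Zero out small 2-D DCT coefficients of a square patch, then invert."""
    C = dct_matrix(patch.shape[0])
    coeffs = C @ patch @ C.T                    # forward transform
    coeffs[np.abs(coeffs) < threshold] = 0.0    # hard shrinkage
    return C.T @ coeffs @ C                     # inverse transform

rng = np.random.default_rng(0)
C = dct_matrix(16)
# Hypothetical patch that is exactly 2-sparse in the DCT domain.
clean = 5.0 * np.outer(C[1], C[2]) + 3.0 * np.outer(C[0], C[3])
sigma = 0.2
noisy = clean + sigma * rng.standard_normal(clean.shape)

denoised = hard_threshold_denoise(noisy, threshold=3.0 * sigma)
print(np.linalg.norm(noisy - clean), np.linalg.norm(denoised - clean))
```

The sketch works well here only because the test patch was built to be sparse in the chosen domain, which is exactly the dependence on the transform that limits general dictionaries.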

However, the success of such a general dictionary approach in practical denoising applications depends on whether the filtered signal is sparse in the corresponding transform domain. Therefore, this kind of method is not sufficiently general. In contrast to filtering in a specific transform domain, the sparse despeckling part of the proposed algorithm takes the form of a maximum a posteriori (MAP) estimation problem. The dictionary of the proposed method is a set of parameters learned from the image rather than a set of pre-selected basis functions such as curvelets or contourlets. The DCT dictionary is used as the initial dictionary. In each iteration, the orthogonal matching pursuit (OMP) [21] method is used to update the sparse coefficient matrix row by row, and the singular value decomposition is used to update the dictionary matrix column by column. The optimal solution that meets the constraints is learned over multiple optimizations. The constraints are usually divided into a sparsity constraint and an image error constraint: the sparsity constraint is the maximum sparsity of the coefficient matrix, and the image error constraint is the maximum error between the input image and the reconstructed image. Dictionary learning is thus an iterative process in which the dictionary is optimized column by column in each iteration, and sparse representation is the process in which the reconstructed image is formed as a linear combination of the updated dictionary and the updated sparse coefficient matrix.
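One iteration of this alternation between OMP coding and SVD-based atom updates can be sketched in the textbook K-SVD form. This is a hedged illustration rather than the authors' implementation: OMP here codes one training signal (one column of the coefficient matrix) at a time, only a fixed sparsity constraint is enforced, the overcomplete DCT-like initial dictionary is a simplified construction, and the random training data and all function names are hypothetical.

```python
import numpy as np

def omp(D, y, n_nonzero):
    """Orthogonal matching pursuit for one signal y (atoms assumed unit-norm)."""
    support, coef, residual = [], np.zeros(0), y.copy()
    for _ in range(n_nonzero):
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break
        support.append(k)
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    alpha = np.zeros(D.shape[1])
    alpha[support] = coef
    return alpha

def sparse_code(D, Y, n_nonzero):
    """Code every column of Y with at most n_nonzero atoms."""
    return np.column_stack([omp(D, y, n_nonzero) for y in Y.T])

def ksvd_step(D, Y, n_nonzero):
    """One K-SVD iteration: sparse coding, then atom-by-atom SVD update."""
    D = D.copy()
    A = sparse_code(D, Y, n_nonzero)
    for k in range(D.shape[1]):
        users = np.flatnonzero(A[k])          # signals that use atom k
        if users.size == 0:
            continue
        A[k, users] = 0.0
        E = Y[:, users] - D @ A[:, users]     # error without atom k
        U, s, Vt = np.linalg.svd(E, full_matrices=False)
        D[:, k] = U[:, 0]                     # best rank-1 fit: new atom
        A[k, users] = s[0] * Vt[0]            # matching coefficients
    return D, A

def overcomplete_dct(n, n_atoms):
    """Simplified overcomplete DCT-like dictionary with unit-norm columns."""
    i = np.arange(n)[:, None]
    k = np.arange(n_atoms)[None, :]
    D = np.cos(np.pi * (i + 0.5) * k / n_atoms)
    return D / np.linalg.norm(D, axis=0)

rng = np.random.default_rng(0)
Y = rng.standard_normal((16, 200))            # hypothetical training signals
D0 = overcomplete_dct(16, 32)

err_before = np.linalg.norm(Y - D0 @ sparse_code(D0, Y, 3))
D1, A1 = ksvd_step(D0, Y, 3)
err_after = np.linalg.norm(Y - D1 @ A1)
print(err_before, err_after)
```

For a fixed sparse support, each rank-1 update can only lower the representation error, which is the mechanism behind the gradual improvement of the dictionary over iterations.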

The effect of the maximum a posteriori estimation method depends on how well the dictionary is optimized. In this paper, the degree of sparsity is defined as the ratio of the number of non-zero elements to the total number of elements in a column of atoms in the dictionary. Figure 3 shows the dictionaries learned from real SAR images with different numbers of iterations: (a) is the dictionary learned after five iterative updates, and (b) is the dictionary learned after ten. Figure 4 shows the corresponding despeckling results: (a) is the result after five iterative updates, and (b) is the result after ten. The filtering result in Figure 4a, which corresponds to the dictionary learned from five iterations, is blurred and its point targets are merged together; the experimental data show that the sparsity degree of the sparse coefficient matrix learned from five iterations is 9/256. Compared with Figure 4a, the filtering result after ten iterations in Figure 4b is significantly more detailed, and the edges and point targets marked by red rectangles are clearer. The experimental data show that the sparsity degree of the sparse coefficient matrix learned from ten iterations is reduced to 3/256. Therefore, the quality of the learned dictionary improves gradually as the number of iterations increases. A dictionary learned with more iterations can not only express finer details of the image but also reduce the sparsity degree of the sparse coefficient matrix. As a result, Figure 3 and Figure 4 show that a dictionary optimized with a larger number of iterations has the potential to represent the image more accurately and more sparsely.
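The sparsity degree used in this comparison can be computed with a few lines of NumPy. The sketch assumes that the ratio is taken over one column of the sparse coefficient matrix for a 256-atom dictionary; the two columns below are placeholders constructed to reproduce the reported ratios, not the experimental data, and `sparsity_degree` is a hypothetical helper name.

```python
import numpy as np

def sparsity_degree(alpha, tol=1e-12):
    """Fraction of entries in a coefficient column that are non-zero."""
    alpha = np.asarray(alpha, dtype=float)
    return np.count_nonzero(np.abs(alpha) > tol) / alpha.size

# Placeholder columns: 9 and 3 active atoms out of 256.
column_five_iterations = np.zeros(256)
column_five_iterations[:9] = 1.0
column_ten_iterations = np.zeros(256)
column_ten_iterations[:3] = 1.0

print(sparsity_degree(column_five_iterations))   # 9/256
print(sparsity_degree(column_ten_iterations))    # 3/256
```

A smaller value means that fewer atoms are needed to represent a block.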

Inspired by the above conclusion that more iterative updates yield a higher-quality learned dictionary, the proposed method uses sparse representation filtering based on K-SVD to accomplish the filtering in a specific transform domain. The optimal dictionary and sparse coefficient matrix that satisfy the constraints are learned, so as to achieve the best combined effect of speckle noise suppression and image detail representation. K-SVD, which is based on overcomplete dictionary learning, is one of the most representative image-denoising algorithms based on sparse representation. SAR image filtering based on dictionary learning and sparse representation [22] adds a pre-processing step of logarithmic conversion of the image before K-SVD image despeckling. The SAR image-denoising model based on weighted sparse representation [23,24] performs similar block matching on the logarithmic image of the original SAR image and then performs K-SVD image denoising. SAR image filtering based on dictionary learning and sparse representation thus applies the idea of sparse representation filtering to SAR image despeckling and shows good results in speckle noise suppression.

2.3. Motivation

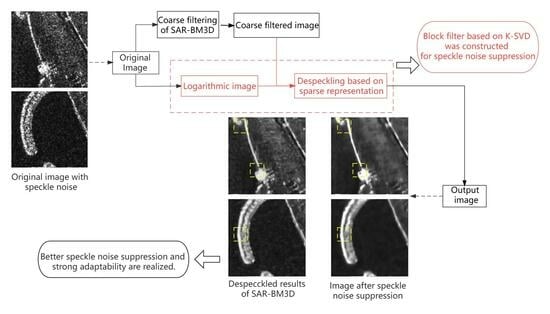

The core idea of K-SVD filtering is to exploit the fact that valid information can be sparsely represented, while noise cannot. This difference helps to solve the problem that valid information and noise in the image are difficult to distinguish by amplitude. From the perspective of algorithm structure, image denoising based on sparse representation is similar to the architecture of SAR-BM3D: both contain the steps of image block selection, filter processing, and block restoration. Therefore, the block filtering step of the fine filtering in SAR-BM3D can, in theory, be replaced by K-SVD despeckling, which leads to a new non-local SAR image despeckling algorithm based on sparse representation.

In the proposed algorithm, the filtering results are not seriously affected even if the similar block matching is disturbed by high-intensity speckle noise. Since the speckle noise of the image is still unstructured and cannot be represented sparsely by the dictionary, it is discarded when the image is reconstructed from the dictionary and the sparse coefficient matrix.

The first step of the new algorithm follows the coarse filtering step of SAR-BM3D. The second step is performed on the logarithmic image of the original image. The logarithmic image is divided into blocks of the same size to form a three-dimensional dictionary group, and the dictionary of the corresponding block is selected according to the position of the current image block in the filtering process. Then, the sparse matrix and the learned dictionary are trained using the OMP method and K-SVD. Finally, the filtered image blocks are reconstructed. The K-SVD algorithm is flexible and can be used with a variety of pursuit methods; the classical OMP pursuit method is selected in this paper. After all image blocks have been processed sequentially and iteratively, the final image is restored by weighted aggregation. Such an improvement not only inherits the strong adaptivity and good filtering effect of SAR-BM3D, but also overcomes the drawback of strong point diffusion in strongly noisy images, which demonstrates the adaptability of the algorithm. The specific method flow is described in detail in Section 3.
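The block division and weighted aggregation that frame the second step can be sketched as below. This is a structural illustration under stated assumptions only: the per-block K-SVD filter is replaced by an identity placeholder, the aggregation weights are uniform, and the block size, step, test image, and function names are hypothetical rather than taken from the paper.

```python
import numpy as np

def extract_blocks(img, size, step):
    """Return overlapping size x size blocks and their top-left corners."""
    rows = range(0, img.shape[0] - size + 1, step)
    cols = range(0, img.shape[1] - size + 1, step)
    corners = [(r, c) for r in rows for c in cols]
    blocks = np.stack([img[r:r + size, c:c + size] for r, c in corners])
    return blocks, corners

def aggregate(blocks, corners, weights, shape):
    """Weighted average of overlapping blocks back into one image."""
    size = blocks.shape[1]
    acc = np.zeros(shape)
    norm = np.zeros(shape)
    for block, (r, c), w in zip(blocks, corners, weights):
        acc[r:r + size, c:c + size] += w * block
        norm[r:r + size, c:c + size] += w
    return acc / np.maximum(norm, 1e-12)

def filter_block(block):
    """Placeholder for the per-block sparse despeckling (identity here)."""
    return block

rng = np.random.default_rng(0)
img = rng.gamma(shape=4.0, scale=2.5, size=(32, 32))   # hypothetical intensity image

log_img = np.log(img)                                   # work in the log domain
blocks, corners = extract_blocks(log_img, size=8, step=4)
filtered = np.stack([filter_block(b) for b in blocks])
weights = np.ones(len(blocks))                          # assumption: uniform weights
restored = np.exp(aggregate(filtered, corners, weights, log_img.shape))
```

With the identity placeholder the pipeline returns the input image, which is a convenient check that the division and aggregation steps are consistent before a real block filter is substituted.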