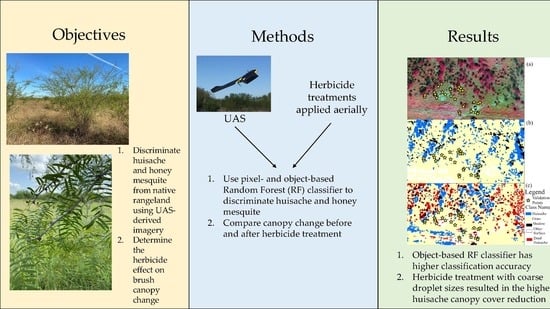

Identification of Brush Species and Herbicide Effect Assessment in Southern Texas Using an Unoccupied Aerial System (UAS)

Abstract

1. Introduction

2. Materials and Methods

2.1. Rangeland Information

2.2. Data Acquisition

2.3. Data Processing

2.4. Training Sample Collection and Classification

3. Results

3.1. Results of Site 1

3.1.1. Brush Classification

3.1.2. Herbicide Efficacy Assessment

3.2. Results of Site 2

3.2.1. Brush Classification

3.2.2. Herbicide Efficacy Assessment

4. Discussion

4.1. Classification Results Comparison

4.2. Herbicide Effect on Brush Species

4.3. Relevant Work Comparison

4.4. Limitations and Further Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Treatment Number | Replications | Treatments and Rates (kg a.i. ha⁻¹) | Herbicide Name | Area (ha) | Nozzle | Droplet Size (μm) | Application Rate (L ha⁻¹) |
|---|---|---|---|---|---|---|---|
| 1 | 1 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 7.5 Floodjet Wide-Angle Flat Spray Tip | 800 | 37.4 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 2 | 1 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 7.5 Floodjet Wide-Angle Flat Spray Tip | 400 | 37.4 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 3 | 2 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 7.5 Floodjet Wide-Angle Flat Spray Tip | 400 | 37.4 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 4 | 1 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 10 Floodjet Wide-Angle Flat Spray Tip | 800 | 74.8 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 5 | 2 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 10 Floodjet Wide-Angle Flat Spray Tip | 800 | 74.8 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 6 | 1 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 10 Floodjet Wide-Angle Flat Spray Tip | 400 | 74.8 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 7 | 2 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 10 Floodjet Wide-Angle Flat Spray Tip | 400 | 74.8 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |
| 8 | 1 | Aminopyralid + Florpyrauxifen-benzyl (0.07, 0.007) | DuraCor® | 2.02 | Flat fan nozzles with nonionic surfactant | Coarse | 28.1 |
| 9 | 1 | Aminopyralid + Florpyrauxifen-benzyl (0.07, 0.007) | DuraCor® | 2.02 | Flat fan nozzles with nonionic surfactant | Coarse | 28.1 |
| | | Aminopyralid potassium salt + Metsulfuron methyl (0.004, 0.00082) | Chaparral® | | | | |
| 10 | 1 | Aminopyralid + Florpyrauxifen-benzyl (0.07, 0.007) | DuraCor® | 4.05 | Flat fan nozzles with nonionic surfactant | Coarse | 28.1 |
| | | Aminopyralid potassium salt + Picloram potassium salt + Fluroxypyr 1-methylheptyl ester (0.03, 0.058, and 0.058) | Meza Vue® | | | | |
| 11 | 1 | Aminopyralid potassium salt + Picloram potassium salt + Fluroxypyr 1-methylheptyl ester (0.06, 0.116, and 0.116) | Meza Vue® | 2.02 | Flat fan nozzles with nonionic surfactant | Coarse | 28.1 |
| 12 | 1 | Aminopyralid + 2,4-D (0.086, 0.70) | Grazon Next HL® | 2.02 | Flat fan nozzles with nonionic surfactant | Coarse | 28.1 |
| | | Picloram (0.067) | Tordon® | | | | |
| | | Metsulfuron methyl (0.0067) | MSM 60® | | | | |
| 13 | 2 | Clopyralid + Aminopyralid (0.56, 0.12) | Sendero® | 4.05 | TK-VP 7.5 Floodjet Wide-Angle Flat Spray Tip | 800 | 37.4 |
| | | Picloram (0.56) | Tordon 22K® | | | | |
| | | Methylated seed oil organosilicone (0.058) | Dyne-amic® | | | | |

| Treatment Number | Chemical Treatments | Droplet Size (μm) | Application Rate (L ha⁻¹) |
|---|---|---|---|
| 1 | Aminocyclopyrachlor mixture | 417 | 37.4 |
| 2 | Aminocyclopyrachlor mixture | 417 | 86.8 |
| 3 | Aminocyclopyrachlor mixture | 630 | 86.8 |
| 4 | Aminocyclopyrachlor mixture | Max | 86.8 |

| Band Combination | Number of Bands | Input Layers |
|---|---|---|
| All Bands | 7 | G, R, RE, NIR, CHM, NDVI, 1st principal component of GLCM bands |
| No CHM | 6 | G, R, RE, NIR, NDVI, 1st principal component of GLCM bands |
| No NDVI | 6 | G, R, RE, NIR, CHM, 1st principal component of GLCM bands |
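
The three band combinations differ only in which derived layers are passed to the classifier. The sketch below shows one way such input stacks could be assembled with NumPy. It assumes co-registered single-band arrays for green (G), red (R), red edge (RE), and near-infrared (NIR) reflectance; a digital surface model and a digital terrain model from which the canopy height model (CHM) is taken as their difference; and a set of gray-level co-occurrence matrix (GLCM) texture bands computed beforehand. The function names, the surface-minus-terrain definition of CHM, and the choice of texture bands are illustrative assumptions, not the processing chain documented for this study.

```python
import numpy as np

# Layer order follows the "All Bands" row of the band-combination table.
LAYER_ORDER = ["G", "R", "RE", "NIR", "CHM", "NDVI", "GLCM_PC1"]
# Layers left out of each band combination.
DROPPED = {"All Bands": set(), "No CHM": {"CHM"}, "No NDVI": {"NDVI"}}


def ndvi(nir: np.ndarray, red: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """Normalized difference vegetation index: (NIR - R) / (NIR + R)."""
    nir = nir.astype(float)
    red = red.astype(float)
    return (nir - red) / (nir + red + eps)  # eps guards against 0/0 on dark pixels


def canopy_height_model(dsm: np.ndarray, dtm: np.ndarray) -> np.ndarray:
    """Assumed CHM: surface height minus bare-ground height, negatives set to 0."""
    return np.clip(dsm.astype(float) - dtm.astype(float), 0.0, None)


def first_principal_component(texture: np.ndarray) -> np.ndarray:
    """Project a (k, rows, cols) stack of texture bands onto its first principal axis."""
    k, rows, cols = texture.shape
    x = texture.reshape(k, -1).T.astype(float)  # one row per pixel, one column per band
    x -= x.mean(axis=0)                          # centre each band
    _, _, vt = np.linalg.svd(x, full_matrices=False)
    return (x @ vt[0]).reshape(rows, cols)       # the sign of a principal component is arbitrary


def build_stack(g, red, red_edge, nir, dsm, dtm, glcm_bands, combination="All Bands"):
    """Return (layer names, array of shape (n_layers, rows, cols)) for one band combination."""
    layers = {
        "G": g,
        "R": red,
        "RE": red_edge,
        "NIR": nir,
        "CHM": canopy_height_model(dsm, dtm),
        "NDVI": ndvi(nir, red),
        "GLCM_PC1": first_principal_component(glcm_bands),
    }
    names = [n for n in LAYER_ORDER if n not in DROPPED[combination]]
    return names, np.stack([np.asarray(layers[n], dtype=float) for n in names])
```

Calling `build_stack(..., combination="No CHM")` returns the six-layer stack without the height layer, so the same classifier code can be rerun unchanged for each row of the table.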

| Date | Classification | Class | All Bands, % | No CHM, % | No NDVI, % |
|---|---|---|---|---|---|
| 15 November 2019 | Object-based | Huisache | 32.46 ± 0.16 | 17.45 ± 0.14 | 26.58 ± 0.12 |
| | | Grass | 52.89 ± 0.14 | 71.39 ± 0.18 | 63.86 ± 0.06 |
| | | Shadow | 7.56 ± 0.05 | 6.71 ± 0.03 | 5.76 ± 0.01 |
| | | Other Surface | 7.09 ± 0.01 | 4.45 ± 0.01 | 3.81 ± 0.01 |
| | Pixel-based | Huisache | 24.21 ± 0.14 | 20.03 ± 0.14 | 17.85 ± 0.10 |
| | | Grass | 59.31 ± 0.13 | 65.38 ± 0.18 | 66.87 ± 0.15 |
| | | Shadow | 10.68 ± 0.01 | 8.11 ± 0.09 | 7.22 ± 0.01 |
| | | Other Surface | 5.80 ± 0.04 | 6.48 ± 0.03 | 8.06 ± 0.01 |
| 2 November 2020 | Object-based | Huisache | 23.31 ± 0.13 | 20.64 ± 0.10 | 15.97 ± 0.06 |
| | | Grass | 30.15 ± 0.18 | 56.14 ± 0.19 | 37.05 ± 0.21 |
| | | Shadow | 4.74 ± 0.07 | 6.47 ± 0.02 | 4.83 ± 0.07 |
| | | Other Surface | 5.62 ± 0.01 | 5.98 ± 0.05 | 6.22 ± 0.01 |
| | | Dead Huisache | 36.18 ± 0.16 | 10.77 ± 0.12 | 35.93 ± 0.16 |
| | Pixel-based | Huisache | 15.69 ± 0.04 | 21.66 ± 0.11 | 15.23 ± 0.04 |
| | | Grass | 33.76 ± 0.19 | 50.68 ± 0.19 | 32.36 ± 0.19 |
| | | Shadow | 4.04 ± 0.05 | 5.62 ± 0.04 | 4.21 ± 0.06 |
| | | Other Surface | 3.60 ± 0.02 | 7.02 ± 0.05 | 3.93 ± 0.02 |
| | | Dead Huisache | 42.91 ± 0.14 | 15.03 ± 0.07 | 44.27 ± 0.17 |
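
For each date, classification approach, and band combination, the class percentages above sum to about 100% (for example, 32.46 + 52.89 + 7.56 + 7.09 = 100.00 for the object-based All Bands map of 15 November 2019), which is consistent with reading canopy cover as each class's share of the classified area. A minimal sketch of that calculation from a classified label raster follows; the integer class codes and the optional no-data value are hypothetical.

```python
import numpy as np

# Hypothetical integer codes for the classes mapped at Site 1.
SITE1_CLASSES = {0: "Huisache", 1: "Grass", 2: "Shadow", 3: "Other Surface", 4: "Dead Huisache"}


def class_cover_percent(label_map, class_names, nodata=None):
    """Percentage of classified pixels assigned to each class."""
    labels = np.asarray(label_map).ravel()
    if nodata is not None:
        labels = labels[labels != nodata]  # exclude unclassified pixels from the denominator
    cover = {name: 0.0 for name in class_names.values()}  # absent classes report 0%
    if labels.size == 0:
        return cover
    codes, counts = np.unique(labels, return_counts=True)
    for code, count in zip(codes, counts):
        cover[class_names[int(code)]] = 100.0 * count / labels.size
    return cover
```

Because every classified pixel falls in exactly one class, the returned percentages sum to 100 for any map without no-data pixels, matching the column totals in the table.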

| Classification | Treatment | All Bands: Pre-Treatment, % | All Bands: 1 Yr Post-Treatment, % | All Bands: Canopy Change, % | No CHM: Pre-Treatment, % | No CHM: 1 Yr Post-Treatment, % | No CHM: Canopy Change, % | No NDVI: Pre-Treatment, % | No NDVI: 1 Yr Post-Treatment, % | No NDVI: Canopy Change, % |
|---|---|---|---|---|---|---|---|---|---|---|
| Object-based | Treatment 1 | 37.49 ± 0.02 | 17.48 ± 0.01 | −55.79 ± 0.15 | 10.2 ± 0.01 | 14.21 ± 0.01 | −37.19 ± 0.12 | 35.33 ± 0.01 | 11.95 ± 0.01 | −67.93 ± 0.09 |
| | Treatment 2 | 25.36 ± 0.02 | 27.14 ± 0.01 | 1.76 ± 0.15 | 7.78 ± 0.01 | 10.59 ± 0.01 | −37.99 ± 0.12 | 24.19 ± 0.01 | 15.09 ± 0.01 | −40.68 ± 0.09 |
| | Treatment 3 | 28.51 ± 0.02 | 18.3 ± 0.02 | −38.96 ± 0.15 | 8.69 ± 0.01 | 8.67 ± 0.01 | −49.86 ± 0.12 | 26.73 ± 0.01 | 13.25 ± 0.01 | −52.88 ± 0.09 |
| | Treatment 4 | 33.48 ± 0.02 | 17.35 ± 0.09 | −50.72 ± 0.15 | 9.75 ± 0.01 | 6.28 ± 0.01 | −70.65 ± 0.12 | 30.6 ± 0.01 | 10.81 ± 0.01 | −66.41 ± 0.09 |
| | Treatment 5 | 32.14 ± 0.02 | 22.89 ± 0.01 | −32.26 ± 0.15 | 10.08 ± 0.01 | 11.06 ± 0.01 | −46.77 ± 0.12 | 28.69 ± 0.01 | 15.75 ± 0.01 | −47.81 ± 0.09 |
| | Treatment 6 | 25.96 ± 0.02 | 24.51 ± 0.08 | −10.24 ± 0.15 | 7.92 ± 0.01 | 8.57 ± 0.01 | −45.10 ± 0.12 | 23.94 ± 0.10 | 14.31 ± 0.05 | −43.16 ± 0.09 |
| | Treatment 7 | 21.44 ± 0.02 | 18.7 ± 0.07 | −17.07 ± 0.15 | 8.04 ± 0.01 | 6.5 ± 0.01 | −53.18 ± 0.12 | 20.59 ± 0.01 | 11.3 ± 0.01 | −47.81 ± 0.09 |
| | Treatment 8 | 23.06 ± 0.02 | 17.81 ± 0.10 | −26.58 ± 0.15 | 5.62 ± 0.01 | 9.96 ± 0.01 | −14.32 ± 0.12 | 15.27 ± 0.02 | 14.34 ± 0.01 | −10.75 ± 0.09 |
| | Treatment 9 | 29.92 ± 0.02 | 26.36 ± 0.05 | −15.29 ± 0.15 | 7.96 ± 0.01 | 17.01 ± 0.01 | −29.75 ± 0.12 | 25.78 ± 0.01 | 22.75 ± 0.01 | −16.06 ± 0.09 |
| | Treatment 10 | 30.97 ± 0.02 | 29.12 ± 0.04 | −10.60 ± 0.15 | 11.92 ± 0.01 | 15.48 ± 0.01 | −17.67 ± 0.12 | 28.78 ± 0.01 | 22.31 ± 0.01 | −26.29 ± 0.09 |
| | Treatment 11 | 22.08 ± 0.02 | 44.97 ± 0.01 | 93.67 ± 0.15 | 7.24 ± 0.01 | 24.4 ± 0.01 | 57.06 ± 0.12 | 20.44 ± 0.01 | 31.85 ± 0.01 | 48.17 ± 0.09 |
| | Treatment 12 | 9.53 ± 0.02 | 23.85 ± 0.10 | 137.81 ± 0.15 | 1.6 ± 0.01 | 30.26 ± 0.01 | 491.39 ± 0.12 | 9.19 ± 0.01 | 19.15 ± 0.01 | 98.09 ± 0.09 |
| | Treatment 13 | 39.53 ± 0.02 | 23.49 ± 0.05 | −43.51 ± 0.15 | 2.28 ± 0.01 | 13.16 ± 0.01 | −52.76 ± 0.12 | 38.49 ± 0.01 | 15.6 ± 0.01 | −61.47 ± 0.09 |
| | Total | 28.84 ± 0.02 | 23.31 ± 0.13 | −23.17 ± 0.15 | 17.53 ± 0.01 | 12.24 ± 0.10 | −32.91 ± 0.12 | 26.58 ± 0.01 | 15.97 ± 0.06 | −42.87 ± 0.09 |
| Pixel-based | Treatment 1 | 27.73 ± 0.12 | 13.38 ± 0.03 | −54.12 ± 0.09 | 21.96 ± 0.02 | 14.24 ± 0.03 | −40.15 ± 0.13 | 27.46 ± 0.01 | 11.83 ± 0.02 | −59.03 ± 0.07 |
| | Treatment 2 | 15.818 ± 0.12 | 15.02 ± 0.03 | −9.70 ± 0.09 | 16.59 ± 0.02 | 11.28 ± 0.03 | −35.38 ± 0.13 | 16.9 ± 0.01 | 16.17 ± 0.02 | −9.04 ± 0.07 |
| | Treatment 3 | 16.5 ± 0.12 | 12.25 ± 0.03 | −29.47 ± 0.09 | 16.72 ± 0.02 | 9.95 ± 0.03 | −43.50 ± 0.13 | 16.72 ± 0.01 | 11.88 ± 0.02 | −32.43 ± 0.07 |
| | Treatment 4 | 20.72 ± 0.12 | 9.94 ± 0.03 | −54.81 ± 0.09 | 20.2 ± 0.02 | 7.26 ± 0.03 | −65.88 ± 0.13 | 19.73 ± 0.01 | 9.81 ± 0.02 | −52.73 ± 0.07 |
| | Treatment 5 | 20.54 ± 0.12 | 15.09 ± 0.03 | −30.17 ± 0.09 | 20.47 ± 0.02 | 12.48 ± 0.03 | −42.10 ± 0.13 | 18.95 ± 0.01 | 14.53 ± 0.02 | −27.10 ± 0.07 |
| | Treatment 6 | 15.24 ± 0.12 | 13.16 ± 0.03 | −17.86 ± 0.09 | 15.58 ± 0.02 | 9.94 ± 0.03 | −39.36 ± 0.13 | 14.77 ± 0.01 | 13.09 ± 0.02 | −15.73 ± 0.07 |
| | Treatment 7 | 13.38 ± 0.12 | 9.48 ± 0.03 | −32.61 ± 0.09 | 14.07 ± 0.02 | 7.69 ± 0.03 | −48.04 ± 0.13 | 11.68 ± 0.01 | 9.13 ± 0.02 | −25.67 ± 0.07 |
| | Treatment 8 | 12.13 ± 0.12 | 13.78 ± 0.03 | −8.06 ± 0.09 | 12.89 ± 0.02 | 10.76 ± 0.03 | −20.62 ± 0.13 | 8.8 ± 0.01 | 14.13 ± 0.02 | 52.75 ± 0.07 |
| | Treatment 9 | 24.92 ± 0.12 | 21.89 ± 0.03 | −16.45 ± 0.09 | 24.31 ± 0.02 | 17.89 ± 0.03 | −30.04 ± 0.13 | 20.08 ± 0.01 | 21.6 ± 0.02 | −30.08 ± 0.07 |
| | Treatment 10 | 18.54 ± 0.12 | 21.43 ± 0.03 | 9.89 ± 0.09 | 19.46 ± 0.02 | 16.79 ± 0.03 | −17.96 ± 0.13 | 16.07 ± 0.01 | 21.07 ± 0.02 | −17.99 ± 0.07 |
| | Treatment 11 | 15.02 ± 0.12 | 29.88 ± 0.03 | 89.18 ± 0.09 | 15.95 ± 0.02 | 23.881 ± 0.03 | 41.90 ± 0.13 | 12.62 ± 0.01 | 27.79 ± 0.02 | 109.46 ± 0.07 |
| | Treatment 12 | 6.28 ± 0.12 | 23.34 ± 0.03 | 253.43 ± 0.09 | 5.51 ± 0.02 | 24.69 ± 0.03 | 326.12 ± 0.13 | 6.65 ± 0.01 | 18.18 ± 0.02 | 159.81 ± 0.07 |
| | Treatment 13 | 29.98 ± 0.12 | 16.97 ± 0.03 | −46.16 ± 0.09 | 27.59 ± 0.02 | 14.11 ± 0.03 | −51.39 ± 0.13 | 30.05 ± 0.01 | 17.69 ± 0.02 | −44.01 ± 0.07 |
| | Total | 18.88 ± 0.13 | 15.69 ± 0.03 | −21.00 ± 0.09 | 18.38 ± 0.02 | 13.04 ± 0.03 | −32.53 ± 0.13 | 17.85 ± 0.10 | 15.23 ± 0.02 | −18.89 ± 0.07 |

| Date | Classification | Class | All Bands: Canopy Cover, % | No CHM: Canopy Cover, % | No NDVI: Canopy Cover, % |
|---|---|---|---|---|---|
| 26 June 2017 | Object-based | Huisache | 15.78 ± 0.16 | 46.13 ± 0.20 | 42.07 ± 0.43 |
| | | Mesquite | 18.30 ± 0.20 | 12.06 ± 0.20 | 11.85 ± 0.18 |
| | | Grass | 41.79 ± 0.16 | 27.37 ± 0.20 | 29.21 ± 0.13 |
| | | Shadow | 9.84 ± 0.07 | 5.91 ± 0.01 | 6.32 ± 0.03 |
| | | Dead Brush | 12.61 ± 0.13 | 7.28 ± 0.22 | 9.23 ± 0.15 |
| | | Other Surface | 1.68 ± 0.01 | 1.24 ± 0.11 | 1.31 ± 0.01 |
| | Pixel-based | Huisache | 16.17 ± 0.17 | 18.47 ± 0.18 | 21.50 ± 0.18 |
| | | Mesquite | 18.39 ± 0.12 | 14.95 ± 0.19 | 14.95 ± 0.21 |
| | | Grass | 39.77 ± 0.12 | 42.34 ± 0.17 | 39.82 ± 0.14 |
| | | Shadow | 9.15 ± 0.06 | 9.87 ± 0.14 | 8.92 ± 0.01 |
| | | Dead Brush | 14.90 ± 0.11 | 12.49 ± 0.16 | 13.22 ± 0.16 |
| | | Other Surface | 1.62 ± 0.17 | 1.88 ± 0.01 | 1.58 ± 0.06 |
| 26 July 2021 | Object-based | Huisache | 29.21 ± 0.14 | 35.45 ± 0.13 | 25.31 ± 0.2 |
| | | Mesquite | 20.04 ± 0.21 | 20.84 ± 0.22 | 19.37 ± 0.15 |
| | | Grass | 36.91 ± 0.11 | 28.18 ± 0.15 | 39.28 ± 0.06 |
| | | Shadow | 4.04 ± 0.01 | 4.37 ± 0.14 | 4.77 ± 0.01 |
| | | Dead Brush | 7.35 ± 0.01 | 9.68 ± 0.10 | 8.50 ± 0.01 |
| | | Other Surface | 2.45 ± 0.01 | 1.47 ± 0.10 | 2.76 ± 0.01 |
| | Pixel-based | Huisache | 12.27 ± 0.12 | 19.73 ± 0.18 | 13.78 ± 0.18 |
| | | Mesquite | 26.62 ± 0.22 | 29.19 ± 0.20 | 27.29 ± 0.19 |
| | | Grass | 41.12 ± 0.12 | 30.58 ± 0.20 | 40.07 ± 0.07 |
| | | Shadow | 6.15 ± 0.01 | 6.79 ± 0.09 | 5.37 ± 0.01 |
| | | Dead Brush | 10.64 ± 0.01 | 11.81 ± 0.15 | 10.70 ± 0.07 |
| | | Other Surface | 3.20 ± 0.03 | 1.89 ± 0.04 | 2.79 ± 0.01 |
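
The object-based and pixel-based results differ in the unit being labeled: a pixel-based classifier assigns a class to each pixel from its own layer values, whereas an object-based workflow first groups pixels into segments and assigns one class per segment. The sketch below illustrates only the object-level feature step, assuming a segmentation raster of non-negative integer segment IDs already exists; the segmentation algorithm, the classifier, and these function names are assumptions, not the procedure used in this study.

```python
import numpy as np


def segment_mean_features(stack: np.ndarray, segments: np.ndarray) -> np.ndarray:
    """Mean of each layer within each segment.

    stack: (n_layers, rows, cols) feature array.
    segments: (rows, cols) array of non-negative integer segment IDs.
    Returns an (n_segments, n_layers) array, one feature row per segment.
    """
    seg = segments.ravel()
    n_seg = int(seg.max()) + 1
    counts = np.bincount(seg, minlength=n_seg).astype(float)
    means = np.empty((n_seg, stack.shape[0]))
    for b in range(stack.shape[0]):
        sums = np.bincount(seg, weights=stack[b].ravel(), minlength=n_seg)
        means[:, b] = sums / np.maximum(counts, 1.0)  # unused IDs get 0 rather than NaN
    return means


def objects_to_pixels(object_labels, segments: np.ndarray) -> np.ndarray:
    """Write one label per segment back to every pixel of that segment."""
    return np.asarray(object_labels)[segments]
```

In a complete workflow, each row of the per-segment feature array would be passed to a classifier, and its predictions mapped back with `objects_to_pixels` so that cover percentages can be counted per pixel exactly as for the pixel-based maps.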

| Band Combination, % | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| All Bands | No CHM | No NDVI | |||||||||

| Classification | Treatment | 3 Years PT | 7 Years PT | CC | 3 Years PT | 7 Years PT | CC | 3 Years PT | 7 Years PT | CC | |

| Object-based | Treatment 1 | Huisache | 6.73 ± 0.02 | 18.55 ± 0.01 | 11.82 ± 0.20 | 5.22 ± 0.01 | 28.09 ± 0.02 | 22.87 ± 0.01 | 7.52 ± 0.019 | 24.7 ± 0.09 | 17.17 ± 0.14 |

| Mesquite | 6.12 ± 0.01 | 11.64 ± 0.03 | 5.52 ± 0.02 | 7.78 ± 0.02 | 21.72 ± 0.02 | 13.93 ± 0.02 | 5.74 ± 0.02 | 15.07 ± 0.01 | 9.33 ± 0.15 | ||

| Treatment 2 | Huisache | 2.92 ± 0.02 | 12.13 ± 0.01 | 9.21 ± 0.20 | 2.14 ± 0.01 | 20.86 ± 0.02 | 18.72 ± 0.01 | 3.5 ± 0.02 | 18.38 ± 0.09 | 14.88 ± 0.14 | |

| Mesquite | 2.64 ± 0.01 | 12.65 ± 0.03 | 10 ± 0.020 | 3.37 ± 0.02 | 20.28 ± 0.02 | 16.92 ± 0.02 | 2.43 ± 0.02 | 15.42 ± 0.01 | 13.00 ± 0.02 | ||

| Treatment 3 | Huisache | 5.83 ± 0.02 | 19.04 ± 0.01 | 13.2 ± 0.02 | 4.3 ± 0.01 | 35.65 ± 0.02 | 31.35 ± 0.01 | 6.95 ± 0.01 | 27.48 ± 0.009 | 20.53 ± 0.01 | |

| Mesquite | 4.95 ± 0.01 | 23.16 ± 0.03 | 18.21 ± 0.02 | 6.75 ± 0.02 | 25.29 ± 0.02 | 18.54 ± 0.02 | 4.25 ± 0.02 | 20.09 ± 0.01 | 15.84 ± 0.02 | ||

| Treatment 4 | Huisache | 3.84 ± 0.02 | 9.59 ± 0.01 | 5.75 ± 0.02 | 3.08 ± 0.01 | 13.31 ± 0.02 | 10.24 ± 0.01 | 4.02 ± 0.02 | 10.68 ± 0.09 | 6.66 ± 0.02 | |

| Mesquite | 2.79 ± 0.01 | 53.49 ± 0.03 | 50.7 ± 0.02 | 4.07 ± 0.02 | 15.19 ± 0.02 | 11.12 ± 0.02 | 2.15 ± 0.01 | 4.07 ± 0.01 | 1.92 ± 0.02 | ||

| Total | Huisache | 5.08 ± 0.02 | 15.75 ± 0.01 | 10.68 ± 0.02 | 3.85 ± 0.01 | 26.38 ± 0.02 | 22.52 ± 0.01 | 5.82 ± 0.01 | 21.86 ± 0.01 | 16.04 ± 0.02 | |

| Mesquite | 4.4 ± 0.01 | 21.77 ± 0.03 | 17.37 ± 0.02 | 5.81 ± 0.02 | 21.46 ± 0.02 | 15.65 ± 0.02 | 3.92 ± 0.01 | 15.02 ± 0.01 | 11.1 ± 0.02 | ||

| Pixel-based | Treatment 1 | Huisache | 7.68 ± 0.02 | 18.57 ± 0.01 | 10.86 ± 0.01 | 8.45 ± 0.01 | 24.99 ± 0.02 | 16.54 ± 0.01 | 10.41 ± 0.01 | 20.65 ± 0.02 | 10.23 ± 0.02 |

| Mesquite | 5.47 ± 0.01 | 20 ± 0.03 | 14.54 ± 0.02 | 5.35 ± 0.02 | 30.5 ± 0.02 | 25.14 ± 0.009 | 4.56 ± 0.01 | 21.16 ± 0.01 | 16.6 ± 0.01 | ||

| Treatment 2 | Huisache | 3.36 ± 0.02 | 12.98 ± 0.01 | 9.62 ± 0.01 | 3.52 ± 0.01 | 21.02 ± 0.02 | 17.5 ± 0.01 | 4.49 ± 0.02 | 14.88 ± 0.009 | 10.39 ± 0.009 | |

| Mesquite | 2.18 ± 0.01 | 21.58 ± 0.03 | 19.4 ± 0.02 | 2.31 ± 0.02 | 23.08 ± 0.02 | 20.77 ± 0.009 | 1.69 ± 0.01 | 22.5 ± 0.01 | 20.82 ± 0.01 | ||

| Treatment 3 | Huisache | 6.14 ± 0.02 | 19.58 ± 0.009 | 13.45 ± 0.01 | 6.96 ± 0.01 | 32.73 ± 0.02 | 25.77 ± 0.01 | 7.77 ± 0.1 | 21.85 ± 0.02 | 14.08 ± 0.01 | |

| Mesquite | 4.01 ± 0.01 | 26.35 ± 0.03 | 22.34 ± 0.02 | 4.53 ± 0.02 | 30.67 ± 0.02 | 26.14 ± 0.009 | 3.2 ± 0.01 | 27.21 ± 0.01 | 24 ± 0.01 | ||

| Treatment 4 | Huisache | 4.7 ± 0.02 | 8.4 ± 0.009 | 3.7 ± 0.01 | 7.18 ± 0.01 | 14.21 ± 0.02 | 7.03 ± 0.01 | 8.69 ± 0.01 | 9.4 ± 0.02 | 0.44 ± 0.1 | |

| Mesquite | 1.5 ± 0.01 | 5.14 ± 0.03 | 3.64 ± 0.02 | 1.91 ± 0.02 | 15.95 ± 0.02 | 14.05 ± 0.01 | 0.72 ± 0.01 | 4.61 ± 0.01 | 3.89 ± 0.01 | ||

| Total | Huisache | 5.69 ± 0.02 | 15.95 ± 0.02 | 10.26 ± 0.01 | 6.59 ± 0.01 | 24.74 ± 0.02 | 18.16 ± 0.01 | 7.92 ± 0.01 | 17.89 ± 0.02 | 9.96 ± 0.01 | |

| Mesquite | 3.1 ± 0.01 | 20.08 ± 0.03 | 16.47 ± 0.02 | 3.83 ± 0.02 | 26.51 ± 0.02 | 22.68 ± 0.01 | 2.85 ± 0.01 | 20.83 ± 0.01 | 17.97 ± 0.01 | ||
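
In most rows of the table above, the canopy change (CC) value equals the 7-year value minus the 3-year value, i.e., an absolute change in percentage points of cover rather than a relative change (for example, object-based, All Bands, Treatment 1 huisache: 18.55 − 6.73 = 11.82). The sketch below runs a small consistency check under that reading, using the reported means for the object-based All Bands rows and ignoring the ± terms. The dictionary layout and the tolerance are illustrative choices.

```python
from typing import Dict, List, Tuple

# Reported means (object-based, All Bands) from the table above:
# (treatment, species) -> (cover 3 years after treatment, cover 7 years after, reported CC)
OBJECT_ALL_BANDS: Dict[Tuple[str, str], Tuple[float, float, float]] = {
    ("Treatment 1", "Huisache"): (6.73, 18.55, 11.82),
    ("Treatment 1", "Mesquite"): (6.12, 11.64, 5.52),
    ("Treatment 2", "Huisache"): (2.92, 12.13, 9.21),
    ("Treatment 2", "Mesquite"): (2.64, 12.65, 10.0),
    ("Treatment 3", "Huisache"): (5.83, 19.04, 13.2),
    ("Treatment 3", "Mesquite"): (4.95, 23.16, 18.21),
    ("Treatment 4", "Huisache"): (3.84, 9.59, 5.75),
    ("Treatment 4", "Mesquite"): (2.79, 53.49, 50.7),
}


def change_pp(cover_early: float, cover_late: float) -> float:
    """Absolute change in canopy cover, in percentage points (later minus earlier)."""
    return cover_late - cover_early


def inconsistent_rows(rows, tol: float = 0.05) -> List[Tuple[str, str]]:
    """Keys whose reported CC differs from the recomputed difference by more than tol."""
    return [
        key
        for key, (early, late, reported) in rows.items()
        if abs(change_pp(early, late) - reported) > tol
    ]
```

For the rows listed, the recomputed differences agree with the reported CC values to within 0.01 percentage points, so `inconsistent_rows` returns an empty list; the same check can be pointed at the other band combinations or at the pixel-based rows.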


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, X.; Clayton, M.K.; Starek, M.J.; Chang, A.; Jessup, R.W.; Foster, J.L. Identification of Brush Species and Herbicide Effect Assessment in Southern Texas Using an Unoccupied Aerial System (UAS). Remote Sens. 2023, 15, 3211. https://doi.org/10.3390/rs15133211

Shen X, Clayton MK, Starek MJ, Chang A, Jessup RW, Foster JL. Identification of Brush Species and Herbicide Effect Assessment in Southern Texas Using an Unoccupied Aerial System (UAS). Remote Sensing. 2023; 15(13):3211. https://doi.org/10.3390/rs15133211

Chicago/Turabian Style: Shen, Xiaoqing, Megan K. Clayton, Michael J. Starek, Anjin Chang, Russell W. Jessup, and Jamie L. Foster. 2023. "Identification of Brush Species and Herbicide Effect Assessment in Southern Texas Using an Unoccupied Aerial System (UAS)" Remote Sensing 15, no. 13: 3211. https://doi.org/10.3390/rs15133211