Built-Up Area Change Detection Using Multi-Task Network with Object-Level Refinement

Abstract

1. Introduction

- (1)

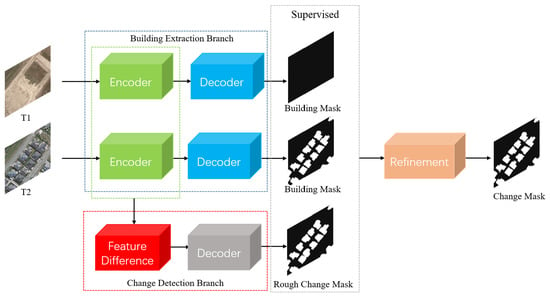

- Aiming to solve the problem of the low accuracy of the change detection methods based on deep learning in the case of a small number of samples, a new multi-task change detection method is proposed. The proposed method uses the building extraction dataset to pre-train the building extraction branch so that the feature extraction module of the network can better extract the building features. Therefore, only a small number of building transformation detection samples are required for the follow-up network training while achieving an excellent detection effect.

- (2)

- To enhance the network’s ability to extract the features of a building, a building detection branch is added to the network, and a multi-task learning strategy is used in network training so that the network has a stronger feature extraction capability.

- (3)

- For making full use of the results of the building extraction branch to improve the change detection accuracy, a object-level refinement algorithm is proposed. Combined with the results of the change detection branch and building extraction branch, the proposed method selects the building mask with change area greater than a predefined threshold as a final change detection result, which improves the accuracy and visual effect of change detection results.

2. Previous Work

3. Proposed Methods

3.1. Building Detection Branch

3.2. Change Detection Branch

3.3. Pre-Training and Multi-Task Learning

3.4. Object-Level Refinement Algorithm

- (1) If a change mask is detected in an area where a building mask has been detected, and the number of pixels in the intersection of the two masks divided by the number of pixels in the building mask is greater than the predefined threshold, then the building mask is taken as the processed change mask.

- (2) If a change mask is detected in an area where a building mask has been detected, and the number of pixels in the intersection of the two masks divided by the number of pixels in the building mask is less than the predefined threshold, then all masks in the area are set to non-change in the processed result.

- (3) If there is only a change mask and no building mask in an area, all masks in the area are set to non-change in the processed result.

- (4) If there is only a building mask and no change mask in an area, all masks in the area are set to non-change in the processed result.
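The four rules above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: it assumes the building extraction branch yields one boolean mask per building instance and the change detection branch yields a single binary mask. The function name `refine_change_mask` and the threshold value `0.5` are hypothetical, since the text does not state the threshold.

```python
import numpy as np


def refine_change_mask(change_mask, building_masks, threshold=0.5):
    """Keep whole building masks whose changed fraction exceeds `threshold`.

    change_mask: 2-D binary array from the change detection branch.
    building_masks: iterable of 2-D binary arrays, one per building
        instance (assumed output format of the building branch).
    threshold: hypothetical value; the source does not specify it.
    """
    change = np.asarray(change_mask, dtype=bool)
    refined = np.zeros_like(change)
    for building in building_masks:
        building = np.asarray(building, dtype=bool)
        area = building.sum()
        if area == 0:
            continue  # empty instance mask, nothing to evaluate
        # Intersection pixels divided by building pixels (rules 1 and 2).
        overlap = np.logical_and(change, building).sum() / area
        if overlap > threshold:
            refined |= building  # rule 1: the building mask becomes the result
    # Change pixels outside any kept building (rule 3) and buildings
    # without enough change (rules 2 and 4) stay False, i.e. non-change.
    return refined


# Toy 6x6 scene with two buildings and one stray change pixel.
building_a = np.zeros((6, 6), dtype=bool)
building_a[0:2, 0:3] = True          # 6 pixels
building_b = np.zeros((6, 6), dtype=bool)
building_b[4:6, 3:6] = True          # 6 pixels

change = np.zeros((6, 6), dtype=bool)
change[0, 0:3] = True                # 3 changed pixels inside building_a
change[1, 0] = True                  # 4th changed pixel inside building_a
change[4, 3] = True                  # 1 changed pixel inside building_b
change[3, 0] = True                  # change with no building underneath

refined = refine_change_mask(change, [building_a, building_b])
```

In this toy scene, building A has 4 of its 6 pixels marked as changed and is kept as a whole mask, while building B (1 of 6 pixels) and the isolated change pixel are discarded. The rules do not say what happens when the ratio equals the threshold exactly; the sketch treats that case as non-change.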

4. Experiments and Analysis

4.1. Dataset and Implementation Details

4.2. Results of Change Detection

4.3. Efficiency of Object-Level Refinement Algorithm

4.4. Efficiency of Pre-Training and Multi-Task Learning

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Singh, A. Review article digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

- [2] Chen, G.; Hay, G.; Carvalho, L.; Wulder, M. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

- [3] Zanetti, M.; Bruzzone, L. A theoretical framework for change detection based on a compound multiclass statistical model of the difference image. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1129–1143.

- [4] Bovolo, F.; Bruzzone, L. An adaptive multiscale random field technique for unsupervised change detection in vhr multitemporal images. Geosci. Remote Sens. Symp. 2009, 4, 777–780.

- [5] Torres-Vera, M.A.; Prol-Ledesma, R.M.; García-López, D. Three decades of land use variations in mexico city. Int. Remote Sens. 2009, 30, 117–138.

- [6] Jensen, J.R.; Toll, D.L. Detecting residential land-use development at the urban fringe. Photogramm. Eng. Remote Sens. 1982, 48, 629–643.

- [7] Deng, J.; Wang, K.; Deng, Y.; Qi, G. Pca-based land-use change detection and analysis using multitemporal and multisensor satellite data. Int. J. Remote Sens. 2008, 29, 4823–4838.

- [8] Celik, T. Unsupervised change detection in satellite images using principal component analysis and k-means clustering. IEEE Geosci. Remote. Lett. 2009, 6, 772–776.

- [9] Ortizrivera, V.; Vélezreyes, M.; Roysam, B. Change detection in hyperspectral imagery using temporal principal components. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XII; SPIE: Bellingham, WA, USA, 2006; Volume 6233, pp. 368–377.

- [10] Munyati, C. Use of principal component analysis (pca) of remote sensing images in wetland change detection on the kafue flats, zambia. Geocarto Int. 2004, 19, 11–22.

- [11] Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote Sens. 2006, 45, 218–236.

- [12] Johnson, R.D.; Kasischke, E. Change vector analysis: A technique for the multispectral monitoring of land cover and condition. Int. Remote Sens. 1998, 19, 411–426.

- [13] Malila, W.A. Change vector analysis: An approach for detecting forest changes with landsat. LARS Symp. 1980, 385, 326–335.

- [14] Erener, A.; Düzgün, H.S. A methodology for land use change detection of high resolution pan images based on texture analysis. Ital. J. Remote Sens. 2009, 41, 47–59.

- [15] Tomowski, D.; Ehlers, M.; Klonus, S. Colour and texture based change detection for urban disaster analysis. In Proceedings of the 2011 Joint Urban Remote Sensing Event, Munich, Germany, 11–13 April 2011; pp. 329–332.

- [16] Lei, T.; Zhang, Q.; Xue, D.; Chen, T.; Meng, H.; Nandi, A.K. End-to-end change detection using a symmetric fully convolutional network for landslide mapping. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3027–3031.

- [17] Zhang, H.; Tang, X.; Han, X.; Ma, J.; Zhang, X.; Jiao, L. High-Resolution Remote Sensing Images Change Detection with Siamese Holistically-Guided FCN. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4340–4343.

- [18] Peng, D.; Zhang, Y.; Guan, H. End-to-end change detection for high resolution satellite images using improved unet++. Remote Sens. 2019, 11, 1382.

- [19] Huang, F.; Xu, C.; Zhu, Z. Change detection of hyperspectral remote sensing images based on deep belief network. Int. J. Earth Sci. Eng. 2016, 9, 2096–2105.

- [20] Samadi, F.; Akbarizadeh, G.; Kaabi, H. Change detection in sar images using deep belief network: A new training approach based on morphological images. IET Image Process. 2019, 13, 2255–2264.

- [21] Argyridis, A.; Argialas, D.P. Building change detection through multi-scale geobia approach by integrating deep belief networks with fuzzy ontologies. Int. J. Image Data Fusion 2016, 7, 148–171.

- [22] Gao, F.; Dong, J.; Li, B.; Xu, Q. Automatic change detection in synthetic aperture radar images based on pcanet. IEEE Geosci. Remote Lett. 2016, 13, 1792–1796.

- [23] Wan, Y.; Liu, Y.; Peng, Q.; Jie, F.; Ming, D. Remote sensing image change detection algorithm based on bm3d and pcanet. In Chinese Conference on Image and Graphics Technologies; Springer: Singapore, 2019; Volume 1043, pp. 524–531.

- [24] Li, M.; Li, M.; Zhang, P.; Wu, Y.; Song, W.; An, L. Sar image change detection using pcanet guided by saliency detection. IEEE Geosci. Remote Sens. Lett. 2018, 16, 402–406.

- [25] Ji, S.; Shen, Y.; Lu, M.; Zhang, Y. Building instance change detection from large-scale aerial images using convolutional neural networks and simulated samples. Remote Sens. 2019, 11, 1343.

- [26] Maiya, S.R.; Babu, S.C. Slum segmentation and change detection: A deep learning approach. arXiv 2018, arXiv:1811.07896.

- [27] Cao, Z.; Wu, M.; Yan, R.; Zhang, F.; Wan, X. Detection of small changed regions in remote sensing imagery using convolutional neural network. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2020; Volume 502, pp. 12–17.

- [28] Liu, Y.; Pang, C.; Zhan, Z.; Zhang, X.; Yang, X. Building change detection for remote sensing images using a dual-task constrained deep siamese convolutional network model. IEEE Geosci. Remote Sens. Lett. 2020, 18, 811–815.

- [29] Sun, Y.; Zhang, X.; Huang, J.; Wang, H.; Xin, Q. Fine-Grained Building Change Detection From Very High-Spatial-Resolution Remote Sensing Images Based on Deep Multitask Learning. IEEE Geosci. Remote Sens. Lett. 2020, 58, 8000605.

- [30] Zhang, M.; Shi, W. A feature difference convolutional neural network-based change detection method. IEEE Trans. Geosci. Remote 2020, 58, 7232–7246.

- [31] Chen, H.; Li, W.; Shi, Z. Adversarial instance augmentation for building change detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5603216.

- [32] Zheng, Z.; Ma, A.; Zhang, L.; Zhong, Y. Change is everywhere: Single-temporal supervised object change detection in remote sensing imagery. CVF Int. Conf. Comput. Vis. 2021, 15, 15193–15202.

- [33] Yang, M.; Jiao, L.; Liu, F.; Hou, B.; Yang, S. Transferred Deep Learning-Based Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6960–6973.

- [34] Liu, J.; Chen, K.; Xu, G.; Sun, X.; Han, H. Convolutional Neural Network-Based Transfer Learning for Optical Aerial Images Change Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 127–131.

- [35] Saha, S.; Bovolo, F.; Bruzzone, L. Unsupervised deep change vector analysis for multiple-change detection in vhr images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3677–3693.

- [36] Wu, C.; Do, C.H.B.; Zhang, L. Unsupervised change detection in multi-temporal vhr images based on deep kernel pca convolutional mapping network. arXiv 2019, arXiv:1912.08628.

- [37] Saha, S.; Mou, L.; Qiu, C.; Zhu, X.X.; Bruzzone, L. Unsupervised deep joint segmentation of multitemporal high-resolution images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8780–8792.

- [38] D’Addabbo, A.; Pasquariello, G.; Amodio, A. Urban change detection from vhr images via deep-features exploitation. In Proceedings of Sixth International Congress on Information and Communication Technology, London, UK, 25–26 February 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 487–500.

- [39] Chen, Q.; Wang, L.; Waslander, S.L.; Liu, X. An end-to-end shape modeling framework for vectorized building outline generation from aerial images. ISPRS J. Photogramm. Remote Sens. 2020, 170, 114–126.

- [40] Chen, Z.; Li, D.; Fan, W.; Guan, H.; Wang, C.; Li, J. Self-attention in reconstruction bias u-net for semantic segmentation of building rooftops in optical remote sensing images. Remote Sens. 2021, 13, 2524.

- [41] Ziaee, A.; Dehbozorgi, R.; Döller, M. A novel adaptive deep network for building footprint segmentation. arXiv 2021, arXiv:2103.00286.

- [42] Wei, S.; Ji, S.; Lu, M. Toward automatic building footprint delineation from aerial images using cnn and regularization. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2178–2189.

- [43] Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- [44] Ghiasi, G.; Lin, T.Y.; Le, Q.V. Nas-fpn: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 7036–7045.

- [45] Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. CVF Conf. Comput. Pattern Recognit. 2020, 10, 781–790.

- [46] Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- [47] Bao, T.; Fu, C.; Fang, T.; Huo, H. Ppcnet: A combined patch-level and pixel-level end-to-end deep network for high-resolution remote sensing image change detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1797–1801.

- [48] Zhang, L.; Hu, X.; Zhang, M.; Shu, Z.; Zhou, H. Object-level change detection with a dual correlation attention-guided detector. ISPRS J. Photogramm. Remote Sens. 2021, 177, 147–160.

- [49] Lian, X.; Yuan, W.; Guo, Z.; Cai, Z.; Song, X.; Shibasaki, R. End-to-end building change detection model in aerial imagery and digital surface model based on neural networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1239–1246.

- [50] Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic feature pyramid networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6399–6408.

- [51] Zhang, Y.; Yang, Q. An overview of multi-task learning. Natl. Rev. 2018, 5, 30–43.

- [52] Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- [53] Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

- [54] Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

- [55] Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Zhu, J.; Liu, Y.; Li, H. Dasnet: Dual attentive fully convolutional siamese networks for change detection in high-resolution satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1194–1206.

- [56] Lin, M.; Shi, Q.; Marinoni, A.; He, D.; Zhang, L. Super-resolution-based Change Detection Network with Stacked Attention Module for Images with Different Resolutions. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4403718.

| Method | Year | Precision | Recall | F1 Value |
|---|---|---|---|---|
| Ji et al., 2019a [25] (25% samples used for training) | 2019 | 0.9520 | 0.8040 | 0.8745 |
| Ji et al., 2019a [25] (50% samples used for training) | 2019 | 0.9310 | 0.8920 | 0.9111 |
| IFN [53] (50% samples used for training) | 2020 | 0.9026 | 0.8054 | 0.8512 |
| STANet [54] (50% samples used for training) | 2020 | 0.7429 | 0.8935 | 0.8121 |
| Our method (10% samples used for training) | 2022 | 0.9220 | 0.8675 | 0.8939 |
| Our method (25% samples used for training) | 2022 | 0.9173 | 0.8906 | 0.9037 |
| Our method (50% samples used for training) | 2022 | 0.9512 | 0.8930 | 0.9212 |
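As a reading aid for the F1 Value column, the sketch below recomputes F1 from the precision and recall of the "Our method" rows in the table above. It assumes the standard definition of F1 as the harmonic mean of precision and recall; the section does not state which definition the authors used, and the helper name `f1_score` is introduced here for illustration only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard definition, assumed)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the "Our method" rows above.
our_method = {
    "10%": (0.9220, 0.8675, 0.8939),
    "25%": (0.9173, 0.8906, 0.9037),
    "50%": (0.9512, 0.8930, 0.9212),
}

for share, (precision, recall, reported) in our_method.items():
    recomputed = f1_score(precision, recall)
    # Inputs are rounded to four decimals, so allow a small tolerance.
    print(f"{share}: recomputed={recomputed:.4f} reported={reported:.4f}")
```

Because the tabulated precision and recall are themselves rounded, the recomputed values can differ from the reported ones in the last decimal place.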

| Method | Year | Precision | Recall | F1 Value |
|---|---|---|---|---|
| DASNet [55] (80% samples used for training) | 2020 | 0.8920 | 0.9050 | 0.8980 |
| DTCDSCN [28] (80% samples used for training) | 2020 | 0.9015 | 0.8935 | 0.8975 |
| IFN (80% samples used for training) | 2020 | 0.9159 | 0.9374 | 0.9265 |
| STANet (80% samples used for training) | 2020 | 0.9007 | 0.8040 | 0.8496 |
| SRCDNet [56] (80% samples used for training) | 2021 | 0.8484 | 0.9013 | 0.8740 |
| Our method (80% samples used for training) | 2022 | 0.9457 | 0.9293 | 0.9374 |

| Training Data Size | Processed Precision | Processed Recall | Processed F1 Value | Unprocessed Precision | Unprocessed Recall | Unprocessed F1 Value |
|---|---|---|---|---|---|---|
| 10% | 0.9220 | 0.8675 | 0.8939 | 0.8770 | 0.8247 | 0.8501 |
| 25% | 0.9173 | 0.8906 | 0.9037 | 0.8927 | 0.8879 | 0.8903 |
| 50% | 0.9512 | 0.8930 | 0.9212 | 0.9420 | 0.8966 | 0.9187 |

| Sample Size | Strategy | Precision | Recall | F1 Value |
|---|---|---|---|---|
| 10% | none | 0.7627 | 0.5451 | 0.6358 |
| 10% | multi-task learning | 0.8718 | 0.7202 | 0.7888 |
| 10% | multi-task learning and pre-train | 0.8770 | 0.8247 | 0.8501 |
| 10% | multi-task learning, pre-train and object-level refinement algorithm | 0.9220 | 0.8675 | 0.8939 |
| 25% | none | 0.7350 | 0.8546 | 0.7903 |
| 25% | multi-task learning | 0.8424 | 0.8959 | 0.8588 |
| 25% | multi-task learning and pre-train | 0.8927 | 0.8879 | 0.8903 |
| 25% | multi-task learning, pre-train and object-level refinement algorithm | 0.9173 | 0.8906 | 0.9037 |
| 50% | none | 0.8908 | 0.8824 | 0.8866 |
| 50% | multi-task learning | 0.9271 | 0.9019 | 0.9143 |
| 50% | multi-task learning and pre-train | 0.9420 | 0.8966 | 0.9187 |
| 50% | multi-task learning, pre-train and object-level refinement algorithm | 0.9512 | 0.8930 | 0.9212 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, S.; Li, W.; Sun, K.; Wei, J.; Chen, Y.; Wang, X. Built-Up Area Change Detection Using Multi-Task Network with Object-Level Refinement. Remote Sens. 2022, 14, 957. https://doi.org/10.3390/rs14040957
