Individual Tree Detection in Coal Mine Afforestation Area Based on Improved Faster RCNN in UAV RGB Images

Abstract

1. Introduction

2. Data and Method

2.1. Study Area

2.2. Data

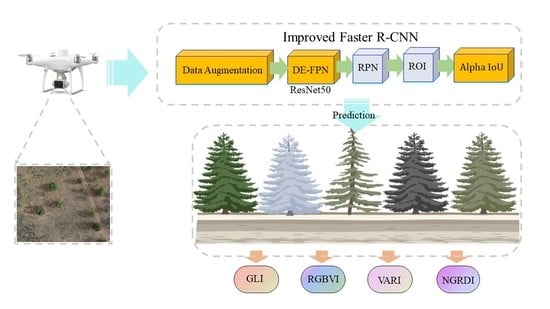

2.3. Improved Faster R-CNN Framework

2.4. Multi-Strategy Fusion Data Augmentation
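The ΔAP table in this document lists RE, RIC and DAGAN as the data augmentation components, but their definitions are not reproduced here. Assuming RE denotes random erasing (an assumption, not confirmed by the text), a minimal NumPy sketch of that transform could look like the following. The function name, parameter ranges and noise fill are illustrative choices, not the authors' settings.

```python
import numpy as np


def random_erase(image, rng, p=0.5, area_range=(0.02, 0.2),
                 aspect_range=(0.3, 3.3), max_tries=10):
    """Fill one random rectangle of an HxWxC uint8 image with uniform noise.

    Hypothetical helper. Returns a new array; the input image is left unchanged.
    Bounding-box labels are NOT adjusted, so a patch may partly hide a crown.
    """
    out = image.copy()
    if rng.random() >= p:
        return out  # augmentation skipped for this sample
    h, w = out.shape[:2]
    for _ in range(max_tries):
        # Sample the patch area (as a fraction of the image) and its aspect ratio.
        target_area = rng.uniform(*area_range) * h * w
        aspect = rng.uniform(*aspect_range)
        eh = int(round(np.sqrt(target_area * aspect)))
        ew = int(round(np.sqrt(target_area / aspect)))
        if 0 < eh < h and 0 < ew < w:
            top = int(rng.integers(0, h - eh + 1))
            left = int(rng.integers(0, w - ew + 1))
            noise = rng.integers(0, 256, size=(eh, ew) + out.shape[2:])
            out[top:top + eh, left:left + ew] = noise.astype(out.dtype)
            return out
    return out  # no valid patch found within max_tries
```

Because tree crowns are the detection targets, a detection pipeline would typically cap the erased area or re-check box visibility so that a fully hidden crown does not keep its label.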

2.5. DE-FPN Structure

2.6. Modified Generalized Function

2.7. Hyperparameters and Backbone

2.8. Aerial Photography Area

2.9. Remote Sensing Indices

3. Results

3.1. Module Effectiveness Evaluation

3.2. Module Evaluation

3.3. Accuracy Comparison with Other Models

3.4. Stand Density

3.5. Remote Sensing Indices Results

4. Discussion

4.1. Advantages of the Improvement Strategy

4.2. Advantages of Improved Faster R-CNN

4.3. Comparison with Transformer

4.4. Evaluation of Ecological Effects

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Backbone | DA | DE-FPN | Alpha-IoU | AP | AP50 | AP60 | AP70 |
|---|---|---|---|---|---|---|---|
| VGG11 | | | | 71.54 | 77.70 | 74.59 | 40.87 |
| | √ | | | 71.94 | 79.31 | 74.04 | 41.36 |
| | | √ | | 75.16 | 84.22 | 74.94 | 48.89 |
| | | | √ | 71.63 | 79.64 | 73.87 | 38.66 |
| | √ | √ | | 74.74 | 84.17 | 75.17 | 44.74 |
| | √ | | √ | 72.18 | 81.18 | 73.21 | 41.09 |
| | | √ | √ | 74.25 | 80.76 | 77.56 | 41.45 |
| | √ | √ | √ | 75.85 | 86.52 | 75.53 | 45.13 |
| VGG16 | | | | 73.27 | 85.27 | 72.11 | 41.88 |
| | √ | | | 75.76 | 86.84 | 76.28 | 40.45 |
| | | √ | | 73.94 | 83.49 | 75.02 | 40.92 |
| | | | √ | 73.98 | 89.43 | 70.68 | 40.84 |
| | √ | √ | | 76.81 | 90.83 | 72.11 | 53.55 |
| | √ | | √ | 74.71 | 87.79 | 71.54 | 48.16 |
| | | √ | √ | 75.75 | 89.55 | 74.27 | 40.30 |
| | √ | √ | √ | 77.65 | 97.77 | 70.15 | 47.27 |
| VGG19 | | | | 75.07 | 87.94 | 70.47 | 54.88 |
| | √ | | | 76.70 | 88.41 | 75.82 | 45.09 |
| | | √ | | 74.89 | 90.99 | 70.80 | 42.95 |
| | | | √ | 73.89 | 87.76 | 70.69 | 45.07 |
| | √ | √ | | 76.14 | 94.92 | 71.04 | 40.19 |
| | √ | | √ | 77.53 | 97.86 | 71.63 | 40.14 |
| | | √ | √ | 76.40 | 91.58 | 71.93 | 48.78 |
| | √ | √ | √ | 78.29 | 88.92 | 77.65 | 48.96 |
| ResNet18 | | | | 75.03 | 85.69 | 72.99 | 51.22 |
| | √ | | | 75.40 | 86.95 | 72.60 | 51.93 |
| | | √ | | 72.89 | 84.85 | 70.91 | 44.99 |
| | | | √ | 79.76 | 94.11 | 75.56 | 53.48 |
| | √ | √ | | 75.42 | 86.43 | 71.39 | 58.54 |
| | √ | | √ | 80.07 | 90.98 | 77.33 | 58.24 |
| | | √ | √ | 80.26 | 92.26 | 79.90 | 45.64 |
| | √ | √ | √ | 80.80 | 90.57 | 80.23 | 53.77 |
| ResNet34 | | | | 71.87 | 82.19 | 69.90 | 48.77 |
| | √ | | | 74.64 | 89.13 | 70.87 | 46.28 |
| | | √ | | 76.15 | 87.90 | 72.92 | 53.82 |
| | | | √ | 75.92 | 84.82 | 76.38 | 47.33 |
| | √ | √ | | 78.23 | 87.79 | 79.55 | 44.29 |
| | √ | | √ | 76.53 | 86.40 | 75.22 | 52.11 |
| | | √ | √ | 73.84 | 84.44 | 73.08 | 45.09 |
| | √ | √ | √ | 79.58 | 87.83 | 81.62 | 46.66 |
| ResNet50 | | | | 81.93 | 93.66 | 81.20 | 49.61 |
| | √ | | | 83.18 | 96.18 | 82.49 | 46.98 |
| | | √ | | 84.50 | 93.99 | 84.34 | 56.68 |
| | | | √ | 84.53 | 91.04 | 86.60 | 56.68 |
| | √ | √ | | 82.11 | 91.43 | 80.93 | 58.87 |
| | √ | | √ | 83.77 | 93.21 | 84.19 | 53.78 |
| | | √ | √ | 86.70 | 94.64 | 87.72 | 58.78 |
| | √ | √ | √ | 89.89 | 98.93 | 90.43 | 60.60 |
| MobileNet V2 | | | | 56.37 | 70.76 | 52.28 | 29.58 |
| | √ | | | 56.82 | 71.18 | 52.75 | 30.01 |
| | | √ | | 58.29 | 72.93 | 53.41 | 33.88 |
| | | | √ | 58.74 | 72.61 | 53.41 | 38.44 |
| | √ | √ | | 59.18 | 72.55 | 55.11 | 35.38 |
| | √ | | √ | 58.65 | 71.72 | 54.12 | 37.57 |
| | | √ | √ | 59.85 | 73.65 | 55.86 | 34.39 |
| | √ | √ | √ | 60.78 | 74.05 | 56.96 | 36.26 |
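The AP50, AP60 and AP70 columns presumably follow the common convention of average precision at IoU thresholds of 0.5, 0.6 and 0.7; the exact matching and interpolation protocol, and what the unsuffixed AP column averages over, are not stated in this section. Under that assumption, a minimal single-class, single-image sketch (greedy score-ordered matching with VOC-style all-point interpolation; function names are illustrative) is:

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """Single-class AP for one image; preds: [(score, box)], gts: [box]."""
    if not gts:
        return 0.0
    preds = sorted(preds, key=lambda p: p[0], reverse=True)
    matched = [False] * len(gts)
    tp = np.zeros(len(preds))
    for i, (_, box) in enumerate(preds):
        ious = [box_iou(box, g) for g in gts]
        j = int(np.argmax(ious))
        # A prediction is a true positive only if its best-overlapping
        # ground truth reaches the threshold and is not already taken.
        if ious[j] >= iou_thr and not matched[j]:
            matched[j] = True
            tp[i] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / len(gts)
    precision = cum_tp / np.arange(1, len(preds) + 1)
    # All-point interpolation: make precision non-increasing in recall.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
```

For example, a prediction offset by one pixel from a 10 × 10 ground-truth box has IoU 81/119 ≈ 0.68, so it counts as a hit for AP50 and AP60 but as a false positive for AP70, which is one reason AP70 is much lower than AP50 throughout the table.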

References

- Arellano, P.; Tansey, K.; Balzter, H.; Boyd, D.S. Detecting the effects of hydrocarbon pollution in the Amazon forest using hyperspectral satellite images. Environ. Pollut. 2015, 205, 225–239.

- Gamfeldt, L.; Snäll, T.; Bagchi, R.; Jonsson, M.; Gustafsson, L.; Kjellander, P.; Ruiz-Jaen, M.; Froberg, M.; Stendahl, J.; Philipson, C.D.; et al. Higher levels of multiple ecosystem services are found in forests with more tree species. Nat. Commun. 2013, 4, 1340.

- Ahirwal, J.; Maiti, S.K. Ecological Restoration of Abandoned Mine Land: Theory to Practice. Handb. Ecol. Ecosyst. Eng. 2021, 12, 231–246.

- Yao, X.; Niu, Y.; Dang, Z.; Qin, M.; Wang, K.; Zhou, Z.; Zhang, Q.; Li, J. Effects of natural vegetation restoration on soil quality on the Loess Plateau. J. Earth Environ. 2015, 6, 238–247.

- Li, Y.; Zhou, W.; Jing, M.; Wang, S.; Huang, Y.; Geng, B.; Cao, Y. Changes in Reconstructed Soil Physicochemical Properties in an Opencast Mine Dump in the Loess Plateau Area of China. Int. J. Environ. Res. Public Health 2022, 19, 706.

- Maiti, S.K.; Bandyopadhyay, S.; Mukhopadhyay, S. Importance of selection of plant species for successful ecological restoration program in coal mine degraded land. In Phytorestoration of Abandoned Mining and Oil Drilling Sites; Elsevier BV: Amsterdam, The Netherlands, 2021; pp. 325–357.

- Gilhen-Baker, M.; Roviello, V.; Beresford-Kroeger, D.; Roviello, G.N. Old growth forests and large old trees as critical organisms connecting ecosystems and human health. A review. Environ. Chem. Lett. 2022, 20, 1529–1538.

- Mi, J.; Yang, Y.; Hou, H.; Zhang, S.; Raval, S.; Chen, Z.; Hua, Y. The long-term effects of underground mining on the growth of tree, shrub, and herb communities in arid and semiarid areas in China. Land Degrad. Dev. 2021, 32, 1412–1425.

- Li, S.; Wang, J.; Zhang, M.; Tang, Q. Characterizing and attributing the vegetation coverage changes in North Shanxi coal base of China from 1987 to 2020. Resour. Policy 2021, 74, 102331.

- Jin, J.; Yan, C.; Tang, Y.; Yin, Y. Mine Geological Environment Monitoring and Risk Assessment in Arid and Semiarid Areas. Complexity 2021, 2021, 3896130.

- Han, B.; Jin, X.; Xiang, X.; Rui, S.; Zhang, X.; Jin, Z.; Zhou, Y. An integrated evaluation framework for Land-Space ecological restoration planning strategy making in rapidly developing area. Ecol. Indic. 2021, 124, 107374.

- Błaszczak-Bąk, W.; Janicka, J.; Kozakiewicz, T.; Chudzikiewicz, K.; Bąk, G. Methodology of Calculating the Number of Trees Based on ALS Data for Forestry Applications for the Area of Samławki Forest District. Remote Sens. 2021, 14, 16.

- Wang, W.; Liu, R.; Gan, F.; Zhou, P.; Zhang, X.; Ding, L. Monitoring and Evaluating Restoration Vegetation Status in Mine Region Using Remote Sensing Data: Case Study in Inner Mongolia, China. Remote Sens. 2021, 13, 1350.

- Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

- Asadzadeh, S.; de Oliveira, W.J.; de Souza Filho, C.R. UAV-based remote sensing for the petroleum industry and environmental monitoring: State-of-the-art and perspectives. J. Pet. Sci. Eng. 2022, 208, 109633.

- Jung, J.; Maeda, M.; Chang, A.; Bhandari, M.; Ashapure, A.; Landivar-Bowles, J. The potential of remote sensing and artificial intelligence as tools to improve the resilience of agriculture production systems. Curr. Opin. Biotechnol. 2021, 70, 15–22.

- Hou, M.; Tian, F.; Ortega-Farias, S.; Riveros-Burgos, C.; Zhang, T.; Lin, A. Estimation of crop transpiration and its scale effect based on ground and UAV thermal infrared remote sensing images. Eur. J. Agron. 2021, 131, 126389.

- Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337.

- Lv, Y.; Zhang, C.; Yun, W.; Gao, L.; Wang, H.; Ma, J.; Li, H.; Zhu, D. The delineation and grading of actual crop production units in modern smallholder areas using RS Data and Mask R-CNN. Remote Sens. 2020, 12, 1074.

- Abeysinghe, T.; Milas, A.S.; Arend, K.; Hohman, B.; Reil, P.; Gregory, A.; Vázquez-Ortega, A. Mapping invasive Phragmites australis in the Old Woman Creek estuary using UAV remote sensing and machine learning classifiers. Remote Sens. 2019, 11, 1380.

- Roslan, Z.; Long, Z.A.; Husen, M.N.; Ismail, R.; Hamzah, R. Deep Learning for Tree Crown Detection in Tropical Forest. In Proceedings of the 14th International Conference on Ubiquitous Information Management and Communication, IMCOM, Taichung, Taiwan, 3–5 January 2020; Volume 2020.

- Bajpai, G.; Gupta, A.; Chauhan, N. Real Time Implementation of Convolutional Neural Network to Detect Plant Diseases Using Internet of Things. In Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2019; Volume 1066, pp. 510–522.

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.

- Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

- Sferlazza, S.; Maltese, A.; Dardanelli, G.; Veca, D.S.L.M. Optimizing the sampling area across an old-growth forest via UAV-borne laser scanning, GNSS, and radial surveying. ISPRS Int. J. Geo-Inf. 2022, 11, 168.

- Michez, A.; Piégay, H.; Lisein, J.; Claessens, H.; Lejeune, P. Classification of riparian forest species and health condition using multi-temporal and hyperspatial imagery from unmanned aerial system. Environ. Monit. Assess. 2016, 188, 146.

- Qiu, L.; Jing, L.; Hu, B.; Li, H.; Tang, Y. A New Individual Tree Crown Delineation Method for High Resolution Multispectral Imagery. Remote Sens. 2020, 12, 585.

- Franklin, S.E.; Ahmed, O.S. Deciduous Tree Species Classification Using Object-Based Analysis and Machine Learning with Unmanned Aerial Vehicle Multispectral Data. Int. J. Remote Sens. 2018, 39, 5236–5245.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

- Xiang, T.Z.; Xia, G.S.; Zhang, L. Mini-Unmanned Aerial Vehicle-Based Remote Sensing: Techniques, applications, and prospects. IEEE Geosci. Remote Sens. Mag. 2019, 7, 29–63.

- Fu, L.; Zhang, D.; Ye, Q. Recurrent thrifty attention network for remote sensing scene recognition. IEEE Trans. Geosci. Remote Sens. 2020, 59, 8257–8268.

- Guo, X.; Liu, Q.; Sharma, R.P.; Chen, Q.; Ye, Q.; Tang, S.; Fu, L. Tree Recognition on the Plantation Using UAV Images with Ultrahigh Spatial Resolution in a Complex Environment. Remote Sens. 2021, 13, 4122.

- Xie, E.; Ding, J.; Wang, W.; Zhan, X.; Xu, H.; Sun, P.; Li, Z.; Luo, P. DetCo: Unsupervised Contrastive Learning for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11 October 2021; pp. 8392–8401.

- Wang, W.; Lai, Q.; Fu, H.; Shen, J.; Ling, H.; Yang, R. Salient Object Detection in the Deep Learning Era: An In-depth Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3239–3259.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Gidaris, S.; Komodakis, N. Object detection via a multi-region and semantic segmentation-aware CNN model. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1134–1142.

- Girshick, R. Fast R-CNN. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. Eur. Conf. Comput. Vis. 2016, 9905, 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 9626–9635.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. IEEE Conf. Comput. Vis. Pattern Recognit. 2017, 11, 936–944.

- Guo, C.; Fan, B.; Zhang, Q.; Xiang, S.; Pan, C. AugFPN: Improving multi-scale feature learning for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12592–12601.

- Qiao, S.; Chen, L.C.; Yuille, A. DetectoRS: Detecting Objects with Recursive Feature Pyramid and Switchable Atrous Convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10208–10219.

- Zhang, Y.; Chen, G.; Cai, Z. Small Target Detection Based on Squared Cross Entropy and Dense Feature Pyramid Networks. IEEE Access 2021, 9, 55179–55190.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

- Yang, Y.; Jing, J.; Tang, Z. Impact of injection temperature and formation slope on CO2 storage capacity and form in the Ordos Basin, China. Environ. Sci. Pollut. Res. 2022, 1–21.

- Zhang, Z.; Li, G.; Su, X.; Zhuang, X.; Wang, L.; Fu, H.; Li, L. Geochemical controls on the enrichment of fluoride in the mine water of the Shendong mining area, China. Chemosphere 2021, 284, 131388.

- Xiao, W.; Zhang, W.; Ye, Y.; Lv, X.; Yang, W. Is underground coal mining causing land degradation and significantly damaging ecosystems in semi-arid areas? A study from an Ecological Capital perspective. Land Degrad. Dev. 2020, 31, 1969–1989.

- Zeng, Y.; Lian, H.; Du, X.; Tan, X.; Liu, D. An Analog Model Study on Water–Sand Mixture Inrush Mechanisms During the Mining of Shallow Coal Seams. Mine Water Environ. 2022, 41, 428–436.

- Liu, Y.; Cen, C.; Che, Y.; Ke, R.; Ma, Y. Detection of maize tassels from UAV RGB imagery with Faster R-CNN. Remote Sens. 2020, 12, 338.

- Yuan, C.; Agaian, S.S. BiThermalNet: A lightweight network with BNN RPN for thermal object detection. In Multimodal Image Exploitation and Learning 2022; SPIE: Orlando, FL, USA, 2022; Volume 12100, pp. 114–123.

- Pazhani, A.; Vasanthanayaki, C. Object detection in satellite images by Faster R-CNN incorporated with enhanced ROI pooling (FrRNet-ERoI) framework. Earth Sci. Inform. 2022, 15, 553–561.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the 2019 International Conference on Computer Vision Workshop, ICCVW 2019, Seoul, Korea, 27–28 October 2019; pp. 1971–1980.

- Naveed, H. Survey: Image mixing and deleting for data augmentation. arXiv 2021, arXiv:2106.07085.

- Yang, G.; Yu, S.; Dong, H.; Slabaugh, G.; Dragotti, P.L.; Ye, X.; Liu, F.; Arridge, S.; Keegan, J.; Guo, Y.; et al. DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruction. IEEE Trans. Med. Imaging 2018, 37, 1310–1321.

- Yang, Y.; Deng, H. GC-YOLOv3: You only look once with global context block. Electronics 2020, 9, 1235.

- He, J.; Erfani, S.; Ma, X.; Bailey, J.; Chi, Y.; Hua, X.-S. Alpha-IoU: A Family of Power Intersection over Union Losses for Bounding Box Regression. arXiv 2021, arXiv:2110.13675.

- Wu, S.; Yang, J.; Wang, X.; Li, X. IoU-balanced loss functions for single-stage object detection. Pattern Recognit. Lett. 2022, 156, 96–103.

- Li, J.; Cheng, B.; Feris, R.; Xiong, J.; Huang, T.S.; Hwu, W.M.; Shi, H. Pseudo-IoU: Improving label assignment in anchor-free object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2378–2387.

- Zaidi, S.; Ansari, M.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A Survey of Modern Deep Learning based Object Detection Models. arXiv 2021, arXiv:2104.11892.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Cao, Y.; Cheng, X.; Mu, J. Concentrated Coverage Path Planning Algorithm of UAV Formation for Aerial Photography. IEEE Sens. J. 2022, 22, 11098–11111.

- Barbosa, B.D.S.; Araújo e Silva Ferraz, G.; Mendes dos Santos, L.; Santana, L.S.; Marin, D.B.; Rossi, G.; Conti, L. Application of RGB Images Obtained by UAV in Coffee Farming. Remote Sens. 2021, 13, 2397.

- Hu, X.; Niu, B.; Li, X.; Min, X. Unmanned aerial vehicle (UAV) remote sensing estimation of wheat chlorophyll in subsidence area of coal mine with high phreatic level. Earth Sci. Inform. 2021, 14, 2171–2181.

- Erunova, M.G.; Pisman, T.I.; Shevyrnogov, A.P. The Technology for Detecting Weeds in Agricultural Crops Based on Vegetation Index VARI (PlanetScope). J. Sib. Fed. Univ. Eng. Technol. 2021, 14, 347–353.

- Aravena, R.A.; Lyons, M.B.; Roff, A.; Keith, D.A. A Colourimetric Approach to Ecological Remote Sensing: Case Study for the Rainforests of South-Eastern Australia. Remote Sens. 2021, 13, 2544.

- Wang, F.; Zhang, Z.; Liu, C.; Yu, Y.; Pang, S.; Duic, N.; Shafie-khah, N.; Catalao, P.S. Generative adversarial networks and convolutional neural networks based weather classification model for day ahead short-term photovoltaic power forecasting. Energy Convers. Manag. 2019, 181, 443–462.

- Liao, Y.; Zhang, G.; Yang, Z.; Liu, W. EFLDet: Enhanced feature learning for object detection. Neural Comput. Appl. 2021, 34, 1033–1045.

- Khan, S.D.; Alarabi, L.; Basalamah, S. A unified deep learning framework of multi-scale detectors for geo-spatial object detection in high-resolution satellite images. Arab. J. Sci. Eng. 2022, 47, 9489–9504.

- Li, H.; Liu, B.; Zhang, Y.; Fu, C.; Han, X.; Du, L.; Gao, W.; Chen, Y.; Liu, X.; Wang, Y.; et al. 3D IFPN: Improved Feature Pyramid Network for Automatic Segmentation of Gastric Tumor. Front. Oncol. 2021, 11, 618496.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.Y.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seoul, Korea, 27–28 October 2019; pp. 658–666.

- Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IoU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Li, Y.; Chen, Y.; Wang, N.; Zhang, Z. Scale-aware trident networks for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 6054–6063.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Yu, J.; Zhang, W. Face mask wearing detection algorithm based on improved YOLO-v4. Sensors 2021, 21, 3263.

- Wu, W.; Liu, H.; Li, L.; Long, Y.; Wang, X.; Wang, Z.; Li, J.; Chang, Y. Application of local fully Convolutional Neural Network combined with YOLO v5 algorithm in small target detection of remote sensing image. PLoS ONE 2021, 16, e0259283.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Zheng, Q.; Li, Y.; Zheng, L.; Shen, Q. Progressively real-time video salient object detection via cascaded fully convolutional networks with motion attention. Neurocomputing 2022, 467, 465–475.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 6569–6578.

- Zhu, X.; Su, W.; Lu, L.; Li, B. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Song, H.; Sun, D.; Chun, S.; Jampani, V.; Han, D.; Heo, B.; Kim, W.; Yang, M. ViDT: An efficient and effective fully transformer-based object detector. arXiv 2021, arXiv:2110.03921.

- Lou, X.; Huang, Y.; Fang, L.; Huang, S.; Gao, H.; Yang, L.; Weng, Y. Measuring loblolly pine crowns with drone imagery through deep learning. J. For. Res. 2021, 33, 227–238.

- Zhang, K.; Shen, H. Solder joint defect detection in the connectors using improved Faster R-CNN algorithm. Appl. Sci. 2021, 11, 576.

- Liang, D.; Yang, F.; Zhang, T.; Yang, P. Understanding mixup training methods. IEEE Access 2018, 6, 58774–58783.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 843–852.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Zhu, L.; Xie, Z.; Liu, L.; Tao, B.; Tao, W. IoU-uniform R-CNN: Breaking through the limitations of RPN. Pattern Recognit. 2021, 112, 107816.

- Tian, Y.; Su, D.; Lauria, S.; Liu, X. Recent Advances on Loss Functions in Deep Learning for Computer Vision. Neurocomputing 2022, 14, 223–231.

- Veselá, H.; Lhotáková, Z.; Albrechtová, J.; Frouz, J. Seasonal changes in tree foliage and litterfall composition at reclaimed and unreclaimed post-mining sites. Ecol. Eng. 2021, 173, 106424.

- Wang, X.; Zhao, Q.; Jiang, P.; Zheng, Y.; Yuan, L.; Yuan, P. LDS-YOLO: A lightweight small object detection method for dead trees from shelter forest. Comput. Electron. Agric. 2022, 198, 107035.

- Karpov, P.; Godin, G.; Tetko, I.V. Transformer-CNN: Swiss knife for QSAR modeling and interpretation. J. Cheminform. 2020, 12, 17.

- Xie, Y.; Zhang, J.; Shen, C.; Xia, Y. CoTr: Efficiently bridging CNN and transformer for 3D medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer: Cham, Switzerland, 2021; pp. 171–180.

- Kong, Q.; Xu, Y.; Wang, W.; Plumbley, M.D. Sound event detection of weakly labelled data with CNN-transformer and automatic threshold optimization. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2450–2460.

- Li, Z.; Chen, G.; Zhang, T. A CNN-transformer hybrid approach for crop classification using multitemporal multisensor images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 847–858.

- Chen, K.; Wu, Y.; Wang, Z.; Zhang, X.; Nian, F.; Li, S.; Shao, X. Audio Captioning Based on Transformer and Pre-Trained CNN. In DCASE; Case Publishing: Tokyo, Japan, 2020; pp. 21–25.

- Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive tree biovolume from UAV multi-resolution image segmentation with Mask R-CNN. Sensors 2021, 21, 1617.

- D’Odorico, P.; Schönbeck, L.; Vitali, V.; Meusburger, K.; Schaub, M.; Ginzler, C.; Zweifel, R.; Velasco, V.M.E.; Gisler, J.; Gessler, A.; et al. Drone-based physiological index reveals long-term acclimation and drought stress responses in trees. Plant Cell Environ. 2021, 44, 3552–3570.

- Chuvieco, E. Fundamentals of Satellite Remote Sensing: An Environmental Approach; CRC Press: Boca Raton, FL, USA, 2016.

- Wu, S.; Wang, J.; Yan, Z.; Song, G.; Chen, Y.; Ma, Q.; Deng, M.; Wu, Y.; Zhao, Y.; Guo, Z.; et al. Monitoring tree-crown scale autumn leaf phenology in a temperate forest with an integration of PlanetScope and drone remote sensing observations. ISPRS J. Photogramm. Remote Sens. 2021, 171, 36–48.

- Xi, D.; Lei, D.; Si, G.; Liu, W.; Zhang, T.; Mulder, J. Long-term 15N balance after single-dose input of 15N-labeled NH4+ and NO3− in a subtropical forest under reducing N deposition. Glob. Biogeochem. Cycles 2021, 35, e2021GB006959.

| Parameter | Value |
|---|---|
| Total pixels per sensor | 2.12 million |
| Effective pixels per sensor | 2.08 million |
| FOV | 62.7° |
| Focal length | 5.74 mm |
| Aperture | f/2.2 |
| Average ground sampling distance | 5 m |
| Spatial resolution | 0.01 m |
| Flight time | 10:00–15:00 |
| Flight height | 30 m |
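Flight height, focal length and field of view together fix how much ground each pixel covers. A minimal sketch of the standard nadir pinhole-camera relations, assuming flat terrain and a vertical camera, is given below; the helper names are illustrative, and the sensor pixel pitch is not listed in the table above, so any value plugged in for it is an assumption.

```python
import math


def ground_sampling_distance(flight_height_m, pixel_pitch_m, focal_length_m):
    """Nadir GSD in metres per pixel from the pinhole model: GSD = H * p / f."""
    if focal_length_m <= 0:
        raise ValueError("focal length must be positive")
    return flight_height_m * pixel_pitch_m / focal_length_m


def footprint_length(flight_height_m, fov_deg):
    """Ground length spanned by a field of view over flat terrain: 2 * H * tan(FOV / 2)."""
    return 2.0 * flight_height_m * math.tan(math.radians(fov_deg) / 2.0)
```

Dividing `footprint_length(30, 62.7)` by the number of pixels along the same image axis gives a second GSD estimate; note that the table does not state whether 62.7° is the diagonal or the horizontal FOV, which changes which pixel count applies.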

| Module | Method | ΔAP |
|---|---|---|
| DA | RE | 0.16 |
| | RIC | 0.31 |
| | DAGAN | 0.82 |
| | RE + RIC + DAGAN | 1.26 |
| FPN | EnFPN | 1.99 |
| | AugFPN | 2.13 |
| | iFPN | 2.44 |
| | DE-FPN | 2.82 |
| IoU Loss | DIoU | 1.33 |
| | GIoU | 0.82 |
| | CIoU | 2.21 |
| | Alpha-IoU | 2.60 |
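Alpha-IoU (He et al., cited in the reference list) raises the IoU term, and optionally the penalty term, to a power α: the plain form is 1 − IoU^α and the DIoU-based form is 1 − IoU^α + (ρ²/c²)^α, where ρ is the distance between box centres and c is the diagonal of the smallest enclosing box; α = 1 recovers the ordinary IoU and DIoU losses. The scalar sketch below uses α = 3, the setting suggested by the Alpha-IoU authors, purely for illustration; the α value and penalty form used in this study are not given here, and a training implementation would operate on batched tensors instead.

```python
def iou_and_center_penalty(pred, gt):
    """Return (IoU, rho^2 / c^2) for non-degenerate boxes (x1, y1, x2, y2).

    rho is the distance between box centres and c is the diagonal of the
    smallest box enclosing both boxes (the DIoU penalty term).
    """
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_g = (gt[2] - gt[0]) * (gt[3] - gt[1])
    iou = inter / (area_p + area_g - inter)
    # Squared distance between the two box centres.
    rho2 = (((pred[0] + pred[2]) - (gt[0] + gt[2])) / 2) ** 2 + (
        ((pred[1] + pred[3]) - (gt[1] + gt[3])) / 2) ** 2
    # Squared diagonal of the smallest enclosing box.
    c2 = (max(pred[2], gt[2]) - min(pred[0], gt[0])) ** 2 + (
        max(pred[3], gt[3]) - min(pred[1], gt[1])) ** 2
    return iou, rho2 / c2


def alpha_iou_loss(pred, gt, alpha=3.0):
    """Power IoU loss: 1 - IoU**alpha (alpha = 1 gives the plain IoU loss)."""
    iou, _ = iou_and_center_penalty(pred, gt)
    return 1.0 - iou ** alpha


def alpha_diou_loss(pred, gt, alpha=3.0):
    """Power DIoU loss: 1 - IoU**alpha + (rho^2 / c^2)**alpha."""
    iou, penalty = iou_and_center_penalty(pred, gt)
    return 1.0 - iou ** alpha + penalty ** alpha
```

For a prediction `(0, 0, 10, 10)` against a ground truth `(0, 0, 10, 5)`, IoU is 0.5, so the loss is 0.5 with α = 1 but 0.875 with α = 3; the power changes how strongly boxes at different overlap levels are penalised.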

| Model | Backbone | AP | AP50 | AP60 | AP70 |
|---|---|---|---|---|---|
| Improved Faster R-CNN | ResNet50 | 89.89 | 98.93 | 90.43 | 60.60 |
| Mask R-CNN [81] | ResNet50 | 81.42 | 87.76 | 82.26 | 59.04 |
| TridentNet [82] | ResNet50 | 79.70 | 81.25 | 85.89 | 50.27 |
| YOLO v3 [83] | DarkNet-53 | 85.18 | 90.47 | 89.51 | 52.02 |
| YOLO v4 [84] | CSPDarkNet-53 | 78.85 | 82.93 | 81.84 | 54.67 |
| YOLO v5 [85] | ResNet50 | 83.89 | 93.58 | 84.77 | 51.29 |
| SSD [86] | ResNet50 | 82.24 | 90.25 | 83.54 | 52.99 |
| FCOS [87] | ResNet50 | 83.71 | 92.43 | 85.46 | 50.55 |
| CenterNet511 [88] | Hourglass104 | 84.66 | 91.00 | 87.32 | 55.02 |
| EFLDet [59] | BRNet-ResNet50 | 86.08 | 90.38 | 89.83 | 58.17 |
| DETR [89] | ResNet50 | 86.06 | 94.74 | 86.63 | 58.73 |
| ViDT [90] | ViT | 85.67 | 91.28 | 88.29 | 58.39 |

| | A | B | C | D |
|---|---|---|---|---|
| Number of trees | 1539 | 971 | 602 | 793 |
| Area (ha) | 7.546 | 7.546 | 7.546 | 7.546 |
| Stand density (trees ha⁻¹) | 203.95 | 128.68 | 79.78 | 105.09 |
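Stand density in the table is the tree count divided by the plot area. The short sketch below reproduces the reported densities from the counts and the 7.546 ha area given in the table; the function and variable names are illustrative.

```python
def stand_density(n_trees, area_ha):
    """Stand density in trees per hectare."""
    if area_ha <= 0:
        raise ValueError("area must be positive")
    return n_trees / area_ha


# Tree counts per area and the common plot area, taken from the table above.
tree_counts = {"A": 1539, "B": 971, "C": 602, "D": 793}
AREA_HA = 7.546

densities = {area: round(stand_density(n, AREA_HA), 2)
             for area, n in tree_counts.items()}
# densities -> {'A': 203.95, 'B': 128.68, 'C': 79.78, 'D': 105.09}
```

Because every area has the same extent, the density ranking A > B > D > C is simply the ranking of the tree counts.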

| Area | Statistic | GLI | RGBVI | VARI | NGRDI |
|---|---|---|---|---|---|
| A | Min | 0.06 | 0.16 | 0.02 | 0.03 |
| | Max | 0.15 | 0.22 | 0.06 | 0.05 |
| | Mean | 0.09 | 0.17 | 0.04 | 0.04 |
| B | Min | 0.07 | 0.21 | 0.01 | 0.02 |
| | Max | 0.17 | 0.49 | 0.08 | 0.07 |
| | Mean | 0.10 | 0.27 | 0.05 | 0.05 |
| C | Min | 0.07 | 0.16 | 0.01 | 0.02 |
| | Max | 0.16 | 0.51 | 0.12 | 0.18 |
| | Mean | 0.12 | 0.34 | 0.07 | 0.08 |
| D | Min | 0.06 | 0.21 | 0.02 | 0.03 |
| | Max | 0.22 | 0.77 | 0.25 | 0.19 |
| | Mean | 0.15 | 0.43 | 0.12 | 0.09 |
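The four indices in the table are visible-band vegetation indices computed from red (R), green (G) and blue (B) values. The sketch below uses their commonly published definitions, GLI = (2G − R − B)/(2G + R + B), RGBVI = (G² − B·R)/(G² + B·R), VARI = (G − R)/(G + R − B) and NGRDI = (G − R)/(G + R); it assumes the study used these standard forms, and any radiometric normalisation it applied, and how pixels were grouped into the Min/Max/Mean statistics, are not stated here. Helper names are illustrative.

```python
import numpy as np


def _safe_ratio(num, den, eps=1e-9):
    """Element-wise num / den, with NaN where |den| <= eps."""
    ok = np.abs(den) > eps
    return np.where(ok, num / np.where(ok, den, 1.0), np.nan)


def rgb_indices(rgb):
    """Per-pixel GLI, RGBVI, VARI and NGRDI for an array whose last axis is (R, G, B)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return {
        "GLI": _safe_ratio(2 * g - r - b, 2 * g + r + b),
        "RGBVI": _safe_ratio(g * g - b * r, g * g + b * r),
        "VARI": _safe_ratio(g - r, g + r - b),
        "NGRDI": _safe_ratio(g - r, g + r),
    }


def summarize(index_map):
    """Min / Max / Mean of an index map, ignoring NaN pixels."""
    return {"Min": float(np.nanmin(index_map)),
            "Max": float(np.nanmax(index_map)),
            "Mean": float(np.nanmean(index_map))}
```

Applying `summarize` to each index over the pixels of one area (or of the crowns detected in it) yields statistics of the kind reported in the table; VARI's denominator can approach zero on bright, grey pixels, which is why near-zero denominators are masked as NaN.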

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, M.; Tian, Y.; Zhang, S.; Huang, L.; Wang, H.; Liu, Z.; Yang, L. Individual Tree Detection in Coal Mine Afforestation Area Based on Improved Faster RCNN in UAV RGB Images. Remote Sens. 2022, 14, 5545. https://doi.org/10.3390/rs14215545

Luo M, Tian Y, Zhang S, Huang L, Wang H, Liu Z, Yang L. Individual Tree Detection in Coal Mine Afforestation Area Based on Improved Faster RCNN in UAV RGB Images. Remote Sensing. 2022; 14(21):5545. https://doi.org/10.3390/rs14215545

Chicago/Turabian Style: Luo, Meng, Yanan Tian, Shengwei Zhang, Lei Huang, Huiqiang Wang, Zhiqiang Liu, and Lin Yang. 2022. "Individual Tree Detection in Coal Mine Afforestation Area Based on Improved Faster RCNN in UAV RGB Images" Remote Sensing 14, no. 21: 5545. https://doi.org/10.3390/rs14215545