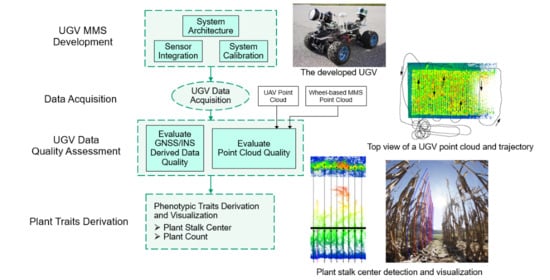

Figure 1.

Unmanned ground vehicle (UGV) development and data quality assessment workflow. MMS: mobile mapping system; GNSS/INS: global navigation satellite system/inertial navigation system; UAV: unmanned aerial vehicle.

Figure 2.

The UGV MMS developed in this research. LiDAR: light detection and ranging.

Figure 3.

Integration architecture showing the flow of information among the sensors. PPS: pulse per second; GPRMC: GPS satellite recommended minimum navigation sentence C.

Figure 4.

The developed UGV with the orientation of the coordinate systems for the GNSS/INS body frame (yellow), RGB camera frame (red), and LiDAR frame (green).

Figure 5.

Projection of a point in the LiDAR point cloud, colored by intensity (middle), onto the corresponding original fisheye lens image (left) and rectified image (right) using the estimated LiDAR–camera system calibration parameters.

Figure 6.

UAV orthophotos of fields: (a) ACRE-9D and (b) ACRE-12.

Figure 7.

Above-canopy phenotyping systems used in this study: (a) UAV and (b) PhenoRover.

Figure 8.

The UGV traversing under the canopy in a maize field.

Figure 9.

Sample images from the camera onboard the UGV, showing canopy coverage for: (a) A-1 and (b) B-1 datasets. The A-1 dataset has low to moderate canopy cover, whereas in the B-1 dataset, the coverage is mostly low due to plant senescence late in the season.

Figure 10.

Point clouds (colored by height from blue to red) and trajectory (black lines) for: (a) A-1 dataset (captured by the UGV), and (b) A-2 dataset (captured by the UAV).

Figure 11.

Point clouds (colored by height from blue to red) and trajectory (black lines) for: (a) B-1 dataset (captured by the UGV), (b) B-2 dataset (captured by the PhenoRover), and (c) B-3 dataset (captured by the UAV).

Figure 12.

Position accuracy, combined separation, and float/fixed ambiguity status charts for the A-1 dataset with different base station options: (a) P775, (b) INWL, (c) PRDU, and (d) P775+INWL+PRDU. Option-d produced the best estimate in this case.

Figure 13.

Option-d: Effect of different extents of canopy cover on the float/fixed ambiguity status for the A-1 dataset.

Figure 14.

Position accuracy, combined separation, and float/fixed ambiguity status charts for the B-1 dataset with different base station options: (a) P775, (b) INWL, (c) PRDU, and (d) P775+INWL+PRDU. In this case, option-b produced the best result, whereas option-d produced the worst.

Figure 15.

Illustration of plant rows and alley locations.

Figure 16.

Illustration of the coordinate systems involved in plant row/alley detection.

Figure 17.

Point density maps along with the trajectory (black lines) for: (a) A-1 dataset (captured by UGV), and (b) A-2 dataset (captured by UAV).

Figure 18.

Selected profiles in field ACRE-9D: (a) profile locations, (b) P1 side view, (c) P2 side view, and (d) P3 side view.

Figure 19.

Peak/valley detection results for the A-1 (UGV) and A-2 (UAV) datasets: (a) plant row locations where the gray dotted lines indicate the UGV tracks, and (b) alley locations.

Figure 20.

Impact of trajectory accuracy on the point cloud visualized as: (a) a map showing the Z-difference between the UGV and UAV point clouds based on extracted terrain patches for ACRE-9D, with a selected region where the solution type was float highlighted by the red box, and (b) a side view of the two point clouds for the selected region, showing a discrepancy in the ground elevation.

Figure 21.

Point density maps along with the trajectory (black lines) for: (a) B-1 dataset (captured by UGV), (b) B-2 dataset (captured by PhenoRover), and (c) B-3 dataset (captured by UAV).

Figure 22.

Selected profile in field ACRE-12: (a) profile locations, and (b) P1 side view.

Figure 23.

Peak detection result showing the plant row locations for B-1 (UGV), B-2 (PhenoRover), and B-3 (UAV) datasets. The gray dotted lines indicate the UGV tracks.

Figure 24.

Impact of trajectory accuracy on the point cloud visualized as: (a) a map showing the Z-difference between the UGV and UAV point clouds based on extracted terrain patches for ACRE-12, with a selected region for comparing point cloud alignment highlighted by the red box, and (b) a side view of the two point clouds for the selected region, showing good alignment of the ground elevation.

Figure 25.

Detected plant centers along with the UGV trajectory and plant row ID superimposed on the UGV point cloud (colored by height) in ACRE-12. Selected areas i, ii, and iii show locations with reasonable-quality, sparse, and noisy point clouds, respectively.

Figure 26.

A top view with automatically detected plant centers together with a side view showing manually established plant locations superimposed on the UGV point cloud (colored by height) at location i in Figure 25. The thick black line shows the agreement between the automatic detection and ground truth.

Figure 27.

Plant location visualization showing the plant centers as vertical lines for the left plant row (corresponding to the area shown in Figure 26, which is location i in Figure 25) in the: (a) original image and (b) rectified image. The blue and red colors are for easy identification of adjacent plants.

Figure 28.

Factors that complicate the manual identification of plants, including: (a) a sparse point cloud at location ii in Figure 25 and (b) collapsed leaves in the plant rows and a noisy point cloud at location iii in Figure 25.

Figure 29.

A top view with automatically detected plant centers together with a side view showing manually established plant locations superimposed on the UGV point cloud (colored by height) showing areas with: (a) low point density (location ii) and (b) high noise level (location iii). The thick black line shows the agreement between the automatic detection and ground truth.

Table 1.

Manufacturer-specified post-processed (PP) performance of the SPAN-IGM during GNSS outages [27].

| Outage Duration (s) | Horizontal Position Accuracy (m) | Vertical Position Accuracy (m) | Roll Accuracy (°) | Pitch Accuracy (°) | Heading Accuracy (°) |
|---|---|---|---|---|---|
| 0 | ±0.01 | ±0.02 | ±0.006 | ±0.006 | ±0.019 |
| 10 | ±0.02 | ±0.02 | ±0.007 | ±0.007 | ±0.021 |
| 60 | ±0.22 | ±0.10 | ±0.008 | ±0.008 | ±0.024 |

Table 2.

Field datasets used in this study.

| Dataset | Field | Data Acquisition Date | System | Number of Tracks | Sensor-to-Object Distance | Track-to-Track Lateral Distance |
|---|---|---|---|---|---|---|
| A-1 | ACRE-9D | 2020/09/29 | UGV | 4 | 0.5–100 m | 4–7.5 m |
| A-2 | ACRE-9D | 2020/09/22 | UAV | 3 | 40 m | 8 m |
| B-1 | ACRE-12 | 2020/11/03 | UGV | 8 | 0.5–100 m | 2.5–7 m |
| B-2 | ACRE-12 | 2020/11/05 | PhenoRover | 10 | 4–10 m | 2.5–4 m |
| B-3 | ACRE-12 | 2020/11/03 | UAV | 4 | 40 m | 8 m |

Table 3.

Base stations and available GNSS signal type. All base stations are located near West Lafayette, Indiana.

| Dataset | Base Station Code | Baseline Distance from Test Site (km) | Available Signal Type |
|---|---|---|---|
| A-1 | P775 | 0.6 | GPS |
| A-1 | INWL | 6.0 | GPS+GLONASS |
| A-1 | PRDU | 8.5 | GPS+GLONASS |
| B-1 | P775 | 0.9 | GPS |
| B-1 | INWL | 5.3 | GPS+GLONASS |
| B-1 | PRDU | 7.8 | GPS+GLONASS |

Table 4.

Number of points in the surveyed area and statistics of the point density for A-1 (UGV) and A-2 (UAV) datasets.

| Dataset | Number of Points (Millions) | Point Density, 25th Percentile (points/m²) | Point Density, Median (points/m²) | Point Density, 75th Percentile (points/m²) |
|---|---|---|---|---|
| A-1 (UGV) | 85.0 | 1200 | 9600 | 38,400 |
| A-2 (UAV) | 4.7 | 1200 | 2000 | 2800 |

Table 5.

Estimated vertical discrepancy and square root of a posteriori variance factor using A-1 (UGV) and A-2 (UAV) datasets.

| Reference | Source | Number of Observations | Square Root of A Posteriori Variance Factor | Vertical Discrepancy, Mean | Vertical Discrepancy, Std. Dev. |
|---|---|---|---|---|---|
| A-1 (UGV) | A-2 (UAV) | 2118 | 0.048 | −0.013 | 0.001 |

Table 6.

Estimated planimetric discrepancy and square root of a posteriori variance factor using A-1 (UGV) and A-2 (UAV) datasets.

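| Reference | Source | Number of Observations | Square Root of A Posteriori Variance Factor | Planimetric Discrepancy (1st Component), Mean | Planimetric Discrepancy (1st Component), Std. Dev. | Planimetric Discrepancy (2nd Component), Mean | Planimetric Discrepancy (2nd Component), Std. Dev. |
|---|---|---|---|---|---|---|---|
| A-1 (UGV) | A-2 (UAV) | 19 alleys and 25 plant rows | 0.072 | 0.050 | 0.017 | −0.016 | 0.014 |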

Table 7.

Number of points in the surveyed area and statistics of the point density for B-1 (UGV), B-2 (PhenoRover), and B-3 (UAV) datasets.

| Dataset | Number of Points (Millions) | Point Density, 25th Percentile (points/m²) | Point Density, Median (points/m²) | Point Density, 75th Percentile (points/m²) |
|---|---|---|---|---|
| B-1 (UGV) | 53.0 | 4400 | 17,200 | 50,800 |
| B-2 (PhenoRover) | 78.8 | 23,200 | 49,200 | 92,000 |
| B-3 (UAV) | 6.7 | 2800 | 3600 | 4400 |

Table 8.

Estimated vertical discrepancy and square root of a posteriori variance factor using B-1 (UGV), B-2 (PhenoRover), and B-3 (UAV) datasets.

| Reference | Source | Number of Observations | Square Root of A Posteriori Variance Factor | Vertical Discrepancy, Mean | Vertical Discrepancy, Std. Dev. |
|---|---|---|---|---|---|
| B-1 (UGV) | B-2 (PhenoRover) | 291 | 0.038 | 0.014 | 0.002 |
| B-1 (UGV) | B-3 (UAV) | 307 | 0.038 | 0.052 | 0.002 |

Table 9.

Estimated planimetric discrepancy and square root of a posteriori variance factor using B-1 (UGV), B-2 (PhenoRover), and B-3 (UAV) datasets.

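| Reference | Source | Number of Observations | Square Root of A Posteriori Variance Factor | Planimetric Discrepancy (1st Component), Mean | Planimetric Discrepancy (1st Component), Std. Dev. | Planimetric Discrepancy (2nd Component), Mean | Planimetric Discrepancy (2nd Component), Std. Dev. |
|---|---|---|---|---|---|---|---|
| B-1 (UGV) | B-2 (PhenoRover) | Two field boundaries and 44 plant rows | 0.053 | 0.013 | 0.008 | 0.050 | 0.038 |
| B-1 (UGV) | B-3 (UAV) | Two field boundaries and 44 plant rows | 0.055 | −0.014 | 0.008 | 0.080 | 0.039 |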

Table 10.

Plant counting accuracy using the B-1 dataset showing the 31 plant rows where ground truth is available.

| Row ID | Estimation | Ground Truth | Error (%) | Row ID | Estimation | Ground Truth | Error (%) |
|---|---|---|---|---|---|---|---|
| 0 | 69 | 83 | 16.9 | 16 * | 77 | 79 | 2.5 |
| 1 | 74 | 88 | 15.9 | 17 | 79 | 85 | 7.1 |
| 2 | 77 | 86 | 10.5 | 18 | 76 | 83 | 8.4 |
| 3 * | 81 | 89 | 9.0 | 19 | 79 | 85 | 7.1 |
| 4 * | 84 | 84 | 0.0 | 20 * | 80 | 85 | 5.9 |
| 5 | 69 | 79 | 12.7 | 21 * | 76 | 80 | 5.0 |
| 6 * | 75 | 78 | 3.8 | 22 | 79 | 85 | 7.1 |
| 7 * | 73 | 82 | 11.0 | 23 | 68 | 83 | 18.1 |
| 8 | 75 | 84 | 10.7 | 24 | 72 | 87 | 17.2 |
| 9 | 66 | 77 | 14.3 | 25 | 77 | 82 | 6.1 |
| 10 * | 72 | 80 | 10.0 | 26 * | 75 | 82 | 8.5 |
| 11 * | 78 | 85 | 8.2 | 27 * | 72 | 80 | 10.0 |
| 12 | 73 | 87 | 16.1 | 28 | 76 | 85 | 10.6 |
| 13 | 75 | 78 | 3.8 | 37 | 62 | 76 | 18.4 |
| 14 | 74 | 83 | 10.8 | 43 | 64 | 78 | 17.9 |
| 15 * | 74 | 82 | 9.8 | | | Average | 10.1 |
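
The error column in Table 10 is consistent with a relative counting error, |estimation − ground truth| / ground truth × 100. The source does not state this formula, so it is treated here as an assumption. The following minimal sketch recomputes the per-row errors and their average from the tabulated counts; the names `ROWS` and `percent_error` are illustrative only.

```python
# (estimated count, ground-truth count) keyed by row ID, copied from Table 10.
ROWS = {
    0: (69, 83), 1: (74, 88), 2: (77, 86), 3: (81, 89), 4: (84, 84),
    5: (69, 79), 6: (75, 78), 7: (73, 82), 8: (75, 84), 9: (66, 77),
    10: (72, 80), 11: (78, 85), 12: (73, 87), 13: (75, 78), 14: (74, 83),
    15: (74, 82), 16: (77, 79), 17: (79, 85), 18: (76, 83), 19: (79, 85),
    20: (80, 85), 21: (76, 80), 22: (79, 85), 23: (68, 83), 24: (72, 87),
    25: (77, 82), 26: (75, 82), 27: (72, 80), 28: (76, 85), 37: (62, 76),
    43: (64, 78),
}


def percent_error(estimated: int, truth: int) -> float:
    """Relative counting error in percent (assumed definition, see lead-in)."""
    return abs(estimated - truth) / truth * 100.0


# Per-row error and the unweighted mean over all rows with ground truth.
errors = {row: percent_error(est, gt) for row, (est, gt) in ROWS.items()}
average_error = sum(errors.values()) / len(errors)

for row, err in errors.items():
    print(f"row {row:2d}: {err:4.1f}%")
print(f"{len(errors)} rows, average error = {average_error:.1f}%")
```

The average is an unweighted mean of the per-row percentages. A count-weighted alternative (total missed plants over total ground-truth plants) would give a slightly different figure. Every tabulated estimate is at or below the ground truth, so the absolute value only affects the sign convention.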