1. Introduction

The process of spectral unmixing (SU) aims at providing accurate sub-pixel information on a hyperspectral scene by decomposing the spectral signature associated with an image element into signals typically belonging to macroscopically pure materials, or endmembers. The contribution of a given material to the spectrum of an image element is a fractional quantity, usually named abundance. Unmixing is applied regularly across a wide range of research fields, from classification and target detection to generic denoising and dimensionality reduction techniques [1,2]. Usually, the full process of spectral unmixing includes the following main steps, one of which is optional:

Estimation of the number of materials present in the scene.

Dimensionality reduction, as an optional step carried out by removing non-relevant spectral ranges or projecting the data onto a new parameter space, which can be defined also based on results from the previous step.

Endmember extraction, in which the spectra related to materials present in the scene, often referred to as endmembers, are estimated.

Abundance estimation, in which the fractional coverage of each pixel is estimated in terms of the pure materials present on ground.

It is not uncommon to refer to the whole process as unsupervised or supervised spectral unmixing when the endmembers must be estimated or are known in advance (reducing the problem to abundance estimation), respectively [3]. Spectral unmixing received an increase in attention after Keshava and Mustard’s seminal overview [4]. In 2012, a comprehensive review of the topic, including an in-depth analysis of most state-of-the-art algorithms on dimensionality reduction, endmember extraction, and abundance estimation, was published by Bioucas-Dias et al. [1].

Researchers usually have a limited number of annotated hyperspectral datasets to validate their algorithms, as state-of-the-art data with high spatial resolution can only be acquired by airborne instruments, and spaceborne imaging missions have mostly hosted experimental instruments until recent years. As pointed out by Zhu et al. [5], hyperspectral datasets with associated ground truth at sub-pixel level, suitable for the validation of unmixing algorithms, would be of great benefit to the community. The datasets commonly referred to as Urban, Jasper Ridge and Samson [5] are often used to assess dimensionality estimation, endmember extraction and abundance estimation methods. Nevertheless, these do not have an associated ground truth: no measurement was carried out in situ, the spectral mixtures are not validated for any pixel, and the images contain spectral signatures belonging to several materials beyond the few macro-classes defined in [5]. Therefore, detected materials and their degree of mixture are usually matched against results obtained by other researchers applying state-of-the-art algorithms [6].

In this paper, we introduce the DLR HyperSpectral Unmixing (DLR HySU) benchmark dataset, which includes airborne and ground-based measurements of synthetic reference targets of different materials and sizes. The dataset allows a separate assessment of the main spectral unmixing steps, including dimensionality estimation, endmember extraction, and abundance estimation. The dataset is open and available online [7]. Results of popular state-of-the-art algorithms are assessed on a HySpex hyperspectral image acquired over the DLR Oberpfaffenhofen premises as follows.

In a typical processing pipeline, the first step is to define the number of materials present in a dataset. If unknown, this can be derived by dimensionality estimation algorithms. In the presented framework, the number of materials is known for selected subsets, as the targets were deployed in a field of rather uniform and short grass, without patches of bare soil or particularly stressed vegetation, and additional materials in the image can be easily masked out. Subsequently, the spectra related to pure materials are derived by endmember extraction algorithms: the dataset allows testing methods working both with and without the pure pixel assumption by restricting the analysis to sets of targets of the relevant size. The reference spectra used to assess the performance of the methods are extracted directly from the image, since the presence of pure pixels is ensured by the large ratio between the size of the largest targets and the ground sampling distance (GSD). The selected spectra are compared to the in-situ measurements to verify that they give a physically meaningful representation of the real reflectance of the materials. Finally, abundance estimation methods estimate the fraction of each image element belonging to each of the materials related to the identified endmembers. As all targets have been accurately measured, absolute errors can be computed as the difference between the integral of the abundances in a region of interest and the real area of the targets. Additional reported experiments jointly solve the endmember extraction and abundance estimation steps. The dataset also offers the possibility to test target detection algorithms, as additional small targets have been scattered in the area of interest, with the relevant details made available for this purpose.

Spectral unmixing has been modeled in the literature as either a linear or non-linear process. In linear spectral unmixing, the contributions forming the spectrum related to a given image element are directly proportional to the fractions of the target occupied by different materials. Therefore, the assumption is that all solar light reaching the target is either absorbed or reflected to or away from the sensor, after taking into account interactions with the atmosphere. In non-linear spectral unmixing, more complex scattering interactions are considered, in which rays of light can bounce from neighboring image elements or undergo multiple scattering within the same image element before being reflected towards the sensor, especially for targets with high refraction such as water [8], targets belonging to composite or multi-layered structures, or wherever direct illumination sources are absent, such as shadowed areas [9]. In the literature, the simpler linear model is often adopted in practical applications, since it represents a reliable first approximation of the actual material interactions and generally provides valuable results [10]. In this paper, we consider the linear model only, as the whole area of interest is flat and far away from high-rise objects and no refractive materials are present. We thus model the spectrum of a pixel $\mathbf{x}$ with $m$ bands as a linear combination of $n$ reference spectra $\mathbf{S} = [\mathbf{s}_1, \ldots, \mathbf{s}_n]$, weighted by $n$ scalar fractional abundances $\mathbf{a} = [a_1, \ldots, a_n]^T$, plus a residual vector $\mathbf{r}$ containing the portion of the signal which cannot be represented in terms of the basis vectors of choice:

$$\mathbf{x} = \sum_{i=1}^{n} a_i \mathbf{s}_i + \mathbf{r} = \mathbf{S}\mathbf{a} + \mathbf{r} \qquad (1)$$

Here, $\mathbf{r}$ collects several quantities which are hard to separate, such as noise, over- or under-estimation of atmospheric interaction, missing materials in $\mathbf{S}$, variations in the spectra of a single material within the scene, wrong estimation of the abundances $\mathbf{a}$, and non-linear effects [2].
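As a minimal illustration of the linear mixing model, the following Python sketch builds one synthetic mixed pixel and recovers its abundances by plain least squares. All sizes, spectra and noise levels are made-up illustrative values, and the variable names (`S`, `a`, `r`, `x`) are assumptions chosen for this example rather than data from the benchmark.

```python
import numpy as np

rng = np.random.default_rng(0)

m, n = 50, 3                          # bands and endmembers (illustrative sizes)
S = rng.uniform(0.0, 1.0, (m, n))     # columns are hypothetical reference spectra
a = np.array([0.6, 0.3, 0.1])         # fractional abundances, non-negative, sum to one
r = rng.normal(0.0, 0.005, m)         # residual: noise and unmodelled effects

x = S @ a + r                         # linear mixing model for one pixel

# With S known, an unconstrained least-squares fit recovers a up to the residual.
a_hat, *_ = np.linalg.lstsq(S, x, rcond=None)
fit_residual = x - S @ a_hat
```

In this toy setting the fitted residual can only be smaller than or equal to the simulated one, since least squares minimizes the residual norm over all abundance vectors.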

The paper is structured as follows. Section 2 introduces the DLR HySU benchmark, including target deployment, airborne HySpex/3K imaging and ground-based SVC reflectance measurements. Section 3, Section 4 and Section 5 report an assessment of dimensionality estimation, endmember extraction and abundance estimation algorithms, respectively. Section 6 contains additional experiments on single-step unmixing and hidden target detection. We conclude in Section 7 and report details on the targets deployed for the dataset in Appendix A.

3. Dimensionality Estimation

Dimensionality estimation is often carried out before identifying the materials within a scene using endmember extraction algorithms, whenever their number is not known a priori. The output of dimensionality estimation is an integer representing the estimated number of dimensions, which is usually considered equal to the number of different materials within the scene. Nevertheless, the use of this family of algorithms goes beyond unmixing workflows, as the estimated number of dimensions can be used to drive dimensionality reduction steps, for example by selecting the number of synthetic variables to be kept after a rotation of the parameter space through Principal Components Analysis (PCA) or Minimum Noise Fraction (MNF). This is due to the high dimensionality of hyperspectral data, with an image containing up to hundreds of narrow spectral bands, often strongly correlated, especially within limited spectral ranges.

In this section, we report the results of two popular methods on different configurations of the DLR HySU dataset: Hyperspectral Signal Identification by Minimum Error (HySime) [20] and the Harsanyi–Farrand–Chang (HFC) method [21], as implemented in [22,23], respectively. Both algorithms aim at identifying the real informational content of a scene using eigenvalue analysis after projecting the data onto a suitable space. A more complete overview of the topic is reported in [24]. HySime is one of the most popular choices in the literature due to its satisfactory performance, the limited computational resources required, and the absence of additional input parameters. The noise statistics needed to run the algorithm can be estimated directly from the data in a first step. While HySime is based on least squares error minimization, HFC is formulated as a detection problem and aims at separating noise and signal eigenvalues [21]. An additional parameter $t$ representing the false alarm rate must be given as input.
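The eigenvalue test behind an HFC-style estimate can be sketched in a few lines of Python. This is a simplified illustration and not the implementation in [23]: it compares the sorted eigenvalues of the sample correlation and covariance matrices and uses a commonly quoted variance approximation, which is an assumption here. The function name `hfc_count`, the synthetic scene and the false alarm rates are hypothetical choices for the example.

```python
import numpy as np
from statistics import NormalDist

def hfc_count(pixels, false_alarm_rate):
    """pixels: (N, bands) array. Returns the number of eigenvalue pairs flagged as signal."""
    n_pixels = pixels.shape[0]
    corr = pixels.T @ pixels / n_pixels              # sample correlation (no mean removal)
    cov = np.cov(pixels, rowvar=False, bias=True)    # sample covariance (mean removed)
    ev_corr = np.sort(np.linalg.eigvalsh(corr))[::-1]
    ev_cov = np.sort(np.linalg.eigvalsh(cov))[::-1]
    # Assumed approximation of the standard deviation of each eigenvalue difference.
    sigma = np.sqrt(2.0 * (ev_corr**2 + ev_cov**2) / n_pixels)
    # One-sided threshold so that a pure-noise difference exceeds it with probability t.
    z = NormalDist().inv_cdf(1.0 - false_alarm_rate)
    return int(np.sum(ev_corr - ev_cov > z * sigma))

# Synthetic scene: 3 hypothetical endmembers, 20 bands, 2000 mixed pixels.
rng = np.random.default_rng(1)
endmembers = rng.uniform(0.1, 1.0, (3, 20))
abundances = rng.dirichlet(np.ones(3), size=2000)
scene = abundances @ endmembers + rng.normal(0.0, 0.01, (2000, 20))

counts = {t: hfc_count(scene, t) for t in (1e-3, 1e-5)}   # illustrative rates
```

A smaller false alarm rate raises the threshold, so the estimated count can only stay equal or decrease as $t$ is lowered.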

We estimate the dimensionality of the data on four out of the five subsets of the DLR HySU dataset described in Section 2.2.2. We test on different subsets because the estimated dimensionality is expected to increase when including additional materials with respect to the ones considered in Figure 5. Therefore, it is of interest to also consider the subsets where the number of materials $K$ is larger but its exact value is unknown. We leave out the Small Targets subset to satisfy the pigeon-hole principle on which these algorithms are based, which associates each dimension to a target material represented by a pure spectrum [1].

The results reported in Table 1 show that HySime clearly overestimates the real dimensionality of the three smaller subsets, with an increasing error as the dataset becomes smaller. This is in line with previous experiments finding that HySime strongly overestimates the number of endmembers when applied to small datasets, such as the Samson dataset [25]: the window in which the algorithm estimates the noise must be large enough [26], otherwise the analysis can be driven by noise rather than signal [27]. As the size of the image increases, HySime results stabilize and provide a meaningful result for the full subset. We report HFC performance when setting the false alarm rate $t$ to three values commonly used in the literature [20,25,28]. On the DLR HySU dataset, HFC clearly outperforms HySime on the three smaller subsets, with a rather stable estimation, as it is in principle not affected by the total size of the image [26]. On the other hand, HFC is likely overestimating $K$ on the full dataset. Despite being limited, the assessment presented in this section reinforces the idea that the most suitable algorithm for dimensionality estimation should be selected according to the properties of the data at hand.

4. Endmember Extraction

Endmember extraction (EE) methods aim at identifying spectra related to materials which are homogeneous at a relevant scale (which depends on the data at hand and the application) in a hyperspectral scene. Algorithms working under the pure pixel assumption try to identify image elements associated with the most representative spectra for all materials contained in the image. On the other hand, EE algorithms working without the pure pixel assumption [29] consider any image element as a mixture of several materials and try to identify endmembers outside of the convex hull encompassing the data. The latter class of algorithms is of particular importance at present, as we are witnessing the first dedicated spaceborne hyperspectral missions such as DESIS [30], usually characterized by a GSD on the order of 30 meters and therefore often yielding images without pure pixels for relevant materials present in a scene.

In this section, we report results of traditional EE methods, both with and without the pure pixel assumption. We consider the following four algorithms with the pure pixel assumption. Vertex Component Analysis (VCA) [22,31] iteratively determines endmembers as extreme pixels on the convex hull and performs orthogonal subspace projection (OSP) with respect to the determined endmembers, taking into account noise influences in the process. N-FINDR [32], here used as implemented in [23], initializes the endmembers as random pixels in the variable space and iteratively substitutes them with their spectral neighbors, keeping the final set spanning the maximum volume. Automatic Target Generation Process (ATGP) [33] is also based on OSP, with its main difference with respect to VCA lying in the initialization step [34]. ATGP has been tested in this paper with the Python implementation in [35]. Finally, Pixel Purity Index (PPI) [36], which was made popular by its availability in software packages such as ENVI, selects extreme pixels in the data cloud projected in the variable space by drawing a set of random lines and choosing the pixels marked most often as extremes. As several parameters, including the number of lines, must be set in advance, PPI has seen relatively less use in recent years. In this paper, we applied PPI with default parameters in two different software packages (in Python [35] and MATLAB [23]), obtaining similar results.

In the group of algorithms without the pure pixel assumption, we tested Simplex Identification via Split Augmented Lagrangian (SISAL) [37] as implemented in [22] and Non-negative Matrix Factorization (NMF) [38] as implemented in [39], both aiming at identifying the minimum volume simplex containing the hyperspectral vectors. In the following experiments, the Itakura–Saito distance [40] has been used as distortion measure for NMF, as it yielded the best performance. In an additional experiment, we applied the k-means clustering algorithm [41] to the subsets to highlight materials which could be confused in the scenario.
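To make the orthogonal subspace projection idea behind ATGP more concrete, a bare-bones sketch is given below. It is not the implementation in [35]: it starts from the pixel with the largest norm, then repeatedly projects all pixels onto the orthogonal complement of the targets found so far and picks the pixel with the largest remaining energy. The function name `atgp` and the noise-free synthetic data are assumptions made for illustration.

```python
import numpy as np

def atgp(pixels, n_endmembers):
    """pixels: (N, bands) array. Returns the indices of the selected pixels."""
    # Initial target: the pixel with the largest energy.
    indices = [int(np.argmax(np.sum(pixels**2, axis=1)))]
    for _ in range(n_endmembers - 1):
        U = pixels[indices].T                                   # (bands, targets found)
        projector = np.eye(U.shape[0]) - U @ np.linalg.pinv(U)  # onto complement of span(U)
        residual = pixels @ projector                           # projector is symmetric
        indices.append(int(np.argmax(np.sum(residual**2, axis=1))))
    return indices

# Synthetic test: 3 pure pixels (rows 0-2) followed by 200 noise-free mixtures.
rng = np.random.default_rng(2)
pure = rng.uniform(0.1, 1.0, (3, 20))
mixtures = rng.dirichlet(np.ones(3), size=200) @ pure
data = np.vstack([pure, mixtures])

selected = atgp(data, 3)
```

In this idealized case the three pure pixels are recovered because the projected norm is a convex function and therefore peaks at a vertex of the data simplex. With noise or missing pure pixels the selected spectra may instead be mixed or outlying pixels.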

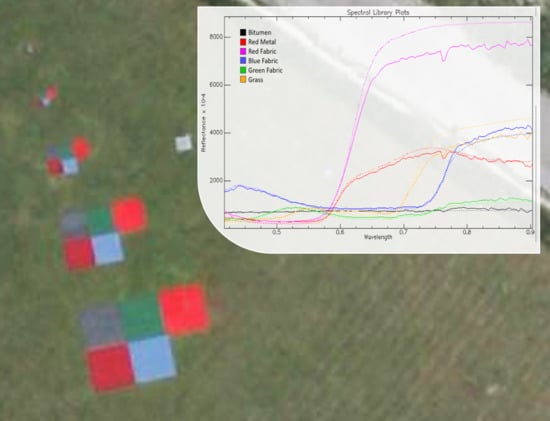

The experiments have been carried out on all subsets containing, as far as we have been able to verify, only the six relevant materials reported in Figure 5. These are the subsets Large Targets and All Targets Masked (containing pure and mixed pixels) and the subset Small Targets (containing only mixed pixels, except for most grass image elements). The performance of each algorithm is evaluated by associating each material in the HySpex spectral library (i.e., the solid lines in Figure 5) with the extracted endmember yielding the minimum spectral angle [42]. Please note that a single extracted endmember could be the nearest neighbor to two different materials in the library: this does not bias the results of the analysis, as both spectral angles cannot be simultaneously small.
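The matching step described above can be sketched as follows. The helper names `spectral_angle` and `match_library`, as well as the toy three-band spectra, are hypothetical and only serve to show the mechanics of assigning each library spectrum its closest extracted endmember.

```python
import numpy as np

def spectral_angle(u, v):
    """Angle in radians between two spectra, insensitive to their scaling."""
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

def match_library(library, extracted):
    """For each library material, return (index, angle) of the closest extracted endmember."""
    matches = {}
    for name, reference in library.items():
        angles = [spectral_angle(reference, e) for e in extracted]
        best = int(np.argmin(angles))
        matches[name] = (best, angles[best])
    return matches

# Toy example with made-up three-band spectra.
library = {"material_a": np.array([1.0, 0.0, 0.0]),
           "material_b": np.array([0.0, 1.0, 0.0])}
extracted = [np.array([0.0, 2.0, 0.0]), np.array([1.0, 0.1, 0.0])]

matches = match_library(library, extracted)
```

Because the angle ignores vector length, the second library entry is matched with zero angle to an extracted spectrum that is twice as bright.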

All algorithms with the pure pixel assumption were tested on the Large Targets subset and their performance is reported in Figure 6a. Here, results from the better of the two algorithms based on minimum volume analysis, namely SISAL, are also reported. VCA and N-FINDR obtain the best results, while SISAL performs poorly, as does PPI with both the Python and MATLAB implementations (both give similar results, so we only report the former). For VCA and N-FINDR we have carried out a few trials to confirm that slight differences in the output would not affect the analysis, and show only a representative run. The results obtained by dictionary learning (DL), also shown in the plot, are competitive, but we defer their discussion to Section 6.1, while the performance of k-means is reported in Section 4.1. The locations of the retrieved endmembers are shown for the three best algorithms, VCA, N-FINDR and ATGP, in Figure 7. As hinted in Figure 6a, ATGP misses the green fabric endmember, and the largest distortion, for bitumen, arises because the endmember chosen is a mixed pixel with the adjacent red metal sheets.

Results on the Small Targets subset are reported in Figure 6b. As expected, algorithms operating without the pure pixel assumption yield the best performance, with slightly better results obtained by SISAL. Improvements are however not substantial with respect to VCA and N-FINDR. All algorithms struggle to identify the bitumen material, which is difficult to retrieve in a mixed setting due to the absence of clear absorption features in the analyzed spectral range. Furthermore, green fabric is again a difficult material to find, probably due to some spectral features in common with the dominating grass spectra. Figure 8 shows the iterations of the SISAL algorithm, produced by its implementation in [22], with the evolution of the endmembers in a squashed two-dimensional representation.

Results on the subset containing all targets with masked background are reported in Figure 9. If the ideal input number of endmembers $k = 6$ is used, as in Figure 9a, VCA obtains the best results, followed by N-FINDR and ATGP, which have problems with the bitumen and green fabric endmembers, respectively (see Figure 10). In Figure 9b we report results obtained by setting the input number of endmembers $k$ to the value between 6 and 10 yielding the best results. In this case, additional endmembers which are not matched to any of the six spectra in the HySpex reference library in Figure 5 (solid lines) are simply ignored. As expected, distortions are reduced except for k-means (see the dedicated Section 4.1), and VCA again yields the best performance, followed by N-FINDR. Again, the results of DL are discussed in Section 6.1. Despite the higher number of spectra extracted, ATGP does not manage to extract the bitumen spectrum accurately. This is also shown in Figure 11, reporting the locations of the extracted endmembers, where some additional materials detected by ATGP are still located in mixed image elements.

4.1. Clustering Experiment

To assess the separability of the different materials, we report an additional experiment involving k-means [41] as a representative of clustering algorithms. To use k-means for endmember extraction, we selected the cluster centroids output by the algorithm. K-means is not traditionally applied directly to this problem (with some exceptions, e.g., [43]): on the one hand, it does not locate pure pixels (the centroids usually do not exactly match any image element); on the other hand, it is not able to locate endmembers outside of the convex hull encompassing the data for a highly mixed scenario. Therefore, unsupervised clustering is rather used as a pre-processing step for other EE algorithms [44] and, to avoid confusion, k-means results are presented separately in this section. We ran the algorithm using $k = 6$ as input number of clusters on the Large Targets and All Targets Masked subsets. On the latter we also used the value of $k$ yielding the best results in terms of spectral angle when $k$ is allowed to vary between 6 and 10. Results reported in Figure 12 show a partial separation of the targets, with the bitumen and green fabric endmembers largely merged in a single cluster and the mixed pixels between red fabric and grass assigned to a separate cluster. As the number of clusters grows, a larger number of mixed pixels form additional clusters. This explains the marginal improvement when increasing the value of $k$ in Figure 9 in terms of spectral angle between cluster centroids and endmembers. Furthermore, it confirms that k-means is not reliable as a stand-alone endmember extraction method despite comparable results to traditional algorithms on the All Targets Masked subset.
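Using cluster centroids as endmember candidates can be illustrated with a plain Lloyd-style k-means in NumPy. This is a generic sketch rather than the clustering code used for the experiments, and the function name `kmeans_centroids`, the default parameters and the two-dimensional toy data are assumptions for the example.

```python
import numpy as np

def kmeans_centroids(pixels, k, n_iter=50, seed=0):
    """Plain Lloyd iterations; returns (centroids, labels) for an (N, bands) array."""
    rng = np.random.default_rng(seed)
    centroids = pixels[rng.choice(len(pixels), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        dist2 = ((pixels[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dist2.argmin(axis=1)
        for j in range(k):
            members = pixels[labels == j]
            if len(members):                  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    # Final assignment, consistent with the returned centroids.
    dist2 = ((pixels[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return centroids, dist2.argmin(axis=1)

# Two well-separated toy "materials" in a two-band space.
rng = np.random.default_rng(0)
blob_a = rng.normal(0.0, 0.1, (50, 2))
blob_b = rng.normal(10.0, 0.1, (50, 2))
toy_pixels = np.vstack([blob_a, blob_b])

centroids, labels = kmeans_centroids(toy_pixels, k=2)
```

Each centroid is the mean of its cluster, so it generally coincides with no actual pixel and always lies inside the convex hull of the data, which is the limitation noted in the text.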

5. Abundance Estimation

The last step in the spectral unmixing process is abundance estimation. This task consists of estimating the individual material abundances in each pixel given a library of spectral endmembers. As stated in Section 1, we adopt solely the linear mixture model (cf. Equation (1)). Please note that the abundances are not necessarily related to the relative areas occupied by the materials (for a discussion, see [1] and references therein). However, this assumption is made here along with linear mixing, and the measured target areas are accordingly used as ground truth to evaluate the accuracy of abundance estimation. This simplified approach will be validated a posteriori by the obtained results. In the following we report on the application of widely used abundance estimation algorithms to the DLR HySU benchmark dataset. Unless otherwise stated, the reference spectral library extracted from the HySpex image (cf. solid lines in Figure 5) is used as input to the algorithms. This choice enables the evaluation of the abundance estimation process itself, decoupled from any uncertainties introduced by endmember extraction. The robustness of our results against the choice of the spectral library is nevertheless investigated at the end of the section.

Four traditional algorithms commonly used for abundance estimation were evaluated: unconstrained least squares (UCLS), non-negative least squares (NNLS), fully constrained least squares (FCLS) [45] and least squares with least absolute shrinkage and selection operator (LASSO) [46]. All mentioned algorithms are based on least squares minimization, but the constraints applied on the abundances are distinct: UCLS finds the plain least squares solution without any constraints; NNLS and FCLS both require abundances to be non-negative (the so-called non-negativity constraint), with FCLS requiring in addition that the abundances sum to one (the so-called sum-to-one constraint); the version of LASSO used in this work implements the abundance non-negativity constraint and an upper limit $\lambda$ on the $\ell_1$-norm of the abundance vector, which induces sparsity. Although other methods exist in the literature, the mentioned algorithms, which also cover sparse analysis, are among the most relevant techniques in use within the hyperspectral community for linear unmixing. The results reported here are based on the MATLAB implementation of UCLS, NNLS and FCLS [23] and on the Python implementation of LASSO in the SPAMS toolbox [47,48,49].
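The difference between the constraint sets can be made concrete with a small NumPy sketch. It does not reproduce the implementations in [23] or the SPAMS toolbox: the constrained problems are solved here with a generic projected-gradient loop, which is a substitute technique chosen only because it needs no extra dependencies. All function names and the synthetic library are assumptions.

```python
import numpy as np

def ucls(S, x):
    """Unconstrained least squares."""
    return np.linalg.lstsq(S, x, rcond=None)[0]

def project_simplex(v):
    """Euclidean projection onto {a >= 0, sum(a) = 1}."""
    u = np.sort(v)[::-1]
    shifted = np.cumsum(u) - 1.0
    k = np.arange(1, len(v) + 1)
    rho = np.nonzero(u - shifted / k > 0)[0][-1]
    return np.maximum(v - shifted[rho] / (rho + 1), 0.0)

def projected_gradient(S, x, project, n_iter=3000):
    """Minimise ||x - S a||^2 over the set onto which `project` maps."""
    step = 1.0 / np.linalg.norm(S, 2) ** 2        # inverse Lipschitz constant of the gradient
    a = project(np.full(S.shape[1], 1.0 / S.shape[1]))
    for _ in range(n_iter):
        a = project(a - step * (S.T @ (S @ a - x)))
    return a

def nnls(S, x):
    """Least squares with the non-negativity constraint."""
    return projected_gradient(S, x, lambda v: np.maximum(v, 0.0))

def fcls(S, x):
    """Least squares with non-negativity and sum-to-one constraints."""
    return projected_gradient(S, x, project_simplex)

rng = np.random.default_rng(0)
S = rng.uniform(0.0, 1.0, (30, 3))      # hypothetical library: 30 bands, 3 endmembers
a_true = np.array([0.7, 0.3, 0.0])
x = S @ a_true                           # noise-free mixed pixel

a_ucls, a_nnls, a_fcls = ucls(S, x), nnls(S, x), fcls(S, x)
```

For this noise-free pixel all three estimates coincide with the true abundances, since the unconstrained optimum already satisfies both constraints. Differences only appear once noise or library mismatch pushes the unconstrained solution outside the feasible set.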

The abundance maps obtained with UCLS, NNLS, FCLS and LASSO (with the $\ell_1$-norm upper limit motivated later in this section) are shown in Figure 13. The six abundances (five materials plus vegetation background) for each algorithm are displayed in single RGB composites, where red metal and red fabric are associated with the red channel, green fabric and grass (the latter rescaled from 0 to 0.3 for visualization purposes) with the green channel, and blue fabric and bitumen with the blue channel. Since all tested algorithms perform unmixing for each pixel independently, the results in Figure 13 only had to be obtained once for the full subset. This is different from the case of the endmember extraction investigated in Section 4, where algorithms were tested on several subsets of the benchmark. Overall, the four unmixing techniques can derive abundance maps where most targets are well-defined with relatively crisp edges, little confusion between the materials and few false positives across the subset. It is clear that pure or close to pure pixels are identified in the 3 and 2 m targets, while smaller targets correspond to highly mixed pixels, as expected. The absence of visible aliasing effects in Figure 13 is due to a realistic abundance estimation of mixed pixels, yielding smooth transitions on boundaries between different materials. Figure 14 reports a detail of NNLS abundances for the largest targets, offering a closer look at what happens in pixels with a high degree of mixture. The positive qualitative outcome of the abundance estimation step attests to the accuracy of the unmixing algorithms as well as the quality of the reference spectral library. Two additional features in Figure 13 are worth pointing out. First, in all cases a large abundance of bitumen is found to the right of the 1 m targets in the position of the SVC white panel (cf. position I in Figure 1a), as both bitumen and the white panel exhibit a similarly flat spectrum. Second, the vegetation background has been clearly singled out by unmixing with one endmember only, despite the slight spectral variability of grass across the football field.

Moving to a quantitative analysis, the obtained abundances can be directly associated with the area of each target on the ground. The approximate areas of the deployed targets are 9, 4, 1, 0.25 and 0.0625 m$^2$, corresponding approximately to 18.4, 8.2, 2.0, 0.5, and 0.1 pixels in the HySpex processed dataset of 0.7 m spatial resolution, with the exact areas measured on site (cf. Appendix A) used for validation. The estimated area of a target $t$, denoted $\hat{A}_t$, is derived from the abundance maps in Figure 13 as the integral of all fractional abundances of the target material inside a region $R_t$ surrounding the target. This implicitly assumes the correspondence between abundances and fractional area occupied by the material inside the pixel. The region $R_t$ is defined by selecting the central pixel of each target and expanding the region until the abundance for the material of interest drops to a fixed stopping value. The regions for the two smaller targets (0.25 and 0.5 m) have been additionally dilated using a disc with radius 1 as structuring element. The definition of $R_t$ was designed to ensure that all pixels containing the target signal (both pure and mixed) are included and that spurious abundances far from the target are simultaneously neglected. The regions $R_t$ are depicted in Figure 15 with a brightness proportional to the number of different regions assigned to each pixel, and as a consequence to the potential degree of mixture. The estimated areas $\hat{A}_t$ for all targets and unmixing algorithms are reported in Table 2 in units of pixels. Using the measured areas $A_t$ in Table A2 as ground truth, the unsigned relative error $|\hat{A}_t - A_t| / A_t$ is plotted in Figure 16 for each target size, material and algorithm.
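The area estimation procedure can be sketched on a toy abundance map as follows. The region growing below uses 4-connectivity and a stopping threshold of 0.05, both of which are assumptions for illustration, and the function names and the 5 x 5 map are hypothetical. The first lines also reproduce the conversion from ground area to pixel units for a 0.7 m GSD.

```python
import numpy as np
from collections import deque

# Ground area to pixel units: a 9 m^2 target at 0.7 m GSD covers about 18.4 pixels.
gsd_m = 0.7
largest_target_px = 9.0 / gsd_m**2

def grow_region(abundance, seed, threshold):
    """4-connected region grown from `seed` over pixels with abundance above `threshold`."""
    rows, cols = abundance.shape
    region = np.zeros(abundance.shape, dtype=bool)
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        if not (0 <= r < rows and 0 <= c < cols) or region[r, c]:
            continue
        if abundance[r, c] <= threshold:
            continue
        region[r, c] = True
        queue.extend([(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)])
    return region

def estimated_area(abundance, region):
    """Sum of fractional abundances inside the region, in pixel units."""
    return float(abundance[region].sum())

def relative_error(estimated, measured):
    """Unsigned relative error of the estimated area."""
    return abs(estimated - measured) / measured

# Toy abundance map for one material.
abundance = np.zeros((5, 5))
abundance[1:3, 1:3] = 1.0     # pure pixels
abundance[1, 3] = 0.5         # mixed pixel on the target border
abundance[4, 4] = 0.2         # spurious abundance far from the target

region = grow_region(abundance, seed=(1, 1), threshold=0.05)
area_hat = estimated_area(abundance, region)
error = relative_error(area_hat, measured=4.4)   # 4.4 px is a made-up ground truth
```

The spurious pixel in the corner is not connected to the target and is therefore left out of the integral, which is the purpose of restricting the sum to a region around each target.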

The area estimation results in Table 2 and Figure 16 reveal several interesting trends. It is remarkable that the areas of the targets on the ground can be reconstructed down to a few percent accuracy using hyperspectral data only, without obvious biases towards general over- or under-estimation of the areas. This lends credit to our initial assumption linking abundances to relative areas, and it constitutes a non-trivial, independent test of the linear mixing model. Overall, the linear model offers a good description of the DLR HySU benchmark. The area estimation accuracy is however not universally high and depends strongly on the unmixing algorithm of choice, target material and target size. These effects are now discussed in turn.

All four tested algorithms lead to similar area estimation results for the DLR HySU benchmark targets. However, the NNLS and LASSO algorithms give the most consistent estimates across the different target materials and sizes. The mean area estimation error for these algorithms ranges from ∼4% for the 3 m targets up to ∼20% for the 0.25 m targets. FCLS actually presents better mean accuracy for the 3, 2, 1 and 0.5 m targets, but it evidently fails for the 0.25 m green fabric target. The combination of the abundance non-negativity and sum-to-one constraints in FCLS appears to be disadvantageous for unmixing small sub-pixel targets resembling the background vegetation. UCLS obtains results in line with the other algorithms for the larger targets, but it breaks down for the 0.5 m green fabric target and all 0.25 m targets. The lack of any abundance constraint seems to make UCLS particularly prone to noise, therefore delivering less meaningful abundances, in some cases negative (see green fabric targets of 0.5 and 0.25 m in Table 2).

We have further tested LASSO with different upper limits $\lambda$ on the $\ell_1$-norm of the abundance vector. As illustrated in Figure 17, results are similar or identical for sufficiently large $\lambda$, but degrade clearly for small $\lambda$. This is likely a consequence of having a spectral library collected with endmembers from the hyperspectral image itself. For the corresponding pixels, the abundance vector is a single-entry vector with $\|\mathbf{a}\|_1 = 1$, so any $\lambda < 1$ will not allow such a solution. We therefore show the main LASSO abundance estimation results for a value of $\lambda$ that admits such solutions. It is interesting to notice that the results of NNLS and LASSO are identical for all 0.5 and 0.25 m targets except the 0.5 m red fabric target, cf. Table 2. This happens because NNLS and LASSO with upper limit $\lambda$ solve exactly the same least squares minimization problem when the optimal abundance vector is such that $\|\mathbf{a}\|_1 \le \lambda$. Both algorithms require abundance non-negativity and, in the case $\|\mathbf{a}\|_1 \le \lambda$, the LASSO upper limit becomes irrelevant, as illustrated in Figure 18. The right panel of the figure identifies the pixels for which NNLS found optimal abundance vectors satisfying this condition, clearly confirming that for all 0.5 and 0.25 m targets except the 0.5 m red fabric target both algorithms are expected to provide exactly the same solution. Naturally, the same statement applies to LASSO with any larger upper limit.
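The relation between the two estimators can be written compactly. The notation below (pixel spectrum $\mathbf{x}$, library $\mathbf{S}$, abundance vector $\mathbf{a}$, upper limit $\lambda$) is assumed for this sketch and may differ from the symbols used elsewhere in the paper:

$$
\hat{\mathbf{a}}_{\mathrm{NNLS}} = \arg\min_{\mathbf{a} \ge 0} \|\mathbf{x} - \mathbf{S}\mathbf{a}\|_2^2,
\qquad
\hat{\mathbf{a}}_{\mathrm{LASSO}} = \arg\min_{\mathbf{a} \ge 0,\ \|\mathbf{a}\|_1 \le \lambda} \|\mathbf{x} - \mathbf{S}\mathbf{a}\|_2^2 .
$$

The LASSO feasible set is contained in the NNLS one. Hence, if $\|\hat{\mathbf{a}}_{\mathrm{NNLS}}\|_1 \le \lambda$, the NNLS solution is feasible for LASSO and, being optimal over the larger set, it is also optimal for LASSO. When the minimizer is unique (for instance when $\mathbf{S}$ has full column rank), the two solutions coincide, and the same holds for any larger upper limit $\lambda' \ge \lambda$.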

Trends of the target area error according to material and size are shown in Figure 19a for LASSO. Other unmixing algorithms are qualitatively similar, as can be seen in Table 2, so we focus on LASSO results for discussion. The areas of the blue and red fabric targets are reconstructed with the smallest errors for the 3 m targets and with limited errors for all sizes (cf. Figure 19a). Overall, these are the targets for which the abundance estimation step works best. Bitumen target areas can also be reconstructed accurately for the 3 and 2 m targets, but the error rises to 20–30% for the smaller targets where mixed pixels are present. The low and flat reflectance spectrum of bitumen appears to be easily identifiable for pure or close to pure pixels, while being very difficult to single out in the case of highly mixed pixels. The worst results are obtained for red metal and green fabric, where the area error is already higher for the 3 and 2 m targets and reaches 40–50% for the smaller targets. As stated in Section 2, red metal and green fabric are indeed challenging materials: the former has a rugged surface and a tendency to produce specular reflections, while the latter resembles the background vegetation. This might explain the worse results obtained for the two materials. Finally, we comment on the trend with target size. Apart from a handful of outliers, the general trend observed in Figure 19a shows that the area reconstruction error increases rapidly with decreasing target size. This is intuitively expected, because smaller targets are associated with mixed pixels, contributing to each spectrum a weaker signal which is closer in amplitude to the image noise. Geometrically speaking, the perimeter-to-area ratio of a square target increases as its side length decreases, and so does the relative area reconstruction error. In addition, it is expected that the contrast between the target material and the background vegetation plays a role, but the modeling of the area reconstruction error lies outside the scope of the present work.
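The geometric argument can be made explicit with a short worked example, added here as an illustration. For a square target of side length $d$, the perimeter-to-area ratio is

$$
\frac{P}{A} = \frac{4d}{d^2} = \frac{4}{d},
$$

which for the nominal side lengths of 3, 2, 1, 0.5 and 0.25 m gives approximately 1.33, 2, 4, 8 and 16 m$^{-1}$, respectively. The share of the target signal falling into boundary (mixed) pixels therefore grows as the targets shrink.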

Up to now the spectral library manually extracted from the HySpex image was used as input to the abundance estimation process. The robustness of the obtained results is now tested using instead a spectral library containing the mean SVC acquisitions on the ground, see dashed lines in Figure 5. Figure 19b shows the resulting target area errors for the case of LASSO. The comparison of both panels in Figure 19 leads to interesting conclusions dependent on target material and size. For all target sizes, it is apparent that the results are fairly robust for red metal, red fabric and green fabric, but bitumen and blue fabric display some degree of sensitivity to the spectral library used. There is also a clear dependence on target size. The target area error for the larger targets (3 and 2 m) for all materials degrades from <10% when using the HySpex spectral library to a higher level when using the SVC library (cf. Figure 19b). The degradation is much more severe for the smallest 0.25 m targets, while the results are comparable for the mid-size targets (0.5 and 1 m). Overall, we conclude that the abundance estimation results for resolved targets are robust, which is consistent with the qualitative similarity of the two spectral libraries already apparent from Figure 5. Abundance estimation for sub-pixel targets is sensitive to the spectral library used and better results are obtained when the library is selected directly from the image.

7. Conclusions

This paper introduces the DLR HySU (HyperSpectral Unmixing) benchmark dataset, consisting of a high-resolution airborne image acquired by the HySpex spectrometer in the VNIR range, complemented by high-resolution airborne 3K RGB data and in-situ SVC spectrometer measurements. The area of interest contains synthetic targets of five different materials in five different sizes, deployed on ground in a homogeneous area. The dataset allows testing all main steps of a typical spectral unmixing workflow, including dimensionality estimation, endmember extraction with and without pure pixel assumption, and abundance estimation. Further areas of research which can benefit from the DLR HySU dataset include target detection and denoising. Regarding the former, additional small targets have been scattered in the area of interest and are described in the paper. The latter can use the in-situ collected spectra as reference to verify denoising procedures on single targets, especially for bitumen, which is characterized by a flat spectrum. Despite the relative simplicity of the target arrangement, the described mixing scenarios are characterized more accurately than those currently available in the literature, as the surface area of all targets of different materials is known and no additional materials are present in the scene, enabling a precise assessment of algorithm performance. Future datasets may include additional target materials and more complex mixing patterns in an effort to provide more realistic mixing scenarios.

Testing state-of-the-art algorithms with the DLR HySU benchmark dataset for different steps of the unmixing procedure yielded several interesting results:

The confirmation of overestimation by the most used dimensionality estimation method for imaging spectrometer data, HySime, in non-ideal settings, i.e., when applied to images too small in size with non-zero noise contribution.

The comparison between algorithms working with or without the pure pixel assumption assessed on real data for different targets, suggesting that the latter family of algorithms may perform slightly better at handling complex, highly mixed data. To the best of our knowledge, this is the first time that a comparison between algorithms working with or without the pure pixel assumption is made on real data. In the past, such assessment was made on synthetic images [

1].

The equivalence between the NNLS and the LASSO methods for specific cases.

The effects of enforcing the sum-to-one constraint in FCLS, often used in abundance estimation in the literature, which may introduce severe distortions in the case of image elements with a high degree of mixture. This effect adds to the distortions introduced by FCLS whenever an incomplete spectral library is used [

2].
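The last point in the list above can be illustrated with a toy example. The sketch below is not the implementation used in this work: it assumes a hypothetical two-endmember library over three bands, a pixel containing a third material missing from the library, a simple projected-gradient solver as a stand-in for NNLS, and the common trick of enforcing the sum-to-one constraint approximately by appending a weighted row of ones to the library. All names and the weight `delta` are illustrative assumptions.

```python
import numpy as np


def nnls_projected_gradient(A, y, iters=5000):
    """Minimize ||A x - y||^2 subject to x >= 0 by projected gradient descent."""
    # Step size 1/L, where L is the squared largest singular value of A.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        gradient = A.T @ (A @ x - y)
        x = np.maximum(0.0, x - step * gradient)
    return x


def fcls_augmented(A, y, delta=10.0):
    """Approximate sum-to-one constraint via an extra row delta * [1, ..., 1]."""
    A_aug = np.vstack([A, delta * np.ones((1, A.shape[1]))])
    y_aug = np.append(y, delta)
    return nnls_projected_gradient(A_aug, y_aug)


# Hypothetical library: two endmembers (columns) over three bands (rows).
library = np.array([[1.0, 0.0],
                    [0.0, 1.0],
                    [0.0, 0.0]])

# Pixel: 50% endmember 1 plus 50% of a material absent from the library.
missing_material = np.array([0.0, 0.0, 1.0])
pixel = 0.5 * library[:, 0] + 0.5 * missing_material

x_nnls = nnls_projected_gradient(library, pixel)
x_fcls = fcls_augmented(library, pixel)

print("NNLS abundances:", np.round(x_nnls, 3), "sum =", round(float(x_nnls.sum()), 3))
print("FCLS abundances:", np.round(x_fcls, 3), "sum =", round(float(x_fcls.sum()), 3))
```

In this toy case the non-negative solution recovers an abundance of 0.5 for the first endmember and zero for the second, whereas forcing the abundances to sum to one inflates the first to roughly 0.75 and assigns roughly 0.25 to a material that is not present in the pixel.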

Based on the experience gathered in previous campaigns and in the one presented in this work, some aspects have been identified that should be taken care of when preparing a complex dataset of this kind. First, it would be desirable to have a smaller GSD or larger targets deployed on ground, so that a spectral library can be derived from averaged spectra. As a rule of thumb, to ensure several pure pixels for all targets, their size should be at least five times the GSD. Second, the area containing the targets of interest should be as close as possible to sensor nadir to minimize spatial distortions due to aircraft roll movements. Furthermore, the integration time of the imaging spectrometer should be set to a low value if bright targets are chosen, as synthetic targets usually have a higher reflectance than natural ones.
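The rule of thumb on target size can be checked with a simple worst-case count. The following sketch assumes an idealized square target aligned with the pixel grid but with an arbitrary sub-pixel offset, and ignores target rotation and the sensor point spread function. The GSD value of 0.5 m is a hypothetical placeholder chosen for illustration, not the GSD of the HySpex acquisition, and the function name is likewise an assumption.

```python
import math


def guaranteed_pure_pixels(side_m: float, gsd_m: float) -> int:
    """Worst-case number of pixels lying fully inside a grid-aligned square target."""
    if side_m <= 0 or gsd_m <= 0:
        raise ValueError("side length and GSD must be positive")
    # Along each axis, an unlucky sub-pixel offset costs one full pixel.
    per_axis = max(0, math.floor(side_m / gsd_m) - 1)
    return per_axis ** 2


GSD_M = 0.5  # hypothetical ground sampling distance in meters
SIDES_M = [3.0, 2.5, 2.0, 1.0, 0.5, 0.25]  # 2.5 m equals five times the assumed GSD

pure_counts = {side: guaranteed_pure_pixels(side, GSD_M) for side in SIDES_M}

for side, count in pure_counts.items():
    print(f"side = {side:4.2f} m ({side / GSD_M:3.1f} x GSD) -> at least {count} pure pixels")
```

Under these assumptions, a target five times the GSD in size is guaranteed a 4 × 4 block of pure pixels regardless of its offset, while a target twice the GSD is guaranteed only a single pure pixel.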

The DLR HySU benchmark dataset is open and available at [

7]. The community is invited to make use of the dataset to test spectral unmixing and other applications, expanding the exploratory analysis we have presented in this work.