Crop Row Detection through UAV Surveys to Optimize On-Farm Irrigation Management

Abstract

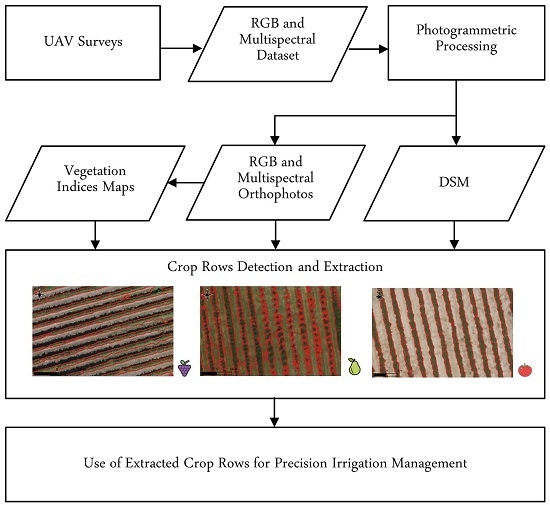

1. Introduction

2. Materials

2.1. Study Sites

2.1.1. Vineyard

2.1.2. Pear Orchard

2.1.3. Tomato Field

2.2. UAV Surveys and Photogrammetric Processing

2.2.1. Vineyard

2.2.2. Pear Orchard

2.2.3. Tomato Field

3. Crop Row Detection Methods

3.1. Thresholding Algorithms

3.1.1. Local Maxima Extraction

3.1.2. Threshold Selection

3.2. Classification Algorithms

3.2.1. K-Means Clustering

3.2.2. Minimum Distance to Mean Classifier

3.3. Bayesian Segmentation

- $P(x \mid y)$ is called the posterior probability and describes the updated knowledge of the unknown parameters $x$ given the observed data $y$.

- $P(y)$ is a normalization constant that ensures that the sum of $P(x \mid y)$ over all possible $x$ is equal to one.

- $P(x)$ represents the prior probability distribution. It describes the knowledge of the unknown parameters $x$ without the contribution of the observed data.

- $P(y \mid x)$ is defined as the likelihood and is a function of $x$. It describes how the a priori knowledge is modified by the data, and it depends on the noise distribution.
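
The posterior, normalization constant, prior, and likelihood listed above combine through Bayes' theorem, $P(x \mid y) = P(y \mid x)\,P(x) / P(y)$. As a minimal sketch of how this could be applied pixel by pixel, the Python example below assumes two classes (background and crop canopy), Gaussian likelihoods, and a spatially uniform prior. The function names, the class means and standard deviations, and the sample index values are illustrative assumptions; a full segmentation would typically also include a spatial prior, which this sketch omits.

```python
import numpy as np


def gaussian_pdf(y, mu, sigma):
    """Gaussian likelihood P(y | x) for a class with mean mu and std sigma."""
    return np.exp(-0.5 * ((y - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


def posterior_crop(y, prior_crop=0.5,
                   mu_bg=0.2, sigma_bg=0.2,
                   mu_crop=0.7, sigma_crop=0.25):
    """Posterior probability that each pixel belongs to the crop canopy.

    All default parameters are illustrative assumptions, and the prior is
    the same for every pixel (no spatial prior is modelled here).
    """
    y = np.asarray(y, dtype=float)
    joint_crop = gaussian_pdf(y, mu_crop, sigma_crop) * prior_crop
    joint_bg = gaussian_pdf(y, mu_bg, sigma_bg) * (1.0 - prior_crop)
    evidence = joint_crop + joint_bg  # P(y): makes the posteriors sum to one
    return joint_crop / evidence


# Hypothetical 2 x 2 patch of a vegetation index image
index_image = np.array([[0.10, 0.25],
                        [0.60, 0.85]])
p_crop = posterior_crop(index_image)
crop_mask = p_crop > 0.5  # maximum a posteriori decision between two classes
print(crop_mask)
```

With two classes, thresholding the crop posterior at 0.5 is equivalent to choosing the class with the larger posterior.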

4. Results

4.1. Vineyard

4.2. Pear Orchard

4.3. Tomato Field

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Pachauri, R.K.; Allen, M.R.; Barros, V.R.; Broome, J.; Cramer, W.; Christ, R.; Church, J.A.; Clarke, L.; Dahe, Q.; Dasgupta, P.; et al. Climate Change 2014: Synthesis Report. Contribution of Working Groups I, II and III to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; IPCC: Geneva, Switzerland, 2014.

2. Costa, J.; Vaz, M.; Escalona, J.; Egipto, R.; Lopes, C.; Medrano, H.; Chaves, M. Modern viticulture in southern Europe: Vulnerabilities and strategies for adaptation to water scarcity. Agric. Water Manag. 2016, 164, 5–18.

3. UN-Water. 2018 UN World Water Development Report, Nature-Based Solutions for Water; UNESCO: Paris, France, 2018.

4. Castellarin, S.D.; Bucchetti, B.; Falginella, L.; Peterlunger, E. Influenza del deficit idrico sulla qualità delle uve: Aspetti fisiologici e molecolari. Italus Hortus 2011, 18, 63–79.

5. Monaghan, J.M.; Daccache, A.; Vickers, L.H.; Hess, T.M.; Weatherhead, E.K.; Grove, I.G.; Knox, J.W. More ‘crop per drop’: Constraints and opportunities for precision irrigation in European agriculture. J. Sci. Food Agric. 2013, 93, 977–980.

6. Sanchez, L.; Sams, B.; Alsina, M.; Hinds, N.; Klein, L.; Dokoozlian, N. Improving vineyard water use efficiency and yield with variable rate irrigation in California. Adv. Anim. Biosci. 2017, 8, 574–577.

7. McClymont, L.; Goodwin, I.; Whitfield, D.; O’Connell, M. Effects of within-block canopy cover variability on water use efficiency of grapevines in the Sunraysia irrigation region, Australia. Agric. Water Manag. 2019, 211, 10–15.

8. Matese, A.; Di Gennaro, S.F. Technology in precision viticulture: A state of the art review. Int. J. Wine Res. 2015, 7, 69–81.

9. Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

10. Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A light-weight multispectral sensor for micro UAV: Opportunities for very high resolution airborne remote sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1193–1199.

11. Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

12. Corti, M.; Cavalli, D.; Cabassi, G.; Vigoni, A.; Degano, L.; Gallina, P.M. Application of a low-cost camera on a UAV to estimate maize nitrogen-related variables. Precis. Agric. 2019, 20, 675–696.

13. Noh, H.; Zhang, Q. Shadow effect on multi-spectral image for detection of nitrogen deficiency in corn. Comput. Electron. Agric. 2012, 83, 52–57.

14. Pádua, L.; Marques, P.; Hruška, J.; Adão, T.; Bessa, J.; Sousa, A.; Peres, E.; Morais, R.; Sousa, J.J. Vineyard properties extraction combining UAS-based RGB imagery with elevation data. Int. J. Remote Sens. 2018, 39, 5377–5401.

15. Poblete-Echeverría, C.; Olmedo, G.; Ingram, B.; Bardeen, M. Detection and segmentation of vine canopy in ultra-high spatial resolution RGB imagery obtained from unmanned aerial vehicle (UAV): A case study in a commercial vineyard. Remote Sens. 2017, 9, 268.

16. Marques, P.; Pádua, L.; Adão, T.; Hruška, J.; Peres, E.; Sousa, A.; Sousa, J.J. UAV-based automatic detection and monitoring of chestnut trees. Remote Sens. 2019, 11, 855.

17. Li, B.; Xu, X.; Han, J.; Zhang, L.; Bian, C.; Jin, L.; Liu, J. The estimation of crop emergence in potatoes by UAV RGB imagery. Plant Methods 2019, 15, 15.

18. Pádua, L.; Adão, T.; Sousa, A.; Peres, E.; Sousa, J.J. Individual Grapevine Analysis in a Multi-Temporal Context Using UAV-Based Multi-Sensor Imagery. Remote Sens. 2020, 12, 139.

19. Ortuani, B.; Facchi, A.; Mayer, A.; Bianchi, D.; Bianchi, A.; Brancadoro, L. Assessing the Effectiveness of Variable-Rate Drip Irrigation on Water Use Efficiency in a Vineyard in Northern Italy. Water 2019, 11, 1964.

20. Ronchetti, G.; Pagliari, D.; Sona, G. DTM generation through UAV survey with a fisheye camera on a vineyard. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-2, 983–989.

21. Pádua, L.; Marques, P.; Hruška, J.; Adão, T.; Peres, E.; Morais, R.; Sousa, J. Multi-Temporal Vineyard Monitoring through UAV-Based RGB Imagery. Remote Sens. 2018, 10, 1907.

22. Rouse, J., Jr.; Haas, R.; Schell, J.; Deering, D. Monitoring Vegetation Systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

23. Birth, G.S.; McVey, G.R. Measuring the color of growing turf with a reflectance spectrophotometer. Agron. J. 1968, 60, 640–643.

24. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

25. Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

26. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

27. Richardson, A.D.; Jenkins, J.P.; Braswell, B.H.; Hollinger, D.Y.; Ollinger, S.V.; Smith, M.L. Use of digital webcam images to track spring green-up in a deciduous broadleaf forest. Oecologia 2007, 152, 323–334.

28. Weiss, M.; Baret, F. Using 3D point clouds derived from UAV RGB imagery to describe vineyard 3D macro-structure. Remote Sens. 2017, 9, 111.

29. MATLAB. Version 9.3 (R2017b); The MathWorks Inc.: Natick, MA, USA, 2017; Available online: https://www.mathworks.com/downloads/ (accessed on 14 December 2018).

30. QGIS Development Team. QGIS Geographic Information System; Open Source Geospatial Foundation, 2009. Available online: http://qgis.osgeo.org (accessed on 2 January 2020).

31. MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Oakland, CA, USA, 1 January 1967; Volume 1, pp. 281–297.

32. Ross, S.M. Probabilità e statistica per l’ingegneria e le scienze; Apogeo Editore: Milano, Italy, 2003.

33. Bayes, T. LII. An essay towards solving a problem in the doctrine of chances. By the late Rev. Mr. Bayes, F.R.S. communicated by Mr. Price, in a letter to John Canton, A.M.F.R.S. Philos. Trans. R. Soc. Lond. 1763, 53, 370–418.

34. Geman, S.; Geman, D. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 1984, 721–741.

35. Marino, A.; Marotta, F. Crop rows detection through UAV images. Master's Degree Final Dissertation, Politecnico di Milano, 2019. Available online: https://www.politesi.polimi.it/ (accessed on 28 November 2019).

36. Borgogno Mondino, E.; Gajetti, M. Preliminary considerations about costs and potential market of remote sensing from UAV in the Italian viticulture context. Eur. J. Remote Sens. 2017, 50, 310–319.

37. Cinat, P.; Di Gennaro, S.F.; Berton, A.; Matese, A. Comparison of Unsupervised Algorithms for Vineyard Canopy Segmentation from UAV Multispectral Images. Remote Sens. 2019, 11, 1023.

38. De Castro, A.; Jiménez-Brenes, F.; Torres-Sánchez, J.; Peña, J.; Borra-Serrano, I.; López-Granados, F. 3-D characterization of vineyards using a novel UAV imagery-based OBIA procedure for precision viticulture applications. Remote Sens. 2018, 10, 584.

39. Dong, X.; Zhang, Z.; Yu, R.; Tian, Q.; Zhu, X. Extraction of Information about Individual Trees from High-Spatial-Resolution UAV-Acquired Images of an Orchard. Remote Sens. 2020, 12, 133.

40. Ortuani, B.; Sona, G.; Ronchetti, G.; Mayer, A.; Facchi, A. Integrating Geophysical and Multispectral Data to Delineate Homogeneous Management Zones within a Vineyard in Northern Italy. Sensors 2019, 19, 3974.

41. Di Gennaro, S.F.; Dainelli, R.; Palliotti, A.; Toscano, P.; Matese, A. Sentinel-2 Validation for Spatial Variability Assessment in Overhead Trellis System Viticulture Versus UAV and Agronomic Data. Remote Sens. 2019, 11, 2573.

42. Khaliq, A.; Comba, L.; Biglia, A.; Ricauda Aimonino, D.; Chiaberge, M.; Gay, P. Comparison of satellite and UAV-based multispectral imagery for vineyard variability assessment. Remote Sens. 2019, 11, 436.

| Label | Easting (m) | Northing (m) | Height (m) |
|---|---|---|---|
| v1 | −0.029 | −0.008 | 0.088 |
| v2 | −0.007 | −0.012 | −0.012 |
| v3 | −0.025 | −0.030 | −0.063 |
| v4 | 0.008 | 0.025 | 0.062 |
| v5 | 0.015 | 0.005 | 0.000 |
| v6 | 0.022 | −0.013 | 0.024 |
| v7 | 0.030 | −0.013 | 0.027 |
| v8 | 0.020 | −0.015 | −0.086 |
| v9 | 0.012 | 0.008 | 0.012 |
| RMSE | 0.021 | 0.016 | 0.052 |
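
The RMSE row summarizes the per-point residuals as the square root of their mean square. As a minimal sketch, the Python snippet below recomputes the Northing RMSE from the nine residuals labelled v1 to v9. The helper name `rmse` is an assumption made for illustration, and because the tabulated residuals are already rounded to the millimetre, a recomputed value may differ from the published one in the last digit.

```python
import math


def rmse(residuals):
    """Root mean square error of a list of residuals (same unit as the input)."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


# Northing residuals (m) for points v1 to v9, copied from the table above
northing = [-0.008, -0.012, -0.030, 0.025, 0.005, -0.013, -0.013, -0.015, 0.008]

rmse_northing = rmse(northing)
print(f"Northing RMSE: {rmse_northing:.3f} m")  # prints "Northing RMSE: 0.016 m"
```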

| Label | Easting (m) | Northing (m) | Height (m) |
|---|---|---|---|
| p1 | −0.050 | −0.017 | 0.007 |
| p2 | −0.032 | −0.004 | −0.017 |
| p3 | 0.018 | −0.060 | 0.021 |
| p4 | 0.028 | −0.089 | 0.113 |
| p5 | 0.003 | 0.023 | −0.290 |
| p6 | 0.100 | 0.053 | 0.018 |
| p7 | −0.085 | 0.003 | 0.054 |
| RMSE | 0.056 | 0.047 | 0.119 |

| Label | Easting (m) | Northing (m) | Height (m) |
|---|---|---|---|
| t1 | −0.098 | 0.071 | −0.101 |
| t2 | 0.095 | 0.068 | 0.046 |
| t3 | 0.028 | 0.71 | 0.077 |
| t4 | −0.026 | −0.127 | −0.054 |
| t5 | −0.019 | −0.154 | 0.149 |
| t6 | −0.053 | −0.038 | 0.114 |
| RMSE | 0.062 | 0.096 | 0.097 |

| Index | Name | Formula | Reference |
|---|---|---|---|
| NDVI | Normalized Difference Vegetation Index | $\frac{NIR - R}{NIR + R}$ | [22] |
| SR | Simple Ratio | $\frac{NIR}{R}$ | [23] |
| SAVI | Soil-Adjusted Vegetation Index | $\frac{(1 + L)(NIR - R)}{NIR + R + L}$ | [24] |
| ARVI | Atmospherically Resistant Vegetation Index | $\frac{NIR - RB}{NIR + RB}$, where: $RB = R - \gamma (B - R)$ | [25] |
| ExG | Excess Green | $2G - R - B$ | [26] |
| G% | Normalized Green Channel Brightness | $\frac{G}{R + G + B}$ | [27] |
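
The indices listed above are simple band combinations, so they can be computed per pixel with array arithmetic. The Python sketch below uses the standard definitions from the cited literature, which may differ in detail from the exact formulation adopted in the study (for instance, ExG is sometimes computed on normalized chromatic coordinates). The function name, the default soil factor `L = 0.5`, the default `gamma = 1.0`, the small `eps` guard against division by zero, and the sample reflectance values are all assumptions made for illustration.

```python
import numpy as np


def vegetation_indices(nir, red, green, blue, L=0.5, gamma=1.0, eps=1e-12):
    """Compute common vegetation indices from co-registered band arrays.

    L, gamma and eps are illustrative defaults, not values from the study.
    """
    nir, red, green, blue = (
        np.asarray(band, dtype=float) for band in (nir, red, green, blue)
    )
    rb = red - gamma * (blue - red)  # red-blue term used by ARVI
    return {
        "NDVI": (nir - red) / (nir + red + eps),
        "SR": nir / (red + eps),
        "SAVI": (1.0 + L) * (nir - red) / (nir + red + L),
        "ARVI": (nir - rb) / (nir + rb + eps),
        "ExG": 2.0 * green - red - blue,
        "G%": green / (red + green + blue + eps),
    }


# Hypothetical reflectances for one vegetated pixel and one bare-soil pixel
nir = np.array([0.50, 0.30])
red = np.array([0.10, 0.25])
green = np.array([0.20, 0.22])
blue = np.array([0.10, 0.20])

indices = vegetation_indices(nir, red, green, blue)
for name, values in indices.items():
    print(name, np.round(values, 3))
```

NDVI, SR, SAVI, and ARVI require a near-infrared band, whereas ExG and G% can be derived from an RGB orthophoto alone.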

| Method | Input | User’s Choices |
|---|---|---|
| Local Maxima Extraction | G% | cell size: 5 m; percentage: 30% |
| Threshold Selection | DSM | cell size: 3 m; threshold: 0.3 |
| K-means Clustering | RGB orthophoto | classes: 6 |
| MDM Classifier | RGB orthophoto | classes: 2 |
| Bayesian Segmentation | ExG, Gaussian filter ($\sigma$ = 3) | Background: $\mu$ = 0.2, $\sigma$ = 0.2; Crop canopy: $\mu$ = 0.7, $\sigma$ = 0.25 |

| Method | OA | PA Crop Canopy | UA Crop Canopy |
|---|---|---|---|
| Local Maxima Extraction | 0.94 | 0.95 | 0.91 |
| Threshold Selection | 0.76 | 0.41 | 0.99 |
| K-means Clustering | 0.82 | 0.73 | 0.80 |
| MDM Classifier | 0.87 | 0.84 | 0.83 |
| Bayesian Segmentation | 0.96 | 0.97 | 0.94 |
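
The overall accuracy (OA), producer's accuracy (PA), and user's accuracy (UA) reported above are standard confusion-matrix metrics. As a minimal sketch, assuming a binary reference mask and a binary classified mask of the same shape, they could be computed as follows. The function name and the ten-pixel sample masks are hypothetical and serve only to illustrate the definitions; they are not data from the study.

```python
import numpy as np


def accuracy_metrics(reference, predicted):
    """Return (OA, PA, UA) for the crop canopy class from two binary masks."""
    reference = np.asarray(reference, dtype=bool)
    predicted = np.asarray(predicted, dtype=bool)
    tp = np.sum(reference & predicted)    # canopy correctly detected
    fp = np.sum(~reference & predicted)   # background labelled as canopy
    fn = np.sum(reference & ~predicted)   # canopy that was missed
    tn = np.sum(~reference & ~predicted)  # background correctly detected
    oa = (tp + tn) / reference.size  # share of all pixels classified correctly
    pa = tp / (tp + fn)              # share of reference canopy that was found
    ua = tp / (tp + fp)              # share of detected canopy that is correct
    return oa, pa, ua


# Hypothetical masks: 1 = crop canopy, 0 = background
reference = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

oa, pa, ua = accuracy_metrics(reference, predicted)
print(f"OA = {oa:.2f}, PA = {pa:.2f}, UA = {ua:.2f}")  # OA = 0.80, PA = 0.75, UA = 0.75
```

A low PA combined with a high UA, as in the Threshold Selection row above, indicates that the detected canopy pixels are almost all correct but that a large part of the true canopy is missed.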

| Method | Input | User’s Choices |
|---|---|---|
| Local Maxima Extraction | DSM | cell size: 4 m; percentage: 40% |
| Threshold Selection | DSM | cell size: 4 m; threshold: 0 |
| K-means Clustering | RGB orthophoto | classes: 5 |
| MDM Classifier | RGB orthophoto | classes: 2 |
| Bayesian Segmentation | NDVI, Gaussian filter ($\sigma$ = 3) | Background: $\mu$ = 0.8, $\sigma$ = 0.04; Crop canopy: $\mu$ = 0.93, $\sigma$ = 0.04 |

| Method | OA | PA Crop Canopy | UA Crop Canopy |
|---|---|---|---|
| Local Maxima Extraction | 0.92 | 0.88 | 0.93 |
| Threshold Selection | 0.95 | 0.97 | 0.92 |
| K-means Clustering | 0.95 | 0.90 | 0.99 |
| MDM Classifier | 0.87 | 0.68 | 0.99 |
| Bayesian Segmentation | 0.94 | 0.91 | 0.95 |

| Method | Input | User’s Choices |
|---|---|---|
| Local Maxima Extraction | G% | cell size: 3 m; percentage: 30% |
| Threshold Selection | DSM | cell size: 4 m; threshold: 0 |
| K-means Clustering | SAVI + NDVI | classes: 5 |
| MDM Classifier | SAVI + NDVI | classes: 2 |
| Bayesian Segmentation | ExG, Histogram adjustment | Background: $\mu$ = 0.05, $\sigma$ = 0.15; Crop canopy: $\mu$ = 0.65, $\sigma$ = 0.35 |

| Method | OA | PA Crop Canopy | UA Crop Canopy |
|---|---|---|---|
| Local Maxima Extraction | 0.98 | 0.94 | 0.98 |
| Threshold Selection | 0.97 | 0.87 | 0.99 |
| K-means Clustering | 0.93 | 0.93 | 0.79 |
| MDM Classifier | 0.90 | 0.60 | 0.92 |
| Bayesian Segmentation | 0.98 | 0.91 | 0.98 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ronchetti, G.; Mayer, A.; Facchi, A.; Ortuani, B.; Sona, G. Crop Row Detection through UAV Surveys to Optimize On-Farm Irrigation Management. Remote Sens. 2020, 12, 1967. https://doi.org/10.3390/rs12121967