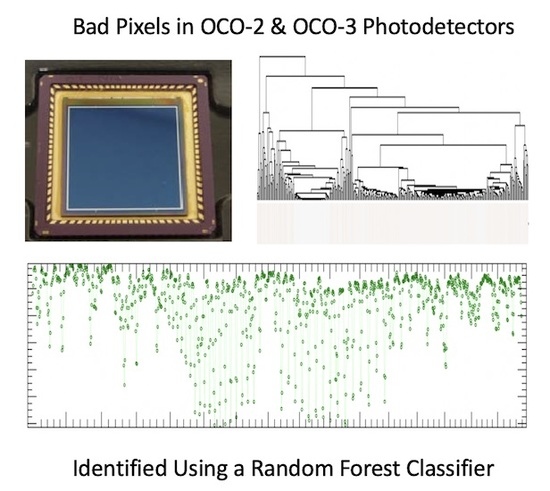

Classification of Anomalous Pixels in the Focal Plane Arrays of Orbiting Carbon Observatory-2 and -3 via Machine Learning

Abstract

1. Introduction

2. Data and Methods

2.1. Data Normalization

2.2. Characterizing Pixel Behavior and Feature Extraction

2.3. Random Forest Classification

3. Results and Discussion

3.1. Joint Distributions of Likelihood Statistics

3.2. Tree Interpreter

3.3. OCO-2 and OCO-3 Bad Pixel Summaries

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABO2 | O2 A-band |
| BPM | Bad Pixel Map |
| DN | Digital Number |
| DTW | Dynamic Time Warping |
| FPA | Focal Plane Array |
| IOC | In-Orbit Checkout |
| IQR | Interquartile Range |
| NASA | National Aeronautics and Space Administration |
| OBA | Optical Bench Assembly |
| OCO | Orbiting Carbon Observatory |
| PCA | Principal Component Analysis |
| SCO2 | Strong CO2 band |
| TVAC | Thermal Vacuum Testing |
| WCO2 | Weak CO2 band |
| XCO2 | Column-averaged CO2 mole fraction |

| Feature (Dark and Lamp) | Description | Equation |
|---|---|---|
| Minimum | responsiveness | |
| Maximum | sensitivity | |
| Jumping Score | change with time | (1) |
| Noise | instability | |
| Dynamic Time Warping | similarity to neighbors | (2) |
| Temperature Correlations | FPA, OBA and other: 6 (OCO-2) and 4 (OCO-3) | (3) |
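The features in the table above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names (`dtw_distance`, `pearson`, `pixel_features`), the absolute-difference DTW cost, the use of the population standard deviation as the noise measure, and the plain Pearson correlation are all assumptions made for illustration. The jumping score is omitted because its definition (Equation 1) is not reproduced in this text.

```python
import math
from statistics import mean, pstdev


def dtw_distance(a, b):
    """Classic dynamic time warping distance with |x - y| as the local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # advance in a only
                cost[i][j - 1],      # advance in b only
                cost[i - 1][j - 1],  # advance in both
            )
    return cost[n][m]


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either series is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def pixel_features(series, neighbor_series, temperature):
    """Hypothetical per-pixel feature vector for one time series (dark or lamp)."""
    return {
        "min": min(series),                            # responsiveness
        "max": max(series),                            # sensitivity
        "std": pstdev(series),                         # noise / instability
        "dtw": dtw_distance(series, neighbor_series),  # similarity to a neighbor
        "temp_corr": pearson(series, temperature),     # temperature correlation
    }
```

In this sketch a pixel whose series tracks its neighbor closely gets a small `dtw` value even when the two series are slightly shifted in time, which is the reason DTW is a natural choice for a neighbor-similarity feature.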

| Instrument | ABO2 Feature | ABO2 % > 0.10 | WCO2 Feature | WCO2 % > 0.10 | SCO2 Feature | SCO2 % > 0.10 |
|---|---|---|---|---|---|---|
| OCO-2 | dark_std | 59% | dark_dtw | 33% | dark_std | 67% |
| | dark_dtw | 28% | dark_jump | 33% | dark_jump | 51% |
| | dark_jump | 19% | dark_std | 28% | all_temps | 15% |
| OCO-3 | dark_std | 47% | lamp_dtw | 41% | lamp_dtw | 34% |
| | dark_dtw | 18% | lamp_min | 20% | dark_dtw | 25% |
| | dark_jump | 18% | dark_std | 18% | lamp_min | 15% |
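One plausible reading of the "% > 0.10" columns is the share of pixels for which a feature's contribution to the classifier's prediction exceeds 0.10. The sketch below computes that share under this assumption. The function name `percent_above`, the input layout (one dict of per-feature contributions per pixel), and the sample values are hypothetical and are not taken from the paper.

```python
def percent_above(contributions, threshold=0.10):
    """Percentage of pixels whose contribution exceeds `threshold`, per feature.

    `contributions` is a list with one dict per pixel, mapping a feature
    name to that feature's contribution for the pixel (assumed layout).
    """
    counts = {}
    for row in contributions:
        for name, value in row.items():
            counts.setdefault(name, 0)
            if value > threshold:
                counts[name] += 1
    total = len(contributions)
    return {name: 100.0 * c / total for name, c in counts.items()}


# Made-up contributions for four pixels, for illustration only.
example = [
    {"dark_std": 0.30, "dark_dtw": 0.05},
    {"dark_std": 0.02, "dark_dtw": 0.20},
    {"dark_std": 0.50, "dark_dtw": 0.01},
    {"dark_std": 0.11, "dark_dtw": 0.00},
]
summary = percent_above(example)
```

Because each pixel is counted independently for each feature, the percentages in a column need not sum to 100%, which is consistent with the table.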

| Version | Upload Date | # ABO2 Pixels | # WCO2 Pixels | # SCO2 Pixels | Description |
|---|---|---|---|---|---|
| (5, 10, 10) | 13-February-2014 | 853 | 4520 | 4414 | OCO-2 Inflight Update |
| (11, 11, 11) | 09-August-2018 | 1213 | 5262 | 5192 | OCO-2 Inflight Update With Classifier |
| (100, 100, 100) | 21-May-2013 | 748 | 599 | 636 | OCO-3 Initial Map |
| (102, 102, 102) | 27-April-2018 | 916 | 1389 | 1451 | OCO-3 Preflight Update With Classifier |
| (103, 103, 103) | 26-July-2019 | 1132 | 1936 | 1982 | OCO-3 Inflight Update With Classifier |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Marchetti, Y.; Rosenberg, R.; Crisp, D. Classification of Anomalous Pixels in the Focal Plane Arrays of Orbiting Carbon Observatory-2 and -3 via Machine Learning. Remote Sens. 2019, 11, 2901. https://doi.org/10.3390/rs11242901