Multispectral Image Super-Resolution Burned-Area Mapping Based on Space-Temperature Information

Abstract

1. Introduction

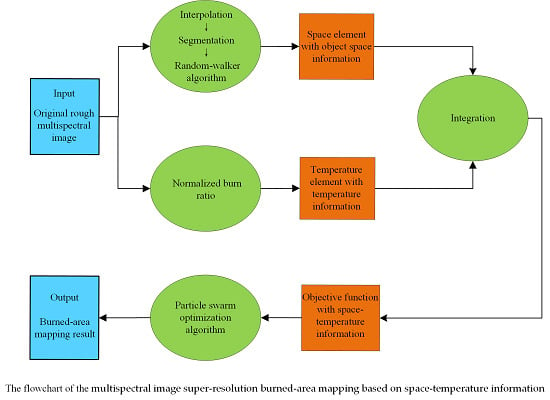

2. Dataset

3. Methodology

3.1. Space Element

3.2. Temperature Element

3.3. Implementation of STI

4. Experiments and Results

4.1. Experimental Settings

4.2. Results Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

| Area 1 | HSAM | OSRM | SRBAM | STI |
| --- | --- | --- | --- | --- |
| Burned area (%) | 76.30 | 77.66 | 79.84 | 83.13 |
| Background (%) | 93.10 | 93.50 | 94.13 | 95.09 |
| OA (%) | 89.31 | 89.93 | 90.91 | 92.39 |
| Kappa | 0.6940 | 0.7116 | 0.7397 | 0.7622 |

| Area 2 | HSAM | OSRM | SRBAM | STI |
| --- | --- | --- | --- | --- |
| Burned area (%) | 56.50 | 59.73 | 63.66 | 68.39 |
| Background (%) | 95.62 | 95.94 | 96.34 | 96.82 |
| OA (%) | 92.04 | 92.63 | 93.35 | 94.21 |
| Kappa | 0.5212 | 0.5567 | 0.6000 | 0.6321 |

| Area 3 | HSAM | OSRM | SRBAM | STI |
| --- | --- | --- | --- | --- |
| Burned area (%) | 72.18 | 73.98 | 77.02 | 79.87 |
| Background (%) | 95.52 | 95.81 | 96.09 | 96.76 |
| OA (%) | 92.28 | 92.78 | 93.39 | 94.42 |
| Kappa | 0.6770 | 0.6979 | 0.7112 | 0.7463 |

| Area 4 | HSAM | OSRM | SRBAM | STI |
| --- | --- | --- | --- | --- |
| Burned area (%) | 94.23 | 95.35 | 95.41 | 96.04 |
| Background (%) | 98.54 | 98.59 | 98.60 | 99.23 |
| OA (%) | 98.18 | 98.26 | 98.47 | 99.01 |
| Kappa | 0.9448 | 0.9494 | 0.9531 | 0.9596 |

| Area 5 | HSAM | OSRM | SRBAM | STI |
| --- | --- | --- | --- | --- |
| Burned area (%) | 71.60 | 73.14 | 76.27 | 79.82 |
| Background (%) | 96.41 | 96.61 | 97.01 | 97.45 |
| OA (%) | 93.63 | 93.98 | 94.68 | 95.48 |
| Kappa | 0.6801 | 0.6975 | 0.7328 | 0.7627 |
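The tables above report, for HSAM, OSRM, SRBAM and STI, a per-class accuracy for burned area and background, the overall accuracy (OA) and a Kappa coefficient. As a minimal sketch of how such figures are commonly derived, the Python snippet below computes them from a two-class confusion matrix. It assumes that the per-class rows are producer's accuracies and that Kappa is Cohen's kappa; the function name `accuracy_metrics` and the confusion-matrix counts are hypothetical and are not taken from the paper.

```python
import numpy as np


def accuracy_metrics(cm):
    """Per-class accuracy, overall accuracy (OA) and Cohen's kappa.

    Assumption: cm[i, j] counts subpixels whose reference class is i and
    whose predicted class is j (rows = reference, columns = prediction).
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    # Per-class (producer's) accuracy: correct subpixels / reference subpixels of that class
    per_class = np.diag(cm) / cm.sum(axis=1)
    # Overall accuracy: fraction of all subpixels labelled correctly
    oa = np.trace(cm) / n
    # Chance agreement from the reference (row) and predicted (column) marginals
    pe = float((cm.sum(axis=1) * cm.sum(axis=0)).sum()) / n**2
    kappa = (oa - pe) / (1.0 - pe)
    return per_class, oa, kappa


# Hypothetical counts; class 0 = burned area, class 1 = background
cm = [[80, 20],
      [10, 290]]
per_class, oa, kappa = accuracy_metrics(cm)
# per_class ≈ [0.800, 0.967], oa = 0.925, kappa ≈ 0.793
```

Because kappa discounts the agreement expected by chance, it can move differently from OA when the burned class is small; this is one reason both indices are usually reported side by side.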

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Wang, P.; Zhang, L.; Zhang, G.; Jin, B.; Leung, H. Multispectral Image Super-Resolution Burned-Area Mapping Based on Space-Temperature Information. Remote Sens. 2019, 11, 2695. https://doi.org/10.3390/rs11222695

AMA Style: Wang P, Zhang L, Zhang G, Jin B, Leung H. Multispectral Image Super-Resolution Burned-Area Mapping Based on Space-Temperature Information. Remote Sensing. 2019; 11(22):2695. https://doi.org/10.3390/rs11222695

Chicago/Turabian Style: Wang, Peng, Lei Zhang, Gong Zhang, Benzhou Jin, and Henry Leung. 2019. "Multispectral Image Super-Resolution Burned-Area Mapping Based on Space-Temperature Information" Remote Sensing 11, no. 22: 2695. https://doi.org/10.3390/rs11222695