Cryptocurrency Trading Using Machine Learning

Abstract

1. Introduction

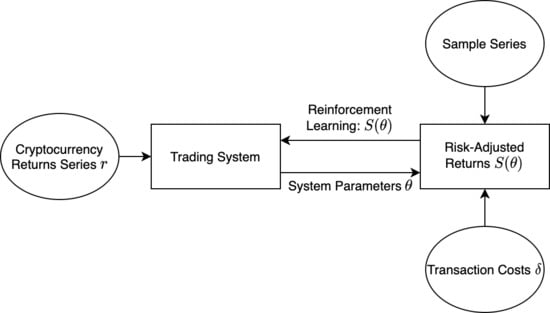

2. Direct Reinforcement Learning

3. Data Sample

4. Discussion of Findings

5. Conclusions

Funding

Conflicts of Interest

Appendix A

| Algorithm A1: Parameter Optimization via Gradient Ascent |
|---|
| Input: training returns series r with length T, learning rate, commission, window m, reward function S, number of epochs N |
| Output: model parameters |
| … |
| for 1 to N do |
| … |
| for … to T do |
| … |
| end |
| … |
| end |
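The loop structure of Algorithm A1 can be illustrated with a minimal Python sketch. Several pieces are assumptions rather than the authors' implementation: the trader is taken to be a tanh of a linear function of the last m returns and the previous position (a common direct-reinforcement formulation), the reward S defaults to a per-period Sharpe ratio, and the gradient is approximated by central finite differences instead of automatic differentiation. All function names (`positions`, `strategy_returns`, `sharpe`, `train`) are hypothetical.

```python
import numpy as np

def positions(theta, returns, m):
    """Assumed trader: F_t = tanh(theta . [1, r_{t-m+1}, ..., r_t, F_{t-1}])."""
    T = len(returns)
    F = np.zeros(T)
    for t in range(m, T):
        x = np.concatenate(([1.0], returns[t - m + 1:t + 1], [F[t - 1]]))
        F[t] = np.tanh(theta @ x)
    return F

def strategy_returns(F, returns, commission):
    # Yesterday's position earns today's return; commission is charged
    # in proportion to the change in position.
    return F[:-1] * returns[1:] - commission * np.abs(np.diff(F))

def sharpe(x):
    # Per-period Sharpe ratio; the small constant guards against division by zero.
    return x.mean() / (x.std() + 1e-12)

def reward(theta, returns, m, commission, S):
    return S(strategy_returns(positions(theta, returns, m), returns, commission))

def numerical_gradient(f, theta, eps=1e-6):
    # Central finite differences (an assumption; autograd would be the usual choice).
    grad = np.zeros_like(theta)
    for i in range(len(theta)):
        step = np.zeros_like(theta)
        step[i] = eps
        grad[i] = (f(theta + step) - f(theta - step)) / (2 * eps)
    return grad

def train(returns, m=10, learning_rate=0.1, commission=0.001,
          epochs=50, S=sharpe, seed=0):
    """Gradient ascent on the reward S over a fixed number of epochs."""
    rng = np.random.default_rng(seed)
    theta = rng.normal(scale=0.1, size=m + 2)  # bias + m lagged returns + F_{t-1}

    def objective(th):
        return reward(th, returns, m, commission, S)

    for _ in range(epochs):
        theta = theta + learning_rate * numerical_gradient(objective, theta)
    return theta
```

A call such as `theta = train(r)` on a NumPy array `r` of daily returns yields a parameter vector of length m + 2; `positions(theta, r, 10)` then gives the implied long/short positions in [−1, 1]. The defaults `m=10` and `commission=0.001` mirror the 10-day window and 0.10% transaction cost mentioned in the notes; the learning rate and epoch count are placeholders.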

References

- Acosta, Marco A. 2018. Machine learning core inflation. Economics Letters 169: 47–50.

- Akcora, Cuneyt Gurcan, Matthew F. Dixon, Yulia R. Gel, and Murat Kantarcioglu. 2018. Bitcoin risk modeling with blockchain graphs. Economics Letters 173: 138–42.

- Baur, Dirk G., and Thomas Dimpfl. 2018. Asymmetric volatility in cryptocurrencies. Economics Letters 173: 148–51.

- Bellman, Richard. 1957. Dynamic Programming. Princeton: Princeton University Press.

- Brauneis, Alexander, and Roland Mestel. 2018. Price discovery of cryptocurrencies: Bitcoin and beyond. Economics Letters 165: 58–61.

- Carapuço, João, Rui Neves, and Nuno Horta. 2018. Reinforcement learning applied to forex trading. Applied Soft Computing 73: 783–94.

- Catania, Leopoldo, Stefano Grassi, and Francesco Ravazzolo. 2019. Forecasting cryptocurrencies under model and parameter instability. International Journal of Forecasting 35: 485–501.

- Chen, Cathy Yi-Hsuan, and Christian M. Hafner. 2019. Sentiment-induced bubbles in the cryptocurrency market. Journal of Risk and Financial Management 12: 53.

- Gold, Carl. 2003. Fx trading via recurrent reinforcement learning. Paper presented at 2003 IEEE International Conference on Computational Intelligence for Financial Engineering, Hong Kong, China, March 20–23; pp. 363–70.

- Grobys, Klaus, and Niranjan Sapkota. 2019. Cryptocurrencies and momentum. Economics Letters 180: 6–10.

- Henrique, Bruno Miranda, Vinicius Amorim Sobreiro, and Herbert Kimura. 2019. Literature review: Machine learning techniques applied to financial market prediction. Expert Systems with Applications 124: 226–51.

- Huck, Nicolas. 2019. Large data sets and machine learning: Applications to statistical arbitrage. European Journal of Operational Research 278: 330–42.

- Jiang, Zhenlong, Ran Ji, and Kuo-Chu Chang. 2020. A machine learning integrated portfolio rebalance framework with risk-aversion adjustment. Journal of Risk and Financial Management 13: 155.

- Koutmos, Dimitrios. 2018. Liquidity uncertainty and bitcoin’s market microstructure. Economics Letters 172: 97–101.

- Koutmos, Dimitrios. 2019. Market risk and bitcoin returns. Annals of Operations Research, 1–25.

- Koutmos, Dimitrios, and James E. Payne. 2020. Intertemporal asset pricing with bitcoin. Review of Quantitative Finance and Accounting, 1–27.

- Kristóf, Tamás, and Miklós Virág. 2020. A comprehensive review of corporate bankruptcy prediction in Hungary. Journal of Risk and Financial Management 13: 35.

- Kumar, Piyush, and Rajat Raychaudhuri. 2014. Stock Ranking Predictions Based on Neighborhood Model. U.S. Patent Application No. 13/865,676, March 4.

- Lahmiri, Salim, and Stelios Bekiros. 2019. Cryptocurrency forecasting with deep learning chaotic neural networks. Chaos, Solitons & Fractals 118: 35–40.

- Li, Xin, and Chong Alex Wang. 2017. The technology and economic determinants of cryptocurrency exchange rates: The case of bitcoin. Decision Support Systems 95: 49–60.

- Makarov, Igor, and Antoinette Schoar. 2020. Trading and arbitrage in cryptocurrency markets. Journal of Financial Economics 135: 293–319.

- Markowitz, Harry M. 1959. Portfolio Selection: Efficient Diversification of Investments. Hoboken: John Wiley.

- Moody, John, and Matthew Saffell. 2001. Learning to trade via direct reinforcement. IEEE Transactions on Neural Networks 12: 875–89.

- Paszke, Adam, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. 2017. Automatic differentiation in PyTorch. Advances in Neural Information Processing Systems 32: 8024–35.

- Phillip, Andrew, Jennifer S. K. Chan, and Shelton Peiris. 2018. A new look at cryptocurrencies. Economics Letters 163: 6–9.

- Saltzman, Bennett, and Julieta Yung. 2018. A machine learning approach to identifying different types of uncertainty. Economics Letters 171: 58–62.

- Sutton, Richard S. 1988. Learning to predict by the methods of temporal differences. Machine Learning 3: 9–44.

- Tzouvanas, Panagiotis, Renatas Kizys, and Bayasgalan Tsend-Ayush. 2020. Momentum trading in cryptocurrencies: Short-term returns and diversification benefits. Economics Letters 191: 108728.

- Watkins, Christopher J. C. H., and Peter Dayan. 1992. Q-learning. Machine Learning 8: 279–92.

- Zhang, Yuchen, and Shigeyuki Hamori. 2020. The predictability of the exchange rate when combining machine learning and fundamental models. Journal of Risk and Financial Management 13: 48.

| 1. | See (Bellman 1957; Sutton 1988; Watkins and Dayan 1992). Direct reinforcement learning, often referred to synonymously as recurrent reinforcement learning, denotes a class of models that do not have to learn a value function (Carapuço et al. 2018). |

| 2. | Shorting cryptocurrencies is accessible to all investors (see https://www.cryptocointrade.com/crypto-trading-blog/how-to-short-bitcoin/). |

| 3. | Our model is calibrated to account for transaction costs of 0.10%. Costs can vary depending on the exchange, but this amount is becoming an industry standard (see https://www.binance.com/en/fee/schedule). Applying lower (higher) transaction costs to our model results in more (less) frequent trading. Additional results across a range of transaction costs are not tabulated for brevity but available on request. |

| 4. | |

| 5. | We entertained many other time-window combinations (results available on request) but find that these windows optimize risk-adjusted performance. Re-training every 100 days allows the model to adapt to shifts in cryptocurrency market behaviors and conditions. For our system parameters in (1), we use a 10-day window of autoregressive returns (m = 10). Our results (untabulated but available on request) show that our DR model does not behave like a momentum- or mean-reverting-type strategy (because lags greater than 1 day are significant in driving the strategy). Thus, our DR model accounts for week-to-week shifts in market behaviors and conditions in determining its long/short decisions. |
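The periodic re-training described in the notes can be sketched as a walk-forward split: fit on a trailing window, trade the next 100 days out of sample, then roll forward and refit. The sketch below is illustrative only. The function name `walk_forward_splits` and the training-window length passed to it are hypothetical, since the notes state only the 100-day re-training interval.

```python
def walk_forward_splits(n_obs, train_len, test_len=100):
    """Yield (train_slice, test_slice) pairs that roll forward by test_len days.

    train_len is a hypothetical training-window length; test_len=100 follows
    the 100-day re-training interval.
    """
    start = 0
    while start + train_len < n_obs:
        train_end = start + train_len
        test_end = min(train_end + test_len, n_obs)
        yield slice(start, train_end), slice(train_end, test_end)
        start += test_len


# Example: 1250 daily observations with an assumed 1000-day training window.
for train_idx, test_idx in walk_forward_splits(1250, train_len=1000):
    print(train_idx, test_idx)
```

Each test slice begins where its training slice ends, so the model never sees the days it is evaluated on, and consecutive test slices tile the out-of-sample period without overlap.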

| Cryptocurrency | Abbrev. | Avg. Price | Max. Price | Min. Price | Avg. Volume | Avg. Mkt. Cap |
|---|---|---|---|---|---|---|
| Bitcoin | BTC | $4057.42 | $19,497.40 | $210.49 | $4,450,445,943 | $69,165,755,657 |
| Ethereum | ETH | $207.28 | $1396.42 | $0.43 | $1,739,231,393 | $20,456,600,907 |
| Litecoin | LTC | $51.82 | $358.34 | $2.63 | $613,577,135 | $2,933,407,200 |
| Ripple | XRP | $0.27 | $3.38 | $0.0041 | $456,691,482 | $10,744,494,433 |
| Monero | XMR | $72.27 | $469.20 | $0.36 | $39,390,717 | $1,147,314,395 |

| Cryptocurrency | Cum. Returns (BH) | Cum. Returns (DR) | Sharpe (BH) | Sharpe (DR) | Sortino (BH) | Sortino (DR) | Max Drawdown (BH) | Max Drawdown (DR) | Value-at-Risk (BH) | Value-at-Risk (DR) |
|---|---|---|---|---|---|---|---|---|---|---|
| Bitcoin | 1.352 | 3.398 | 0.037 | 0.094 | 0.053 | 0.143 | 0.616 | 0.434 | 0.059 | 0.056 |
| Ethereum | 0.302 | 0.240 | −0.031 | −0.041 | −0.042 | −0.056 | 0.870 | 0.823 | 0.081 | 0.082 |
| Litecoin | 0.637 | 0.837 | 0.005 | 0.017 | 0.008 | 0.027 | 0.818 | 0.767 | 0.083 | 0.083 |
| Ripple | 0.441 | 0.783 | −0.012 | 0.013 | −0.020 | 0.022 | 0.611 | 0.591 | 0.083 | 0.082 |
| Monero | 0.459 | 1.374 | −0.010 | 0.039 | −0.014 | 0.057 | 0.780 | 0.796 | 0.082 | 0.079 |
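For readers who want to compute comparable risk statistics on their own return series, the following is a minimal sketch using textbook definitions. These are assumptions, not the authors' exact formulas: the ratios are per-period with a zero benchmark and no annualization, Value-at-Risk is a historical quantile at an assumed 5% level, and the function names are hypothetical.

```python
import numpy as np

def sharpe_ratio(r):
    # Mean return per unit of total volatility (zero risk-free rate assumed).
    return r.mean() / r.std(ddof=1)

def sortino_ratio(r):
    # Mean return per unit of downside deviation (target return of zero assumed).
    downside = np.sqrt(np.mean(np.minimum(r, 0.0) ** 2))
    return r.mean() / downside

def max_drawdown(r):
    # Largest peak-to-trough decline of the wealth curve, starting from 1.
    wealth = np.concatenate(([1.0], np.cumprod(1.0 + r)))
    peak = np.maximum.accumulate(wealth)
    return np.max(1.0 - wealth / peak)

def value_at_risk(r, level=0.05):
    # Historical VaR reported as a positive loss; the 5% level is an assumption.
    return -np.quantile(r, level)


# Toy example with three simple returns.
r = np.array([0.10, -0.50, 0.20])
print(sharpe_ratio(r), sortino_ratio(r), max_drawdown(r), value_at_risk(r))
```

Applying the same functions to both the buy-and-hold return series and the strategy return series gives a side-by-side comparison in the layout of the table above.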

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Koker, T.E.; Koutmos, D. Cryptocurrency Trading Using Machine Learning. J. Risk Financial Manag. 2020, 13, 178. https://doi.org/10.3390/jrfm13080178
