1. Introduction

An approach based on many-objective decision-making can be developed for smart computer infrastructures in crucial domains such as education, health care, public transport and urban planning. If the number of criteria is greater than three, we treat the many-criteria optimization problem as a special case of the multi-criteria optimization problem. More importantly, as the number of criteria increases, the number of Pareto-optimal solutions explodes. We show an example in which the number of effective evaluations is five for two criteria and two hundred for four criteria, which raises the question of how many there will be for seven or more criteria. In this paper, we explain this sudden explosion of the number of Pareto-optimal solutions. Consequently, much more memory should be allocated to the Pareto solution archive, and a much larger population size should be assumed in evolutionary algorithms, particle swarm optimization (PSO) or ant colony optimization (ACO).
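The growth of the Pareto set with the number of criteria can be illustrated by a small numerical experiment. The sketch below is only an illustration under stated assumptions: it draws 200 criterion vectors uniformly at random (this is not the example from this paper and does not reproduce its numbers), treats all criteria as minimized, and counts the non-dominated vectors for 2, 4 and 7 criteria. The function names and the random seed are hypothetical choices.

```python
import random


def dominates(a, b):
    """True if a is no worse than b in every criterion and strictly better in one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def count_nondominated(points):
    """Count the points that are not dominated by any other point."""
    return sum(1 for p in points if not any(dominates(q, p) for q in points))


def nondominated_for(num_criteria, num_points=200, seed=1):
    """Draw random criterion vectors (an assumption) and count the non-dominated ones."""
    rng = random.Random(seed)
    points = [tuple(rng.random() for _ in range(num_criteria)) for _ in range(num_points)]
    return count_nondominated(points)


counts = {k: nondominated_for(k) for k in (2, 4, 7)}
for k, n in counts.items():
    print(f"{k} criteria: {n} of 200 random points are non-dominated")
```

With uniformly random vectors, the share of non-dominated points typically rises sharply with the number of criteria, which is why a larger archive and population are needed.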

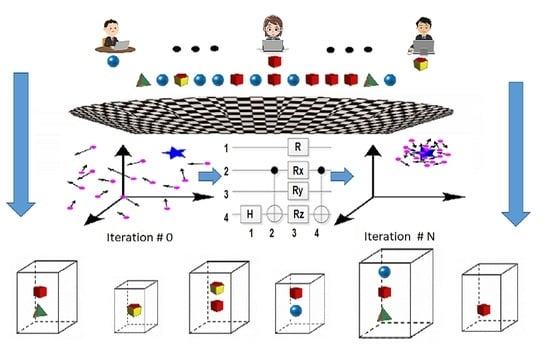

Because several criteria characterize complex systems such as computing clouds, the placement of virtual machines in smart computing clouds is formulated as a many-objective optimization problem. In the model of multi-task training, the selected deep learning models (DLs) can be retrained cyclically at the central hosts, and then their copies migrate as virtual machines (VMs) to the edge of the computing cloud. Besides this, a host failure forces the virtual machines to be moved immediately from that computer to the most appropriate servers. This smart computing cloud should be supported by teleportation of virtual machines via the Internet of Things (IoT) to optimize several criteria such as energy consumption, the workload of the bottleneck computer, cost of hardware, reliability of the cloud and others. Some criteria can be constrained because the dramatic increase in wireless devices imposes several additional bounds [1].

Recent advances in cloud computing, deep learning and Big Data have introduced data-driven solutions into platforms based on OpenStack, which enables the construction of private computing clouds with their own intelligent services, for example, for education at universities or for the smart city. On the other hand, commercial public clouds such as Microsoft Azure and Amazon Elastic Compute Cloud immediately deliver advanced services based on artificial intelligence and quantum computing. In both cases, efficient placement of VMs and management of computer resources are among the most important issues [2]. Adequate resource assignment is a crucial challenge for optimizing the teleportation of virtual machines by a cloud hypervisor, which allocates computer resources to virtual machines according to their requirements for central processing units (CPUs), graphical processing units (GPUs), random-access memory (RAM) and disk storage. Live migration is very effective because it transfers VMs without shutting down the client systems of the cloud and without much administrative work [3].

Teleportation of a virtual machine can change the workload of the bottleneck computer or the workload of the bottleneck transmission node. Besides this, the energy consumption of the set of cloud hosts depends on the virtual machine assignment. Some VMs can be moved to less energy-consuming hosts, and others can be allocated to database servers to accelerate operations on their data. Therefore, the problem of cloud resource assignment should be formulated as a multi-objective optimization problem with selected criteria. We present a new many-objective problem of virtual machine placement with seven criteria: electric power of hosts, reliability of the cloud, CPU workload of the bottleneck host, communication capacity of the critical node, free RAM capacity of the most loaded memory, free disk capacity of the busiest storage, and overall computer costs. This is a significant extension of the current formulation of this problem with four criteria [4].

Metaheuristics such as genetic algorithms, differential evolution, harmony search, bee colony optimization, particle swarm optimization and ant colony algorithms can be used for solving multi-criteria optimization problems to find a representation of Pareto-optimal solutions [5]. Based on many numerical experiments, we propose the Many-objective Quantum-inspired Particle Swarm Optimization Algorithm (MQPSO) with additional improvements based on quantum gates. This approach is justified not only by high-quality solutions, but also by the fact that decision-making in computing clouds requires quantum-inspired intelligent software to handle some dynamic situations. Furthermore, PSOs have been applied to support several key tasks in computer clouds.

The goal of this paper is to study MQPSO for the optimization of VM placement with respect to seven selected criteria, subject to the computer resource constraints. The main contributions include the formulation of the many-objective optimization problem with seven crucial criteria for the management of virtual machines, and the MQPSO algorithm, which can also be used to determine Pareto-optimal solutions for other many-objective optimization problems.

The rest of this paper is organized as follows. Related work is described in Section 2. Then, a teleportation model of virtual machines is characterized in Section 3. Next, Section 4 presents the many-objective optimization problem formulation, and Section 5 describes MQPSO with quantum gates for finding Pareto-optimal solutions. Finally, some numerical experiments are presented in Section 6, followed by conclusions in Section 7.

2. Related Work

We first consider studies on the placement of virtual machines in the computing cloud, and then we analyze quantum-inspired PSOs [6]. An overview of virtual machine placement schemes in cloud computing is presented in [7]. An energy-efficient algorithm for live migration of virtual machines may reduce wastage of power by initiating a sleep mode for idle hosts [8]. To avoid overloaded servers, the cloud workload optimizer analyzes the load on physical machines and uses an ant colony optimization algorithm to determine a placement that is optimal with respect to energy consumption. Based on the optimal solution, a local migration agent selects appropriate physical servers for VMs. Finally, the migration orchestrator moves the VMs to the hosts, and an energy manager initiates a sleep mode for idle hosts to save energy. In addition, efficient management of resources is recommended for a green (or energy-aware) cloud with parallel machine learning [9].

Live migration of virtual machines involves a very small (almost zero) downtime in the order of milliseconds [1]. This live migration is carried out without disrupting any active network connections, even while the VM is being moved to the target host, because the original VM keeps running. There are other benefits associated with migrating VMs. For instance, the placement of VMs can minimize the processor usage of hosts or their Input/Output usage, subject to keeping zero downtime of virtual machines [10]. To increase the throughput of the system, VMs are supposed to be distributed to each server in proportion to its computing Input/Output capacity. Migrations of virtual machines can be conducted by the service management of software platforms such as Red Hat Cluster Suite to create load-balancing cluster services [10].

We recommend OpenStack as suitable cloud software for supporting the live migration of VMs. For instance, this was demonstrated by using high-speed network interfaces with a transmission capacity of 10 Gb/s [11]. Our experimental cloud GUT-WUT confirms that OpenStack adequately supports the teleportation of VMs.

Both the size of the virtual machine to be transmitted and the bandwidth of the network strongly influence the required teleportation time [9]. We can save energy by well-organized management of these resources in cloud data centers. Besides this, adequate placement of VMs in datacenters may diminish the number of VM migrations, which is another optimization criterion [12]. If the smart VMs are going to migrate, some of them may be unable to obtain destination hosts due to limited resources. To avoid such situations, Wang et al. formulated another NP-hard optimization problem, which both maximizes the revenue of successfully placing the VMs and minimizes the total number of migrations subject to the resource constraints of hosts. Regarding the complexity proof, the above problem is equivalent to a shortest path problem [9].

Deep reinforcement learning was developed for multi-objective placement of virtual machines in cloud datacenters [13]. Because high packing factors could lead to performance and security issues (for example, virtual machines can compete for hardware resources or collude to leak data), a multi-objective approach was introduced to find optimal placement strategies considering different goals, such as the impact of hardware outages, the power required by the datacenter, and the performance perceived by users. Placement strategies are found by using a deep reinforcement learning framework to select the best placement heuristic. The proposed algorithm outperforms bin packing heuristics [13].

Task scheduling and allocation of cloud resources impact the performance of applications [14]. Customer satisfaction, resource utilization and better performance are crucial for service providers. A multi-cloud environment may significantly reduce the cost, makespan, delay, waiting time and response time to avoid customer dissatisfaction and to improve the quality of services. A multi-swarm optimization model can be used for multi-cloud scheduling to improve the quality of services [14].

Green strategies for networking and computing inside data centers, such as server consolidation or energy-aware routing, should not negatively impact the quality and service level agreements expected from network operators [15]. Therefore, robust strategies can place virtual network functions to save energy and to increase the protection level against resource demand uncertainty. The proposed model explicitly provides robustness to unknown or imprecisely formulated resource demand variations, powers down unused routers, switch ports and servers, and calculates the energy-optimal placement of virtual network functions and network embedding, also considering latency constraints on the service chains [15]. A fast robust optimization-based heuristic for the deployment of green virtual network functions was studied in [16].

Because a service function chain specifies a sequence of virtual network functions for user traffic to realize a network service, the problem of orchestrating this chain is crucial [17]. The deadline-aware co-located and geo-distributed orchestration with demand uncertainty can be formulated as optimization problems that consider end-to-end delay in service chains by modeling queueing and processing delays. The proposed algorithm improved performance in terms of the ability to cope with demand fluctuations, scalability and relative performance against other recent algorithms [17].

Another aspect of virtualization is the nonlinear optimization problem of mapping virtual links to physical network paths under the condition that bandwidth demands of virtual links are uncertain [18]. To realize virtual links with predictable performance, mapping is required to guarantee a bound on the congestion probability of the physical paths that embed the virtual links.

The second part of this review concerns some new approaches to PSO algorithms. Although quantum-inspired PSO algorithms have not been used so far to optimize the migration of virtual machines in the computing cloud, it is worth discussing them because of the PSO algorithm with quantum gates proposed in this article. The quantum-behaved particle swarm optimization (QPSO) algorithm may solve controller placement problems in software-defined networking to make computer networks agile and flexible [19]. To meet the requirements of users and overcome the physical limitations of networks, it is necessary to design an efficient controller placement mechanism, which is defined as an optimization problem to determine the proper positions and number of controllers. A particle is a vector for placing controllers at each switch. Besides this, the algorithm is characterized by quantum behavior with no quantum gates. The QPSO algorithm demonstrates a fast convergence rate but is limited in global search ability. Simulation results show that QPSO achieves better performance in several instances of multi-controller placement problems [19].

An enhanced quantum-behaved particle swarm optimization (e-QPSO) algorithm improves the exploration and exploitation properties of the original QPSO for function optimization. It is based on the adaptive balance between the personal best and the global best positions using the parameter alpha. Besides this, it keeps the balance between diversification and intensification using the parameter gamma. In addition, a percentage of the worst-performing population is re-initialized to prevent particles from getting stuck at local optima. The results of e-QPSO outperform twelve QPSO variants, two adaptive variants of PSO, as well as nine well-known evolutionary algorithms on 59 benchmark instances [6].

A self-organizing quantum-inspired particle swarm optimization algorithm (MMO_SO_QPSO) is another version of PSO for solving multimodal multi-objective optimization problems (MMOPs) [20]. It should find all equivalent Pareto-optimal solutions and maintain a balance between the convergence and diversity of solutions in both decision space and objective space. In the algorithm, a self-organizing map is used to find the best neighbor leader of particles, and then a special zone searching method is adopted to update the position of particles and locate equivalent Pareto-optimal solutions in decision space. Particles are not described by position and velocity vectors; instead, their quantum behavior is described by a wave function. To maintain diversity and convergence of Pareto-optimal solutions, a special archive mechanism based on the maximum-minimum distance among solutions is developed. Some outstanding Pareto-optimal solutions are maintained in another archive. In addition, a performance indicator estimates the similarity between obtained Pareto-optimal solutions and true efficient alternatives. Experimental results demonstrate the superior performance of MMO_SO_QPSO for solving MMOPs [20].

It is worth noting that the MMO_SO_QPSO algorithm is one of the most advanced PSO algorithms inspired by quantum particle behavior. For this reason, it is the foundation on which our algorithm MQPSO is based, which additionally takes into account quantum gates and the many criteria selection procedure.

Besides this, several interesting works have dealt with hybrid metaheuristics, which could provide an effective introduction to advanced metaheuristics in general [21], in combination with exact approaches applied in network design [22] and improved particle swarm optimization [23].

3. Live Migration of Intelligent Virtual Machines

We can identify the following system elements that are relevant for the modeling. Let V be the number of virtual machines that are trained in the cloud on I physical machines. Moreover, let virtual machines be denoted as α1, …, αv, …, αV. Each αv can work at the host assigned to the safety node such as N1, …, Ni, …, NI in the cloud. Let β1, …, βj, …, βJ be the possible types of physical machines providing a set of resources to VMs. Exactly one physical machine βj can be assigned to each node Ni.

Virtual machine migration involves changing the host on the source node to the host on the target node, which is a key decision in the optimization problem under consideration. Destination nodes of the VMs after migrations can be modeled by

, where [

4]:

To find adequate amounts of resources, we consider the assignment of hosts that are the other important decisions supporting the placement of VMs. Capacities of resources in the cloud can be adapted to the needs of virtual machines by designating an appropriate matrix

, where:

Resources provided by physical machines are characterized by six vectors such as: the vector of electric power consumption (watt), the vector of RAM memory capacities (GB), the vector of the numbers of the preferred computers and the vector of disk storage capacities (TB). In addition, the vector ($) characterizes costs of using the selected physical machines. Let the computer be failed independently due to an exponential distribution with rate . The sixth vector is the vector of the reliability rates .

Moreover, four characteristics are related to virtual machines. Let be the matrix of cumulative times of VMs runs on different types of computers (s) and also —the matrix of total communication time between agent pairs located at different nodes (s). In addition, the memory requirements of VMs are described by the vector of reserved RAM (GB) and the vector of required disk storage (TB).

The binary matrix double

considers decision variables that can minimize four criteria and maximize three criteria characterizing computing cloud. We maximize three criteria. Let

be the free capacity of the RAM in the bottleneck computer due to RAM (GB) and

—the free capacity of the disc storage in the bottleneck computer due to disk storage (TB) [

4]. Let

R denote the reliability of the cloud that should be maximized, too. Moreover, we minimize

E—electric power of the cloud (watt). Let

be communication capacity of the bottleneck node (s). Moreover,

Ξ—cost of hosts (money unit, i.e.,

$) is the criterion for minimization. Let

be the processing workload of the bottleneck CPU (s), which is the fourth criterion for minimization.

Live migration of virtual machines may change the values of the above criteria. Therefore, we should find the set of Pareto-optimal solutions. Then, the decision-maker can choose a compromise solution from this set.

Intelligent virtual machines contain some pre-trained domain models based mainly on convolutional neural networks (CNNs) and long short-term memory (LSTM) artificial neural networks (ANNs) [24,25]. Several virtual machines can run on a host, but they can migrate from the source computer to the destination according to the Pareto-optimal solution obtained by the MQPSO. For instance, an accuracy of 99% can be achieved within approximately one minute of training the CNN on the virtual machine Linux Fedora Server (the CPU Intel Core i7, 2.7 GHz, RAM 16 GB) on the German Traffic Sign Detection Benchmark Dataset. The CNN identifies the traffic signs represented by a matrix with 28 × 28 selected features. The dataset is divided into 570 training signs and 330 test items [24]. In this case, live migration of this virtual machine is unlikely to be recommended, unless there is a host failure [26]. Retraining is very fast, and then a trained model can be sent to nodes on the Internet of Things [27].

However, a virtual machine needs additional resources in the following situation, which is very common in intelligent clouds [28]. Much more time is required to train the long short-term memory (LSTM) artificial neural network for video classification. The LSTM can detect some dangerous situations from web cameras monitoring city areas using the Cityscapes Dataset with 25,000 stereo videos of street scenes from 50 cities [29]. After 70 h of elapsed CPU time, the LSTM-based model achieved an average accuracy of 54.7%, which is rather unacceptable. The LSTM was implemented in the Matlab R2021b environment. Therefore, the supervised learning process should be accelerated by using GPUs, and the virtual machine with the LSTM is supposed to be moved to another server.

Live migration of the virtual machine to a more powerful workstation with GPUs can be carried out by using the HTTPS and WebSocket protocols. Besides this, micro-services exchange data in the JSON format, which was verified in the experimental computing cloud GUT-WUT (Gdańsk University of Technology and Warsaw University of Technology). This cloud is based on the OpenStack software platform, which supports VM teleportation [4].

Another deep learning model that uses the virtual machine may be trained on the Human Motion DataBase (HMDB) with 6849 clips divided into 51 action categories, each containing a minimum of 101 clips [30]. For instance, the trained LSTM can detect undesirable situations in the city, such as smoking and drinking in forbidden areas or pedestrians falling on the floor. Quick and correct classifications allow counteracting many extreme situations on city streets [31]. Teleportation of the virtual machine with the LSTM trained on the HMDB can provide enough cloud resources to train this model with high accuracy.

4. Many-Objective Optimization Problem

There are the upper constraints that have to be respected to guarantee project requirements such as the maximum computer load

or an upper limit of node transmission

. To save energy, let

be limit of electric power for the cloud. A budget constraint for the project can be denoted as

. Furthermore, there are minimal requirements for three maximizing metrics.

Rmin is the minimum reliability for the hosts used by virtual machines.

represents the free capacity of the disc storage in the bottleneck computer due to disk storage (TB).

is the free capacity of the RAM in the bottleneck computer due to RAM (GB). We can establish constraints, as below:

If we rebuild the cloud, some hosts can be removed from it, but the others can be left to cooperate with the new servers. Therefore, from the current set of computers we can determine the set of preferred computer types . Let be the set of indexes of the preferred computer types for the current infrastructure. Moreover, we are going to buy or rent the new hosts. Let be the set of indexes of the new computer types. Let , where . If we consider the new servers, we can buy 0, 1, 2 or more hosts of the same kind. However, we are supposed to respect the assumed computers number νj of the jth type from

In consequence, the following constraint is introduced:

The reliability

R is defined, as below [

4]:

To calculate the workload of the bottleneck computer, we introduce two formulas. If computer

βj is located in the cloud node no.

i, the decision variable

is equal to 1. If virtual machine no.

v is included in this cluster, the decision variable is equal to 1, too. The term

is the cumulative time of the

vth virtual machine run on computer

βj (s). Now, we can calculate the workload of the bottleneck computer, as follows [

4]:

Similarly, we can determine transmission workload of the bottleneck node by the following formula:

If computer

βj is located in the cloud node no.

i, electric consumption per time unit in this node is equal to

, and all nodes in this cloud require electric power, as below:

Also, we can calculate the total cost of computers, as follows:

Because the cluster of agents requires the RAM capacity

in node no.

i, the minimal capacity of RAM memory for a bottleneck host can be calculated, as follows:

Likewise, we can identify the bottleneck computer regarding free disc storage in the cloud:

Based on the model, we can formulate the many-criteria optimization problem, as follows.

Given:

numbers V, I, J, Rmin,

vectors

matrices

Find the representation of Pareto-optimal solutions

for an ordered tuple:

where:

- (1)

—the set of admissible solutions x that satisfy requirements (3)–(10) and the formal constraints, as below:

- (2)

F—the vector of seven minimized partial criteria

- (3)

—the domination relation in R7

The number of admissible solutions is no greater than $2^{(V+J)I}$. If the binary encoding of solutions is replaced by the integer encoding, the upper limit of admissible solutions is $V^I I^J$. In the integer encoding, we introduce modified decision variables in such a way that the formal constraints are satisfied. Let n ≈ V ≈ I ≈ J. The upper limit of admissible solutions increases in a non-polynomial way due to $O(n^n)$. We can prove the following Lemma.

Lemma 1. If the number of nodes I ≥ 4, or if I ≥ 2 and memory resources are limited, then the many-criteria combinatorial optimization problem (18)–(23) is NP-hard.

Proof. We will show that a special case of the problem (18)–(23) is a known NP-hard problem. Let some assumptions be made to restrict the formulated problem (18)–(23) to another NP-hard problem. If J = 1, then we consider the placement of V virtual machines on I computers. Moreover, let the resource constraints be relaxed and let only one objective function be considered. Then, this case of the problem (18)–(23) is equivalent to a task assignment problem without memory limits [32]. If I ≥ 4, it was proved that the task assignment problem without constraints is NP-hard for minimization of the total cost [32]. On the other hand, if I ≥ 2 and memory resources are limited, then minimization of the total cost for task assignment is NP-hard, too [32]. Each hierarchical solution related to the minimization of this objective function is a Pareto-optimal solution [4]. Because the problem (18)–(23) with one criterion is NP-hard, the extended problem with seven criteria is NP-hard, which ends the proof. □

Note that the problem of finding a minimum feasible assignment is in some cases equivalent to a knapsack problem, and hence is NP-hard. Consider the I-node graph in which every node is of degree 2 and the source and the sink both have degree (I − 2). The weight $w_i$ of the node $N_i$ corresponds to the weight of the ith item in the knapsack problem. A feasible cut of the task assignment graph corresponds to a subset of items whose weights do not exceed the knapsack constraint weight w. A minimum feasible cut corresponds to a knapsack packing of maximum value [32].

Solving problem (18)–(23) by an enumerative algorithm is ineffective for the large search space with $V^I I^J$ elements. Let us consider an instance of the problem (18)–(23) with 855 decision variables. In the experimental instance called Benchmark855 (https://www.researchgate.net/publication/341480343_Benchmark_855, accessed: 19 November 2021), an algorithm determines a set of Pareto-optimal solutions for 45 virtual machines, 15 communication nodes and 12 types of servers. The search space contains $8.2 \times 10^{38}$ elements, and $2 \times 10^{7}$ solutions are evaluated in 3 min by an enumerative algorithm implemented in Java on a Dell E5640 dual-processor machine under Linux CentOS. This confirms that there is no practical chance of finding any Pareto-optimal solution by systematic enumeration. Besides this, $2 \times 10^{7}$ independent probabilistic trials are unlikely to ensure a high-quality alternative.
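The figures quoted for Benchmark855 can be checked with a few lines of arithmetic. The sketch below only uses numbers stated in the text (45 virtual machines, 15 nodes, 12 server types, and 2 × 10^7 evaluations in 3 min); the variable names are hypothetical, and the estimated enumeration time assumes that the evaluation rate stays constant.

```python
# Benchmark855 dimensions taken from the text.
num_vms, num_nodes, num_host_types = 45, 15, 12

# Binary encoding: (V + J) * I decision variables.
binary_variables = (num_vms + num_host_types) * num_nodes
binary_bound = 2 ** binary_variables

# Integer encoding: upper limit V^I * I^J, as stated in the text.
integer_bound = num_vms ** num_nodes * num_nodes ** num_host_types

# Enumeration rate reported in the text: 2e7 solutions in 3 minutes.
rate_per_second = 2 * 10 ** 7 / (3 * 60)
years_needed = integer_bound / rate_per_second / (3600 * 24 * 365)

print(f"binary decision variables: {binary_variables}")
print(f"integer-encoded search space: {integer_bound:.1e} elements")
print(f"binary-encoded bound has {len(str(binary_bound))} decimal digits")
print(f"full enumeration at the reported rate: about {years_needed:.1e} years")
```

The integer-encoded bound reproduces the order of magnitude quoted for the search space, and the estimated enumeration time shows why systematic enumeration is impractical.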

There are exact methods such as the multi-criteria branch and bound method [33] or the ε-constraint method [34], but they usually produce poor-quality solutions within a limited calculation time. Metaheuristics find much better results than exact methods for many instances of different NP-hard multi-criteria optimization problems [21].

5. Many-Objective Particle Swarm Optimization with Quantum Gates MQPSO

We simulated teleportation of virtual machines in the cloud GUT-WUT based on OpenStack, which uses hosts from two universities [4]. Algorithm 1 shows pseudocode illustrating how the steps of the general many-objective particle swarm optimization algorithm with quantum gates (MQPSO) are adapted to the specific features of the VM placement problem (18)–(23). The algorithm is based on Hadamard gates and rotation gates. The Hadamard gate converts a qubit of a quantum register Q into a superposition of the two basis states ∣0⟩ and ∣1⟩, as follows [35]:

| Algorithm 1 | Many-objective Quantum-inspired PSO (MQPSO) |
| --- | --- |
| 1: | Set input data; t := 0; A(t) := Ø |
| 2: | Initialize quantum register Q(t) with M qubits by the set of Hadamard gates |
| 3: | while (not termination condition) do |
| 4: | create P(t) by observing the state of Q(t) |
| 5: | determine new positions and velocities of particles, and then create B(t) |
| 6: | find Fonseca-Fleming ranks for an extended archive C(t) = A(t)∪B(t)∪P(t) |
| 7: | calculate crowding distances and fitness, and then sort particles in C(t) |
| 8: | form A(t) of Pareto-optimal solutions from the sorted set C(t) |
| 9: | select an angle rotation matrix by tournament selection based on rating |
| 10: | mutate the selected matrix with the rate pm |
| 11: | modify Q(t) using the rotation gates |
| 12: | t := t + 1 |
| 13: | end while |
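Steps 6 and 8 of Algorithm 1 can be sketched as follows. This is a minimal illustration, not the implementation used in this paper: it assumes the usual Fonseca-Fleming definition (the rank of a solution is one plus the number of solutions that dominate it), treats all criteria as minimized, uses two criteria instead of seven for readability, and omits the crowding distance and fitness calculation of step 7. The data and function names are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every criterion and strictly better in one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fonseca_fleming_ranks(vectors):
    """Rank of each vector: 1 + number of vectors in the set that dominate it."""
    return [1 + sum(dominates(q, p) for q in vectors) for p in vectors]


# Hypothetical criterion vectors of an extended archive C(t).
extended_archive = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
ranks = fonseca_fleming_ranks(extended_archive)

# Step 8: the new archive A(t) keeps only the non-dominated vectors (rank 1).
archive = [c for c, r in zip(extended_archive, ranks) if r == 1]

print(ranks)    # [1, 1, 1, 2, 5]
print(archive)  # [(1, 5), (2, 3), (4, 1)]
```

Maximized criteria, such as reliability, could be handled in such a sketch by negating them before the comparison.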

The Hadamard gate is a single-qubit operation based on a 90° rotation around the y-axis followed by a 180° rotation around the x-axis. If we use Dirac notation for the description of the qubit state, the qubit can be represented by the matrix, as follows [6]:

The procedure of random selection of decision values is associated with a chromosome matrix. If the decision variable $x_m$ is characterized by a pair of complex numbers ($\alpha_m$, $\beta_m$), it is equal to 0 with the probability $|\alpha_m|^2$ and to 1 with the probability $|\beta_m|^2$. Alternatively, the state of the mth qubit can be represented as a point on the 3D Bloch sphere, as follows [35]:

where

and

.

In the 3D Bloch sphere, the Hadamard gate can be implemented by several rotations to reach the desired point determined by a pair of angles, that is, the equal superposition of the two basis states. The two angles determine the location of the qubit on the Bloch sphere. The North Pole represents the state |0⟩, the South Pole represents the state |1⟩, and the points on the equator represent all possible states in which the probabilities of 0 and 1 are the same. Thus, in this version of the quantum-inspired genetic algorithm, there are M Bloch spheres with the quantum gene states.

Therefore, the Hadamard gate can be modeled, as the matrix operation, as below:

The Hadamard gate can be implemented by the Pauli gates. The Pauli-X gate ($P_X$) is a single-qubit rotation through π radians around the x-axis. On the other hand, the Pauli-Y gate ($P_Y$) is a single-qubit rotation through π radians around the y-axis. From (27), we get the following:

where:

For the Pauli-Z gate ($P_Z$), which is a single-qubit rotation through π radians around the z-axis, we have the following:

where

.
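The relations between the Hadamard gate, the rotations and the Pauli gates can be checked numerically. The sketch below assumes the standard textbook matrices for the Hadamard, Pauli-X and Pauli-Z gates and the usual convention for a rotation around the y-axis; these may differ by a global phase or by notation from the equations of this paper. It verifies that a 90° rotation around the y-axis followed by the Pauli-X gate gives the Hadamard gate, that the Hadamard gate equals (X + Z)/√2, and that it maps ∣0⟩ to an equal superposition. All helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def close(a, b, tol=1e-12):
    """Element-wise comparison of two 2x2 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))


def rotation_y(theta):
    """Rotation through theta radians around the y-axis (standard convention, an assumption)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def apply(gate, state):
    """Apply a 2x2 gate to a state given as amplitudes [alpha, beta]."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


r = 1 / math.sqrt(2)
HADAMARD = [[r, r], [r, -r]]
PAULI_X = [[0, 1], [1, 0]]
PAULI_Z = [[1, 0], [0, -1]]
IDENTITY = [[1, 0], [0, 1]]

# A 90-degree rotation around y, then the Pauli-X gate (a 180-degree x rotation up to phase).
h_from_rotations = matmul(PAULI_X, rotation_y(math.pi / 2))
# Hadamard as a combination of Pauli gates.
h_from_paulis = [[(PAULI_X[i][j] + PAULI_Z[i][j]) * r for j in range(2)] for i in range(2)]

alpha, beta = apply(HADAMARD, [1.0, 0.0])   # start from the basis state |0>
p0, p1 = abs(alpha) ** 2, abs(beta) ** 2    # probabilities of observing 0 and 1

print(close(h_from_rotations, HADAMARD), close(h_from_paulis, HADAMARD))
print(p0, p1)
```

Applying the Hadamard gate twice returns the original state, which the same helpers confirm through `matmul(HADAMARD, HADAMARD)`.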

The initial step of MQPSO (Algorithm 1, step 1) is to enter data such as the numbers V, I, J and Rmin, together with the given vectors and matrices. Then, the iteration counter of the main loop is set to 0 (t := 0).

The quantum register Q(t) consists of M qubits (Algorithm 1, step 2). The M Hadamard gates are applied concurrently. We consider (V + I) blocks of qubits representing decision variables

. If there are

λ quantum bits for encoding decisions

and

μ quantum bits for encoding

, there are

M =

quantum bits for the register

Q. To minimize the size of the quantum register, we use the following formulas to determine the number of qubits

and

. Each qubit has an index within this register, starting at index 0 and counting up by 1 to M − 1. Besides this, we use

qubits (instead of

) for the determination placement of virtual machines because of a key advantage of the quantum register. It can proceed with

virtual machines placements, concurrently. We can create digital decision variables by using a roulette wheel due to the given probability distribution after the measurement of the quantum register (

Figure 1). Similarly, we reduce the number of qubits from

to

, allowing for the allocation of appropriate hosts to VMs clusters. Therefore, the quantum register consists of

M =

, only.

The outcome of a measurement is saved into a binary measurement register BMQ with the same number of entries as the qubit register. Each BMQ entry holds the binary state 0 or 1. When a qubit of the register Q is measured a second time, the corresponding bit in the binary register is overwritten by the new measurement bit, even when the measurement is done in a basis different from the one used for the earlier measurement. In this case, the selection of the x-basis, y-basis or z-basis does not allow the previously measured bit to be kept in the register BMQ. The most recent qubit measurement always determines the associated bit of the measurement register. The quantum register Q can be measured in the z-basis of each qubit.

Figure 1 shows an example of a probability distribution for the placement of the vth virtual machine. This distribution is used to generate the digital positions of particles.
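The roulette-wheel step can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the four-node distribution and the use of Python's `random.Random` are hypothetical, and the real distribution would come from measuring the quantum register Q.

```python
import random
from itertools import accumulate


def roulette_wheel(probabilities, rng):
    """Return an index drawn with the given probabilities."""
    cumulative = list(accumulate(probabilities))
    spin = rng.random() * cumulative[-1]   # tolerate sums slightly off 1
    for index, bound in enumerate(cumulative):
        if spin < bound:
            return index
    return len(probabilities) - 1          # guard against rounding


def sample_placements(distribution, num_vms, rng):
    """Draw one node index per virtual machine from the same distribution."""
    return [roulette_wheel(distribution, rng) for _ in range(num_vms)]


# Hypothetical distribution over four nodes; node 1 is the most likely host.
rng = random.Random(42)
placements = sample_placements([0.1, 0.6, 0.2, 0.1], num_vms=45, rng=rng)
```

Nodes with a larger share of the wheel are chosen more often, but low-probability nodes can still be selected for a few virtual machines.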

An initial population P(t) of L particles px(t) = (x(t), v(t)) is created by measuring the state of the register Q at the iteration t (Algorithm 1, step 4). The current position x(t) at the iteration t has V + I coordinates, and the velocity v(t) of this particle has V + I coordinates, too. Therefore, the digital particle px(t) has 2(V + I) coordinates. Placements of virtual machines are randomly selected V times by the roulette wheel constructed from the probability distribution provided by the quantum register Q (Figure 1). Also, the hosts with adequate resources for the clusters of virtual machines are randomly chosen I times by the roulette wheel related to measuring another part of the quantum register Q. Besides this, the velocity vector v(t) of this digital particle is created by generating V + I values. Based on the quantum register Q, L digital positions of particles can be created to establish the initial population P(t = 0), where px(t) = (x(t), v(t)) and L is the size of the population.

The population P(t) is used to produce an offspring population B(t) in accordance with the rules of canonical PSO algorithms (Algorithm 1, step 5). The new position is calculated by adding three vectors to the current position x(t). The first vector is the difference between the best past position pbest of this particle and the current position. This vector is multiplied by a random number r1 from the interval [0; 1] and by the given coefficient c1. The second vector is the difference between the best position gbest of the neighborhood and the current position. This vector is multiplied by a random number r2 from the interval [0; 1] and by the given coefficient c2. The third vector is the difference between the velocity and the current position. This vector is multiplied by a random number r0 from the interval [0; 1] and by the given coefficient c0 (Figure 2).
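The position update described above can be sketched in a few lines. The sketch follows the wording of the text literally, including the third vector taken as the difference between the velocity and the current position; the function name and the real-valued coordinates are assumptions, and the mapping of the result back to digital (integer) decisions is not covered.

```python
import random


def update_position(x, v, pbest, gbest, c0, c1, c2, rng):
    """Move one particle: current position plus three weighted vectors."""
    r0, r1, r2 = rng.random(), rng.random(), rng.random()
    new_x = []
    for xi, vi, pi, gi in zip(x, v, pbest, gbest):
        first = c1 * r1 * (pi - xi)    # pull towards the particle's own best
        second = c2 * r2 * (gi - xi)   # pull towards the neighborhood best
        third = c0 * r0 * (vi - xi)    # velocity term, as worded in the text
        new_x.append(xi + first + second + third)
    return new_x


# Coefficients c0 = 1, c1 = 2, c2 = 2 are the values reported for MQPSO.
rng = random.Random(7)
moved = update_position(x=[2.0, 5.0], v=[1.0, 1.0],
                        pbest=[3.0, 4.0], gbest=[6.0, 2.0],
                        c0=1, c1=2, c2=2, rng=rng)
```

A particle that already sits at its personal best and at the neighborhood best, with a velocity equal to its position, does not move, which is a quick sanity check of the three difference vectors.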

An extended archive C(t) is the union of three sets of particles A(t)∪B(t)∪P(t) (Algorithm 1, step 6). We compare particles from the extended archive C(t). The criteria values of the particles are calculated first, followed by their Fonseca–Fleming ranks [36]. The rank r(x) of a solution x is the number of solutions from C(t) that dominate x.
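The ranking rule can be illustrated with a short sketch. The function names and the sample evaluations are hypothetical; the `maximize` mask is an assumed way of handling criteria that are maximized rather than minimized.

```python
def dominates(a, b, maximize):
    """True if evaluation a dominates b; maximize[i] marks maximized criteria."""
    no_worse = True
    strictly_better = False
    for ai, bi, is_max in zip(a, b, maximize):
        if is_max:
            ai, bi = -ai, -bi           # turn maximization into minimization
        if ai > bi:
            no_worse = False
        elif ai < bi:
            strictly_better = True
    return no_worse and strictly_better


def ranks(evaluations, maximize):
    """Rank of each point = number of points in the set that dominate it."""
    return [sum(dominates(other, point, maximize) for other in evaluations)
            for point in evaluations]


# Hypothetical two-criteria evaluations, both minimized.
points = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
result = ranks(points, maximize=[False, False])   # [0, 0, 0, 1, 4]
```

Points with rank zero are non-dominated within the compared set; the point (3, 4) is dominated only by (2, 3), and (5, 5) is dominated by all four other points.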

The next step of the algorithm MQPSO is the calculation of crowding distances and fitness values, followed by sorting the particles in C(t) (Algorithm 1, step 7). Each particle is characterized by a crowding distance, which is used to determine its fitness and to distinguish solutions with the same rank [37]. The sorted particles with the highest fitness values qualify for the archive A(t) of non-dominated solutions together with their criteria values (Algorithm 1, step 8).
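A crowding distance in the style of NSGA-II [37] can be sketched as below. This is an assumed formulation, since the text does not spell out the exact variant used in MQPSO; the function name and the sample front are hypothetical.

```python
def crowding_distances(evaluations):
    """NSGA-II style crowding distance for a list of equal-length tuples."""
    n = len(evaluations)
    distances = [0.0] * n
    if n == 0:
        return distances
    for m in range(len(evaluations[0])):
        order = sorted(range(n), key=lambda i: evaluations[i][m])
        low, high = evaluations[order[0]][m], evaluations[order[-1]][m]
        # Boundary points are always kept: give them an infinite distance.
        distances[order[0]] = distances[order[-1]] = float("inf")
        if high == low:
            continue                     # this criterion does not separate points
        for k in range(1, n - 1):
            gap = evaluations[order[k + 1]][m] - evaluations[order[k - 1]][m]
            distances[order[k]] += gap / (high - low)
    return distances


# Hypothetical front of three non-dominated evaluations.
front = [(1, 5), (2, 3), (4, 1)]
result = crowding_distances(front)       # [inf, 2.0, inf]
```

Among solutions of equal rank, a larger distance indicates a less crowded region of the criterion space, so such solutions are preferred when the archive is filled.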

An important step of the algorithm is the use of three rotation gates to modify the quantum register Q (Algorithm 1, steps 9–11). The first gate is a single-qubit rotation through a given angle (in radians) around the x-axis. Similarly, the second gate is a rotation through its own angle around the y-axis, and the third gate is a rotation around the z-axis. The adequate matrix operations can be written as follows [35]:
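For reference, the textbook matrices of single-qubit rotations about the three axes are given below. The symbols $R_x$, $R_y$, $R_z$ and the angle $\theta$ are notation assumed here and may differ from the notation used in [35].

$$
R_x(\theta) = \begin{pmatrix} \cos\frac{\theta}{2} & -i\sin\frac{\theta}{2} \\ -i\sin\frac{\theta}{2} & \cos\frac{\theta}{2} \end{pmatrix}, \quad
R_y(\theta) = \begin{pmatrix} \cos\frac{\theta}{2} & -\sin\frac{\theta}{2} \\ \sin\frac{\theta}{2} & \cos\frac{\theta}{2} \end{pmatrix}, \quad
R_z(\theta) = \begin{pmatrix} e^{-i\theta/2} & 0 \\ 0 & e^{i\theta/2} \end{pmatrix}
$$

For $\theta = \pi$, these matrices coincide with the Pauli-X, Pauli-Y and Pauli-Z gates up to a global phase factor of $-i$.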

Figure 3 shows the quantum gates used to find the correction of a new particle position. It determines the new assignment of the vth virtual machine to a host. There are Hadamard gates and three rotation gates that determine the host number. The rotation angles for each qubit play an important role. The matrix of angles can be characterized as below:

Initially, the angles are determined randomly. However, preference should be given to those modifications of the quantum register that have a greater effect on the set of non-dominated solutions in the archive. For this reason, we evaluate each matrix of rotation angles by the number of effective solutions in the archive that were determined using this matrix. A matrix with a higher rating is more likely to be used in the next iteration because the matrix of rotation angles is chosen by tournament selection in conjunction with the roulette rule. Each angle of the matrix can be mutated at the rate pm; a mutation changes the angle by a random value drawn from a normal distribution with a given standard deviation.
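The mutation of the angle matrix can be sketched as follows. The zero mean of the normal distribution, the parameter name `sigma`, the matrix shape and the function name are assumptions of this sketch; the text specifies only the mutation rate pm and a normally distributed change.

```python
import random


def mutate_angles(angles, pm, sigma, rng):
    """Return a copy of the angle matrix with Gaussian perturbations."""
    mutated = []
    for row in angles:
        new_row = []
        for angle in row:
            if rng.random() < pm:                 # mutate with probability pm
                angle += rng.gauss(0.0, sigma)    # assumed zero-mean normal step
            new_row.append(angle)
        mutated.append(new_row)
    return mutated


# Hypothetical matrix: one row per qubit, columns for the x, y and z rotations.
rng = random.Random(3)
theta = [[0.10, 0.20, 0.30], [0.40, 0.50, 0.60]]
theta_next = mutate_angles(theta, pm=0.1, sigma=0.05, rng=rng)
```

The input matrix is left unchanged, so the rating of the parent matrix can still be compared with that of its mutated copy.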

Figure 4 shows the results after rotations of the quantum register Q followed by measurement. The host most preferred by virtual machines is located at the sixth node. Placements at the 7th and the 15th nodes are much less likely to be selected, but they may still be chosen for several VMs.

To sum up, the population P(t) of L particles is created by observing the state of the quantum register Q(t) in the main loop of MQPSO. New positions and velocities of particles are generated, which creates the neighborhood B(t). Next, the values of the criteria are calculated, and the solutions are checked against the constraints. Then, we can find the ranks of feasible solutions for the extended archive C(t) = A(t − 1)∪B(t)∪P(t). If the rank is equal to zero, the solution is non-dominated in the extended archive.

Only non-dominated solutions are accepted for the archive A(t). If the number of Pareto-optimal solutions exceeds the archive size, a representative subset is selected by means of the densification function: solutions whose evaluations lie in less dense areas have a greater chance of qualifying for the archive. In the initial period of searching the space of feasible solutions, solutions with higher ranks and even unacceptable solutions may be qualified on the basis of the fitness function.

The algorithm ends the exploration of space when the time limit is exceeded (the number of particle population generations) or when there is no improvement over a given number of iterations.

6. Pareto-Optimal Solutions and Compromise Alternatives

Let be a set of Pareto-optimal solutions for the many-objective problem of virtual machine placement ) (18)–(23) with n criteria, where n = 2, 3, …, 7. The set of admissible solutions is the same for each n. Because there are seven partial criteria , , , we can use a notation There are six sets of Pareto solutions: because . Also, there are six sets of evaluations .

Besides this, the n-dimensional domination relation in , denoted as , can be defined as below:

The growth in the number of Pareto-optimal solutions caused by adding partial criteria in many-objective optimization problems can be explained formally by the following theorem.

Theorem 1. A set of Pareto solutions for n (n ≥ 2) criteria in the many-criteria optimization problem of virtual machines placement ) (18)–(23) is included in the set of Pareto solutions for n + k criteria, k = 1,2, …, N − n (n + k ≤ N) and a domination relation in , which can be formulated, as below:

Proof. Let be a non-empty set of Pareto solutions for two criteria F1 and F2. If we add the third criterion F3, all solutions from are still Pareto-optimal. Besides, there is no admissible solution that dominates all solutions from the set due to three criteria . On the other hand, another non-dominated solution may exist regarding a smaller value of . Therefore, . Also, we can prove , and so on.

We have shown that for every natural number k ≥ 1 the implication T(k) ⇒ T(k + 1) is true since the truth of its predecessor implies the truth of the successor. Since the assumptions of the mathematical induction rule are satisfied for this theorem, the formula (35) is true for every k ≥ 1, which ends the proof. □
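The inclusion stated in Theorem 1 can be observed on a small numerical example. The data below are hypothetical, all criteria are minimized, and no value is repeated within a criterion; evaluations that tie on the first n criteria are a corner case this sketch does not examine.

```python
def dominates(a, b):
    """Minimization: a is no worse on every criterion and better on at least one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_set(points, n):
    """Indices of points that are non-dominated on the first n criteria."""
    cut = [p[:n] for p in points]
    return {i for i, p in enumerate(cut)
            if not any(dominates(q, p) for q in cut)}


# Hypothetical evaluations (F1, F2, F3) of six admissible solutions.
points = [(1, 9, 5), (3, 6, 7), (5, 4, 8), (6, 7, 2), (8, 8, 1), (9, 9, 9)]
front2 = pareto_set(points, 2)   # {0, 1, 2}
front3 = pareto_set(points, 3)   # {0, 1, 2, 3, 4}
```

The three solutions that are Pareto-optimal for F1 and F2 remain Pareto-optimal after F3 is added, and two further solutions join the set because they have the smallest values of F3.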

It can happen that , but this is extremely rare. Usually, along with additional criteria, the size of the Pareto set increases significantly in the many-criteria optimization problem of virtual machines placement ) (18)–(23).

The algorithm MQPSO determined the compromise solution (Figure 5) characterized by the score = (1240; 25,952; 10,244; 11,630; 18; 191; 0.92) with the smallest Euclidean distance to an ideal point = (442; 25,221; 6942; 6750; 19; 195; 0.97) in the normalized criterion space . The coordinates of the ideal point are calculated using the following formulas:

The nadir point is another characteristic point of the criterion space . The nadir point is required to normalize the criterion space. Contrary to the ideal point, it takes into account the worst values over the Pareto set in terms of the preferences, as follows:

Moreover, an anti-ideal point may be used for the normalization of the criterion space. The coordinates of the anti-ideal point are calculated using the following formulas:
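The reference points can be illustrated with a short sketch. It assumes that the ideal point collects the best value of each criterion and the nadir point the worst value over the Pareto evaluations; the function name, the `maximize` mask and the sample data are hypothetical.

```python
def ideal_and_nadir(pareto_evaluations, maximize):
    """Best and worst value of every criterion over the Pareto evaluations."""
    columns = list(zip(*pareto_evaluations))
    ideal = [max(c) if is_max else min(c)
             for c, is_max in zip(columns, maximize)]
    nadir = [min(c) if is_max else max(c)
             for c, is_max in zip(columns, maximize)]
    return ideal, nadir


# Hypothetical evaluations: two minimized criteria and one maximized criterion.
evaluations = [(410, 30, 0.90), (448, 26, 0.95), (587, 25, 0.85)]
ideal, nadir = ideal_and_nadir(evaluations, maximize=[False, False, True])
# ideal == [410, 25, 0.95], nadir == [587, 30, 0.85]
```

Applying the same function to the evaluations of the whole admissible set, rather than the Pareto set only, would give the worst values that define the anti-ideal point.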

Because the algorithm determines the Pareto set of solutions and its evaluation set , we can normalize the evaluation set as the 7D hypercube [0; 1]⁷, where the normalized ideal point is = (0; 0; 0; 0; 1; 1; 1), as below:

The normalized nadir point = (1; 1; 1; 1; 0; 0; 0) is characterized by the maximum Euclidean distance from the normalized ideal point. In the hypercube , a trade-off (compromise) placement of virtual machines can be selected from the Pareto-optimal set as the one with the smallest value of the p-norm , as follows:

where is the normalized evaluation point of , .

Theorem 2. For the given parameter p = 1, p = 2 or p→∞, the normalization (39) and a domination relation in the many-criteria optimization problem of virtual machines placement (18)–(23), the p-norm is a function of solution x, as follows:

Proof. Let p = 1. Then, . Because = (0; 0; 0; 0; 1; 1; 1), we get . We insert the right side of Equation (39) instead of , and we get the Equation (41). We prove the correctness of formulas (42) and (43) in a similar way, which ends the proof. □
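The selection rule can be sketched with the ideal point, the nadir point and the compromise evaluation reported in this paper. The normalization below is an assumption inferred from the normalized ideal point (0; 0; 0; 0; 1; 1; 1): the first four criteria are treated as minimized and the last three as maximized. The function names are hypothetical.

```python
import math

IDEAL = (442, 25221, 6942, 6750, 19, 195, 0.97)
NADIR = (2764, 49346, 87359, 20740, 5.2, 21.5, 0.53)
MAXIMIZE = (False, False, False, False, True, True, True)   # assumed directions


def normalize(y, ideal=IDEAL, worst=NADIR):
    """Map an evaluation into [0; 1]^7: 0 is best if minimized, 1 if maximized."""
    result = []
    for value, best, bad, is_max in zip(y, ideal, worst, MAXIMIZE):
        if is_max:
            result.append((value - bad) / (best - bad))
        else:
            result.append((value - best) / (bad - best))
    return result


def p_norm_distance(y, p):
    """Distance from normalized y to the normalized ideal point."""
    target = [1.0 if is_max else 0.0 for is_max in MAXIMIZE]
    gaps = [abs(a - b) for a, b in zip(normalize(y), target)]
    if math.isinf(p):
        return max(gaps)                  # Chebyshev case, p -> infinity
    return sum(g ** p for g in gaps) ** (1.0 / p)


compromise = (1240, 25952, 10244, 11630, 18, 191, 0.92)
l1 = p_norm_distance(compromise, 1)
l2 = p_norm_distance(compromise, 2)
linf = p_norm_distance(compromise, math.inf)
```

Under these assumptions, the value for p→∞ is about 0.349 and is determined by the fourth coordinate, which agrees with the value reported for solution No. 1 when the nadir point is used for normalization.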

7. Numerical Experiments

In order to verify the quality of the mathematical model, the correctness of the formulated many-criteria optimization problem, as well as the quality of the developed algorithm, several multi-variant numerical experiments were carried out, and the designated compromise solutions were simulated in the GUT-WUT cloud computing environment. We consider four instances of the virtual machine placement problem: Benchmark90, Benchmark306, Benchmark855 and Benchmark1020, which are available at https://www.researchgate.net/profile/Piotr-Dryja (accessed: 19 November 2022). For example, Benchmark855 is characterized by 855 binary decision variables, and therefore the binary search space contains 2.4 × 10²⁵⁷ items. There are 45 VMs, 15 nodes and 12 possible hosts. Besides this, there are 60 integer decision variables and 1.3 × 10⁶⁹ possible solutions.
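The sizes quoted for Benchmark855 can be reproduced with a short calculation. The split of the decision variables used below (one binary variable per VM and node pair and per node and host pair; one integer per VM and per node) is an assumption that happens to match the reported numbers, and the helper name is hypothetical.

```python
import math

V, NODES, HOSTS = 45, 15, 12   # Benchmark855 sizes quoted in the text

# Assumed split: one binary variable per (VM, node) and per (node, host) pair.
binary_variables = V * NODES + NODES * HOSTS
binary_space = 2 ** binary_variables

# Assumed integer encoding: one node index per VM, one host type per node.
integer_variables = V + NODES
integer_space = NODES ** V * HOSTS ** NODES


def mantissa_exponent(n):
    """Return (m, e) with n ≈ m × 10^e; math.log10 accepts large Python ints."""
    log = math.log10(n)
    exponent = math.floor(log)
    return 10 ** (log - exponent), exponent


binary_size = mantissa_exponent(binary_space)     # about 2.4 × 10^257
integer_size = mantissa_exponent(integer_space)   # about 1.3 × 10^69
```

The integer encoding shrinks the search space by roughly 188 orders of magnitude compared with the binary one, although both remain far too large for exhaustive search.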

If we consider seven criteria, there are 21 pairs of criteria: (F1, F2), (F1, F3) and so on.

Figure 5 shows three evaluations of Pareto-optimal solutions in the two-criteria space . Points P1 = (410; 395,223), P2 = (448; 25,952) and P3 = (587; 25,221) are non-dominated due to . However, the results of the experiments confirmed a significant increase in the number of Pareto-optimal solutions with the addition of further criteria. For instance, the other criteria significantly extended the set of Pareto solutions to a set {P1, P2, …, P200}. While these supplementary 197 points are dominated with respect to the two criteria , , each new criterion usually increases the number of Pareto-optimal solutions that are better than points P1, P2, P3 with respect to this new metric. As a result, we can expect several Pareto-optimal solutions from which we can choose the compromise evaluation = (1240; 25,952; 10,244; 11,630; 18; 191; 0.92), where each evaluation of a solution is presented as y(x) = . The trade-off evaluation minimizes the Euclidean distance to the ideal point in the normalized space R⁷. On the other hand, P2 is the compromise point in the normalized space R².

Figure 6 shows the compromise placement of virtual machines. The solution specifies 15 destinations for 45 virtual machines, where adequate resources are provided to run all tasks efficiently. There are three DELL R520 E5640 v1 hosts (Dell Inc., USA), four DELL R520 E5640 v2, four Infotronik ATX i5-4430 (Infotronik, Poland), two Infotronik ATX i7-4790, one Fujitsu Primergy RX300S8 (Fujitsu, Japan) and one IBM x3650 M4 (IBM, USA) allocated at 15 nodes.

The 7D compromise evaluation is dominated by other solutions with respect to several pairs of criteria, but there is at least one pair of criteria for which it is non-dominated. Figure 7 shows Pareto evaluations found by MQPSO for the cut . In this case, the compromise point is dominated by seven evaluation points. However, the compromise point is close to the Pareto front of this two-criteria cut. A similar situation occurs in Figure 5, where the compromise score is dominated by 11 elements. On the other hand, these evaluations are dominated by the compromise solution in Figure 6. In summary, the compromise solution is not dominated by other alternatives in the sense of the four criteria and is, therefore, not dominated in the sense of the seven criteria as well.

The 7D evaluation of the compromise solution was determined for an ideal point = (442; 25,221; 6942; 6750; 19; 195; 0.97) (Table 1). For normalization of the criterion space, the nadir point N* = (2764; 49,346; 87,359; 20,740; 5.2; 21.5; 0.53) was used. Besides this, the anti-ideal point (3600; 50,000; 87,500; 22,000; 4; 7; 0.33) was calculated; it can be applied for an alternative normalization. The compromise solution is selected in the normalized space by minimizing the p-norm.

If we choose the nadir point N* for the normalization of the criterion space Y, the evaluation of the compromise solution for p = 1 and p = 2 is No. 1 in Table 1. Without loss of generality, Table 1 presents the best 20 solutions in the sense of and normalization using the nadir point. When analyzing the coordinates of the points closest to the ideal point, it can be noticed that in this case the “middle” values of the criteria are preferred instead of lexicographic solutions, which are characterized by the best value of the selected criterion. The most preferred values in each of the seven categories are marked in yellow (Table 1).

The undoubted advantage of the compromise solution is its full dominance over other competitors in terms of the size of the disk storage reserve in the most critical host. In this respect, the remaining solutions are characterized by slightly worse values. Another advantage is the largest reserve of RAM because only solution No. 4 (Table 1) has the same value. Moreover, the compromise alternative has the shortest data transmission time through the busiest cloud transmission node. In this case, solutions No. 5, 9 and 18 are also characterized by an equally high quality of data transmission. Consuming more electricity than several solutions is perhaps the biggest disadvantage of the compromise solution. However, the difference from the most energy-efficient VM placements is not large.

Solutions No. 2 and 3 are characterized by lower electric power consumption by more than 2 kilowatts. Furthermore, they are not the best in terms of any criterion, but in terms of , they are very close to the compromise solution.

If we choose the nadir point N* for the normalization, the evaluation of the compromise solution for p→∞ is No. 4 in Table 1. Table 2 presents the p-norm values for the best 20 Pareto-optimal VM placements sorted by . Solution No. 4 differs from in that all criteria values are more balanced with respect to the ideal point coordinates. This is due to the greater consumption of electricity by solution No. 1, which causes the value of the to be 0.349. On the other hand, solution No. 4 is characterized by 0.335.

If we select the anti-ideal point, the differences between its coordinate values and those of the ideal point are greater than when the nadir point is taken into account. As a result, we are dealing with a completely different normalization. The specificity of this computational instance is such that the change of the normalization point has the greatest impact on the reliability of the cloud because the distance to the ideal point coordinate is increased by 45.5%. On the other hand, the load of the CPU bottleneck host in the computing cloud is characterized by a 36% increase in the length of the value range. For the other five criteria, the impact on normalization is below 10%.

However, the change of the normalization point with respect to the ideal point did not result in any major changes related to the compromise solutions. Solution No. 1 remained a compromise solution for p = 1 and for p = 2. On the other hand, a new compromise solution has been identified for p→∞ (Table 1, No. 6). In this case, decreased from 0.350 to 0.260, which caused to be affected by , which is 0.290.

The decision-maker can choose one value of the parameter p. We prefer an influence of all criteria on the compromise solutions for p = 1 but some of them can achieve very poor values. On the other hand, if p = 2, we favor the minimal Euclidean distance to the ideal point. Finally, all criteria achieve similar good values not far from ideal ones if p→∞ is selected.

Another dilemma is related to a selection between the nadir point and the anti-ideal point for the normalization of the criterion space. The nadir point gives information about a range of all efficient solutions. Besides this, the anti-ideal point gives information about the range of the admissible set. If the selection of compromise solutions is considered from the Pareto set, the nadir point is more suitable than the anti-ideal point for the criterion space normalization. As a consequence, the compromise solution can be selected.

We suggest selecting both p = 2 and the nadir point to determine the compromise solution from the set of Pareto-optimal elements of two or three criteria. The other approach is based on many-objective analysis with seven criteria, where an extended analysis is needed because of greater sensitivity of compromise solutions to the parameter p and the choice of the normalization point. In this way, a decision-maker can find some trade-off solutions after introducing a limit on the size of the representation of Pareto-optimal solutions, which is the specificity of solving optimization problems with many criteria.

A very important experiment is the comparison of the quality of the solutions designated by the proposed method with those of other methods. The evaluations of the Pareto placements of virtual machines are presented in Table 3. Benchmark855 was used for this purpose, too. We consider fifteen non-dominated solutions obtained by MQPSO, Non-dominated Sorting Genetic Algorithm II (NSGA-II) [37], Multi-criteria Genetic Programming (MGP) [38], Multi-criteria Differential Evolution (MDE) [4] and Multi-criteria Harmony Search (MHS) [39].

To compare 15 solutions provided by five metaheuristics, we determined a new ideal point = (385; 2193; 10,242; 9500; 18; 191; 0.92). In addition, the new nadir point N* = (1405; 36,187; 33,259; 14,838; 11; 112; 0.83) was used for normalization. When analyzing the computational load of each algorithm, it was assumed that the population consists of 100 particles (MQPSO), chromosomes (MDE, MHS) or compact programs (MGP). The population number is set to 10,000 and the maximum computation time is 30 min. In MQPSO, the values of individual coefficients were as follows: c0 = 1, c1 = 2, c2 = 2, vmax = 1. In the differential evolution algorithm MDE, q = 0.9 and Cp = 0.4 were assumed. Moreover, an additional type of mutation was used, based on the multi-criteria tabu search algorithm [2]. In contrast, in the harmony search algorithm MHS, the mutation rate pm was 0.1 and the crossover rate pc was 0.01. For genetic programming MGP, it was assumed that the maximum number of nodes in the program tree was 50, and the mutation rate and crossover rate were the same as for MHS.

An optimal swarm size is problem-dependent. If the number of particles in the swarm is greater, the initial diversity is larger, and a larger search space is explored. On the other hand, more particles increase the computational complexity, and the PSO exploration leads to a parallel random search. We observed that more particles lead to fewer swarms to reach the Pareto-optimal solutions, compared to a smaller number of particles. Our experiments confirmed that the MQPSO has the ability to find Pareto-optimal solutions with swarm sizes of 60 to 100 particles. Each run was repeated 10 times, and Table 3 lists the best solutions obtained with each algorithm. The p-norm values for the best 15 Pareto-optimal VM placements were sorted by L2. Moreover, the values for the other p-norms were calculated, too.

Based on the obtained data, it can be concluded that the MQPSO method is better than the other methods in terms of the number of Pareto-optimal solutions among the first 12. The three solutions closest to the ideal point were determined with MQPSO. Another important argument is that the average distance from the ideal point is the smallest for the effective solutions provided by the MQPSO method. NSGA-II is the second-best metaheuristic, with an average distance of 1.39 versus 1.16 achieved by MQPSO. If we consider the p-norm for p = 1, the compromise solution is the same. Also, the three solutions nearest to the ideal point are produced by MQPSO. On the other hand, solution No. 10 provided by NSGA-II and solution No. 14 determined by MDE are very close to the compromise solution in terms of Lp→∞. Moreover, the algorithm MQPSO has great potential to be extended in the near future owing to the development of quantum computers and quantum algorithm theory.