1. Introduction

Self-driving vehicles are the next major advancement in the automotive industry. Among the core systems that enable autonomy in vehicles are lane-following algorithms, which keep the vehicle centered within the lane by using lane detection techniques to detect the pavement markings along the road. According to SAE International (Society of Automotive Engineers), vehicle autonomy can be broken down into six levels, ranging from SAE Level Zero to SAE Level Five (Figure 1).

Although our algorithms are capable of steering, braking, accelerating, and lane centering, we do not target any of the SAE levels of driving automation, as our algorithms forgo object detection, automatic emergency braking, and warning systems in favor of researching robust lane detection and lane centering using only computer vision. Lane detection uses computer vision to detect the lane by continuously estimating the contours of the lane markings while the vehicle is in motion, whereas lane centering uses these contours as input to monitor the position of the lane markings relative to the position of the vehicle. As seen in Figure 1, steering assistance and lane centering are two of the essential systems that enable autonomy in vehicles, and since many of these systems rely on computer vision, the lane detection and lane centering problem reduces in part to a computer vision problem. Thus, this is the focus of our research.

There has been a growing recognition that theoretical results cannot capture the nuances of real-world algorithmic performance, and many have started to view experimentation as providing a pathway from theory to practice [2]. In this work, we aim to experimentally analyze the strengths and weaknesses of the Contour Line Detection [3], Hough Line Transform [4], and Spring Center Approximation [5] algorithms implemented in Python.

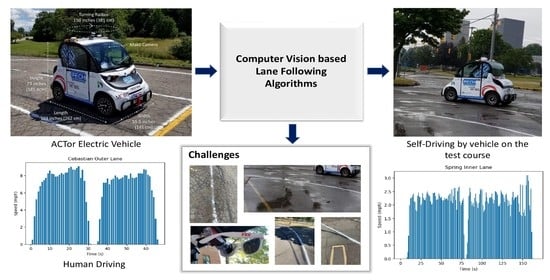

In our empirical analysis, we found that a robust lane-following algorithm must be able to handle fading, broken-up, and missing lane markings under varied weather conditions. It must also be resilient to environmental obstructions, such as shadows and reflections on the road, that may prevent the lane from being detected. In this work, we tackle these challenges in the lane-following and lane-centering algorithms we developed, analyzed, and evaluated using a real street-legal electric vehicle. The key to our algorithms lies in the region of interest, the filters, and the yaw rate conversion function we designed. The yaw rate conversion function takes the coordinates of the centroid used by the lane-centering algorithm and converts them into yaw rates that the vehicle uses as steering input. Together, these components allow our algorithms to work under varied weather conditions and environmental challenges. Our solutions were designed and implemented in Python using the Robot Operating System (ROS) and the OpenCV library.
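The exact form of the yaw rate conversion function is not given here. As a rough illustration only, the sketch below maps the horizontal offset of the centroid from the image center to a yaw rate using a proportional gain and a saturation limit. The function name `centroid_to_yaw_rate`, the gain, the sign convention (positive yaw rate means turning left), and the 0.5 rad/s limit are hypothetical assumptions, not the values used on the vehicle.

```python
def centroid_to_yaw_rate(cx: float, image_width: int,
                         gain: float = 1.0, max_yaw_rate: float = 0.5) -> float:
    """Map a centroid x-coordinate (pixels) to a yaw rate (rad/s).

    Hypothetical proportional mapping: the centroid's offset from the image
    center is normalized to [-1, 1], scaled by `gain` and `max_yaw_rate`, and
    clamped to +/- `max_yaw_rate`. Positive yaw rate is assumed to mean "turn left".
    """
    center = image_width / 2.0
    # Normalized horizontal error: -1 at the left edge, +1 at the right edge.
    error = (cx - center) / center
    # A centroid to the right of center calls for a right turn (negative yaw rate).
    yaw_rate = -gain * max_yaw_rate * error
    # Saturate so that large errors cannot command an excessive steering rate.
    return max(-max_yaw_rate, min(max_yaw_rate, yaw_rate))
```

For a 640-pixel-wide image, a centroid at x = 480 yields -0.25 rad/s with the default gain. A purely proportional law is the simplest possible choice. A real controller would likely need its gain tuned for the vehicle's speed and camera placement.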

All of our algorithms work in a similar way: they follow the lane by chasing a hypothetical blob that is always centered with respect to the lane. The coordinates of the blob are converted into yaw rates, which the drive-by-wire system uses to control the steering of the vehicle. Thus, our goal is to implement this hypothetical blob so that the algorithms can always rely on it being in the middle of the lane.

The first algorithm does this by computing the centroid of the largest contour of the edge line. This centroid is the middle point of the edge line, so we shift its position until it lies in the middle of the lane relative to the edge line. The coordinates of the shifted centroid are then converted into a yaw rate for steering.

The second algorithm instead uses the Hough Line Transform to detect the solid white lines on both sides of the lane. It then draws a hypothetical or “fictitious” line between them and computes that line's centroid to determine the position of the blob.

The third algorithm uses the Hough Line Transform to detect the road lane markings and then draws a series of rays that dynamically change in length to fit the lane. This algorithm starts with the blob centered in the lane and uses the lengths of the rays to compute spring forces acting on the blob, which keep it in the middle of the lane.
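The spring-based idea behind the third algorithm can be illustrated with a small, self-contained sketch. The sketch assumes that each ray behaves like a Hooke's-law spring with zero rest length, stretched from the blob to the first lane-marking pixel the ray hits. Under that assumption, the horizontal components of the spring forces cancel only when the blob sits midway between the markings. The binary mask, the ray angles, the stiffness `k`, the step size, and the function names `cast_ray` and `spring_center` are all hypothetical. This is not the authors' implementation, which works on Hough lines detected with OpenCV.

```python
import math


def cast_ray(mask, x, y, angle, step=0.5, max_len=1000.0):
    """Distance from (x, y) to the first lane pixel along `angle` (radians).

    `mask` is a list of rows of 0/1 values, where 1 marks a lane-marking pixel.
    Angle 0 points right, pi points left, and negative angles point up the
    image (toward smaller row indices, i.e. ahead of the vehicle).
    """
    dx, dy = math.cos(angle), math.sin(angle)
    height, width = len(mask), len(mask[0])
    length = 0.0
    while length < max_len:
        length += step
        col = math.floor(x + dx * length + 0.5)  # round to the nearest pixel
        row = math.floor(y + dy * length + 0.5)
        if not (0 <= row < height and 0 <= col < width):
            return length  # the ray left the image without hitting a marking
        if mask[row][col]:
            return length
    return max_len


def spring_center(mask, x0, y, k=0.1, iterations=200, tol=1e-3,
                  angles=(0.0, -math.pi / 6, math.pi, -5 * math.pi / 6)):
    """Slide a blob along row `y` until the spring forces on it balance.

    Each ray acts as a zero-rest-length spring from the blob to the point where
    the ray hits a marking, so it pulls with force k * length along the ray.
    Only the horizontal components are used; they cancel when the blob is
    midway between the left and right markings.
    """
    x = float(x0)
    for _ in range(iterations):
        fx = sum(k * cast_ray(mask, x, y, a) * math.cos(a) for a in angles)
        x += fx  # relaxation step: move in proportion to the net force
        if abs(fx) < tol:
            break
    return x
```

Consider a 20-column mask with markings in columns 2 and 17. If the blob starts at column 5 on row 25, it moves to within a fraction of a pixel of column 9.5. The residual error comes from the discrete ray stepping. Pulling with a force proportional to ray length means that the farther wall always pulls harder, which is what drives the blob back toward the center.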

To make a fair comparison between the driving performance of the algorithms and that of a licensed human driver, we set a speed limit. The speed limit ensures that the algorithms can be tested safely: because the test course is circular and compact, its turns are naturally sharp (see Figure 2). After testing, we concluded that seven miles per hour is the fastest speed the algorithms can safely handle while driving laps around the test course. We arrived at this value on the basis that, on average, the fastest a licensed human driver could safely complete a lap around the course without touching the lane markings was eleven miles per hour. This value was in line with our expectations, since in simulation the algorithms worked consistently at up to ten miles per hour on the same test course. However, even in simulation, achieving speeds higher than ten miles per hour proved difficult because of the tight turns. The human driver and the algorithms are tested and evaluated under the same conditions, and we note the advantages each has over the other. For example, the algorithms were better than the human driver at maintaining a consistent speed while lane-following. This benchmark allows us to gauge where our algorithms stand in comparison to a human driver and to pinpoint the areas that need the most improvement to close the performance gap.

Thus, the goal of this research is to develop, analyze, and evaluate self-driving lane-following and lane-centering algorithms in simulation and in reality using street-legal electric vehicles in a test course with various challenges. In our design, we intend to account for sharp curves, narrow parking lot lines, unmaintained roads, and varied weather conditions. Furthermore, we aim to compare the performance of the algorithms to each other and to a licensed human driver under a speed limit. The main contributions and novelty of this research work are summarized as follows:

We propose multiple computer vision-based lane-following algorithms that are tested on a full-scale electric vehicle in a controlled testing environment.

The real-world testing environment has sharp curves, faded or narrow lane markings, and unmaintained roads with exposure to the weather. The algorithms have been optimized to work under these conditions. Since computer vision-based lane-following algorithms that rely on just a camera have not been evaluated under these circumstances before, our algorithms serve as a baseline for navigating unmaintained roads under varied weather conditions. Our most reliable algorithms had a success rate of at least 70% with some lane positioning infractions.

We evaluate the driving data of the algorithms and of a licensed human driver using a custom performance evaluation system. We then analyze and compare the two under a specified speed limit using reports from the vehicle’s drive-by-wire system. The algorithms are found to have better speed control than the human driver, whereas the human driver outperformed the algorithms when driving at faster speeds while keeping to the lane.

We test the performance of algorithms written in Python as opposed to a compiled programming language such as C++.

The remainder of this paper is organized as follows:

Section 2 reviews state-of-the-art research on lane-following algorithms for self-driving vehicles that use only a camera and computer vision. This section covers the main gaps identified in this research and explains the role of our work in expanding knowledge in these areas.

Section 3 elaborates on the simulation, the physical testing environment, and the development of the lane-following algorithms. Then, the specifics of the challenges posed by the weather and unmaintained roads are illustrated in Section 4.

Section 5 discusses the results of evaluating the algorithms in real time on the course using a street-legal electric vehicle. This section also compares the performance of the algorithms to that of a human driver. We reiterate the main results of this work and conclude the manuscript by identifying the limitations and future avenues of work in Section 6.

2. Review of Literature

In most other research on lane-following algorithms for self-driving vehicles that use only a camera and computer vision, the algorithms are tested only in simulation. Even in simulation-based work, the lane detection is overlaid on an image or video of the road. A simulated vehicle is not used to understand the performance of lane detection algorithms at different speeds, nor are the kinematics of the vehicle taken into account. Please refer to Table 1 for a summary of the literature review detailing the highlights of the papers and the research gaps identified.

In [6], the approaches in previous literature were categorized into three classes: area-based methods, edge-based methods, and area-edge-combined and algorithm-combined methods. In area-based methods, road detection is treated as a classification problem, and regions in the image are classified as road or non-road. In edge-based methods, an edge map of the road scene is obtained, and a matching procedure against a predefined geometric model is then carried out to detect the lane. In algorithm-combined methods, several methods are run in parallel to increase detection performance. Under this classification, our methods are edge-based, and thus our prior art search covers papers in this category.

In [7], the authors test their self-driving algorithm, which uses the Hough Transform with hyperbola fitting, on real vehicles. However, the authors mention that the algorithm works only on straight and slightly curved roads, and that lane detection had problems under certain lighting conditions. Our work aims to address these limitations by enabling the vehicle to take sharp turns within a set speed limit under different lighting conditions, using only a camera and computer vision techniques.

The authors of [8,9] used several computer vision techniques for lane detection. In [8], the authors compared thresholding, warping, and pixel summation against Gaussian Blur, Canny Edge Detection, and the Sliding Window Algorithm, and found that the second approach was more accurate. In [9], the authors used the HLS (Hue, Lightness, Saturation) color space, a perspective transform, and the sliding window algorithm. We did not attempt the sliding window algorithm in this research work, as the basic sliding window algorithm cannot detect dashed lines and sharp curves. We attempted different combinations of the image processing pipelines used in [8,9]. However, on the real vehicle, we observed minimal performance improvement relative to the increase in computational complexity. We optimized the image processing pipeline for speed and efficiency by using only the necessary techniques (refer to Section 3 for further details) to avoid processing delays.

Table 1. Literature Review.


| Approach | Papers | Purpose | Brief Description | Research Gaps Identified |
|---|---|---|---|---|
| Deep Learning Approaches | [10] | Lane Detection | A spatial CNN approach was compared to the sliding window algorithm. Tested in simulation. | Authors found that classical computer vision had considerably lower execution time than deep learning and required no extra specialized hardware. |
| Deep Learning Approaches | [11] | Lane Detection | LaneNet [12] was tested on a real vehicle. | Only lane centering without steering angle calculation was performed with deep learning. Moreover, because our test course is predefined, any deep learning-based lane-centering solution would have resulted in overfitting. |
| Deep Learning Approaches | [13] | Lane Centering and Steering Control | Transfer learning with an inception network was used for lane centering and steering angle calculation. Tested on a real vehicle. | On average, the model had an error of 15.2 degrees, which would not have worked for our course with its sharp turns. |
| Classical Computer Vision Approaches | [8,9,14,15,16] | Lane Detection | Standard Hough Line Transform, Sliding Window algorithm, Kalman Tracking, RANSAC algorithm. | These algorithms do not work well on sharp curves, in varied weather conditions, or on poorly maintained roads. They also have not been tested in a real test environment. |
| Classical Computer Vision Approaches | [17] | Lane Detection | Kalman Tracking with the RANSAC algorithm for post-processing. Tested under varied, challenging weather conditions. | The future work of the paper included explicitly fitting the curve to the lane boundary data. |
| Classical Computer Vision Approaches | [18] | Lane Centering and Steering Control | A nonlinear path tracking system for steering control was presented and tested in simulation. | |
| Classical Computer Vision Approaches | [7] | Lane Detection, Centering, and Steering Control | Hough Transform with hyperbola fitting. Tested on real vehicles. | Only works on slight curves, straight roads, and certain lighting conditions. |
| | This work | Lane Detection, Centering, and Steering Control | Blob Contour Detection, Hough Line Transform, and Spring Center Approximation methods. Tested on a real vehicle in a test course with tight turns, varied weather, and poorly maintained road conditions. | Only tested up to 7 miles per hour. The algorithms do not meet SAE J3016 standards because they lack object detection, automatic emergency braking, and warning systems. |

In [15], the authors propose steerable filters to combat problems due to lighting changes, road marking variation, and shadows. These filters appear well suited to combating the shadow problem, and especially to tuning to a specific lane angle. For lane tracking, the authors opt for a discrete-time Kalman Filter. We did not adopt this approach in our work. The Kalman filter provides a recursive solution of the least squares method and is incapable of detecting and rejecting outliers, which sometimes leads to poor lane tracking, as stated in [6].

In [14], two different approaches were taken depending on whether the road was curved or straight. For a straight road, the lane was detected with the Standard Hough Transform. For curved roads, a complete perspective transform was applied, followed by lane detection by scanning the rows of the top-view image. As an improvement on this, the authors of [19] adopt a generalized curve model that can fit both straight and curved lines. Their model uses an improved RANSAC (Random Sample Consensus) algorithm, which applies the least squares technique to estimate lane model parameters based on feature extraction.
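To make the RANSAC idea concrete, the sketch below fits a single straight line to noisy lane-pixel coordinates. It repeatedly samples two points, counts the inliers within a distance threshold, and refits the best consensus set with least squares. This is the generic algorithm, not the improved variant or the generalized curve model of [19]. The threshold, the iteration count, the fixed seed, and the line model x = a*y + b are assumptions. That model was chosen because lane markings are close to vertical in a forward-facing image.

```python
import random


def fit_least_squares(points):
    """Ordinary least-squares fit of x = a*y + b to (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points)
    sxy = sum((y - mean_y) * (x - mean_x) for x, y in points)
    a = sxy / syy if syy else 0.0
    return a, mean_x - a * mean_y


def ransac_line(points, iterations=200, threshold=1.0, seed=0):
    """Fit x = a*y + b with RANSAC; return (a, b, inliers)."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if y1 == y2:
            continue  # a pair on the same row cannot define x = a*y + b
        a = (x2 - x1) / (y2 - y1)
        b = x1 - a * y1
        # Horizontal residual as a simple stand-in for point-to-line distance.
        inliers = [(x, y) for x, y in points if abs(x - (a * y + b)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if len(best_inliers) < 2:
        raise ValueError("no consensus set found")
    # Refit on the consensus set only, so outliers do not bias the estimate.
    a, b = fit_least_squares(best_inliers)
    return a, b, best_inliers
```

Least squares alone would be pulled toward any stray bright pixels. Running it only on the consensus set is what gives RANSAC its robustness to outliers, which is the property the Kalman-based tracker discussed above lacks.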

In [10], the authors propose a computer vision algorithm called HistWind for lane detection. This algorithm involves filtering and ROI (region of interest) cropping, followed by histogram peak identification and then the sliding window algorithm. HistWind is compared with a Spatial CNN (Convolutional Neural Network), and the results are comparable for both, although HistWind has a considerably lower execution time.

In [13], the ACTor (Autonomous Campus TranspORt) vehicle was used to test a deep learning-based approach to lane centering using a pretrained inception network and transfer learning. However, since this approach is computationally intensive and requires specialized hardware, we did not attempt deep learning-based solutions in our work. Additionally, because the test course is predefined, any deep learning-based solution would have resulted in an overfitted model. A computer vision-based approach was chosen for this work because it is usually simpler and faster than techniques that require specialized hardware.
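Histogram peak identification, the stage HistWind runs before its sliding windows, is simple enough to sketch in a few lines. The lane-mask pixels in each column of the lower part of the image are summed. The strongest column on each side of the image center then gives starting x-positions for the left and right lane lines. The bottom-half default, the left/right split at the image midpoint, and the function name `lane_base_positions` are assumptions for illustration, not details taken from [10].

```python
def lane_base_positions(mask, start_row=None):
    """Return (left_x, right_x): the peak columns of a lane-pixel histogram.

    `mask` is a list of rows of 0/1 values. Only rows from `start_row`
    downward are counted (default: the bottom half of the image), since
    markings close to the vehicle tend to be the most reliable.
    """
    height, width = len(mask), len(mask[0])
    if start_row is None:
        start_row = height // 2
    # Column histogram: number of lane pixels in each column of the lower rows.
    histogram = [sum(mask[r][c] for r in range(start_row, height)) for c in range(width)]
    midpoint = width // 2
    # The strongest column on each side of the midpoint (first one wins on ties).
    left_x = max(range(midpoint), key=lambda c: histogram[c])
    right_x = max(range(midpoint, width), key=lambda c: histogram[c])
    return left_x, right_x
```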

From the above papers, we have identified that the improved RANSAC algorithm [19], Kalman Tracking [19], the sliding window algorithm [19], and spline models such as [20] detect and trace the exact curvature of the road boundary. Of these, as elaborated above, the RANSAC algorithm seems promising, as seen in [17]. Taking into consideration the characteristics of the various lane models and the needs of lane detection in a harsh, real-time environment, we propose fast and efficient lane-keeping algorithms. These algorithms use Contour Detection (which traces the exact curvature of the road) and the Hough Line Transform (which linearly approximates the curvature of the road).
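Because the Hough Line Transform is central to two of the algorithms, a bare-bones version of its voting step may help readers unfamiliar with it. Every edge pixel votes for all (rho, theta) lines passing through it, and peaks in the accumulator correspond to straight lines. In practice, OpenCV's `cv2.HoughLines` or `cv2.HoughLinesP` would be used instead. The pure-Python function `hough_lines`, its 1-degree angular resolution, and its vote threshold are illustrative assumptions.

```python
import math


def hough_lines(points, width, height, theta_steps=180, min_votes=10):
    """Return (rho, theta_degrees, votes) for accumulator cells with enough votes.

    Lines are parameterized as rho = x*cos(theta) + y*sin(theta), with theta
    sampled in equal steps over [0, 180) degrees and rho rounded to whole pixels.
    """
    max_rho = int(math.ceil(math.hypot(width, height)))
    # accumulator[t][rho + max_rho] counts votes; rho can be negative.
    accumulator = [[0] * (2 * max_rho + 1) for _ in range(theta_steps)]
    for x, y in points:
        for t in range(theta_steps):
            theta = math.radians(t * 180.0 / theta_steps)
            rho = int(round(x * math.cos(theta) + y * math.sin(theta)))
            accumulator[t][rho + max_rho] += 1
    lines = []
    for t, row in enumerate(accumulator):
        for index, votes in enumerate(row):
            if votes >= min_votes:
                lines.append((index - max_rho, t * 180.0 / theta_steps, votes))
    # Strongest lines first; ties keep their accumulator order.
    return sorted(lines, key=lambda line: -line[2])
```

A vertical marking at x = 5 produces its strongest cell at rho = 5, theta = 0 degrees. Neighboring angles receive nearly as many votes, which is why practical implementations apply non-maximum suppression or a probabilistic variant. The straight-line parameterization is also why this transform can only linearly approximate a curve.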

5. Results

An evaluation program was used to collect the total time, average speed, and speed infractions of each successful run of each method. An external evaluator recorded the number of times the vehicle either touched a lane line or drifted outside the lane. One of the Teaching Assistants was arbitrarily chosen as the evaluator and assigned to follow the vehicle across the test course. On a paper version of the track, the evaluator marked each place where the vehicle touched a line, departed from the lane, or made a dead reckoning turn error. The weather conditions at the time and any additional comments were also noted. Because the same person evaluated all of the algorithms, the marking key, although left to the evaluator’s discretion, was applied uniformly. In addition, rosbags were recorded from the vehicle’s drive-by-wire system to corroborate and verify the evaluator’s sheets.
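The summary statistics produced by the evaluation program can be sketched from a time series of speed samples, such as those recorded in the rosbags. The function below is not the authors' evaluation code; it rests on several assumptions. Its name, `evaluate_run`, is hypothetical, and it assumes the samples arrive as (time in seconds, speed in miles per hour) pairs. It integrates speed with the trapezoidal rule and classifies each interval as over or under the limit by its mean speed.

```python
def evaluate_run(samples, speed_limit_mph=7.0):
    """Summarize one run from (time_s, speed_mph) samples sorted by time.

    Returns the total time (s), the average speed (mph), and the distance
    (miles) driven above and at-or-below the speed limit. Each interval between
    consecutive samples is integrated with the trapezoidal rule and assigned to
    "over" or "under" according to its mean speed.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    total_time = samples[-1][0] - samples[0][0]
    if total_time <= 0:
        raise ValueError("samples must span a positive time interval")
    over = under = 0.0
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        mean_speed = (v0 + v1) / 2.0
        miles = mean_speed * (t1 - t0) / 3600.0  # mph * seconds -> miles
        if mean_speed > speed_limit_mph:
            over += miles
        else:
            under += miles
    return {
        "total_time_s": total_time,
        "average_speed_mph": (over + under) / (total_time / 3600.0),
        "miles_over_limit": over,
        "miles_under_limit": under,
    }
```

Averaging by distance over total time, rather than taking the mean of the raw samples, keeps the result correct when the sampling rate is irregular.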

A run is defined as a failure if the human driver has to brake manually to stop the vehicle from hitting the curb or leaving the predetermined course. A lane departure after which the algorithm can no longer follow the lane is also considered a failure. In the dead reckoning turn, turning too far to the right is a failure in both the inner and the outer lane. In the outer lane, the vehicle hits the curb. In the inner lane, the algorithm loses the middle dashed line or the outer line, depending on the region of interest used. In both cases, the run fails because the algorithm cannot continue following the lane.

Table 3 below shows the recorded data for the official runs of each algorithm. Each run, whether successful or not, was recorded as a rosbag file for future analysis, and an external evaluator was responsible for noting the results. Refer to Appendix A for the details of each official run. The average success rate of each algorithm was determined over its total number of recorded runs.

The official runs of each algorithm on the inner and outer lanes, recorded above, were processed into speed-time graphs. These graphs were then compared with the data from the best-performing human driver. Speed-time graphs for the best human drivers are shown in Figure 24; refer to Appendix A for more graphs.

The speed control of the algorithms is noticeably more consistent than that of the human drivers, as seen in Figure 25. The bumps in the graph result from the vehicle making corrections for bumps and inconsistencies in the road. The sharp peaks and troughs in the graph result from losing a Hough line in the mask and then picking it up again.

The human drivers also tended to go over the speed limit in many cases, suggesting that it is difficult for humans to maintain a consistent speed at all times. On average, the human drivers were able to drive faster than the algorithms, close to the set speed limit of seven miles per hour. However, the algorithms were not far off. The Hough algorithm completed the outer lane laps at a maximum speed of 6.7 miles per hour and an average speed of 4.476 miles per hour, consistently for over four tests, which is comparable to the average speed of the human drivers.

The human drivers would often exceed the speed limit. Once they noticed the infraction on the vehicle’s speedometer, they would correct it by slowing down, and this pattern repeated throughout the laps over large distances. By contrast, in the outer lane, the Hough algorithm covered a much smaller distance with inconsistent changes in speed. Overall, the human drivers were better at keeping to the lane at higher speeds but struggled to keep a consistent speed compared with the self-driving algorithms.

The algorithms are still quite far from human performance in terms of vehicle control at high speeds, though the Spring and Hough algorithms proved promising. In the authors’ recorded runs, the average speed ranged from 3.8 to 6.9 miles per hour (across the authors attempting the course) when following the speed limit. The Hough algorithm achieved an average speed of 4.476 miles per hour for the outer lane (and 4.358 miles per hour in another recorded run), which falls within the range of the human runs.

All of the algorithms faced some difficulty in direct sunlight. The Hough lines in particular depend on the accuracy of the HSV (Hue, Saturation, Value) mask, which varies with weather and lighting conditions, and the Hough algorithm performed best in overcast weather. We observed that the Spring algorithm performed better in the inner lane than in the outer lane. We attribute this to the sharper curves of the inner lane, which bring the lane markings closer to the vehicle, where the algorithm detects them better.
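Because the Hough lines depend on the HSV mask, a small example of what such a mask does may be useful. Pixels with low saturation and high value (brightness) are kept as candidate white lane markings. The sketch uses Python's standard `colorsys.rgb_to_hsv` in place of OpenCV's `cv2.cvtColor` and `cv2.inRange`. The function name `white_lane_mask` and both thresholds are hypothetical. The example also hints at why direct sunlight is troublesome: a brightly lit, desaturated gray pavement pixel can pass the same thresholds as white paint.

```python
import colorsys


def white_lane_mask(image, max_saturation=0.2, min_value=0.75):
    """Return a 0/1 mask marking pixels that look like white lane paint.

    `image` is a list of rows of (r, g, b) tuples with 0-255 channels.
    A pixel is kept when its HSV saturation is low (close to gray or white)
    and its value (brightness) is high. The thresholds are illustrative only.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            # colorsys works on floats in [0, 1] and returns (h, s, v) in [0, 1].
            _, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            mask_row.append(1 if s <= max_saturation and v >= min_value else 0)
        mask.append(mask_row)
    return mask
```

Consider a row of white paint, dark asphalt, sunlit gray pavement, and yellow paint. The mask keeps both the paint and the sunlit pavement, and rejects the asphalt and the yellow marking. This is one way excessive luminosity can inject false edges into the Hough step.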

Table 4 indicates the most difficult turn for each algorithm, and Figure 26 provides the nomenclature needed to understand the table.

6. Conclusions

This research presented three different algorithms that autonomous vehicles may use to navigate both inner and outer roadway lanes. Real-world driving data and graphs showed that the human driver was better at staying within the lane, while the algorithms excelled at maintaining a consistent speed. We tested the algorithms on the ACTor self-driving platform at the highest speeds they could reach under the seven-mile-per-hour speed limit while still achieving their highest level of accuracy. In the end, during testing and demonstration, all three algorithms were able to complete two laps of the course. Some algorithms performed better than others, but ultimately, all of them completed the laps. Based on the results from all of the tables, we reached the conclusions summarized in Table 5.

A high average speed, together with a minimal number of line touches, suggests good speed and centering control, since it means the car did not have to slow down as much for turns. Existing lane-following algorithms are built for smooth, well-marked roadways [7,9]. In this research work, despite numerous obstacles such as tight curves and unmaintained roads, our algorithms were able to navigate the test course. These algorithms serve as a baseline for navigating challenging sections of road.

Our work aims to enable a vehicle to drive under varied weather and road conditions without any human intervention within the bounds of a predefined course when the self-driving feature is enabled. With respect to the SAE definition of autonomy (refer to Figure 1), our work advances the computer vision aspect of the self-driving research required to achieve full autonomy. However, we do not target a specific SAE level, as our algorithms lack object detection, automatic emergency braking, and warning systems. Hence, they do not comply with the standards defined in SAE J3016. Instead, our work focuses on making lane detection algorithms work under less-than-ideal road conditions.

There are opportunities for future improvement of this study. For example, collecting more data in the form of rosbags could yield more accurate measurements of the algorithms’ performance. We could also test the effectiveness of our filtering process under snowy conditions, as long as the lanes are visible.

Future directions of this research include a fully automated function to evaluate the performance of self-driving algorithms. We currently use an automated evaluation function to compute the time taken for the laps, the average speed, and the distance traveled above and below the speed limit. However, a fully automated evaluation system that also records the weather conditions and the number of line touches and departures could replace human evaluation.

In addition, HDR (High Dynamic Range) imaging algorithms could be used to improve the filtering pipeline used in this research, as excessive sunlight and luminosity were a challenge that led to a few failure cases (refer to Appendix A). Lane detection using deep learning algorithms such as LaneNet [12], followed by lane-centering algorithms, could also be explored further.

The evaluation data files (rosbags) recorded while the algorithms were driving have been saved for future research. The implementations of the algorithms are also open source and available on GitHub.

In the future, we intend to develop algorithms that will enable vehicles to travel faster and more accurately, ideally at a pace equal to that of humans. We also intend these algorithms to deliver reliable data regardless of the weather, road conditions, or amount of lighting in the environment. We believe our work brings self-driving research one step closer to full automation.