Machine Learning for Automated Classification of Abnormal Lung Sounds Obtained from Public Databases: A Systematic Review

Abstract

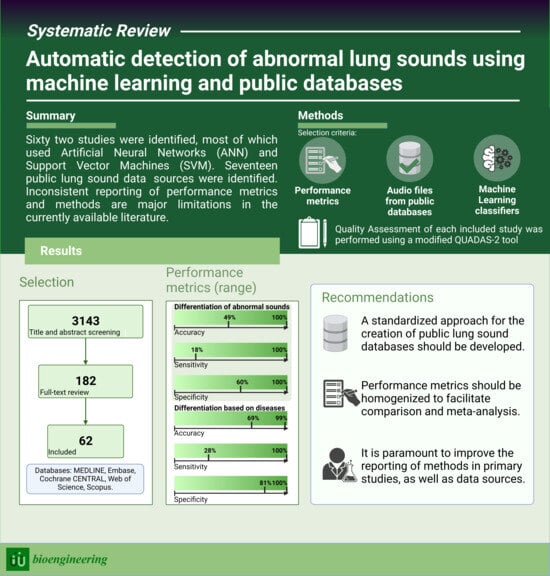

1. Introduction

1.1. Context and Objectives

1.2. Process of Automated Abnormal Lung Sounds Classification

1.2.1. Lung Sound Recording

1.2.2. Audio Preprocessing

1.2.3. Feature Extraction

1.2.4. Classification

1.3. Public Lung Sound Databases

2. Materials and Methods

2.1. Bibliographic Search

2.2. Eligibility Criteria

2.3. Article Selection

2.4. Data Extraction

2.5. Quality Assessment

3. Results

3.1. Sources of Lung Sound Recordings

3.2. Features of Lung Sounds Databases

3.3. Types of Sounds Analyzed

3.4. Classification Models

| Name | Features | Refs. |
|---|---|---|
| ANN (CNN, RNN, DNN, DBN, MLP) | Inspired by networks of neurons, ANN models contain multiple layers of computing nodes that operate as nonlinear summing devices. These nodes communicate with each other through connection lines; the weight of each line is adjusted as the model is trained [56]. | [18,35,36,38,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91] |
| SVM | This maximal margin classifier seeks the hyperplane in an N-dimensional feature space that separates the classes of data points with the largest margin [92]. | [14,37,59,63,65,66,78,87,93,94,95,96,97,98,99] |
| k-NN | This classifier assigns each unlabeled data point to the class that is most common among its most similar (nearest) labeled data points [100]. | [14,39,59,63,65,98,99] |
| DT | This technique classifies data by posing questions regarding the item’s features. Each question is represented in a node, and every node directs to a series of child nodes, one for each possible answer, forming a hierarchical tree [101]. | [59,87,98,102,103,104] |
| DA | This supervised learning technique transforms the features of a data point into a lower-dimensional space, thereby maximizing the ratio of the between-class variance to the within-class variance, which results in maximized class separability [105]. | [87,106,107] |
| RF | Random Forest is a classifier that builds multiple decision trees by using random samples of data points for each tree and random samples of the predictors; the resulting forest provides fitted values more accurate than those of a single tree [108]. | [78,109] |
| GMM | Mixture models are derived from the idea that any distribution can be expressed as a mixture of distributions of known parameterization (such as Gaussians). An optimization technique (such as expectation maximization) can then be used to estimate the parameters of each component distribution [110]. | [34,35,111] |
| HMM | The hidden Markov model creates a sequence of GMMs to explain the input data. Its main difference from GMM is that it takes account of the temporal progression of the data, whereas GMM treats each sound as a single entity [112]. | [111,113,114,115] |
| GB | The main idea behind boosting techniques is to add a series of models to an ensemble sequentially. At each iteration, a new model is trained with respect to the error of the whole ensemble learned so far [116]. | [99,117] |
| LR | Logistic regression is a technique that describes and tests hypotheses about relationships between a categorical (outcome) variable and one or more categorical or continuous predictor variables [118]. | [63,119] |
| NB | This supervised learning algorithm is based on Bayes' theorem and works on probability distributions. The features present in the dataset are used to determine the outcome, and each feature is assumed to be independent of the other features given the class [120]. | [39] |
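
The k-NN entry in the table above can be illustrated with a minimal sketch. It assumes Euclidean distance and a simple majority vote, and it uses hypothetical two-dimensional feature vectors and labels that are not taken from any of the reviewed studies; the function name `knn_predict` is likewise illustrative only.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every labeled point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbours and count their labels.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors (illustrative values, not real lung sound features).
train_points = [
    (0.10, 0.20), (0.20, 0.10), (0.15, 0.25),
    (0.90, 0.80), (0.80, 0.90), (0.85, 0.75),
]
train_labels = ["normal", "normal", "normal", "wheeze", "wheeze", "wheeze"]

prediction = knn_predict(train_points, train_labels, query=(0.7, 0.8), k=3)
print(prediction)
```

In practice the feature vectors would come from the feature extraction step (for example, spectral or cepstral coefficients), and the choice of k and of the distance measure would need to be tuned on validation data.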

3.5. Performance Metrics

3.6. Quality Assessment

4. Discussion

4.1. Clinical and Scientific Relevance

4.2. Opportunities and Barriers

4.3. Strengths and Limitations

4.4. Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Labaki, W.W.; Han, M.K. Chronic respiratory diseases: A global view. Lancet Respir. Med. 2020, 8, 531–533. [Google Scholar] [CrossRef] [PubMed]

- Wipf, J.E.; Lipsky, B.A.; Hirschmann, J.V.; Boyko, E.J.; Takasugi, J.; Peugeot, R.L.; Davis, C.L. Diagnosing pneumonia by physical examination: Relevant or relic? Arch. Intern. Med. 1999, 159, 1082–1087. [Google Scholar] [CrossRef]

- Brooks, D.; Thomas, J. Interrater reliability of auscultation of breath sounds among physical therapists. Phys. Ther. 1995, 75, 1082–1088. [Google Scholar] [CrossRef] [PubMed]

- Cardinale, L.; Volpicelli, G.; Lamorte, A.; Martino, J.; Andrea, V. Revisiting signs, strengths and weaknesses of Standard Chest Radiography in patients of Acute Dyspnea in the Emergency Department. J. Thorac. Dis. 2012, 4, 398–407. [Google Scholar] [CrossRef] [PubMed]

- Hopkins, R.L. Differential auscultation of the acutely ill patient. Ann. Emerg. Med. 1985, 14, 589–590. [Google Scholar] [CrossRef]

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29. [Google Scholar] [CrossRef]

- Myszczynska, M.A.; Ojamies, P.N.; Lacoste, A.M.; Neil, D.; Saffari, A.; Mead, R.; Hautbergue, G.M.; Holbrook, J.D.; Ferraiuolo, L. Applications of machine learning to diagnosis and treatment of neurodegenerative diseases. Nat. Rev. Neurol. 2020, 16, 440–456. [Google Scholar] [CrossRef]

- Hayashi, Y. The right direction needed to develop white-box deep learning in radiology, pathology, and ophthalmology: A short review. Front. Robot. AI 2019, 6, 24. [Google Scholar] [CrossRef]

- Kim, M.; Yun, J.; Cho, Y.; Shin, K.; Jang, R.; Bae, H.-j.; Kim, N. Deep learning in medical imaging. Neurospine 2019, 16, 657. [Google Scholar] [CrossRef]

- Chen, W.; Sun, Q.; Chen, X.; Xie, G.; Wu, H.; Xu, C. Deep learning methods for heart sounds classification: A systematic review. Entropy 2021, 23, 667. [Google Scholar] [CrossRef]

- Palaniappan, R.; Sundaraj, K.; Ahamed, N.U. Machine learning in lung sound analysis: A systematic review. Biocybern. Biomed. Eng. 2013, 33, 129–135. [Google Scholar] [CrossRef]

- Reichert, S.; Gass, R.; Brandt, C.; Andrès, E. Analysis of respiratory sounds: State of the art. Clin. Med. Circ. Respirat. Pulm. Med. 2008, 2, 45–58. [Google Scholar] [CrossRef] [PubMed]

- Kandaswamy, A.; Kumar, C.S.; Ramanathan, R.P.; Jayaraman, S.; Malmurugan, N. Neural classification of lung sounds using wavelet coefficients. Comput. Biol. Med. 2004, 34, 523–537. [Google Scholar] [CrossRef] [PubMed]

- Palaniappan, R.; Sundaraj, K.; Sundaraj, S. A comparative study of the SVM and K-nn machine learning algorithms for the diagnosis of respiratory pathologies using pulmonary acoustic signals. BMC Bioinform. 2014, 15, 223. [Google Scholar] [CrossRef]

- Richeldi, L.; Cottin, V.; Würtemberger, G.; Kreuter, M.; Calvello, M.; Sgalla, G. Digital Lung Auscultation: Will Early Diagnosis of Fibrotic Interstitial Lung Disease Become a Reality? Am. J. Respir. Crit. Care Med. 2019, 200, 261–263. [Google Scholar] [CrossRef]

- Kraman, S.S.; Wodicka, G.R.; Pressler, G.A.; Pasterkamp, H. Comparison of lung sound transducers using a bioacoustic transducer testing system. J. Appl. Physiol. 2006, 101, 469–476. [Google Scholar] [CrossRef]

- Gupta, P.; Wen, H.; Di Francesco, L.; Ayazi, F. Detection of pathological mechano-acoustic signatures using precision accelerometer contact microphones in patients with pulmonary disorders. Sci. Rep. 2021, 11, 13427. [Google Scholar] [CrossRef]

- Zulfiqar, R.; Majeed, F.; Irfan, R.; Rauf, H.T.; Benkhelifa, E.; Belkacem, A.N. Abnormal respiratory sounds classification using deep CNN through artificial noise addition. Front. Med. 2021, 8, 714811. [Google Scholar] [CrossRef]

- Salman, A.H.; Ahmadi, N.; Mengko, R.; Langi, A.Z.; Mengko, T.L. Performance comparison of denoising methods for heart sound signal. In Proceedings of the 2015 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Bali, Indonesia, 9–12 November 2015; pp. 435–440. [Google Scholar]

- Li, S.; Li, F.; Tang, S.; Xiong, W. A review of computer-aided heart sound detection techniques. BioMed Res. Int. 2020, 2020, 5846191. [Google Scholar] [CrossRef]

- Barclay, V.; Bonner, R.; Hamilton, I. Application of wavelet transforms to experimental spectra: Smoothing, denoising, and data set compression. Anal. Chem. 1997, 69, 78–90. [Google Scholar] [CrossRef]

- Mondal, A.; Banerjee, P.; Tang, H. A novel feature extraction technique for pulmonary sound analysis based on EMD. Comput. Methods Programs Biomed. 2018, 159, 199–209. [Google Scholar] [CrossRef] [PubMed]

- Krishnan, S.; Athavale, Y. Trends in biomedical signal feature extraction. Biomed. Signal Process. Control 2018, 43, 41–63. [Google Scholar] [CrossRef]

- Maleki, F.; Muthukrishnan, N.; Ovens, K.; Reinhold, C.; Forghani, R. Machine learning algorithm validation: From essentials to advanced applications and implications for regulatory certification and deployment. Neuroimaging Clin. 2020, 30, 433–445. [Google Scholar] [CrossRef]

- Ramezan, C.A.; Warner, T.A.; Maxwell, A.E. Evaluation of sampling and cross-validation tuning strategies for regional-scale machine learning classification. Remote Sens. 2019, 11, 185. [Google Scholar] [CrossRef]

- Barbosa, L.C.; Moreira, A.H.; Carvalho, V.; Vilaça, J.L.; Morais, P. Biosignal Databases for Training of Artificial Intelligent Systems. In Proceedings of the 9th International Conference on Bioinformatics Research and Applications, Berlin, Germany, 18–20 September 2022; pp. 74–81. [Google Scholar]

- Rocha, B.M.; Filos, D.; Mendes, L.; Vogiatzis, I.; Perantoni, E.; Kaimakamis, E.; Maglaveras, N. A respiratory sound database for the development of automated classification. In Precision Medicine Powered by Phealth and Connected Health; Springer: Berlin/Heidelberg, Germany, 2018; pp. 33–37. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. J. Clin. Epidemiol. 2009, 62, 1006–1012. [Google Scholar] [CrossRef] [PubMed]

- Veritas Health Innovation. Covidence Systematic Review Software. Available online: www.covidence.org (accessed on 1 August 2023).

- Whiting, P.; Rutjes, A.W.; Reitsma, J.B.; Bossuyt, P.M.; Kleijnen, J. The development of QUADAS: A tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med. Res. Methodol. 2003, 3, 25. [Google Scholar] [CrossRef]

- Jayakumar, S.; Sounderajah, V.; Normahani, P.; Harling, L.; Markar, S.R.; Ashrafian, H.; Darzi, A. Quality assessment standards in artificial intelligence diagnostic accuracy systematic reviews: A meta-research study. NPJ Digit. Med. 2022, 5, 11. [Google Scholar] [CrossRef]

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536. [Google Scholar] [CrossRef]

- R.A.L.E. Lung Sounds 3.2. Available online: http://www.rale.ca/LungSounds.htm (accessed on 1 August 2023).

- Lu, X.; Bahoura, M. An integrated automated system for crackles extraction and classification. Biomed. Signal Process. Control 2008, 3, 244–254. [Google Scholar] [CrossRef]

- Bahoura, M. Pattern recognition methods applied to respiratory sounds classification into normal and wheeze classes. Comput. Biol. Med. 2009, 39, 824–843. [Google Scholar] [CrossRef]

- Tocchetto, M.A.; Bazanella, A.S.; Guimaraes, L.; Fragoso, J.; Parraga, A. An embedded classifier of lung sounds based on the wavelet packet transform and ANN. IFAC Proc. Vol. 2014, 47, 2975–2980. [Google Scholar] [CrossRef]

- Datta, S.; Choudhury, A.D.; Deshpande, P.; Bhattacharya, S.; Pal, A. Automated lung sound analysis for detecting pulmonary abnormalities. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Republic of Korea, 11–15 July 2017; pp. 4594–4598. [Google Scholar]

- Oweis, R.; Abdulhay, E.; Khayal, A.; Awad, A. An alternative respiratory sounds classification system utilizing artificial neural networks. Biomed. J. 2015, 38, 153–161. [Google Scholar] [CrossRef]

- Naves, R.; Barbosa, B.H.; Ferreira, D.D. Classification of lung sounds using higher-order statistics: A divide-and-conquer approach. Comput. Methods Programs Biomed. 2016, 129, 12–20. [Google Scholar] [CrossRef]

- Racineux, J. L’auscultation à L’écoute du Poumon ASTRA; CD-Phonopneumogrammes: Paris, France, 1994. [Google Scholar]

- Coviello, J.S. Auscultation Skills: Breath & Heart Sounds; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2013. [Google Scholar]

- Wilkins, R.; Hodgkin, J.; Lopez, B. Fundamentals of Lung and Heart Sounds, 3/e (Book and CD-ROM); CV Mosby: Maryland Heights, MO, USA, 2004. [Google Scholar]

- Wrigley, D. Heart and Lung Sounds Reference Library; PESI HealthCare: Eau Claire, WI, USA, 2011. [Google Scholar]

- Lehrer, S. Understanding Lung Sounds; Saunders: Philadelphia, PA, USA, 2018. [Google Scholar]

- Fraiwan, M.; Fraiwan, L.; Khassawneh, B.; Ibnian, A. A dataset of lung sounds recorded from the chest wall using an electronic stethoscope. Data Brief 2021, 35, 106913. [Google Scholar] [CrossRef] [PubMed]

- Altan, G.; Kutlu, Y. RespiratoryDatabase@ TR (COPD Severity Analysis). 2020. Available online: https://data.mendeley.com/datasets/p9z4h98s6j/1 (accessed on 1 August 2023).

- Thinklabs Medical LLC. Thinklabs One Lung Sounds Library. Available online: https://www.thinklabs.com/sound-library (accessed on 1 August 2023).

- East Tennessee State University. Pulmonary Breath Sounds. Available online: https://faculty.etsu.edu/arnall/www/public_html/heartlung/breathsounds/contents.html (accessed on 1 August 2023).

- Bahoura, M. Analyse des Signaux Acoustiques Respiratoires: Contribution à la Detection Automatique des Sibilants par Paquets D’ondelettes. Ph.D. Thesis, Université de Rouen, Mont-Saint-Aignan, France, 1999. [Google Scholar]

- Hsiao, C.-H.; Lin, T.-W.; Lin, C.-W.; Hsu, F.-S.; Lin, F.Y.-S.; Chen, C.-W.; Chung, C.-M. Breathing sound segmentation and detection using transfer learning techniques on an attention-based encoder-decoder architecture. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 754–759. [Google Scholar]

- Grinchenko, A.; Makarenkov, V.; Makarenkova, A. Kompjuternaya auskultaciya-novij metod objektivizacii harakterictik zvykov dihaniya [Computer auscultation is a new method of objectifying the lung sounds characteristics]. Klin. Inform. I Telemeditsina 2010, 6, 31–36. [Google Scholar]

- Cunningham, P.; Cord, M.; Delany, S.J. Supervised learning. In Machine Learning Techniques for Multimedia: Case Studies on Organization and Retrieval; Springer: Berlin/Heidelberg, Germany, 2008; pp. 21–49. [Google Scholar]

- Mutasa, S.; Sun, S.; Ha, R. Understanding artificial intelligence based radiology studies: What is overfitting? Clin. Imaging 2020, 65, 96–99. [Google Scholar] [CrossRef]

- Pal, S.K.; Mitra, S. Multilayer perceptron, fuzzy sets, classification. IEEE Trans. Neural Netw. 1992, 3, 683–697. [Google Scholar] [CrossRef]

- Bannick, M.S.; McGaughey, M.; Flaxman, A.D. Ensemble modelling in descriptive epidemiology: Burden of disease estimation. Int. J. Epidemiol. 2020, 49, 2065–2073. [Google Scholar] [CrossRef]

- Dayhoff, J.E.; DeLeo, J.M. Artificial neural networks: Opening the black box. Cancer Interdiscip. Int. J. Am. Cancer Soc. 2001, 91, 1615–1635. [Google Scholar] [CrossRef]

- Acharya, J.; Basu, A. Deep Neural Network for Respiratory Sound Classification in Wearable Devices Enabled by Patient Specific Model Tuning. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 535–544. [Google Scholar] [CrossRef]

- Alqudah, A.M.; Qazan, S.; Obeidat, Y.M. Deep learning models for detecting respiratory pathologies from raw lung auscultation sounds. Soft Comput. 2022, 26, 13405–13429. [Google Scholar] [CrossRef] [PubMed]

- Altan, G.; Kutlu, Y.; Allahverdi, N. Deep Learning on Computerized Analysis of Chronic Obstructive Pulmonary Disease. IEEE J. Biomed. Health. Inform. 2019. [Google Scholar] [CrossRef] [PubMed]

- Bahoura, M. FPGA implementation of an automatic wheezing detection system. Biomed. Signal Process. Control 2018, 46, 76–85. [Google Scholar] [CrossRef]

- Bardou, D.; Zhang, K.; Ahmad, S.M. Lung sounds classification using convolutional neural networks. Artif. Intell. Med. 2018, 88, 58–69. [Google Scholar] [CrossRef] [PubMed]

- Basu, V.; Rana, S. Respiratory diseases recognition through respiratory sound with the help of deep neural network. In Proceedings of the 2020 4th International Conference on Computational Intelligence and Networks (CINE), Kolkata, India, 27–29 February 2020; pp. 1–6. [Google Scholar]

- Brunese, L.; Mercaldo, F.; Reginelli, A.; Santone, A. A Neural Network-Based Method for Respiratory Sound Analysis and Lung Disease Detection. Appl. Sci. 2022, 12, 3877. [Google Scholar] [CrossRef]

- Chen, H.; Yuan, X.; Pei, Z.; Li, M.; Li, J. Triple-Classification of Respiratory Sounds Using Optimized S-Transform and Deep Residual Networks. IEEE Access 2019, 7, 32845–32852. [Google Scholar] [CrossRef]

- Chen, H.; Yuan, X.; Li, J.; Pei, Z.; Zheng, X. Automatic multi-level in-exhale segmentation and enhanced generalized S-transform for wheezing detection. Comput. Methods Programs Biomed. 2019, 178, 163–173. [Google Scholar] [CrossRef] [PubMed]

- Demir, F.; Sengur, A.; Bajaj, V. Convolutional neural networks based efficient approach for classification of lung diseases. Health Inf. Sci. Syst. 2020, 8, 4. [Google Scholar] [CrossRef]

- Demir, F.; Ismael, A.M.; Sengur, A. Classification of Lung Sounds With CNN Model Using Parallel Pooling Structure. IEEE Access 2020, 8, 105376–105383. [Google Scholar] [CrossRef]

- Perna, D. Convolutional neural networks learning from respiratory data. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2109–2113. [Google Scholar]

- Fraiwan, M.; Fraiwan, L.; Alkhodari, M.; Hassanin, O. Recognition of pulmonary diseases from lung sounds using convolutional neural networks and long short-term memory. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 4759–4771. [Google Scholar] [CrossRef]

- Gairola, S.; Tom, F.; Kwatra, N.; Jain, M. RespireNet: A Deep Neural Network for Accurately Detecting Abnormal Lung Sounds in Limited Data Setting. Ann. Int. Conf. IEEE Eng. Med. Biol. Soc. 2021, 2021, 527–530. [Google Scholar] [CrossRef]

- Garcia-Ordas, M.T.; Benitez-Andrades, J.A.; Garcia-Rodriguez, I.; Benavides, C.; Alaiz-Moreton, H. Detecting Respiratory Pathologies Using Convolutional Neural Networks and Variational Autoencoders for Unbalancing Data. Sensors 2020, 20, 1214. [Google Scholar] [CrossRef] [PubMed]

- Hazra, R.; Majhi, S. Detecting respiratory diseases from recorded lung sounds by 2D CNN. In Proceedings of the 2020 5th International Conference on Computing, Communication and Security (ICCCS), Patna, India, 14–16 October 2020; pp. 1–6. [Google Scholar]

- Jung, S.Y.; Liao, C.H.; Wu, Y.S.; Yuan, S.M.; Sun, C.T. Efficiently Classifying Lung Sounds through Depthwise Separable CNN Models with Fused STFT and MFCC Features. Diagnostics 2021, 11, 732. [Google Scholar] [CrossRef]

- Kochetov, K.; Putin, E.; Balashov, M.; Filchenkov, A.; Shalyto, A. Noise Masking Recurrent Neural Network for Respiratory Sound Classification. In Artificial Neural Networks and Machine Learning ICANN 2018; Lecture Notes in Computer Science; Springer: New York, NY, USA, 2018; pp. 208–217. [Google Scholar]

- Li, J.; Wang, C.; Chen, J.; Zhang, H.; Dai, Y.; Wang, L.; Wang, L.; Nandi, A.K. Explainable CNN With Fuzzy Tree Regularization for Respiratory Sound Analysis. IEEE Trans. Fuzzy Syst. 2022, 30, 1516–1528. [Google Scholar] [CrossRef]

- Li, J.; Yuan, J.; Wang, H.; Liu, S.; Guo, Q.; Ma, Y.; Li, Y.; Zhao, L.; Wang, G. LungAttn: Advanced lung sound classification using attention mechanism with dual TQWT and triple STFT spectrogram. Physiol. Meas. 2021, 4, 105006. [Google Scholar] [CrossRef] [PubMed]

- Minami, K.; Lu, H.; Kim, H.; Mabu, S.; Hirano, Y.; Kido, S. Automatic classification of large-scale respiratory sound dataset based on convolutional neural network. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 15–18 October 2019; pp. 804–807. [Google Scholar]

- Monaco, A.; Amoroso, N.; Bellantuono, L.; Pantaleo, E.; Tangaro, S.; Bellotti, R. Multi-Time-Scale Features for Accurate Respiratory Sound Classification. Appl. Sci. 2020, 10, 8606. [Google Scholar] [CrossRef]

- Mukherjee, H.; Sreerama, P.; Dhar, A.; Obaidullah, S.M.; Roy, K.; Mahmud, M.; Santosh, K.C. Automatic Lung Health Screening Using Respiratory Sounds. J. Med. Syst. 2021, 45, 19. [Google Scholar] [CrossRef]

- Ngo, D.; Pham, L.; Nguyen, A.; Phan, B.; Tran, K.; Nguyen, T. Deep Learning Framework Applied For Predicting Anomaly of Respiratory Sounds. In Proceedings of the 2021 International Symposium on Electrical and Electronics Engineering (ISEE), Ho Chi Minh, Vietnam, 15–16 April 2021; pp. 42–47. [Google Scholar]

- Nguyen, T.; Pernkopf, F. Lung sound classification using snapshot ensemble of convolutional neural networks. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 760–763. [Google Scholar]

- Paraschiv, E.-A.; Rotaru, C.-M. Machine learning approaches based on wearable devices for respiratory diseases diagnosis. In Proceedings of the 2020 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 29–30 October 2020; pp. 1–4. [Google Scholar]

- Petmezas, G.; Cheimariotis, G.A.; Stefanopoulos, L.; Rocha, B.; Paiva, R.P.; Katsaggelos, A.K.; Maglaveras, N. Automated Lung Sound Classification Using a Hybrid CNN-LSTM Network and Focal Loss Function. Sensors 2022, 22, 1232. [Google Scholar] [CrossRef] [PubMed]

- Pham, L.; Phan, H.; Palaniappan, R.; Mertins, A.; McLoughlin, I. CNN-MoE Based Framework for Classification of Respiratory Anomalies and Lung Disease Detection. IEEE J. Biomed. Health Inf. 2021, 25, 2938–2947. [Google Scholar] [CrossRef]

- Pham, L.; Phan, H.; Schindler, A.; King, R.; Mertins, A.; McLoughlin, I. Inception-Based Network and Multi-Spectrogram Ensemble Applied To Predict Respiratory Anomalies and Lung Diseases. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2021, 2021, 253–256. [Google Scholar] [CrossRef]

- Pham Thi Viet, H.; Nguyen Thi Ngoc, H.; Tran Anh, V.; Hoang Quang, H. Classification of lung sounds using scalogram representation of sound segments and convolutional neural network. J. Med. Eng. Technol. 2022, 46, 270–279. [Google Scholar] [CrossRef] [PubMed]

- Rocha, B.M.; Pessoa, D.; Marques, A.; Carvalho, P.; Paiva, R.P. Automatic Classification of Adventitious Respiratory Sounds: A (Un)Solved Problem? Sensors 2020, 21, 57. [Google Scholar] [CrossRef] [PubMed]

- Shuvo, S.B.; Ali, S.N.; Swapnil, S.I.; Hasan, T.; Bhuiyan, M.I.H. A Lightweight CNN Model for Detecting Respiratory Diseases From Lung Auscultation Sounds Using EMD-CWT-Based Hybrid Scalogram. IEEE J. Biomed. Health Inf. 2021, 25, 2595–2603. [Google Scholar] [CrossRef]

- Tariq, Z.; Shah, S.K.; Lee, Y. Lung disease classification using deep convolutional neural network. In Proceedings of the 2019 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), San Diego, CA, USA, 18–21 November 2019; pp. 732–735. [Google Scholar]

- Yang, Z.; Liu, S.; Song, M.; Parada-Cabaleiro, E.; Schuller, B.W. Adventitious Respiratory Classification Using Attentive Residual Neural Networks. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; pp. 2912–2916. [Google Scholar]

- Ma, Y.; Xu, X.; Yu, Q.; Zhang, Y.; Li, Y.; Zhao, J.; Wang, G. Lungbrn: A smart digital stethoscope for detecting respiratory disease using bi-resnet deep learning algorithm. In Proceedings of the 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), Nara, Japan, 17–19 October 2019; pp. 1–4. [Google Scholar]

- Stitson, M.; Weston, J.; Gammerman, A.; Vovk, V.; Vapnik, V. Theory of support vector machines. Univ. Lond. 1996, 117, 188–191. [Google Scholar]

- Boujelben, O.; Bahoura, M. Efficient FPGA-based architecture of an automatic wheeze detector using a combination of MFCC and SVM algorithms. J. Syst. Archit. 2018, 88, 54–64. [Google Scholar] [CrossRef]

- Sen, I.; Saraclar, M.; Kahya, Y. Computerized Diagnosis of Respiratory Disorders. Methods Inf. Med. 2014, 53, 291–295. [Google Scholar] [PubMed]

- Serbes, G.; Ulukaya, S.; Kahya, Y.P. An Automated Lung Sound Preprocessing and Classification System Based on Spectral Analysis Methods. In Precision Medicine Powered by pHealth and Connected Health; Springer: New York, NY, USA, 2018; pp. 45–49. [Google Scholar] [CrossRef]

- Stasiakiewicz, P.; Dobrowolski, A.P.; Targowski, T.; Gałązka-Świderek, N.; Sadura-Sieklucka, T.; Majka, K.; Skoczylas, A.; Lejkowski, W.; Olszewski, R. Automatic classification of normal and sick patients with crackles using wavelet packet decomposition and support vector machine. Biomed. Signal Process. Control 2021, 67, 102521. [Google Scholar] [CrossRef]

- Romero, E.; Lepore, N.; Sosa, G.D.; Cruz-Roa, A.; González, F.A. Automatic detection of wheezes by evaluation of multiple acoustic feature extraction methods and C-weighted SVM. In Proceedings of the 10th International Symposium on Medical Information Processing and Analysis, Cartagena, Colombia, 14–16 October 2014. [Google Scholar]

- Tasar, B.; Yaman, O.; Tuncer, T. Accurate respiratory sound classification model based on piccolo pattern. Appl. Acoust. 2022, 188, 108589. [Google Scholar] [CrossRef]

- Vidhya, B.; Nikhil Madhav, M.; Suresh Kumar, M.; Kalanandini, S. AI Based Diagnosis of Pneumonia. Wirel. Pers. Commun. 2022, 126, 3677–3692. [Google Scholar] [CrossRef]

- Zhang, Z. Introduction to machine learning: K-nearest neighbors. Ann. Transl. Med. 2016, 4, 218. [Google Scholar] [CrossRef]

- Kingsford, C.; Salzberg, S.L. What are decision trees? Nat. Biotechnol. 2008, 26, 1011–1013. [Google Scholar] [CrossRef] [PubMed]

- Chambres, G.; Hanna, P.; Desainte-Catherine, M. Automatic detection of patient with respiratory diseases using lung sound analysis. In Proceedings of the 2018 International Conference on Content-Based Multimedia Indexing (CBMI), La Rochelle, France, 4–6 September 2018; pp. 1–6. [Google Scholar]

- Kok, X.H.; Imtiaz, S.A.; Rodriguez-Villegas, E. A novel method for automatic identification of respiratory disease from acoustic recordings. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 2589–2592. [Google Scholar]

- Oletic, D.; Arsenali, B.; Bilas, V. Low-power wearable respiratory sound sensing. Sensors 2014, 14, 6535–6566. [Google Scholar] [CrossRef] [PubMed]

- Tharwat, A.; Gaber, T.; Ibrahim, A.; Hassanien, A.E. Linear discriminant analysis: A detailed tutorial. AI Commun. 2017, 30, 169–190. [Google Scholar] [CrossRef]

- Naqvi, S.Z.H.; Choudhry, M.A. An Automated System for Classification of Chronic Obstructive Pulmonary Disease and Pneumonia Patients Using Lung Sound Analysis. Sensors 2020, 20, 6512. [Google Scholar] [CrossRef] [PubMed]

- Porieva, H.; Ivanko, K.; Semkiv, C.; Vaityshyn, V. Investigation of lung sounds features for detection of bronchitis and COPD using machine learning methods. Radiotekhnika Radioaparatobuduvannia 2021, 84, 78–87. [Google Scholar] [CrossRef]

- Matsuki, K.; Kuperman, V.; Van Dyke, J.A. The Random Forests statistical technique: An examination of its value for the study of reading. Sci. Stud. Read. 2016, 20, 20–33. [Google Scholar] [CrossRef] [PubMed]

- Jaber, M.M.; Abd, S.K.; Shakeel, P.M.; Burhanuddin, M.A.; Mohammed, M.A.; Yussof, S. A telemedicine tool framework for lung sounds classification using ensemble classifier algorithms. Measurement 2020, 162, 107883. [Google Scholar] [CrossRef]

- Aristophanous, M.; Penney, B.C.; Martel, M.K.; Pelizzari, C.A. A Gaussian mixture model for definition of lung tumor volumes in positron emission tomography. Med. Phys. 2007, 34, 4223–4235. [Google Scholar] [CrossRef]

- Ntalampiras, S. Collaborative framework for automatic classification of respiratory sounds. IET Signal Process. 2020, 14, 223–228. [Google Scholar] [CrossRef]

- Brown, J.C.; Smaragdis, P. Hidden Markov and Gaussian mixture models for automatic call classification. J. Acoust. Soc. Am. 2009, 125, EL221–EL224. [Google Scholar] [CrossRef]

- Jakovljević, N.; Lončar-Turukalo, T. Hidden Markov Model Based Respiratory Sound Classification. In Precision Medicine Powered by pHealth and Connected Health; Springer: New York, NY, USA, 2018; pp. 39–43. [Google Scholar]

- Ntalampiras, S.; Potamitis, I. Automatic acoustic identification of respiratory diseases. Evol. Syst. 2020, 12, 69–77. [Google Scholar] [CrossRef]

- Oletic, D.; Bilas, V. Asthmatic Wheeze Detection From Compressively Sensed Respiratory Sound Spectra. IEEE J. Biomed. Health Inf. 2018, 22, 1406–1414. [Google Scholar] [CrossRef]

- Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobotics 2013, 7, 21. [Google Scholar] [CrossRef]

- Tripathy, R.K.; Dash, S.; Rath, A.; Panda, G.; Pachori, R.B. Automated Detection of Pulmonary Diseases From Lung Sound Signals Using Fixed-Boundary-Based Empirical Wavelet Transform. IEEE Sens. Lett. 2022, 6, 1–4. [Google Scholar] [CrossRef]

- Peng, C.-Y.J.; Lee, K.L.; Ingersoll, G.M. An introduction to logistic regression analysis and reporting. J. Educ. Res. 2002, 96, 3–14. [Google Scholar] [CrossRef]

- Pramono, R.X.A.; Bowyer, S.; Rodriguez-Villegas, E. Automatic adventitious respiratory sound analysis: A systematic review. PLoS ONE 2017, 12, e0177926. [Google Scholar] [CrossRef]

- Reddy, E.M.K.; Gurrala, A.; Hasitha, V.B.; Kumar, K.V.R. Introduction to Naive Bayes and a Review on Its Subtypes with Applications. In Bayesian Reasoning and Gaussian Processes for Machine Learning Applications; CRC Press: Boca Raton, FL, USA, 2022; pp. 1–14. [Google Scholar] [CrossRef]

- Koning, C.; Lock, A. A systematic review and utilization study of digital stethoscopes for cardiopulmonary assessments. J. Med. Res. Innov. 2021, 5, 4–14. [Google Scholar] [CrossRef]

- Arts, L.; Lim, E.H.T.; van de Ven, P.M.; Heunks, L.; Tuinman, P.R. The diagnostic accuracy of lung auscultation in adult patients with acute pulmonary pathologies: A meta-analysis. Sci. Rep. 2020, 10, 7347. [Google Scholar] [CrossRef] [PubMed]

- Güler, İ.; Polat, H.; Ergün, U. Combining neural network and genetic algorithm for prediction of lung sounds. J. Med. Syst. 2005, 29, 217–231. [Google Scholar] [CrossRef] [PubMed]

- Xia, T.; Han, J.; Mascolo, C. Exploring machine learning for audio-based respiratory condition screening: A concise review of databases, methods, and open issues. Exp. Biol. Med. 2022, 247, 2053–2061. [Google Scholar] [CrossRef] [PubMed]

- Heitmann, J.; Glangetas, A.; Doenz, J.; Dervaux, J.; Shama, D.M.; Garcia, D.H.; Benissa, M.R.; Cantais, A.; Perez, A.; Müller, D. DeepBreath—Automated detection of respiratory pathology from lung auscultation in 572 pediatric outpatients across 5 countries. NPJ Digit. Med. 2023, 6, 104. [Google Scholar] [CrossRef]

- Tran-Anh, D.; Vu, N.H.; Nguyen-Trong, K.; Pham, C. Multi-task learning neural networks for breath sound detection and classification in pervasive healthcare. Pervasive Mob. Comput. 2022, 86, 101685. [Google Scholar] [CrossRef] [PubMed]

- Zhai, Q.; Han, X.; Han, Y.; Yi, J.; Wang, S.; Liu, T. A contactless on-bed radar system for human respiration monitoring. IEEE Trans. Instrum. Meas. 2022, 71, 1–10.

- Johnson, K.; Wei, W.; Weeraratne, D.; Frisse, M.; Misulis, K.; Rhee, K.; Zhao, J.; Snowdon, J. Precision medicine, AI, and the future of personalized health care. Clin. Transl. Sci. 2021, 14, 86–93.

- Lal, A.; Pinevich, Y.; Gajic, O.; Herasevich, V.; Pickering, B. Artificial intelligence and computer simulation models in critical illness. World J. Crit. Care Med. 2020, 9, 13.

- Lal, A.; Li, G.; Cubro, E.; Chalmers, S.; Li, H.; Herasevich, V.; Dong, Y.; Pickering, B.W.; Kilickaya, O.; Gajic, O. Development and verification of a digital twin patient model to predict specific treatment response during the first 24 hours of sepsis. Crit. Care Explor. 2020, 2, e0249.

- Davenport, T.; Kalakota, R. The potential for artificial intelligence in healthcare. Future Healthc. J. 2019, 6, 94–98.

- Richens, J.G.; Lee, C.M.; Johri, S. Improving the accuracy of medical diagnosis with causal machine learning. Nat. Commun. 2020, 11, 3923.

- Lal, A.; Dang, J.; Nabzdyk, C.; Gajic, O.; Herasevich, V. Regulatory oversight and ethical concerns surrounding software as medical device (SaMD) and digital twin technology in healthcare. Ann. Transl. Med. 2022, 10, 950.

| Parameter | Inclusion Criteria | Exclusion Criteria |

|---|---|---|

| Population | | |

| Intervention | | |

| Comparator | | |

| Outcomes | | |

| Study Designs | | |

| Database or Author Name | Country | Number of Participants (Total (M/F); HC) | Abnormal Lung Sounds Labeled | Pathologies Labeled | Availability 1 | Ref. |

|---|---|---|---|---|---|---|

| R.A.L.E. Lung Sounds 3.2 | Canada | 70 (-); 17 | Crackles, Wheezes, Squawk, Stridor, Rhonchi | Asthma, COPD, Bronchiolitis, Laryngeal web, Bronchogenic carcinoma, Lung fibrosis, Cystic fibrosis | Available online | [33] |

| ICBHI 2017 Challenge Database | Greece, Portugal | 126 (46/79); 26 | Crackles, Wheezes, Crackles + Wheezes | Asthma, Bronchiectasis, Bronchiolitis, COPD, Pneumonia, LRTI, URTI | Available online | [27] |

| KAUH database | Jordan | 120 (43/69); 35 | Crackles, Wheezes, Crepitations, Bronchial sounds, Crackles + Wheezes, Crackles + Bronchial | Asthma, Pneumonia, COPD, Bronchitis, Heart failure, Lung fibrosis, Pleural effusion | Available online | [45] |

| RespiratoryDatabase@TR | Turkey | 77 (64/13); 30 | Crackles, Wheezes | Asthma, COPD | Available online | [46] |

| Thinklabs Lung Sounds Library | United States | - | Crackles, Wheezes, Pleural rub, Rhonchi, Stridor | Asthma, Bronchiolitis, COPD, Laryngomalacia, Pulmonary edema | Available online | [47] |

| East Tennessee State University Pulmonary Breath Sounds | United States | - | Crackles, Pleural rub, Stridor, Wheezing, Rhonchus | - | Available online | [48] |

| ASTRA database | France | - | - | - | CD-ROM | [40] |

| Auscultation Skills: Breath & Heart Sounds | United States | - | - | - | CD-ROM | [41] |

| Fundamentals of Lung and Heart Sounds | United States | - | - | - | CD-ROM | [42] |

| Heart and Lung Sounds Reference Library, Wrigley | United States | - | Bronchial, Bronchovesicular, Rhonchi, Pneumonia, Wheezes, Bronchophony, Crackles, Stridor | - | CD-ROM | [43] |

| Understanding Lung Sounds, Lehrer | United States | - | Crackles, Wheezes | - | CD-ROM | [44] |

| Bahoura 1999 | France | - | - | - | Undefined | [49] |

| Hsiao 2020 | Taiwan | 22 (12/10); - | Crackles, Wheezes | - | Undefined | [50] |

| Bogazici University Lung Acoustics Laboratory | Turkey | - | - | Bronchiectasis, Interstitial lung disease | Undefined | - |

| CORA database | Ukraine | - | - | Bronchitis, COPD | Undefined | [51] |

| Stethographics Lung Sound Samples 2 | United States | - | - | - | Undefined | - |

| 3M Littmann Lung Sounds Library | United States | - | - | - | Undefined | - |

| Mediscuss Respiratory Sounds 2 | - | - | - | - | Undefined | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Garcia-Mendez, J.P.; Lal, A.; Herasevich, S.; Tekin, A.; Pinevich, Y.; Lipatov, K.; Wang, H.-Y.; Qamar, S.; Ayala, I.N.; Khapov, I.; et al. Machine Learning for Automated Classification of Abnormal Lung Sounds Obtained from Public Databases: A Systematic Review. Bioengineering 2023, 10, 1155. https://doi.org/10.3390/bioengineering10101155
