Comparison between Single and Multi-Objective Evolutionary Algorithms to Solve the Knapsack Problem and the Travelling Salesman Problem

Abstract

:1. Introduction

2. Problems: Formulation and Instances

2.1. The Bi-Objective Knapsack Problem (BOKNP)

- 1.

- Data set 1A: consists of five data files (instances) for the bi-objective 0/1 unidimensional knapsack problem. The values for the profits and weights have been uniformly generated. The number of items in the instances range from 50 to 500. The tightness ratio (Equation (2)) is in the range .

- 2.

- Data set 1B: consists of 40 data files (instances) corresponding to the 10 bi-objective 0/1 unidimensional knapsack problems. Every instance has a tightness ratio . For each problem, four variants (class A, B, C and D) are given:

- 1B/A: the weights and profits are uniformly distributed within the range .

- 1B/B: these instances are created starting from data set 1B/A by defining the objectives in reverse order.

- 1B/C: the profits are generated with plateaus of values of length .

- 1B/D: these instances are created starting from data set 1B/C by defining the objectives in reverse order.

- 3.

- Data set 2: consists of 50 data files (instances) that also correspond to the bi-objective 0/1 unidimensional knapsack problem. For each data set the value for W is computed as the nearest integer value of (where P is a percentage of ). All these instances have a tightness ratio . Two types of correlated instances (WEAK and STRONG), as well as uncorrelated instances (UNCOR) were generated as follows [21]:

- UNCOR: 20 uncorrelated instances of 50 items. The profit vectors , and the weight vector are uniformly generated at random in the range for ten items, while for the remaining ones the range is considered.

- WEAK: 15 weakly correlated instances ranging in size from 50 to 1000 items, where is correlated with , i.e., , and . The weight values are uniformly generated at random in the range .

- STRONG: 15 strongly correlated instances with the number of items ranging between 50 and 1000. The weights are uniformly generated at random and are correlated with , i.e., , and . The value of is uniformly generated at random in the range .

| Set | Source | Name | Parameters |

|---|---|---|---|

| Set 1A | Gandibleux and Freville [23] | 2KNP50-r | |

| 2KNP100-50 | |||

| 2KNP500-41 | |||

| Set 1B/A | Visée et al. [24] | 2KNPn-1A | |

| Set 1B/B,C,D | Degoutin and Gandibleux [25] | 2KNPn-1B | |

| 2KNPn-1C | |||

| 2KNPn-1D | |||

| Set 2 (UNCORR) | Captivo et al. [21] | F5050Ws | |

| K5050Ws | |||

| Set 2 (WEAK) | Captivo et al. [21] | W4C50W01 | |

| W4100W1 | |||

| 4WnW1 | |||

| Set 2 (STRONG) | Captivo et al. [21] | S1C50W01 | |

| S1nW1 | |||

| 1SnW1 |

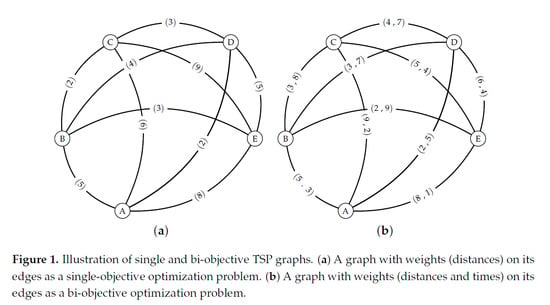

2.2. The Bi-Objective Travelling Salesman Problem (BOTSP)

- The TSPLIB Euclidean Instances [31] (files with prefix kro, from the authors Krolak/Felts/Nelson) consist of 13 instances with two objectives which are generated on the basis of the single-objective TSP instances from TSPLIB [32] (Library of Traveling Salesman Problems). The TSPLIB is a library of sample instances for the TSP (and related problems) from various sources and with different features.

- The DIMACS Clustered Instances [33] (files with prefix clus) are three instances that have been created using the random instance generator available from the 8th DIMACS implementation challenge site (the generator is available at http://dimacs.rutgers.edu/archive/Challenges/TSP/index.html).

- The DIMACS Euclidean Instances [30] (files with prefix eucl) are a set of three instances which were also generated using the DIMACS code.

| Name | Origin | Type | Source | Num. of Variables | Combinations |

|---|---|---|---|---|---|

| clusABn | DIMACS | Clustered | Lust et al. [33] | clusAn and clusBn | |

| euclABn | DIMACS | Euclidean | Paquete et al. [30] | euclAn and euclBn | |

| kroABn | TSPLIB | Euclidean | Paquete et al. [30] | kroAn and kroBn | |

| kroACn | kroAn and kroCn | ||||

| kroADn | kroAn and kroDn | ||||

| kroBCn | TSPLIB | Euclidean | Paquete et al. [30] | kroBn and kroCn | |

| kroBDn | kroBn and kroDn | ||||

| kroCDn | kroCn and kroDn |

3. Optimization Approaches

3.1. Multi-Objective Evolutionary Algorithms

- Pareto-dominance-based algorithms use the Pareto dominance relationship, where the partner of a non-dominated individual is chosen from among the individuals of the population that it dominates. Some widely known algorithms from this type are: Non-Dominated Sorting Genetic Algorithm II (NSGA-II) [35], Strength Pareto Evolutionary Algorithm 2 (SPEA2) [36] and Pareto Envelope-based Selection Algorithm II (PESA-II) [37].

- Decomposition-based algorithms transform a MOP into a set of SOPs using scalarizing functions. The resulting single-objective problems are then solved simultaneously. Some examples of algorithms that fall under this approach are Multi-Objective Genetic Local Search algorithm (MOGLS) [38], Cellular Multi-Objective Genetic Algorithm (C-MOGA) [39] and Multi-Objective Evolutionary Algorithm based on Decomposition (MOEA/D) [40], as well as their many other variants.

- Indicator-based algorithms use an indicator function to assess the quality of a set of solutions, combining the degree of convergence and/or the diversity of the objective function space with a metric. These algorithms attempt to find the best subset of Pareto non-dominated solutions based on the performance indicator. Their many variants include: Indicator Based-Selection Evolutionary Algorithm (IBEA) [41], S-Metric Selection Evolutionary Multi-Objective Optimization Algorithm (SMS-EMOA) [42], Fast Hypervolume Multi-Objective Evolutionary Algorithm (FV-MOEA) [43] and Many-Objective Metaheuristic Based on R2 Indicator (MOMBI-II) [44].

- NSGA-II [35] is a generational genetic algorithm and is one of the most popular multi-objective optimization algorithms, having been widely and successfully applied in many real-world applications. It is one of the first multi-objective algorithms to introduce elitism, i.e., the elites of a population are given the opportunity to be carried to the next generation. It uses a fast non-dominated sorting procedure based on Pareto front ranking in an effort to promote convergence, meaning it emphasizes non-dominated solutions. In addition to the reasons given above, we have selected this algorithm because it uses an explicit diversity preservation mechanism (crowding distance).

- MOEA/D [40] is probably the most representative decomposition-based multi-objective algorithm. It processes a multi-objective problem by decomposing it into a set of single-objective subproblems and then performing a heuristic search in order to optimize—simultaneously and cooperatively—said subproblems. Generally, a MOEA needs to maintain diversity in its population to produce a set of representative solutions. MOEAs, such as NSGA-II, use crowding distances to maintain diversity. In MOEA/D, a MOP is decomposed into a number of scalar optimization subproblems. Different solutions in the current population are associated with different subproblems. The diversity among these subproblems will naturally lead to diversity in the population [40], which could reinforce the rationale for selecting this algorithm in the context of this study.

- SMS-EMOA [42] is an indicator-based algorithm that implements a special selection operator that combines the hypervolume metric with the concept of Pareto dominance. Since the hypervolume is a measure frequently applied for comparing the results of MOEAs, the underlying idea is to explicitly manage and maximize the dominated hypervolume within the optimization process. Hypervolume, which is also used for comparison purposes, measures convergence, as well as diversity. The SMS-EMOA keeps a population of non-dominated and dominated individuals at a constant size. Keeping only non-dominated individuals might lead to small or even single-membered populations, and thus to a crucial loss of diversity. To avoid losing diversity, defining a lower bound for the population size was suggested in [42]. These are the reasons that make SMS-EMOA a good candidate for this study, especially to test the effectiveness of the diversity of this algorithm to improve results in MOPs.

3.2. Single-Objective Evolutionary Algorithms

- Generational Genetic Algorithm (gGA) [46]: two parents are selected from the population in order to be crossed, yielding two offspring, which are later mutated and evaluated. These newly generated individuals are placed in an auxiliary population that will replace the current population when it is completely full.

- Steady-State Genetic Algorithm (ssGA) [47]: two parents are selected and crossed, yielding two offspring that are later crossed. Then one of the resulting offspring is mutated. The mutated individual is evaluated and then inserted into the population, usually replacing the worst individual in the population (if the new one is better). Hence, the parents and offspring can co-exist in the population for the next iteration.

- Elitist Evolution Strategy ( + ) (eES) [48]: the elitist feature allows for the best solution to be always kept. The algorithm starts with a population of size . Each generation of mutated individuals is created from the current population. After the generation of the mutated individuals, there are a total of () individuals, including the parents and the new individuals generated from them. From these () individuals, the best ones are kept—as parents—for the next generation.

- Non-Elitist Evolution Strategy (, ) (neES) [48]. in this case the best mutated individuals from among the new generated are selected as parents for the next generation, i.e., none of the parents survive the next generation, meaning must hold.

3.3. Comparison of Single and Multi-Objective Approaches

4. Experimental Results

4.1. Parameter Setting

- Common operator parameters: mutation and crossover probabilities.

- Algorithm parameters: population sizes and other algorithm-specific parameters.

- Other parameters: selection, crossover, and mutation operators. These parameters were set for each optimization problem, using the same operators for all the single and multi-objective approaches.

4.2. Performance

4.3. Optimization Behavior

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Eiben, A.E.; Smith, J.E. Introduction to Evolutionary Computing, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2015. [Google Scholar]

- Ma, H.; Shen, S.; Yu, M.; Yang, Z.; Fei, M.; Zhou, H. Multi-population techniques in nature inspired optimization algorithms: A comprehensive survey. Swarm Evol. Comput. 2019, 44, 365–387. [Google Scholar] [CrossRef]

- Sloss, A.N.; Gustafson, S. 2019 Evolutionary Algorithms Review. In Genetic Programming Theory and Practice XVII; Springer: Berlin/Heidelberg, Germany, 2020; pp. 307–344. [Google Scholar]

- Segura, C.; Coello, C.A.C.; Miranda, G.; León, C. Using multi-objective evolutionary algorithms for single-objective constrained and unconstrained optimization. Ann. Oper. Res. 2016, 240, 217–250. [Google Scholar] [CrossRef]

- Coello, C.A.C. Evolutionary Algorithms for Solving Multi-Objective Problems, 2nd ed.; Genetic Algorithms and Evolutionary Computation; Springer: New York, NY, USA, 2007. [Google Scholar]

- Deb, K.; Goldberg, D.E. An Investigation of Niche and Species Formation in Genetic Function Optimization. In Proceedings of the 3rd International Conference on Genetic Algorithms, Fairfax, VA, USA, 4–7 June 1989; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1989; pp. 42–50. [Google Scholar]

- Pulido, G.T.; Coello, C.A.C. Using Clustering Techniques to Improve the Performance of a Multi-objective Particle Swarm Optimizer. In Proceedings of the Genetic and Evolutionary Computation—GECCO 2004, Seattle, WA, USA, 26–30 June 2004; Deb, K., Ed.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 225–237. [Google Scholar]

- Wang, Y.N.; Wu, L.H.; Yuan, X.F. Multi-Objective Self-Adaptive Differential Evolution with Elitist Archive and Crowding Entropy-Based Diversity Measure. Soft Comput. 2010, 14, 193–209. [Google Scholar] [CrossRef]

- Watanabe, S.; Sakakibara, K. Multi-objective approaches in a single-objective optimization environment. In Proceedings of the 2005 IEEE Congress on Evolutionary Computation, Edinburgh, UK, 2–5 September 2005; Volume 2, pp. 1714–1721. [Google Scholar]

- De Armas, J.; León, C.; Miranda, G.; Segura, C. Optimisation of a multi-objective two-dimensional strip packing problem based on evolutionary algorithms. Int. J. Prod. Res. 2010, 48, 2011–2028. [Google Scholar] [CrossRef]

- Segura, C.; Coello, C.A.C.; Segredo, E.; Miranda, G.; León, C. Improving the diversity preservation of multi-objective approaches used for single-objective optimization. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 3198–3205. [Google Scholar]

- Knowles, J.D.; Watson, R.A.; Corne, D.W. Reducing Local Optima in Single-Objective Problems by Multi-objectivization. In Evolutionary Multi-Criterion Optimization; Zitzler, E., Thiele, L., Deb, K., Coello, C.A.C., Corne, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 269–283. [Google Scholar]

- Mezura-Montes, E.; Coello, C.A.C. Constrained Optimization via Multiobjective Evolutionary Algorithms. In Multiobjective Problem Solving from Nature: From Concepts to Applications; Springer: Berlin/Heidelberg, Germany, 2008; pp. 53–75. [Google Scholar]

- Bui, L.; Abbass, H.; Branke, J. Multiobjective optimization for dynamic environments. In Proceedings of the 2005 IEEE Congress on Evolutionary Computation, Edinburgh, UK, 2–5 September 2005; Volume 3, pp. 2349–2356. [Google Scholar]

- Jensen, M.T. Helper-Objectives: Using Multi-Objective Evolutionary Algorithms for Single-Objective Optimisation. J. Math. Model. Algorithms 2004, 3, 323–347. [Google Scholar] [CrossRef]

- Martello, S.; Toth, P. Knapsack Problems: Algorithms and Computer Implementations; John Wiley & Sons: Hoboken, NJ, USA, 1990. [Google Scholar]

- Shmoys, D.; Lenstra, J.; Kan, A.; Lawler, E. The Traveling Salesman Problem; A Wiley-Interscience Publication; John Wiley & Sons: Hoboken, NJ, USA, 1985. [Google Scholar]

- Bengio, Y.; Lodi, A.; Prouvost, A. Machine learning for combinatorial optimization: A methodological tour d’horizon. Eur. J. Oper. Res. 2020. [Google Scholar] [CrossRef]

- Lombardi, M.; Milano, M. Boosting Combinatorial Problem Modeling with Machine Learning. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, Stockholm, Sweden, 13–19 July 2018; pp. 5472–5478. [Google Scholar]

- Vamvakas, P.; Tsiropoulou, E.E.; Papavassiliou, S. Dynamic Provider Selection & Power Resource Management in Competitive Wireless Communication Markets. Mob. Netw. Appl. 2018, 23, 86–99. [Google Scholar]

- Captivo, M.E.; Clìmaco, J.A.; Figueira, J.; Martins, E.; Santos, J.L. Solving Bicriteria 0-1 Knapsack Problems Using a Labeling Algorithm. Comput. Oper. Res. 2003, 30, 1865–1886. [Google Scholar] [CrossRef]

- Gandibleux, X. MOCOlib: Multi-Objective Combinatorial Optimization Library. Available online: http://xgandibleux.free.fr/MOCOlib/MOKP.html (accessed on 11 November 2020).

- Gandibleux, X.; Freville, A. Tabu Search Based Procedure for Solving the 0-1 MultiObjective Knapsack Problem: The Two Objectives Case. J. Heuristics 2000, 6, 361–383. [Google Scholar] [CrossRef]

- Visée, M.; Teghem, J.; Pirlot, M.; Ulungu, E. Two-phases Method and Branch and Bound Procedures to Solve the Bi–objective Knapsack Problem. J. Glob. Optim. 1998, 12, 139–155. [Google Scholar] [CrossRef]

- Ehrgott, M.; Gandibleux, X. A survey and annotated bibliography of multiobjective combinatorial optimization. OR-Spektrum 2000, 22, 425–460. [Google Scholar] [CrossRef]

- Robinson, J. On the Hamiltonian Game (A Traveling Salesman Problem); Technical Report; Rand Project Air Force: Arlington, VA, USA, 1949. [Google Scholar]

- Eiselt, H.A.; Sandblom, C.L. Traveling Salesman Problems and Extensions. In Integer Programming and Network Models; Springer: Berlin/Heidelberg, Germany, 2000; pp. 315–341. [Google Scholar]

- Florios, K.; Mavrotas, G. Generation of the exact Pareto set in Multi-Objective Traveling Salesman and Set Covering Problems. Appl. Math. Comput. 2014, 237, 1–19. [Google Scholar] [CrossRef]

- Lust, T. Speed-up techniques for solving large-scale bTSP with the two-phase pareto local search. In Proceedings of the 10th Annual Conference on Genetic and Evolutionary Computation, Atlanta, GA, USA, 12–16 July 2008; pp. 761–762. [Google Scholar]

- Paquete, L.; Stutzle, T. Design and analysis of stochastic local search for the multiobjective traveling salesman problem. Comput. Oper. Res. 2009, 36, 2619–2631. [Google Scholar] [CrossRef] [Green Version]

- Reinelt, G. TSPLIB—A Traveling Salesman Problem Library. ORSA J. Comput. 1991, 3, 376–384. [Google Scholar] [CrossRef]

- Reinelt, G. TSPLIB: Library of Traveling Salesman Problems. Available online: http://comopt.ifi.uni-heidelberg.de/software/TSPLIB95/ (accessed on 11 November 2020).

- Lust, T.; Teghem, J. Two-phase Pareto local search for the biobjective traveling salesman problem. J. Heuristics 2010, 16, 475–510. [Google Scholar] [CrossRef]

- Cui, Z.; Gao, X.Z. Special issue on evolutionary multi-objective optimization (EMO): Theory and applications. Int. J. Mach. Learn. Cybern. 2019, 10, 1927–1929. [Google Scholar] [CrossRef] [Green Version]

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197. [Google Scholar] [CrossRef] [Green Version]

- Zitzler, E.; Laumanns, M.; Thiele, L. SPEA2: Improving the Strength Pareto Evolutionary Algorithm. In Evolutionary Methods for Design Optimization and Control with Applications to Industrial Problems; Springer: Berlin, Germany, 2001; pp. 95–100. [Google Scholar]

- Corne, D.W.; Jerram, N.R.; Knowles, J.D.; Oates, M.J.; J, M. PESA-II: Region-based Selection in Evolutionary Multiobjective Optimization. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO’2001), San Francisco, CA, USA, 7–11 July 2001; pp. 283–290. [Google Scholar]

- Ishibuchi, H.; Murata, T. Multi-objective genetic local search algorithm (MOGLS). In Proceedings of the IEEE International Conference on Evolutionary Computation, Nagoya, Japan, 20–22 May 1996; pp. 119–124. [Google Scholar]

- Murata, T.; Ishibuchi, H.; Gen, M. Cellular Genetic Local Search for Multi-Objective Optimization. In Proceedings of the Genetic and Evolutionary Computation Conference, San Francisco, CA, USA, 7–11 July 2000; pp. 307–314. [Google Scholar]

- Zhang, Q.; Li, H. MOEA/D: A Multiobjective Evolutionary Algorithm Based on Decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731. [Google Scholar] [CrossRef]

- Zitzler, E.; Künzli, S. Indicator-Based Selection in Multiobjective Search. In Proceedings of the Conference on Parallel Problem Solving from Nature (PPSN VIII), Birmingham, UK, 18–22 September 2004; pp. 832–842. [Google Scholar]

- Beume, N.; Naujoks, B.; Emmerich, M. SMS-EMOA: Multiobjective selection based on dominated hypervolume. Eur. J. Oper. Res. 2007, 181, 1653–1669. [Google Scholar] [CrossRef]

- Jiang, S.; Zhang, J.; Ong, Y.; Zhang, A.N.; Tan, P.S. A Simple and Fast Hypervolume Indicator-Based Multiobjective Evolutionary Algorithm. IEEE Trans. Cybern. 2015, 45, 2202–2213. [Google Scholar] [CrossRef]

- Hernandez Gomez, R.; Coello, C.A.C. MOMBI: A new metaheuristic for many-objective optimization based on the R2 indicator. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 2488–2495. [Google Scholar]

- Wolpert, D.; Macready, W. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef] [Green Version]

- Cobb, H.G.; Grefenstette, J.J. GA for Tracking Changing Environment. In Proceedings of the 5th International Conference on GA, San Francisco, CA, USA, 8–13 August 1993. [Google Scholar]

- Whitley, D. GENITOR: A different Genetic Algorithm. In Proceedings of the Rocky Mountain Conference on Artificial Intelligence, Boulder, CO, USA, 29 March–1 April 1988. [Google Scholar]

- Rechenberg, I. Optimierung Technischer Systeme nach Prinzipien der Biologischen Evolution. Ph.D. Thesis, Technische Universität Berlin, Berlin, Germany, 1973. [Google Scholar]

- Oliveto, P.S.; He, J.; Yao, X. Time complexity of evolutionary algorithms for combinatorial optimization: A decade of results. Int. J. Autom. Comput. 2007, 4, 281–293. [Google Scholar] [CrossRef] [Green Version]

- Droste, S.; Jansen, T.; Wegener, I. On the Analysis of the (1 + 1) Evolutionary Algorithm. Theor. Comput. Sci. 2002, 276, 51–81. [Google Scholar] [CrossRef] [Green Version]

- Curry, D.M.; Dagli, C.H. Computational Complexity Measures for Many-objective Optimization Problems. Procedia Comput. Sci. 2014, 36, 185–191. [Google Scholar] [CrossRef] [Green Version]

- Tian, Y.; Wang, H.; Zhang, X.; Jin, Y. Effectiveness and efficiency of non-dominated sorting for evolutionary multi- and many-objective optimization. Complex Intell. Syst. 2017, 3, 247–263. [Google Scholar] [CrossRef]

- Saxena, D.K.; Duro, J.A.; Tiwari, A.; Deb, K.; Zhang, Q. Objective Reduction in Many-Objective Optimization: Linear and Nonlinear Algorithms. IEEE Trans. Evol. Comput. 2013, 17, 77–99. [Google Scholar] [CrossRef]

- Durillo, J.J.; Nebro, A.J. jMetal: A Java framework for multi-objective optimization. Adv. Eng. Softw. 2011, 42, 760–771. [Google Scholar] [CrossRef]

- López-Ibáñez, M.; Dubois-Lacoste, J.; Pérez Cáceres, L.; Birattari, M.; Stützle, T. The irace package: Iterated racing for automatic algorithm configuration. Oper. Res. Perspect. 2016, 3, 43–58. [Google Scholar] [CrossRef]

- Segura, C.; Coello, C.; Segredo, E.; Aguirre, A.H. A Novel Diversity-Based Replacement Strategy for Evolutionary Algorithms. IEEE Trans. Cybern. 2016, 46, 3233–3246. [Google Scholar] [CrossRef]

| Input Parameter | Possible Values | NSGA | MOEA/D | SMS-EMOA | gGA | eES |

|---|---|---|---|---|---|---|

| Crossover probability | [0.0, 1.0] | √ | √ | √ | √ | |

| Mutation probability | [0.0, 1.0] | √ | √ | √ | √ | √ |

| Population size | {10, 20, 50, 100, 200, 300} | √ | √ | √ | √ | () |

| Offspring population size | {1, 2, 5, 10, 20, 50, 100, 200, 300} | √ | √ | () | ||

| Selection tournament size | [2, 10] | √ | √ | √ | ||

| Hypervolume offset | {10, 20, 50, 100, 200, 500} | √ | ||||

| Neighborhood size | {10, 20, 50, 100} | √ | ||||

| Neighbor select probability | [0.0, 1.0] | √ |

| Parameter | KNP | TSP | ||||||||

| Encoding | Binary strings | Permutation of integers | ||||||||

| Initial solutions | random | random | ||||||||

| Mutation operator | Bit-flip | Permutation swap | ||||||||

| Crossover operator | Single point | PMX | ||||||||

| Selection | Tournament | Tournament | ||||||||

| NSGA | MOEA/D | SMS-EMOA | gGA | eES | NSGA | MOEA/D | SMS-EMOA | gGA | eES | |

| Crossover probability | 0.9784 | 0.9578 | 0.9512 | 0.8795 | _ | 0.9843 | 0.9421 | 0.9754 | 0.7895 | _ |

| Mutation probability | 0.0485 | 0.0578 | 0.0358 | 0.0081 | 0.2239 | 0.0163 | 0.0105 | 0.0093 | 0.1116 | 0.3806 |

| Population size | 20 | 200 | 20 | 20 | 1 | 20 | 300 | 20 | 20 | 1 |

| Offspring population size | 20 | _ | _ | 20 | 4 | 20 | _ | _ | 100 | 2 |

| Tournament size | 5 | _ | 2 | 2 | _ | 6 | _ | 2 | 4 | _ |

| Hypervolume offset | _ | _ | 200 | _ | _ | _ | _ | 200 | _ | _ |

| Neighborhood size | _ | 20 | _ | _ | _ | _ | 50 | _ | _ | _ |

| Neighbor probability | _ | 0.8895 | _ | _ | _ | _ | 0.9354 | _ | _ | _ |

| Problem | Multi-Objective | Single-Objective | Test | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| NSGAII | MOEA/D | SMSEMOA | GA | ES | ||||||||||||||

| Mean | Median | Mean | Median | Mean | Median | Mean | Median | Mean | Median | |||||||||

| Set 1/A | 2KP100-50 | 76.1% | 76.1% | 81.1% | 81.6% | 77.3% | 77.8% | 96.1% | 96.3% | 93.5% | 93.6% | S | ||||||

| 2KP50-11 | 85.3% | 87.5% | 89.0% | 91.3% | 88.9% | 88.4% | 99.1% | 100.0% | 96.3% | 100.0% | S | |||||||

| 2KP50-50 | 83.4% | 84.9% | 92.0% | 93.3% | 84.0% | 85.1% | 97.8% | 98.3% | 95.4% | 97.0% | S | |||||||

| 2KP50-92 | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 99.7% | 100.0% | 98.0% | 100.0% | M | |||||||

| 2KP500-41 | 63.1% | 63.4% | 63.3% | 63.6% | 62.4% | 62.4% | 94.3% | 94.4% | 88.9% | 88.9% | S | |||||||

| Set 1/B | 2KP100-1A | 70.2% | 70.9% | 78.4% | 78.0% | 73.4% | 73.5% | 96.1% | 96.5% | 90.7% | 90.6% | S | ||||||

| 2KP100-1B | 70.1% | 70.2% | 80.1% | 79.8% | 72.5% | 72.7% | 96.7% | 96.6% | 90.4% | 90.8% | S | |||||||

| 2KP100-1C | 82.1% | 82.6% | 85.4% | 85.4% | 82.6% | 83.3% | 96.5% | 96.6% | 93.1% | 93.3% | S | |||||||

| 2KP100-1D | 83.1% | 84.4% | 88.0% | 87.8% | 83.8% | 84.8% | 96.5% | 97.0% | 93.4% | 93.8% | S | |||||||

| 2KP200-1A | 69.6% | 70.2% | 76.4% | 76.6% | 71.3% | 71.7% | 95.7% | 95.9% | 89.2% | 89.6% | S | |||||||

| 2KP200-1B | 70.7% | 70.7% | 76.7% | 76.6% | 71.6% | 71.8% | 96.1% | 96.4% | 90.2% | 90.4% | S | |||||||

| 2KP200-1C | 65.7% | 65.9% | 77.2% | 77.3% | 66.6% | 67.3% | 96.5% | 96.4% | 91.0% | 91.2% | S | |||||||

| 2KP200-1D | 65.2% | 65.7% | 73.4% | 73.7% | 67.8% | 68.6% | 96.4% | 96.1% | 90.6% | 90.8% | S | |||||||

| 2KP300-1A | 68.4% | 68.4% | 73.1% | 73.6% | 68.7% | 69.1% | 95.4% | 95.4% | 89.7% | 90.1% | S | |||||||

| 2KP300-1B | 69.8% | 70.3% | 73.7% | 73.4% | 68.8% | 68.9% | 95.9% | 96.0% | 90.5% | 90.8% | S | |||||||

| 2KP300-1C | 71.1% | 71.7% | 81.7% | 82.2% | 71.5% | 71.4% | 95.2% | 95.5% | 90.9% | 91.2% | S | |||||||

| 2KP300-1D | 80.5% | 80.3% | 82.2% | 82.4% | 77.3% | 77.4% | 95.1% | 95.3% | 89.9% | 90.1% | S | |||||||

| 2KP400-1A | 68.0% | 68.2% | 69.4% | 69.1% | 67.3% | 67.7% | 95.8% | 96.1% | 87.8% | 87.9% | S | |||||||

| 2KP400-1B | 66.2% | 65.9% | 68.2% | 68.0% | 66.1% | 66.5% | 95.5% | 95.3% | 87.5% | 87.4% | S | |||||||

| 2KP400-1C | 65.6% | 65.2% | 69.6% | 69.2% | 65.4% | 65.1% | 97.4% | 97.6% | 85.3% | 85.8% | S | |||||||

| 2KP400-1D | 55.6% | 55.7% | 59.2% | 59.8% | 56.3% | 56.4% | 96.9% | 96.9% | 87.1% | 87.0% | S | |||||||

| 2KP500-1A | 63.9% | 64.4% | 67.2% | 66.8% | 63.3% | 63.5% | 93.4% | 93.7% | 85.4% | 85.4% | S | |||||||

| 2KP500-1B | 64.0% | 63.8% | 66.8% | 66.6% | 62.7% | 62.4% | 94.1% | 94.2% | 86.6% | 87.0% | S | |||||||

| 2KP500-1C | 75.8% | 75.9% | 81.4% | 81.7% | 74.4% | 74.7% | 95.2% | 95.1% | 88.3% | 88.5% | S | |||||||

| 2KP500-1D | 72.3% | 72.7% | 71.3% | 71.4% | 69.3% | 69.7% | 95.0% | 94.9% | 88.8% | 88.3% | S | |||||||

| Set 2/UNCOR | F5050W01 | 82.9% | 84.0% | 90.7% | 91.9% | 80.7% | 83.3% | 96.9% | 97.7% | 94.0% | 93.7% | S | ||||||

| F5050W02 | 90.4% | 90.9% | 94.8% | 94.8% | 86.3% | 87.0% | 99.0% | 99.3% | 97.2% | 98.2% | S | |||||||

| F5050W03 | 89.4% | 90.0% | 95.6% | 96.4% | 84.2% | 82.2% | 99.5% | 100.0% | 97.9% | 100.0% | S | |||||||

| F5050W04 | 94.1% | 95.3% | 96.1% | 95.3% | 89.4% | 92.4% | 98.8% | 99.5% | 97.2% | 98.8% | S | |||||||

| F5050W05 | 86.2% | 84.6% | 91.1% | 93.4% | 85.1% | 84.6% | 98.6% | 100.0% | 95.2% | 94.2% | S | |||||||

| F5050W06 | 87.9% | 88.1% | 93.1% | 92.6% | 84.7% | 85.7% | 97.1% | 97.0% | 93.8% | 93.8% | S | |||||||

| F5050W07 | 88.6% | 89.9% | 93.8% | 94.9% | 86.7% | 87.8% | 99.1% | 99.7% | 97.9% | 97.9% | S | |||||||

| F5050W08 | 90.0% | 91.8% | 94.0% | 94.2% | 83.7% | 83.9% | 98.6% | 99.3% | 94.9% | 97.4% | S | |||||||

| F5050W09 | 97.2% | 98.9% | 98.8% | 99.2% | 95.5% | 96.5% | 99.0% | 99.5% | 98.8% | 99.5% | S | |||||||

| F5050W10 | 93.3% | 94.6% | 97.2% | 98.6% | 90.2% | 89.9% | 98.8% | 100.0% | 97.4% | 100.0% | S | |||||||

| K5050W01 | 87.0% | 88.2% | 92.0% | 93.9% | 80.3% | 82.1% | 95.5% | 94.4% | 92.6% | 93.9% | S | |||||||

| K5050W02 | 88.0% | 88.1% | 91.6% | 93.1% | 83.5% | 84.1% | 98.6% | 99.2% | 95.0% | 95.5% | S | |||||||

| K5050W03 | 96.3% | 97.9% | 97.7% | 98.5% | 94.4% | 94.4% | 99.1% | 99.0% | 96.7% | 97.9% | S | |||||||

| K5050W04 | 92.4% | 93.0% | 95.9% | 97.4% | 86.5% | 86.5% | 99.5% | 99.7% | 97.0% | 99.1% | S | |||||||

| K5050W05 | 79.8% | 79.9% | 89.2% | 88.1% | 76.7% | 76.8% | 98.7% | 100.0% | 93.5% | 91.5% | S | |||||||

| K5050W06 | 87.4% | 87.0% | 93.5% | 93.7% | 83.5% | 84.3% | 97.5% | 97.0% | 96.2% | 96.0% | S | |||||||

| K5050W07 | 84.6% | 85.3% | 92.5% | 94.1% | 79.0% | 80.3% | 98.8% | 98.9% | 97.6% | 98.4% | S | |||||||

| K5050W08 | 93.0% | 91.3% | 93.9% | 94.3% | 89.7% | 90.9% | 96.6% | 97.2% | 95.3% | 97.2% | S | |||||||

| K5050W09 | 91.3% | 91.6% | 94.4% | 94.2% | 85.8% | 87.8% | 98.6% | 99.4% | 96.1% | 96.1% | S | |||||||

| K5050W10 | 87.8% | 87.6% | 95.2% | 95.6% | 81.5% | 82.5% | 97.9% | 97.8% | 97.4% | 97.4% | S | |||||||

| Set 2/WEAK | 4W150W1 | 98.2% | 98.3% | 98.2% | 98.3% | 98.1% | 98.1% | 98.5% | 98.5% | 97.8% | 97.8% | S | ||||||

| 4W1W1 | 94.1% | 94.1% | 96.3% | 96.5% | 95.2% | 95.3% | 97.7% | 97.7% | 93.8% | 93.9% | S | |||||||

| 4W200W1 | 97.8% | 97.9% | 97.7% | 97.7% | 97.7% | 97.7% | 98.1% | 98.2% | 96.9% | 97.1% | - | |||||||

| 4W250W1 | 90.9% | 90.6% | 91.1% | 91.5% | 89.6% | 89.7% | 92.9% | 92.9% | 88.1% | 88.3% | S | |||||||

| 4W300W1 | 92.7% | 92.7% | 93.9% | 94.1% | 91.7% | 92.1% | 95.3% | 94.9% | 89.9% | 90.1% | S | |||||||

| 4W350W1 | 91.9% | 92.0% | 93.2% | 93.0% | 91.4% | 91.5% | 95.8% | 96.1% | 90.0% | 90.2% | S | |||||||

| 4W400W1 | 92.0% | 92.1% | 93.6% | 94.2% | 91.9% | 91.8% | 96.5% | 96.8% | 89.2% | 88.8% | S | |||||||

| 4W450W1 | 88.0% | 88.1% | 90.1% | 90.0% | 88.2% | 88.2% | 93.2% | 93.5% | 85.9% | 85.8% | S | |||||||

| 4W500W1 | 88.9% | 88.9% | 91.3% | 91.8% | 89.4% | 89.5% | 95.0% | 95.3% | 87.2% | 87.5% | S | |||||||

| 4W600W1 | 86.5% | 86.9% | 89.9% | 89.8% | 87.5% | 87.5% | 93.3% | 93.2% | 84.5% | 84.1% | S | |||||||

| 4W700W1 | 84.0% | 84.0% | 87.2% | 87.3% | 85.7% | 85.8% | 91.3% | 91.5% | 81.5% | 81.7% | S | |||||||

| 4W800W1 | 84.9% | 84.7% | 89.7% | 89.7% | 87.4% | 87.9% | 93.6% | 93.8% | 83.6% | 83.6% | S | |||||||

| 4W900W1 | 83.3% | 83.5% | 89.3% | 89.4% | 86.9% | 86.9% | 93.0% | 93.4% | 83.1% | 83.2% | S | |||||||

| W4100W1 | 94.2% | 94.5% | 94.4% | 94.8% | 93.5% | 93.7% | 94.7% | 95.0% | 92.2% | 92.6% | - | |||||||

| W4C50W01 | 98.9% | 98.8% | 99.1% | 98.8% | 98.7% | 98.8% | 99.7% | 100.0% | 98.9% | 98.8% | S | |||||||

| Set 2/STRONG | 1S1W1 | 84.9% | 85.2% | 77.2% | 76.3% | 78.4% | 77.8% | 66.7% | 66.9% | 18.8% | 18.5% | M | ||||||

| 1S250W1 | 88.1% | 88.8% | 86.0% | 85.5% | 79.9% | 80.7% | 76.4% | 77.6% | 27.6% | 27.1% | M | |||||||

| 1S300W1 | 87.3% | 88.3% | 85.9% | 85.7% | 79.5% | 79.4% | 78.3% | 78.8% | 32.7% | 31.4% | M | |||||||

| 1S350W1 | 85.0% | 85.2% | 81.7% | 82.4% | 78.1% | 78.2% | 70.8% | 70.6% | 16.7% | 17.9% | M | |||||||

| 1S400W1 | 88.6% | 88.1% | 86.2% | 85.7% | 79.0% | 79.8% | 72.2% | 73.2% | 21.0% | 19.6% | M | |||||||

| 1S450W1 | 86.3% | 85.8% | 83.9% | 85.5% | 78.4% | 79.5% | 69.9% | 68.9% | 19.4% | 18.7% | M | |||||||

| 1S500W1 | 87.8% | 87.6% | 85.5% | 85.4% | 79.6% | 80.8% | 71.8% | 71.6% | 21.9% | 22.2% | M | |||||||

| 1S600W1 | 87.3% | 87.5% | 85.0% | 84.3% | 80.3% | 80.6% | 73.0% | 73.1% | 21.1% | 20.6% | M | |||||||

| 1S700W1 | 84.9% | 85.0% | 82.6% | 82.7% | 77.3% | 77.6% | 70.2% | 70.3% | 19.9% | 20.2% | M | |||||||

| 1S800W1 | 86.5% | 86.3% | 80.8% | 81.5% | 79.4% | 80.5% | 69.0% | 69.6% | 14.0% | 13.1% | M | |||||||

| 1S900W1 | 82.9% | 83.0% | 79.3% | 80.8% | 75.3% | 76.7% | 66.4% | 66.9% | 17.6% | 17.4% | M | |||||||

| S1100W1 | 82.5% | 86.7% | 80.3% | 80.4% | 76.1% | 75.5% | 77.9% | 75.6% | 44.6% | 50.5% | M | |||||||

| S1150W1 | 82.5% | 82.0% | 82.5% | 82.0% | 75.6% | 73.0% | 74.0% | 73.0% | 26.3% | 27.5% | M | |||||||

| S1200W1 | 84.5% | 85.7% | 81.5% | 80.7% | 76.8% | 75.7% | 72.1% | 70.6% | 30.4% | 30.1% | M | |||||||

| S1C50W01 | 67.9% | 74.4% | 62.4% | 51.4% | 60.3% | 51.4% | 62.9% | 70.5% | 29.8% | 26.3% | M | |||||||

| Problem | Multi-Objective | Single-Objective | Test | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| NSGAII | MOEA/D | SMSEMOA | GA | ES | ||||||||||||||

| Mean | Median | Mean | Median | Mean | Median | Mean | Median | Mean | Median | |||||||||

| Set 1A | 2KP100-50 | 76.4% | 77.2% | 86.6% | 87.4% | 79.5% | 80.4% | 97.0% | 97.1% | 95.1% | 95.5% | S | ||||||

| 2KP50-11 | 81.8% | 84.7% | 82.2% | 86.4% | 88.6% | 90.4% | 98.5% | 100.0% | 93.7% | 100.0% | S | |||||||

| 2KP50-50 | 85.9% | 86.6% | 90.9% | 90.9% | 87.3% | 88.3% | 97.3% | 97.7% | 94.1% | 93.9% | S | |||||||

| 2KP50-92 | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 99.6% | 100.0% | 98.1% | 100.0% | M | |||||||

| 2KP500-41 | 63.4% | 64.2% | 57.1% | 57.2% | 61.3% | 61.9% | 97.2% | 97.3% | 91.6% | 91.9% | S | |||||||

| Set 1B | 2KP100-1A | 74.6% | 74.9% | 84.2% | 84.8% | 75.3% | 75.8% | 97.1% | 97.4% | 92.5% | 93.6% | S | ||||||

| 2KP100-1B | 74.3% | 75.1% | 83.4% | 83.4% | 77.0% | 77.7% | 97.3% | 97.7% | 93.1% | 93.5% | S | |||||||

| 2KP100-1C | 82.2% | 82.3% | 86.9% | 86.5% | 82.7% | 82.1% | 97.4% | 97.9% | 94.1% | 94.3% | S | |||||||

| 2KP100-1D | 76.4% | 76.5% | 81.3% | 81.5% | 76.8% | 77.0% | 96.4% | 96.4% | 92.0% | 92.3% | S | |||||||

| 2KP200-1A | 69.9% | 70.3% | 77.1% | 76.5% | 70.0% | 70.5% | 96.4% | 96.5% | 91.7% | 91.6% | S | |||||||

| 2KP200-1B | 69.8% | 70.5% | 75.9% | 76.3% | 70.4% | 70.6% | 96.7% | 97.0% | 91.0% | 91.2% | S | |||||||

| 2KP200-1C | 60.9% | 61.1% | 65.3% | 65.4% | 63.3% | 63.4% | 97.4% | 97.4% | 89.5% | 89.8% | S | |||||||

| 2KP200-1D | 63.6% | 63.8% | 71.4% | 71.0% | 64.1% | 63.8% | 96.8% | 96.9% | 90.3% | 90.4% | S | |||||||

| 2KP300-1A | 67.1% | 67.2% | 71.2% | 71.5% | 66.8% | 66.7% | 95.6% | 95.6% | 89.0% | 88.5% | S | |||||||

| 2KP300-1B | 68.5% | 68.8% | 73.0% | 73.1% | 68.3% | 68.6% | 96.6% | 96.5% | 90.8% | 90.9% | S | |||||||

| 2KP300-1C | 67.1% | 67.3% | 64.5% | 64.4% | 66.8% | 66.0% | 96.4% | 96.4% | 87.1% | 87.0% | S | |||||||

| 2KP300-1D | 82.9% | 83.1% | 84.7% | 84.8% | 80.7% | 80.5% | 96.7% | 97.0% | 92.2% | 92.6% | S | |||||||

| 2KP400-1A | 66.2% | 66.7% | 73.3% | 73.4% | 66.1% | 66.1% | 94.8% | 94.8% | 88.6% | 88.5% | S | |||||||

| 2KP400-1B | 67.6% | 67.9% | 73.7% | 73.8% | 66.7% | 67.4% | 94.6% | 94.6% | 89.3% | 89.8% | S | |||||||

| 2KP400-1C | 69.2% | 69.3% | 73.6% | 73.6% | 67.8% | 68.1% | 96.2% | 96.1% | 88.9% | 88.9% | S | |||||||

| 2KP400-1D | 54.4% | 54.2% | 58.3% | 58.3% | 54.4% | 54.1% | 97.3% | 97.3% | 86.7% | 86.4% | S | |||||||

| 2KP500-1A | 67.0% | 66.8% | 70.7% | 70.6% | 66.3% | 66.2% | 97.2% | 97.6% | 88.5% | 88.6% | S | |||||||

| 2KP500-1B | 65.1% | 65.6% | 69.2% | 68.7% | 64.5% | 64.0% | 95.0% | 94.9% | 86.9% | 87.0% | S | |||||||

| 2KP500-1C | 72.1% | 72.1% | 70.0% | 70.7% | 70.1% | 70.7% | 96.1% | 96.0% | 86.2% | 85.6% | S | |||||||

| 2KP500-1D | 71.7% | 71.9% | 72.5% | 72.4% | 70.0% | 69.9% | 94.0% | 94.3% | 87.3% | 87.2% | S | |||||||

| set 2/UNCOR | F5050W01 | 86.9% | 87.8% | 93.4% | 93.5% | 82.7% | 83.4% | 97.2% | 98.0% | 95.3% | 95.9% | S | ||||||

| F5050W02 | 89.1% | 89.5% | 93.7% | 94.0% | 85.1% | 86.2% | 97.7% | 98.9% | 93.1% | 92.8% | S | |||||||

| F5050W03 | 88.6% | 89.1% | 95.0% | 94.7% | 86.6% | 87.2% | 98.1% | 100.0% | 95.8% | 95.1% | S | |||||||

| F5050W04 | 84.7% | 85.2% | 89.9% | 90.5% | 82.8% | 83.9% | 94.6% | 94.5% | 91.8% | 91.9% | S | |||||||

| F5050W05 | 91.8% | 91.7% | 96.2% | 98.7% | 84.1% | 86.7% | 98.7% | 99.0% | 97.4% | 98.7% | S | |||||||

| F5050W06 | 88.0% | 88.6% | 90.8% | 91.2% | 86.5% | 86.7% | 98.4% | 100.0% | 93.8% | 94.4% | S | |||||||

| F5050W07 | 91.4% | 91.7% | 98.6% | 99.9% | 89.6% | 91.3% | 99.7% | 100.0% | 99.3% | 99.9% | S | |||||||

| F5050W08 | 93.4% | 94.7% | 96.8% | 97.4% | 91.9% | 93.9% | 98.6% | 98.9% | 97.1% | 97.7% | S | |||||||

| F5050W09 | 94.9% | 95.4% | 98.2% | 98.6% | 93.0% | 94.3% | 98.4% | 99.5% | 97.0% | 97.1% | - | |||||||

| F5050W10 | 90.0% | 90.6% | 92.4% | 91.0% | 88.1% | 89.8% | 98.0% | 99.3% | 94.3% | 93.1% | S | |||||||

| K5050W01 | 87.0% | 86.0% | 92.9% | 93.4% | 84.7% | 85.8% | 97.3% | 97.1% | 95.0% | 96.1% | S | |||||||

| K5050W02 | 91.0% | 89.3% | 95.3% | 100.0% | 87.3% | 87.2% | 98.1% | 100.0% | 96.1% | 96.0% | S | |||||||

| K5050W03 | 89.9% | 91.0% | 92.6% | 91.1% | 86.3% | 86.7% | 98.1% | 99.6% | 93.0% | 92.9% | S | |||||||

| K5050W04 | 85.7% | 87.2% | 93.7% | 93.8% | 83.3% | 84.7% | 98.7% | 98.9% | 96.8% | 99.3% | S | |||||||

| K5050W05 | 87.0% | 87.3% | 93.7% | 94.7% | 84.5% | 84.4% | 98.8% | 99.2% | 97.2% | 98.1% | S | |||||||

| K5050W06 | 90.5% | 91.3% | 94.2% | 93.5% | 88.1% | 88.5% | 97.1% | 99.8% | 91.8% | 92.1% | S | |||||||

| K5050W07 | 84.2% | 85.6% | 91.9% | 92.3% | 81.4% | 82.9% | 97.7% | 98.5% | 95.6% | 94.6% | S | |||||||

| K5050W08 | 94.1% | 94.3% | 96.8% | 97.0% | 92.3% | 93.5% | 97.8% | 97.8% | 95.3% | 96.1% | S | |||||||

| K5050W09 | 90.2% | 91.7% | 95.2% | 98.7% | 85.1% | 86.7% | 99.6% | 100.0% | 97.7% | 99.9% | S | |||||||

| K5050W10 | 81.1% | 80.8% | 87.4% | 87.5% | 78.2% | 79.0% | 97.5% | 97.5% | 95.1% | 96.1% | S | |||||||

| set 2/WEAK | 4W150W1 | 95.5% | 95.6% | 95.7% | 96.0% | 94.9% | 95.2% | 96.1% | 96.6% | 93.4% | 93.5% | - | ||||||

| 4W1W1 | 86.5% | 86.4% | 93.1% | 93.5% | 89.6% | 90.0% | 94.5% | 94.7% | 85.7% | 85.9% | S | |||||||

| 4W200W1 | 93.6% | 93.9% | 93.4% | 93.8% | 93.3% | 93.6% | 93.8% | 94.2% | 89.9% | 89.9% | - | |||||||

| 4W250W1 | 91.9% | 91.9% | 92.4% | 92.3% | 90.6% | 90.7% | 93.2% | 93.3% | 89.2% | 89.6% | S | |||||||

| 4W300W1 | 93.6% | 93.5% | 94.6% | 94.4% | 92.1% | 92.1% | 95.3% | 95.4% | 91.4% | 91.2% | - | |||||||

| 4W350W1 | 93.3% | 93.7% | 94.4% | 94.4% | 92.7% | 92.4% | 96.2% | 96.5% | 90.9% | 90.9% | S | |||||||

| 4W400W1 | 90.8% | 91.4% | 92.5% | 92.4% | 90.7% | 90.3% | 94.0% | 94.3% | 88.4% | 88.4% | S | |||||||

| 4W450W1 | 89.8% | 89.9% | 92.0% | 92.0% | 90.0% | 90.3% | 94.2% | 94.2% | 87.2% | 87.5% | S | |||||||

| 4W500W1 | 90.9% | 90.5% | 93.2% | 93.9% | 91.1% | 90.6% | 95.9% | 96.1% | 88.4% | 88.8% | S | |||||||

| 4W600W1 | 89.0% | 89.2% | 92.6% | 92.5% | 90.1% | 90.1% | 95.2% | 95.2% | 87.3% | 87.5% | S | |||||||

| 4W700W1 | 88.3% | 88.6% | 92.1% | 92.2% | 90.5% | 90.3% | 95.0% | 95.4% | 86.2% | 86.0% | S | |||||||

| 4W800W1 | 87.8% | 87.8% | 92.8% | 93.1% | 90.2% | 90.1% | 95.7% | 95.8% | 86.6% | 86.9% | S | |||||||

| 4W900W1 | 83.0% | 83.2% | 89.2% | 89.1% | 86.5% | 86.5% | 91.7% | 91.7% | 82.8% | 83.3% | S | |||||||

| W4100W1 | 95.5% | 96.1% | 95.7% | 96.2% | 94.9% | 95.1% | 95.7% | 96.0% | 93.8% | 94.0% | - | |||||||

| W4C50W01 | 98.6% | 98.3% | 98.7% | 98.3% | 98.7% | 100.0% | 99.2% | 100.0% | 98.5% | 100.0% | S | |||||||

| set 2/STRONG | 1S1W1 | 48.4% | 48.4% | 30.6% | 29.8% | 41.0% | 41.4% | 96.4% | 96.5% | 86.6% | 86.8% | S | ||||||

| 1S250W1 | 68.4% | 68.7% | 52.0% | 52.4% | 54.2% | 55.2% | 95.1% | 95.1% | 86.9% | 87.0% | S | |||||||

| 1S300W1 | 65.1% | 66.7% | 52.8% | 52.2% | 49.6% | 50.5% | 94.6% | 94.9% | 86.3% | 86.6% | S | |||||||

| 1S350W1 | 62.1% | 63.4% | 47.2% | 47.1% | 47.3% | 47.5% | 95.1% | 95.1% | 87.0% | 87.0% | S | |||||||

| 1S400W1 | 66.9% | 66.7% | 56.6% | 57.5% | 54.4% | 54.4% | 94.5% | 94.6% | 87.2% | 87.1% | S | |||||||

| 1S450W1 | 61.3% | 61.2% | 48.8% | 49.0% | 47.8% | 47.9% | 95.2% | 95.3% | 86.6% | 86.3% | S | |||||||

| 1S500W1 | 54.0% | 54.2% | 39.6% | 38.9% | 41.2% | 41.3% | 94.2% | 94.0% | 84.7% | 84.9% | S | |||||||

| 1S600W1 | 54.9% | 55.6% | 40.1% | 40.7% | 42.6% | 43.1% | 95.0% | 95.1% | 85.4% | 85.1% | S | |||||||

| 1S700W1 | 58.7% | 59.4% | 48.2% | 47.8% | 50.7% | 51.6% | 95.9% | 95.9% | 87.4% | 87.5% | S | |||||||

| 1S800W1 | 53.0% | 52.5% | 38.1% | 36.6% | 44.6% | 44.7% | 95.9% | 96.1% | 86.4% | 86.1% | S | |||||||

| 1S900W1 | 50.8% | 50.9% | 35.5% | 34.2% | 40.7% | 40.8% | 95.4% | 95.5% | 85.4% | 85.7% | S | |||||||

| S1100W1 | 76.0% | 76.3% | 68.3% | 68.5% | 64.5% | 66.5% | 94.2% | 94.5% | 85.9% | 86.5% | S | |||||||

| S1150W1 | 78.3% | 79.7% | 70.7% | 72.7% | 65.6% | 67.0% | 95.8% | 96.1% | 91.2% | 92.0% | S | |||||||

| S1200W1 | 67.7% | 68.5% | 56.9% | 59.5% | 51.7% | 52.1% | 92.5% | 92.6% | 85.9% | 86.4% | S | |||||||

| S1C50W01 | 84.8% | 87.3% | 81.6% | 81.8% | 79.6% | 82.6% | 92.5% | 92.6% | 87.9% | 90.9% | S | |||||||

| Problem | Multi-Objective | Single-Objective | Test | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| NSGA-II | MOEA/D | SMS-EMOA | gGA | eES | |||||||||||||

| Mean | Median | Mean | Median | Mean | Median | Mean | Median | Mean | Median | ||||||||

| clusAB100 | 71.3% | 72.0% | 59.7% | 60.3% | 22.5% | 20.8% | 65.6% | 67.3% | 62.2% | 61.2% | M | ||||||

| clusAB300 | 64.3% | 64.5% | 56.4% | 55.7% | 31.2% | 32.0% | 64.8% | 66.2% | 63.0% | 63.9% | - | ||||||

| clusAB500 | 62.1% | 62.5% | 66.2% | 68.5% | 71.2% | 72.9% | 31.7% | 31.2% | 29.5% | 30.3% | M | ||||||

| euclAB100 | 83.2% | 82.5% | 77.2% | 77.2% | 29.2% | 29.3% | 82.6% | 82.8% | 81.8% | 82.45% | - | ||||||

| euclAB300 | 72.2% | 72.3% | 68.8% | 68.8% | 29.8% | 30.8% | 79.2% | 79.2% | 78.3% | 78.6% | S | ||||||

| euclAB500 | 72.7% | 72.7% | 74.0% | 73.2% | 61.9% | 63.3% | 42.5% | 43.8% | 45.7% | 45.7% | M | ||||||

| kroAB100 | 83.1% | 83.9% | 71.2% | 71.1% | 27.3% | 26.5% | 79.0% | 78.3% | 77.7% | 79.1% | M | ||||||

| kroAB1000 | 69.3% | 69.4% | 74.3% | 74.7% | 93.0% | 92.7% | 11.8% | 11.7% | 10.9% | 10.9% | M | ||||||

| kroAB150 | 72.7% | 73.1% | 61.9% | 61.6% | 20.7% | 20.5% | 79.1% | 80.2% | 77.7% | 78.2% | S | ||||||

| kroAB200 | 67.3% | 68.8% | 58.5% | 57.7% | 21.9% | 22.1% | 77.9% | 77.2% | 78.6% | 79.4% | S | ||||||

| kroAB300 | 69.9% | 70.3% | 65.2% | 64.9% | 30.4% | 28.6% | 76.8% | 77.5% | 77.6% | 79.0% | S | ||||||

| kroAB400 | 73.7% | 73.5% | 64.4% | 65.0% | 39.1% | 39.6% | 58.2% | 57.4% | 59.2% | 58.4% | M | ||||||

| kroAB500 | 68.8% | 68.4% | 68.8% | 67.8% | 62.8% | 63.5% | 38.0% | 37.8% | 38.1% | 39.2% | M | ||||||

| kroAB750 | 67.2% | 67.4% | 71.7% | 71.7% | 84.7% | 85.4% | 19.9% | 19.9% | 18.8% | 18.3% | M | ||||||

| kroAC100 | 82.6% | 83.1% | 74.8% | 75.4% | 31.6% | 31.6% | 80.1% | 79.4% | 78.9% | 80.2% | M | ||||||

| kroAD100 | 80.9% | 81.0% | 72.6% | 73.1% | 27.4% | 25.8% | 77.0% | 76.4% | 75.8% | 77.1% | M | ||||||

| kroBC100 | 81.1% | 81.3% | 74.2% | 73.9% | 31.6% | 32.3% | 77.9% | 77.2% | 77.1% | 76.1% | M | ||||||

| kroBD100 | 80.8% | 81.1% | 71.7% | 72.9% | 26.9% | 27.1% | 76.5% | 75.7% | 75.6% | 74.6% | M | ||||||

| kroCD100 | 79.4% | 79.9% | 70.1% | 71.8% | 28.0% | 29.0% | 74.5% | 73.9% | 76.3% | 76.9% | M | ||||||

| Problem | Multi-Objective | Single-Objective | Test | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| NSGA-II | MOEA/D | SMS-EMOA | gGA | eES | |||||||||||||

| Mean | Median | Mean | Median | Mean | Median | Mean | Median | Mean | Median | ||||||||

| clusAB100 | 78.3% | 79.0% | 69.7% | 69.3% | 31.6% | 33.5% | 70.9% | 71.1% | 70.1% | 71.9% | M | ||||||

| clusAB300 | 61.7% | 61.5% | 54.4% | 55.4% | 27.0% | 24.4% | 63.9% | 64.9% | 61.6% | 62.4% | - | ||||||

| clusAB500 | 68.2% | 68.8% | 69.4% | 69.6% | 72.8% | 73.6% | 38.5% | 37.7% | 38.2% | 38.4% | M | ||||||

| euclAB100 | 84.3% | 84.0% | 76.4% | 75.6% | 28.3% | 29.7% | 81.4% | 81.5% | 80.0% | 81.3% | M | ||||||

| euclAB300 | 71.2% | 72.3% | 63.1% | 62.5% | 23.0% | 23.6% | 79.5% | 80.2% | 76.1% | 76.7% | S | ||||||

| euclAB500 | 67.3% | 67.1% | 67.7% | 67.1% | 58.1% | 58.2% | 32.9% | 33.2% | 33.1% | 33.3% | M | ||||||

| kroAB100 | 81.6% | 82.3% | 72.0% | 73.6% | 24.5% | 23.9% | 77.2% | 76.5% | 76.3% | 75.3% | M | ||||||

| kroAB1000 | 67.4% | 67.2% | 72.6% | 72.0% | 91.7% | 91.4% | 11.1% | 11.2% | 10.0% | 10.0% | M | ||||||

| kroAB150 | 74.7% | 74.8% | 66.5% | 66.2% | 24.5% | 25.0% | 78.5% | 79.4% | 77.4% | 78.7% | S | ||||||

| kroAB200 | 72.2% | 72.2% | 63.9% | 64.0% | 24.1% | 24.6% | 81.8% | 82.0% | 80.9% | 81.2% | S | ||||||

| kroAB300 | 71.5% | 71.7% | 65.3% | 65.5% | 31.6% | 31.9% | 75.1% | 74.2% | 76.0% | 77.0% | S | ||||||

| kroAB400 | 70.2% | 69.5% | 66.9% | 67.7% | 43.0% | 41.5% | 62.3% | 61.0% | 60.7% | 60.1% | M | ||||||

| kroAB500 | 65.2% | 66.1% | 64.1% | 64.1% | 54.6% | 53.0% | 30.9% | 33.1% | 28.8% | 29.8% | M | ||||||

| kroAB750 | 65.9% | 66.2% | 70.6% | 70.8% | 85.3% | 85.1% | 16.8% | 16.9% | 16.6% | 16.3% | M | ||||||

| kroAC100 | 76.6% | 77.0% | 65.5% | 66.0% | 21.2% | 20.0% | 71.1% | 70.6% | 73.0% | 73.6% | M | ||||||

| kroAD100 | 81.2% | 81.6% | 69.3% | 70.0% | 21.8% | 21.7% | 76.4% | 76.2% | 72.3% | 73.5% | M | ||||||

| kroBC100 | 83.0% | 83.0% | 74.4% | 75.0% | 29.6% | 29.0% | 77.3% | 76.7% | 79.0% | 79.6% | M | ||||||

| kroBD100 | 79.1% | 79.6% | 72.3% | 74.3% | 20.0% | 19.7% | 77.4% | 77.1% | 72.9% | 74.2% | - | ||||||

| kroCD100 | 82.3% | 82.8% | 72.6% | 73.4% | 28.7% | 28.9% | 75.3% | 74.7% | 77.1% | 77.7% | M | ||||||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mahrach, M.; Miranda, G.; León, C.; Segredo, E. Comparison between Single and Multi-Objective Evolutionary Algorithms to Solve the Knapsack Problem and the Travelling Salesman Problem. Mathematics 2020, 8, 2018. https://doi.org/10.3390/math8112018

Mahrach M, Miranda G, León C, Segredo E. Comparison between Single and Multi-Objective Evolutionary Algorithms to Solve the Knapsack Problem and the Travelling Salesman Problem. Mathematics. 2020; 8(11):2018. https://doi.org/10.3390/math8112018

Chicago/Turabian StyleMahrach, Mohammed, Gara Miranda, Coromoto León, and Eduardo Segredo. 2020. "Comparison between Single and Multi-Objective Evolutionary Algorithms to Solve the Knapsack Problem and the Travelling Salesman Problem" Mathematics 8, no. 11: 2018. https://doi.org/10.3390/math8112018