1. Introduction

With the increasing complexity of practical problems, global optimization plays a significant role in many real-world applications, including engineering design, manufacturing systems, economics, physical science, machine learning and other related fields [1]. Because finding the global extremum with traditional optimization methods is difficult and inefficient, metaheuristics can be a practical and elegant way to obtain satisfactory solutions to practical engineering optimization problems [2].

Metaheuristic methods are commonly classified in the literature into several categories, such as evolutionary-based algorithms, swarm-based algorithms, physics-based algorithms and hybrid algorithms. Evolutionary-based methods originate from the theory of evolution, such as genetic algorithms [3] and differential evolution [4]. In recent years, many effective and competitive evolutionary-based algorithms have been proposed, including QANA [5] and DMDE [6]. Swarm-based algorithms emulate the collective decision-making of various social groups. Particle swarm optimization (PSO) [7,8,9] is an excellent classical swarm-based algorithm.

PSO has many attractive advantages, such as its simple implementation and small number of control parameters. Thus, it is widely applied in feature selection, wireless communications, image processing, electrical power systems and other fields [10]. Physics-based algorithms are inspired by the laws of physics. Simulated annealing [11], the gravitational search algorithm (GSA) [12] and artificial electric field optimization [13] are classical and popular physics-based methods. Furthermore, hybrid algorithms combine several methods to obtain better results.

The state transition algorithm (STA) [14,15] is a metaheuristic method for global optimization proposed in recent years. Unlike many popular population-based metaheuristic methods, such as PSO and the artificial bee colony (ABC) algorithm [16,17], STA is an individual-based metaheuristic method. The basic idea of STA is to regard a solution as a state, so that updating a solution can be viewed as a state transition.

When solving an optimization problem, the state transformation operators of STA are applied alternately, and a candidate solution set is generated by a sampling technique. The incumbent best solution is then updated by a selection criterion. Owing to the local and global search capabilities of its state transformation operators, STA has been successfully applied to many engineering problems, such as PID controller design [18,19], image segmentation [20], nonlinear system identification [21], feature selection [22,23], wind power forecasting [24,25] and other industrial applications [26,27,28,29].
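The sample-and-select cycle described above can be illustrated with a single operator. The Python sketch below assumes the expansion operator in its usual STA form, x_{k+1} = x_k + γ R_e x_k, where R_e is a random diagonal matrix with standard normal entries. The function names and the parameters `gamma` and `se` (the number of sampled candidates) are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def expansion_candidates(x, gamma, se, rng):
    """Sample `se` candidates with an expansion-style operator.

    Each candidate is x + gamma * R_e @ x, where R_e is diagonal with
    standard normal entries; the diagonal product is computed elementwise.
    """
    r = rng.standard_normal((se, x.size))
    return x + gamma * r * x


def sample_and_select(f, x_best, f_best, candidates):
    """Greedy selection: keep the incumbent unless a candidate is strictly better."""
    values = np.array([f(c) for c in candidates])
    i = int(np.argmin(values))
    if values[i] < f_best:
        return candidates[i].copy(), float(values[i])
    return x_best, f_best


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sphere = lambda v: float(np.sum(v ** 2))
    x = np.array([1.0, -2.0, 0.5])
    fx = sphere(x)
    for _ in range(100):
        x, fx = sample_and_select(sphere, x, fx, expansion_candidates(x, 1.0, 50, rng))
    print(fx)  # never larger than the starting value 5.25
```

Because the selection is greedy, the incumbent objective value can never increase from one iteration to the next.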

Recently, a modified STA, named the parameter optimized state transition algorithm (POSTA), was proposed in [30]. Based on a statistical study, POSTA provides a mechanism for selecting the optimal parameters of the expansion, rotation and axesion operators in STA. With appropriate operator parameters, POSTA achieves better solution accuracy and stronger global exploration than STA. However, POSTA does not sufficiently utilize historical information. As a result, on complex high-dimensional global optimization problems, POSTA still suffers from slow convergence and low solution accuracy on specific problems in the later stage of the search.

To speed up convergence throughout the search process of STA, Nelder–Mead (NM) simplex search [31,32] is introduced. NM simplex search is a classical direct search method with fast convergence. The NM simplex is a geometric figure consisting of n + 1 vertices in n-dimensional space, so it can store the information of n + 1 historical solutions of STA.

The operators of the NM method consist of a sequence of distinct geometric transformations (reflection, contraction, etc.), which can exploit the historical information in STA. For decades, combining NM simplex search with metaheuristic methods has been a promising way to speed up convergence [33,34]. However, few existing studies have used NM simplex search as a way to exploit historical information. In this paper, a hybrid method is proposed that applies NM simplex search to make use of the historical information in STA.

To further improve solution accuracy in the later stages of the search, a quadratic interpolation (QI) strategy is also introduced into the hybrid STA. QI is a classical and widely used local search method [35,36,37,38]. In QI, three known points are used as search agents to generate a quadratic curve, which is regarded as an approximation of the shape of the objective function. To make full use of the historical information in the later stages of the search, three historical solutions of STA are selected as the QI search agents, and the minimizer of the fitted curve gives a new analytical solution at low computational cost. To a certain extent, QI can thus efficiently strengthen the exploitation capacity of STA.
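For reference, the standard three-point quadratic interpolation formula can be sketched as follows. Given points a, b, c with objective values f_a, f_b, f_c, the vertex of the interpolating parabola is 0.5 · [(b² − c²)f_a + (c² − a²)f_b + (a² − b²)f_c] / [(b − c)f_a + (c − a)f_b + (a − b)f_c]. Applying it coordinate-wise to D-dimensional points, as is common in QI-based hybrids, is an assumption here, and the function name and the fallback for a near-zero denominator are illustrative choices.

```python
import numpy as np


def quadratic_interpolation(a, b, c, fa, fb, fc, eps=1e-12):
    """Return the vertex of the parabola through (a, fa), (b, fb), (c, fc).

    a, b, c may be scalars or D-dimensional arrays; the formula is applied
    coordinate-wise with the same three objective values. Where the
    denominator is (nearly) zero, the coordinate of the best point is kept.
    """
    a, b, c = (np.asarray(v, dtype=float) for v in (a, b, c))
    num = (b**2 - c**2) * fa + (c**2 - a**2) * fb + (a**2 - b**2) * fc
    den = (b - c) * fa + (c - a) * fb + (a - b) * fc
    best = (a, b, c)[int(np.argmin([fa, fb, fc]))]
    ok = np.abs(den) > eps
    safe_den = np.where(ok, den, 1.0)  # avoid dividing by zero where the fit is degenerate
    return np.where(ok, 0.5 * num / safe_den, best)
```

For example, with f(x) = (x − 2)² and the points 0, 1 and 3 (values 4, 1 and 1), the formula recovers the exact minimizer 2.0, since the objective is itself quadratic.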

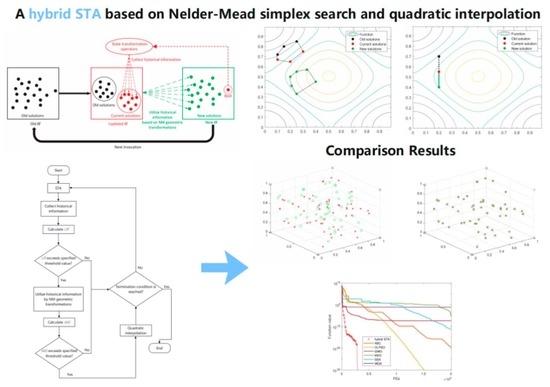

In summary, to make full use of the historical information in STA at different stages of the search, a hybrid STA based on NM simplex search and QI is proposed in this study. Compared with STA, the hybrid STA overcomes the shortcoming of slow convergence in the later stage of the search and performs well on both benchmark functions and practical problems. The main contributions of this paper can be summarized as follows:

(1) An efficient hybrid STA is proposed that successfully combines the merits of three distinct methods: the wide exploration capacity of STA, the fast convergence speed of NM simplex search and the strong exploitation capacity of QI.

(2) NM simplex search is adopted innovatively to utilize historical information for a faster convergence speed.

(3) The superiority and effectiveness of the hybrid STA are verified by comparing it with six competitive metaheuristic methods and with the STA family on 15 benchmark functions and the wireless sensor network localization problem.

The remainder of the article is organized as follows. In Section 2, the algorithmic background of STA, NM simplex search and QI is reviewed. In Section 3, the proposed hybrid STA based on NM simplex search and QI is presented in detail. In Section 4, the proposed method is compared with six competitive metaheuristic methods and the STA family on 15 benchmark functions to demonstrate its efficiency and stability. In Section 5, the hybrid STA is applied to the wireless sensor network localization problem to illustrate its ability to solve engineering problems.

3. Proposed Hybrid Method

3.1. NM-STA

In traditional STA, historical information is not utilized sufficiently. As shown in Figure 2a and Figure 3a, historical information is utilized only by the translation operator. However, this operator collects only the latest two historical solutions and applies only a linear search to exploit them. This inefficiency leads to the slow convergence of STA on specific problems.
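The limitation described above can be seen in a minimal sketch of the translation operator. It assumes the operator's usual form in the STA literature, x_{k+1} = x_k + β R_t (x_k − x_{k−1}) / ‖x_k − x_{k−1}‖ with R_t drawn uniformly from [0, 1], so every candidate lies on a single ray defined by the two most recent solutions. The function name and the parameters `beta` and `se` are illustrative.

```python
import numpy as np


def translation_candidates(x_prev, x_curr, beta, se, rng):
    """Sample `se` candidates along the ray from x_prev through x_curr.

    Only the latest two solutions are used, so the search is one-dimensional
    (a line search) no matter how large the problem dimension is.
    """
    d = x_curr - x_prev
    norm = np.linalg.norm(d)
    if norm == 0.0:  # no direction information available
        return np.tile(x_curr, (se, 1))
    r = rng.uniform(0.0, 1.0, size=(se, 1))
    return x_curr + beta * r * d / norm
```

In two dimensions, for instance, all candidates generated from x_prev = (0, 0) and x_curr = (1, 0) have a second coordinate of exactly zero.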

In past decades, combining NM simplex search with metaheuristic methods has been a popular way to speed up convergence. The general strategies for combining NM simplex search with metaheuristic methods can be summarized into two types: the staged pipelining type and the eagle strategy type [41]. In the first type, NM simplex search is applied to the superior individuals in the population [42]. In the second type, NM simplex search is applied in the exploitation stage as a local search method [33,43]. However, none of the existing combinations treats NM simplex search as a way to utilize the information of historical solutions.

In this section, a hybrid STA with NM simplex search (NM-STA) is proposed. In NM-STA, a historical information mechanism based on NM simplex search is proposed to make use of the historical information. In the proposed mechanism, the historical information is collected based on a collection strategy, stored in the NM simplex and then utilized by the NM geometric transformations. The detailed historical information mechanism in NM-STA is illustrated as follows.

3.1.1. The Historical Information Set

In NM-STA, a historical information set called H is used to store the historical solutions generated by the state transformation operators. H can store the coordinates of D + 1 vertices in D-dimensional space; therefore, H can be seen as an NM simplex. For a D-dimensional problem, H stores the information of D + 1 historical solutions.

The historical information contained in H is richer than that used by the translation operator. As shown in Figure 2 and Figure 3, H contains information about a set of old solutions and a set of current solutions, whereas the translation operator considers only one old solution and one current solution.

3.1.2. Utilization of the Historical Information

In NM-STA, the NM geometric transformations are used to exploit the historical information. As shown in Figure 1, the operators of the NM method consist of a sequence of distinct geometric transformations, which are more comprehensive than the linear transformation of the translation operator. As a result, NM-STA can utilize the historical information more comprehensively, as shown in Figure 2 and Figure 3.

The detailed steps for utilizing historical information in NM-STA are given in Algorithm 3. When Algorithm 3 is invoked, H is input as the initial simplex, and the NM geometric transformations are then applied to it. In the inner iterations, the NM geometric transformations are run D + 1 times.

Algorithm 3. Utilize historical information based on NM geometric transformations

- Input: updated historical information set H
- Output: new historical information set H and the current best solution
- 1: initial simplex ← H
- 2: for each inner iteration do
- 3: apply the NM geometric transformations
- 4: end for
- 5: H ← new simplex
- 6: current best solution ← best solution in H
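Algorithm 3 can be sketched in Python as follows. This is a minimal sketch, assuming the standard NM transformations (reflection, expansion, outside and inside contraction, shrinkage) with coefficients 1, 2, 0.5 and 0.5, and D + 1 inner iterations by default; the helper names `nm_step` and `utilize_history` are hypothetical and not the authors' implementation.

```python
import numpy as np


def nm_step(simplex, fvals, f, alpha=1.0, gamma=2.0, rho=0.5, sigma=0.5):
    """One Nelder-Mead iteration on a (D + 1) x D simplex; returns new arrays."""
    order = np.argsort(fvals)
    simplex, fvals = simplex[order], fvals[order]   # sorted copies, best first
    centroid = simplex[:-1].mean(axis=0)            # centroid of all but the worst vertex
    worst = simplex[-1].copy()
    xr = centroid + alpha * (centroid - worst)      # reflection
    fr = f(xr)
    if fvals[0] <= fr < fvals[-2]:
        simplex[-1], fvals[-1] = xr, fr
    elif fr < fvals[0]:
        xe = centroid + gamma * (xr - centroid)     # expansion
        fe = f(xe)
        simplex[-1], fvals[-1] = (xe, fe) if fe < fr else (xr, fr)
    else:
        if fr < fvals[-1]:
            xc = centroid + rho * (xr - centroid)     # outside contraction
            fc = f(xc)
            accepted = fc <= fr
        else:
            xc = centroid + rho * (worst - centroid)  # inside contraction
            fc = f(xc)
            accepted = fc < fvals[-1]
        if accepted:
            simplex[-1], fvals[-1] = xc, fc
        else:                                         # shrink towards the best vertex
            simplex[1:] = simplex[0] + sigma * (simplex[1:] - simplex[0])
            fvals[1:] = [f(v) for v in simplex[1:]]
    return simplex, fvals


def utilize_history(H, f, n_inner=None):
    """Sketch of Algorithm 3: run NM transformations starting from the simplex H."""
    simplex = np.array(H, dtype=float)               # initial simplex <- H (a copy)
    fvals = np.array([f(v) for v in simplex], dtype=float)
    if n_inner is None:
        n_inner = simplex.shape[0]                   # assumption: D + 1 inner iterations
    for _ in range(n_inner):
        simplex, fvals = nm_step(simplex, fvals, f)
    best = simplex[int(np.argmin(fvals))].copy()     # current best solution <- best in H
    return simplex, best
```

None of these transformations ever discards the best vertex, so the returned best solution is at least as good as the best vertex of the input H.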

Since each iteration of the NM geometric transformations produces approximately one new vertex, running them D + 1 times produces approximately a new simplex with D + 1 new vertices. These new vertices are then stored in H. As shown in Figure 3b, the new H contains D + 1 new solutions, and the best solution in H is sent to the state transformation operators as the current best solution.

3.1.3. Collection of Historical Information

An appropriate strategy for collecting historical information is proposed to provide more promising input for utilization. Overall, the historical information is collected according to the collection strategy, stored in H and utilized by the NM geometric transformations, as illustrated in Algorithm 3. Once an invocation of utilization terminates, the historical information needs to be re-collected before the next utilization. Therefore, a collection strategy for historical information is applied between invocations of utilization.

The collection strategy considers two types of solutions: old solutions and current solutions. As shown in Figure 3b, old solutions are the solutions generated in the last invocation of utilization. After the last utilization terminates, the state transformation operators begin to generate solutions based on the best solution returned by that utilization. The solutions generated by STA between invocations of utilization are regarded as current solutions.

This collection strategy can be seen as an extended version of that of the translation operator. As shown in Figure 2a and Figure 3a, the translation operator uses one old solution and one current solution as the historical information. In NM-STA, a set of old solutions and a set of current solutions are used as the historical information, as shown in Figure 2b and Figure 3b.

The collection strategy is implemented by updating H. As shown in Algorithm 4, each invocation uses one current solution to update H. In the implementation, Algorithm 4 is invoked iteratively so that H is updated with multiple current solutions; as shown in Figure 3b, H is updated by multiple current solutions before being utilized. To control the ratio between old solutions and current solutions in H, a parameter named the update rate (UR) is proposed, which is computed from the number of old solutions and the number of current solutions in H. UR is calculated after each update, and utilization is invoked only when UR exceeds a certain threshold value.

Algorithm 4. Collect historical information

- Input: old historical information set H, current solution
- Output: updated historical information set H
- 1: replace the worst solution in H with the current solution
- 2: return the updated H
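Algorithm 4 and the update-rate check can be sketched as a small container. The exact UR formula is not reproduced in this section, so the form used below, UR = n_current / (n_old + n_current), that is, the fraction of vertices of H that are current solutions, is an assumption, as is the class name `HistoricalInformationSet`.

```python
import numpy as np


class HistoricalInformationSet:
    """Hypothetical container for H: D + 1 vertices with old/current flags."""

    def __init__(self, vertices, fvals):
        self.vertices = np.array(vertices, dtype=float)
        self.fvals = np.array(fvals, dtype=float)
        # Right after a utilization, every vertex counts as an old solution.
        self.is_current = np.zeros(len(self.fvals), dtype=bool)

    def collect(self, x, fx):
        """Algorithm 4: replace the worst solution in H with the current solution x."""
        worst = int(np.argmax(self.fvals))
        self.vertices[worst] = x
        self.fvals[worst] = fx
        self.is_current[worst] = True

    def update_rate(self):
        """Assumed form: UR = n_current / (n_old + n_current)."""
        return float(self.is_current.sum()) / self.is_current.size

    def mark_all_old(self):
        """Call after Algorithm 3: the new simplex becomes the set of old solutions."""
        self.is_current[:] = False
```

Under this assumed form, with D = 2 (three vertices) and a threshold of 0.5, utilization would be triggered after the second collected solution, when UR reaches 2/3.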

3.2. Properties of NM-STA

In this section, the fast convergence of NM-STA is briefly illustrated by comparing NM-STA with STA. To illustrate the effect of utilizing historical information, one classical test function, Rosenbrock (F7), is used. NM-STA and STA are tested on this function in 2-D space, and each method is terminated only when a certain criterion is satisfied. After termination, the corresponding number of function evaluations (FEs) is recorded. For both STA and NM-STA,

is set to 50, and

is set to 10. In NM-STA, the threshold value for UR is set to 0.5, and the reflection, expansion, contraction and shrinkage coefficients of the NM simplex search are set to 1, 2, 0.5 and 0.5, respectively, which are the same as in the standard implementation of NM simplex search [31].

To demonstrate the faster convergence of NM-STA, the 2-D Rosenbrock test function is used. This unimodal function has its global minimum at (1, 1), which lies in a narrow, parabolic valley. The valley is easy to reach, but further convergence to the minimum is very difficult [44]. In the test on the Rosenbrock function, a method is terminated if the current solution

satisfies:

where

indicates the global minimum. If the specified accuracy

is met, the test is considered a ‘success’. In this section,

is set at

.
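The Rosenbrock function used in this test can be written in a few lines. The exact form of F7 in Table 2 is not reproduced here, so the generalized form below is an assumption; in 2-D it reduces to f(x1, x2) = 100(x2 − x1²)² + (1 − x1)².

```python
import numpy as np


def rosenbrock(x):
    """Generalized Rosenbrock: sum of 100*(x[i+1] - x[i]**2)**2 + (1 - x[i])**2."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (1.0 - x[:-1]) ** 2))
```

On the valley floor x2 = x1², the first term vanishes and the function reduces to (1 − x1)², so rosenbrock([0.5, 0.25]) is only 0.25; this small, slowly varying value along the valley is what makes the final approach to (1, 1) difficult. At the starting point (0, 0.75) used in Figure 4, the function value is 57.25.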

The statistical results given in Table 1 reveal that NM-STA reaches the same accuracy with far fewer FEs. Figure 4 portrays the solution paths of STA and NM-STA on the Rosenbrock function; both methods start from the same point (0, 0.75), and only the first 10 solutions are plotted for ease of observation. The solution path of NM-STA is clearly much more efficient.

By comparing the two paths, it can be seen that both methods quickly reach the valley. After that, STA searches aimlessly in the valley and moves slowly while NM-STA finds a promising direction and moves towards the optimum efficiently. This brief experiment demonstrates that the utilization of historical information can lead to a more promising search direction; therefore, NM-STA has a faster convergence speed than basic STA.

3.3. Combining NM-STA with QI

As discussed above, STA and NM simplex search are optimization methods with distinct characteristics. As a novel metaheuristic method, STA has a strong global exploration capacity; however, its convergence speed and exploitation capacity decrease in the later stages of the search. As a classical direct search method, NM simplex search converges quickly but is not stable in global search. However, in the neighborhood of the global minimum, neither STA nor NM is very powerful. In contrast, QI can approximate the global minimum by an analytical solution, but it is only effective when the search agents are close enough to the global minimum. Thus, QI is incorporated into the hybrid STA for the final stage of the search.

To invoke QI in the later stage of the search, the average accuracy of the solutions in the historical information set H, measured with respect to the global best solution, is calculated in each iteration.

If this average accuracy meets a certain threshold value, the solutions are considered to be in the neighborhood of the global optimum, and QI is then invoked to improve the solution accuracy. In the implementation of QI, two search agents are randomly chosen from the historical information set H, and the third search agent is the current best solution.
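The trigger and the choice of search agents can be sketched as follows. Because the average-accuracy formula is not reproduced in this section, the measure used here (the mean absolute gap between the objective values in H and the best value) and the reading of "meets the threshold" as "is at or below it" are assumptions; the function names are hypothetical.

```python
import numpy as np


def average_accuracy(H_fvals, f_best):
    """Hypothetical accuracy measure: mean gap between H's values and the best value."""
    return float(np.mean(np.abs(np.asarray(H_fvals, dtype=float) - f_best)))


def should_invoke_qi(H_fvals, f_best, threshold):
    """QI is invoked once the solutions in H are all close to the best value."""
    return average_accuracy(H_fvals, f_best) <= threshold


def select_qi_agents(H, H_fvals, x_best, f_best, rng):
    """Pick two distinct random vertices of H plus the current best solution."""
    i, j = rng.choice(len(H), size=2, replace=False)
    agents = (np.asarray(H[i]), np.asarray(H[j]), np.asarray(x_best))
    values = (float(H_fvals[i]), float(H_fvals[j]), float(f_best))
    return agents, values
```

The three agents and their values can then be passed to a three-point quadratic interpolation step, and the resulting point is kept only if it improves on the current best solution.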

3.4. The Hybrid STA (NMQI-STA)

To combine the merits of the three methods, NM simplex search and QI are applied to utilize the historical information of STA. Therefore, an enhanced hybrid STA based on NM simplex search and QI (NMQI-STA) is proposed. The hybrid STA’s step-wise procedure is summarized as follows:

- Step 1: Randomly produce solutions as the initial solutions.
- Step 2: Use the state transformation operators to produce current solutions. Every best solution found by the operators is placed into the historical information set H.
- Step 3: Calculate the value of UR. If UR meets the threshold value, go to Step 4; otherwise, go back to Step 2.
- Step 4: Utilize the historical information in H based on the NM geometric transformations to update H and the current best solution.
- Step 5: Calculate the average accuracy of H. If it meets the threshold value, go to Step 6; otherwise, go back to Step 2.
- Step 6: Utilize QI with the historical information in H.
- Step 7: Check whether the termination conditions are met. If not, go back to Step 2; otherwise, terminate the algorithm.
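The control flow of Steps 1 to 7 can be sketched as a loop with pluggable components. This is a minimal sketch under stated assumptions: the callables `sta_step`, `nm_utilize`, `qi_refine` and `accuracy` are hypothetical placeholders for the STA operators, Algorithm 3, QI and the average-accuracy measure; UR is assumed to be the fraction of current solutions in H; and the termination condition is simplified to a fixed iteration budget.

```python
import numpy as np


def nmqi_sta(f, H0, sta_step, nm_utilize, qi_refine, accuracy,
             ur_threshold=0.5, acc_threshold=1e-8, max_iters=500):
    """Control flow of Steps 1-7 with hypothetical pluggable components.

    H0:          (D + 1) x D array of random initial solutions (Step 1).
    sta_step:    (x_best, f_best) -> (x, fx), best solution from the STA operators.
    nm_utilize:  H -> new H, i.e. Algorithm 3 (NM geometric transformations).
    qi_refine:   (H, x_best) -> candidate point from quadratic interpolation.
    accuracy:    (fH, f_best) -> average accuracy of H (form assumed).
    """
    H = np.array(H0, dtype=float)
    fH = np.array([f(v) for v in H])
    is_current = np.zeros(len(H), dtype=bool)
    k = int(np.argmin(fH))
    x_best, f_best = H[k].copy(), float(fH[k])

    def offer(x, fx):
        """Greedy update of the incumbent best solution."""
        nonlocal x_best, f_best
        if fx < f_best:
            x_best, f_best = np.array(x, dtype=float), float(fx)

    for _ in range(max_iters):                   # Step 7: fixed iteration budget here
        x, fx = sta_step(x_best, f_best)         # Step 2: STA operators
        offer(x, fx)
        w = int(np.argmax(fH))                   # Algorithm 4: replace the worst vertex
        H[w], fH[w], is_current[w] = x, fx, True
        if is_current.mean() <= ur_threshold:    # Step 3: UR (assumed form)
            continue
        H = np.array(nm_utilize(H), dtype=float)  # Step 4: Algorithm 3
        fH = np.array([f(v) for v in H])
        is_current[:] = False                    # the new simplex now holds old solutions
        k = int(np.argmin(fH))
        offer(H[k], fH[k])
        if accuracy(fH, f_best) > acc_threshold:  # Step 5: average accuracy of H
            continue
        xq = qi_refine(H, x_best)                # Step 6: QI
        offer(xq, f(xq))
    return x_best, f_best
```

Keeping the components as arguments makes it easy to disable one of them, for example passing an identity function as `nm_utilize` roughly mirrors how QI-STA differs from NMQI-STA.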

As shown in Figure 5, the proposed method uses an eagle strategy [45] to maximize efficiency and stability. In the exploration stage, NM simplex search utilizes the historical information of STA to generate promising solutions, which continuously accelerates convergence. In the exploitation stage, QI utilizes the historical information to enhance the local search capacity, which improves the solution accuracy.

For comparison, a hybrid STA with QI (QI-STA) was also implemented. Its strategy is essentially the same as that of NMQI-STA; the only difference is that NM simplex search is disabled in QI-STA.

4. Experimental Results and Discussions

We tested the proposed method against a set of benchmark functions. All methods were implemented in a MATLAB R2018a environment with an Intel Core i7-7700, 3.60 GHz processor on a 64-bit Windows 10 operating system.

4.1. Benchmark Functions and Parameter Settings

The details of the benchmark functions are shown in Table 2, which lists the global optimal value and the range of the search space for each function. The benchmark functions in Table 2 are of two types: unimodal and multimodal. A function with a single minimum in the specified range is called unimodal; in contrast, multimodal functions have many local minima in which a method may be trapped.

All the parameter settings of other algorithms were the same as in the references. For all members in the STA family, was set to 50, was set to 10, the threshold value for was set to 0.5, and the threshold value for was set to . To guarantee fairness and avoid arbitrariness, each test in the following experiments was performed 30 times independently. In each independent test, a method was terminated if any of the following criteria were satisfied:

4.2. Comparison with Other Metaheuristic Methods

To examine the effectiveness of the hybrid STA, several population-based metaheuristic methods were used for comparison, including ABC [16,17], CLPSO [46,47], GWO [48,49], MVO [50,51], SSA [52,53] and WOA [54,55]. These metaheuristic methods have been applied in various fields and have achieved state-of-the-art performance on different problems. For each benchmark function, the number of decision variables D was set to 20, 30, 50 and 100. The computational results are listed in Table 3, Table 4, Table 5 and Table 6, and the average convergence curves of all test functions for a fixed value of D are given in Figure 6.

As shown in the experimental results, the hybrid STA outperformed ABC, CLPSO, GWO, MVO and SSA in most cases. Although WOA performed better on functions such as F3 and F13, the hybrid STA performed better than WOA on the other 10 benchmark functions. WOA failed to find acceptable solutions stably on F2, F7, F8, F11 and F14. In contrast, the hybrid STA showed superior convergence speed and solution accuracy on F8 and F11 and was more robust on multimodal functions such as F2, F7 and F14. Therefore, the proposed method was more effective and robust than these competitive metaheuristic methods.

4.3. Comparison among the STA Family

To comprehensively verify the effect of NM simplex search and QI in the proposed method, the STA family (STA, NM-STA, QI-STA and the hybrid STA) was tested on the benchmark functions listed in Table 2. For each benchmark function, the number of decision variables D was set to 30. The statistical results are shown in Table 7, and the average convergence curves are given in Figure 7.

As shown in the experimental results, both the convergence speed and the accuracy of the hybrid STA were better than those of STA. The average FEs of the hybrid STA were far fewer than those of STA on F1, F8, F9 and F11, which means that the hybrid STA converged much faster than STA. Compared with STA, the convergence accuracy was significantly improved on F1, F2, F5, F7, F8 and F10.

From the above discussion, when STA is enhanced with both NM simplex search and QI, the merits of the three distinct methods are combined. The convergence curves and the statistical results show that hybrid STA combines the merits of all three: the powerful exploration capacity of STA, the fast convergence speed of NM simplex search and the deep exploitation capacity of QI.

4.4. Effectiveness Analysis

To evaluate the effectiveness of the hybrid STA, the overall effectiveness (OE) [56], a useful metric, is computed in this section. The OE of each algorithm is calculated using Equation (20), $OE = \frac{N - L}{N} \times 100\%$, where N denotes the number of test functions and L denotes the number of test functions on which the algorithm loses. The results are reported in Table 8.
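As a worked example, the OE computation can be sketched in a few lines, assuming Equation (20) has the standard form OE = (N − L)/N × 100% used in the literature; the function name is illustrative.

```python
def overall_effectiveness(n_functions, n_losses):
    """OE in percent: 100 * (N - L) / N for N test functions and L losses."""
    if n_functions <= 0 or not 0 <= n_losses <= n_functions:
        raise ValueError("need N > 0 and 0 <= L <= N")
    return 100.0 * (n_functions - n_losses) / n_functions


print(overall_effectiveness(15, 3))  # 80.0
```

With the 15 benchmark functions used here, an algorithm that loses on 3 of them would have an OE of 80%.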

4.5. Non-Parametric Statistical Test Analysis

Table 3, Table 4, Table 5 and Table 6 present the raw performance results of these algorithms. To compare the effectiveness of the hybrid STA statistically, a powerful and sensitive non-parametric test, the Wilcoxon signed-rank test, is performed. This test, in which the p-value is computed at a significance level α = 0.05, can reveal significant differences between pairs of algorithms. The results of the test for dimensions of 20, 30, 50 and 100 are listed in Table 9, Table 10, Table 11 and Table 12. Where the p-value is below α, the hybrid STA performed significantly better than the compared algorithm.

5. Wireless Sensor Network Application

A wireless sensor network (WSN) is a self-organizing network composed of a large number of sensor nodes deployed in a predetermined area [57]. A WSN can monitor the deployment area and deliver useful information in real time. Sensor node positioning is the basis of the entire WSN. As WSNs are widely used in medicine, the military, environmental science, space exploration and other fields, the requirements on positioning accuracy are continually increasing [58,59,60,61,62].

Many researchers have combined metaheuristic methods with node-positioning algorithms. Wang [63] proposed a PSO clustering algorithm based on mobile aggregation for WSNs, which used PSO to perform virtual clustering in the routing process; this improved the positioning accuracy and reduced the transmission delay. Sharma [64] proposed a genetic algorithm based on an improved distance vector hop (DV-Hop) method and applied it to the WSN positioning problem to improve positioning accuracy. Cui [65] obtained the estimated locations of unknown nodes using a differential evolution algorithm, which effectively reduced the range error and achieved high positioning accuracy.

5.1. Wireless Sensor Network Localization Problem

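There are m anchor nodes $a_k \in \mathbb{R}^d$, $k = 1, \ldots, m$ (d represents the dimension, which is two or three), and there are n unknown nodes $x_i \in \mathbb{R}^d$, $i = 1, \ldots, n$. The Euclidean distance between unknown nodes $x_i$ and $x_j$ is denoted $d_{ij} = \|x_i - x_j\|$, and the Euclidean distance between unknown node $x_i$ and anchor node $a_k$ is denoted $\bar{d}_{ik} = \|x_i - a_k\|$. Distances are measured only between nodes within communication range, i.e., for pairs in $N_x = \{(i, j) : d_{ij} \le r\}$ and $N_a = \{(i, k) : \bar{d}_{ik} \le r\}$, where r represents the sensor communication distance.

The wireless sensor network localization problem (SNL) is to estimate the positions of the n unknown nodes $x_1, \ldots, x_n$, which should satisfy:

$$\|x_i - x_j\| = d_{ij}, \ (i, j) \in N_x; \qquad \|x_i - a_k\| = \bar{d}_{ik}, \ (i, k) \in N_a.$$

Because real measurements contain noise, there is no guarantee that these equations can be satisfied exactly. To make the model universal, the SNL is therefore reformulated as a non-convex least-squares optimization problem that minimizes the sum of squared residuals of these distance equations.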
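The least-squares reformulation can be sketched as an objective function over the flattened node coordinates. The exact objective is not reproduced in this section, so the form below, which sums (‖x_i − x_j‖ − d_ij)² over measured unknown-unknown pairs and (‖x_i − a_k‖ − d̄_ik)² over measured unknown-anchor pairs, is an assumption; the function name and the edge-list data layout are illustrative.

```python
import numpy as np


def snl_objective(flat_x, n, d, anchors, node_edges, anchor_edges):
    """Least-squares SNL objective over the measured distances.

    flat_x:       length n*d vector of unknown-node coordinates (decision variables).
    anchors:      (m, d) array of anchor coordinates.
    node_edges:   list of (i, j, d_ij) for unknown-unknown pairs within range.
    anchor_edges: list of (i, k, dbar_ik) for unknown-anchor pairs within range.
    """
    X = np.asarray(flat_x, dtype=float).reshape(n, d)
    total = 0.0
    for i, j, dij in node_edges:
        total += (np.linalg.norm(X[i] - X[j]) - dij) ** 2
    for i, k, dik in anchor_edges:
        total += (np.linalg.norm(X[i] - anchors[k]) - dik) ** 2
    return float(total)
```

Flattening the coordinates into one vector of length n·d lets any of the compared metaheuristics treat the SNL as an ordinary continuous minimization problem; with noise-free distances, the true node positions give an objective value of zero.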

5.2. Experimental Results and Analysis

To further test the performance of the hybrid STA, it was applied to the SNL problem and compared with other optimization algorithms, namely ABC, CLPSO, GWO, WOA, MVO and SSA. All the parameter settings of the other algorithms were the same as in the references.

In the two-dimensional SNL problem, the number of anchor nodes was set to 4 and the number of unknown nodes to 50. The coordinates of the anchor nodes were (0,0), (0,1), (1,0) and (1,1), and the radio range was 0.3. In the three-dimensional SNL problem, the number of anchor nodes was set to 8 and the number of unknown nodes to 50. The coordinates of the anchor nodes were (0,0,0), (0,0,1), (0,1,0), (1,0,0), (1,1,0), (1,0,1), (0,1,1) and (1,1,1), and the radio range was 0.35. In Figure 8, the true locations of the unknown nodes are marked in red, the locations calculated by the algorithms in green and the locations of the anchor nodes in blue.

Figure 8a,b show the localization results of WOA on the two-dimensional and three-dimensional SNL problems, and Figure 8c,d show those of the hybrid STA. With the hybrid STA, the estimated locations almost coincided with the true locations, whereas with WOA, the error between the estimated and true locations remained large. On the SNL problem, the hybrid STA therefore performed much better than WOA.
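Test instances with the settings above can be generated as follows. This is a sketch under stated assumptions: the unknown nodes are drawn uniformly in the unit square or cube, the distance measurements are noise-free, and the function name `make_snl_instance` is hypothetical; only the anchor coordinates, node counts and radio ranges come from the text.

```python
import itertools

import numpy as np


def make_snl_instance(anchors, n_unknown, radio_range, rng):
    """Build a random SNL instance with noise-free distance measurements.

    Unknown nodes are drawn uniformly in the unit square/cube (an assumption);
    a distance is recorded only if it does not exceed radio_range.
    """
    anchors = np.asarray(anchors, dtype=float)
    d = anchors.shape[1]
    X = rng.uniform(0.0, 1.0, size=(n_unknown, d))
    node_edges = []
    for i, j in itertools.combinations(range(n_unknown), 2):
        dist = float(np.linalg.norm(X[i] - X[j]))
        if dist <= radio_range:
            node_edges.append((i, j, dist))
    anchor_edges = []
    for i in range(n_unknown):
        for k in range(len(anchors)):
            dist = float(np.linalg.norm(X[i] - anchors[k]))
            if dist <= radio_range:
                anchor_edges.append((i, k, dist))
    return X, node_edges, anchor_edges


rng = np.random.default_rng(0)
anchors_2d = [(0, 0), (0, 1), (1, 0), (1, 1)]                 # 2-D setting, radio range 0.3
X2, node_edges_2d, anchor_edges_2d = make_snl_instance(anchors_2d, 50, 0.3, rng)
anchors_3d = list(itertools.product([0, 1], repeat=3))        # 8 cube corners, radio range 0.35
X3, node_edges_3d, anchor_edges_3d = make_snl_instance(anchors_3d, 50, 0.35, rng)
```

The returned edge lists match the layout expected by a least-squares objective that loops over measured pairs, and the true positions X can be used to measure the localization error of each algorithm.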

Figure 9a,b show the convergence curves of the hybrid STA and the other methods on the 2-D and 3-D SNL problems, respectively. Both the optimization accuracy and the convergence speed of the hybrid STA were clearly better than those of the other metaheuristic methods.

6. Conclusions

In this paper, we proposed a hybrid STA based on Nelder–Mead simplex search and QI. In the hybrid STA, both NM simplex search and QI were applied to utilize the historical information. Specifically, in the exploration stage, NM simplex search utilized the historical information of STA to generate promising solutions. In the exploitation stage, QI utilized the historical information to enhance the local search capacity.

The proposed method uses an eagle strategy to maximize efficiency and stability, and it enjoys the merits of three methods: the global exploration capacity of STA, the fast convergence speed of NM simplex search and the strong exploitation ability of QI. The superiority and effectiveness of the proposed method were demonstrated on 15 benchmark functions and the SNL problem through comparison with six other well-known metaheuristic methods.

In the proposed hybrid method, an eagle strategy was used to control the selection of vertices in the NM stage. However, the selection of vertices requires further study. In our future work, the quantitative properties of the vertices will be considered in the collection of historical information. It would also be interesting to apply the proposed historical information mechanism to other metaheuristic methods. In addition, other approaches to utilizing the historical information will be considered.