Processing at the Edge: A Case Study with an Ultrasound Sensor-Based Embedded Smart Device

Abstract

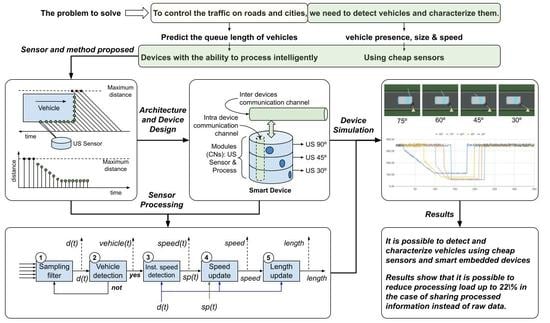

1. Introduction

2. Background and Related Work

2.1. Placing Elements at Different Levels

2.2. Control Node Characterisation at the Edge Level

3. The Proposed Solution

3.1. Vehicle Detection and Characterisation Method

3.2. Vehicle Detection and Characterisation Process

4. Experiments and Results

4.1. Simulations

4.2. Prototyping

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CN | control node |
| CNN | convolutional neural network |
| I2C | inter-integrated circuit |
| IoT | Internet of Things |
| MLP | multi-layer perceptron |
| US | ultrasonic |
| WSN | wireless sensor networks |

| | 90° | 75° | 60° | 45° | 30° |
|---|---|---|---|---|---|
| Samples front | - | 12 | 25 | 44 | 76 |
| Samples side | 84 | 83 | 83 | 82 | 81 |
| Calculated Speed | - | 2.06 m/s | 2.16 m/s | 2.20 m/s | 2.22 m/s |
| Relative Error | - | 8.44% | 4.00% | 2.22% | 1.33% |
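
The relative errors in the table above can be reproduced with a short calculation. The sketch below is illustrative only: the reference speed of 2.25 m/s is an assumption inferred from the table (it is the value that reproduces all four reported errors), and the names `relative_error`, `REFERENCE_SPEED` and `calculated_speed` are hypothetical rather than taken from the article.

```python
def relative_error(measured: float, reference: float) -> float:
    """Return the relative error of `measured` against `reference`, in percent."""
    return abs(measured - reference) / reference * 100.0


# Assumption: 2.25 m/s is inferred from the table, not stated in this section.
REFERENCE_SPEED = 2.25  # m/s

# Calculated speeds from the table, keyed by front-sensor angle in degrees.
calculated_speed = {75: 2.06, 60: 2.16, 45: 2.20, 30: 2.22}

errors = {
    angle: round(relative_error(speed, REFERENCE_SPEED), 2)
    for angle, speed in calculated_speed.items()
}

for angle, err in errors.items():
    print(f"{angle} deg: {err:.2f}%")
```

Under this assumption the computed errors match the table row for row, which suggests the error shrinks as the front sensor angle decreases and more front samples become available.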

| | CN30 | CN45 | CN90 |
|---|---|---|---|
| tControl (AVG) | 32.34 ms | 28.97 ms | 235.73 ms |
| tControl (STD) | 3.85 ms | 2.15 ms | 18.93 ms |
| tResponse (AVG) | 51.38 ms | 31.38 ms | 276.54 ms |
| tResponse (STD) | 3.18 ms | 2.18 ms | 20.49 ms |
| tLatency (AVG) | 1340.81 ms | 1362.25 ms | - |
| tLatency (STD) | 90.90 ms | 87.34 ms | - |
| Speed (AVG) | - | - | 10.12 m/s |
| Speed (STD) | - | - | 1.17 m/s |
| Speed (Rel.E) | - | - | 5.79% |
| Length (AVG) | - | - | 3.76 m |
| Length (STD) | - | - | 0.15 m |
| Length (Rel.E) | - | - | 1.61% |

| | CN30 | CN45 | CN90 |
|---|---|---|---|
| tControl (AVG) | 45.51 ms | 49.65 ms | 113.04 ms |
| tControl (STD) | 5.34 ms | 1.44 ms | 1.06 ms |
| tResponse (AVG) | 105.16 ms | 56.67 ms | 132.22 ms |
| tResponse (STD) | 1.77 ms | 4.77 ms | 12.06 ms |
| tLatency (AVG) | 1339.41 ms | 1362.65 ms | - |
| tLatency (STD) | 92.30 ms | 85.94 ms | - |
| Speed (AVG) | 10.55 m/s | 12.92 m/s | 10.08 m/s |
| Speed (STD) | 1.01 m/s | 1.18 m/s | 0.97 m/s |
| Speed (Rel.E) | 5.79% | 17.77% | 4.12% |
| Length (AVG) | - | - | 3.71 m |
| Length (STD) | - | - | 0.09 m |
| Length (Rel.E) | - | - | 0.78% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Poza-Lujan, J.-L.; Uribe-Chavert, P.; Sáenz-Peñafiel, J.-J.; Posadas-Yagüe, J.-L. Processing at the Edge: A Case Study with an Ultrasound Sensor-Based Embedded Smart Device. Electronics 2022, 11, 550. https://doi.org/10.3390/electronics11040550
