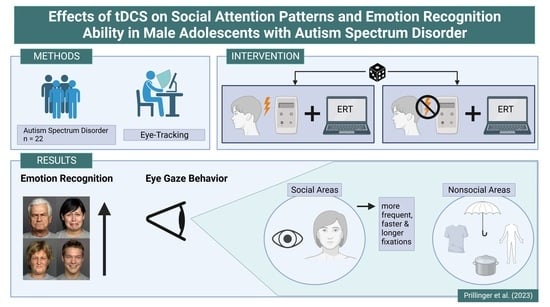

Effects of Transcranial Direct Current Stimulation on Social Attention Patterns and Emotion Recognition Ability in Male Adolescents with Autism Spectrum Disorder

Abstract

1. Introduction

2. Materials and Methods

2.1. Design and Participants

2.2. Intervention

2.3. Eye Tracking Stimuli and Procedure

2.4. Eye-Tracking Apparatus

2.5. Classification of Fixations and Areas of Interest

2.6. Statistical Analysis

3. Results

3.1. Morphing Task

3.2. Face Emotion Task

3.3. Social Scenes Task

3.4. MASC

4. Discussion

4.1. Emotion Recognition Performance

4.2. Fixation Rates, Number of Fixations, and Influence on Emotion Recognition Performance

4.3. Time to First Fixation

4.4. Fixation Duration

4.5. General Discussion, Strengths, and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Measure | Active Pre Mean | Active Pre SD | Active Post Mean | Active Post SD | Sham Pre Mean | Sham Pre SD | Sham Post Mean | Sham Post SD |
|---|---|---|---|---|---|---|---|---|
| Age | 14.00 | 1.90 | - | - | 14.27 | 1.90 | - | - |
| ERT duration | 19.17 | 5.05 | 14.43 | 2.63 | 19.06 | 4.38 | 13.85 | 2.05 |
| MASC duration | 35.48 | 4.44 | 31.3 | 4.53 | 36.96 | 5.31 | 34.12 | 9.54 |
| Morphing score | 30.18 | 6.40 | 34.91 | 5.34 | 32.82 | 2.52 | 36.64 | 3.38 |
| Social Scenes score | 6.00 | 2.37 | 7.09 | 1.22 | 6.45 | 0.93 | 7.27 | 0.65 |
| Face Emotion score | 9.55 | 3.27 | 13.45 | 3.14 | 12.00 | 2.14 | 14.64 | 1.12 |
| MASC-R score | 21.91 | 7.73 | 24.73 | 8.05 | 23.91 | 6.41 | 27.27 | 5.66 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Prillinger, K.; Radev, S.T.; Amador de Lara, G.; Werneck-Rohrer, S.; Plener, P.L.; Poustka, L.; Konicar, L. Effects of Transcranial Direct Current Stimulation on Social Attention Patterns and Emotion Recognition Ability in Male Adolescents with Autism Spectrum Disorder. J. Clin. Med. 2023, 12, 5570. https://doi.org/10.3390/jcm12175570