1. Introduction

Stroke is one of the leading causes of death in Malaysia, and more than 40,000 stroke survivors are currently managing their health [1]. Globally, stroke caused 6.2 million deaths in 2017, with the highest stroke mortality rates reported in Eastern Europe, Africa, and Central Asia [2].

Stroke is caused by an insufficient supply of oxygen to the brain, which damages brain cells. This in turn affects brain functions, leaving stroke survivors with difficulties in daily living, such as mobility, communication, and expressing their thoughts. Stroke patients also often suffer from emotional and behavioral changes due to their dissatisfaction with their current condition.

Past studies have investigated emotional changes in stroke patients as an influence of their physiological condition [3,4,5]. These studies revealed that emotions and thoughts act as interactive reactions and are intimately related to health and physiological problems. This relationship increases the risk of a second or recurrent stroke in patients with persistent depression. Therefore, emotion recognition in stroke patients is helpful in diagnosing their psychological and physiological conditions.

The assessment of the emotional conditions and mood of stroke patients is required during rehabilitation to identify the presence of mental health problems such as persistent depression and mood disorders. This also assists in identifying the severity of associated functional impairment of the patients.

Conventionally, emotion assessment is performed through interviews with patients [3,6], observation of patients' behaviors [6], and standardized measures such as the Hospital Anxiety and Depression Scale (HADS) [6] and the Beck Depression Inventory (BDI) [7]. These standardized measures determine the emotional state of the patient by scoring. However, patients can deliberately misreport on these conventional assessments, in which case the acquired information is inaccurate. Consequently, researchers have explored other approaches to understanding the emotional states of patients. Recent studies have shown that emotion assessment can be performed using physiological signals [8], such as skin conductance (SC) [9], the respiration signal [10], the electrocardiogram (ECG) [11], and the electroencephalogram (EEG) [12].

This paper is organized as follows. Section 1 discusses the problem and the context of the study of emotion assessment in stroke patients. Section 2 reviews the literature on EEG analysis and nonlinear features, including the use of bispectrum features in EEG analysis. Section 3 describes the materials and methods used in this study, including the EEG data, preprocessing, feature extraction, statistical analysis, and classification methods. The next section presents the results and their discussion. Finally, the paper is briefly summarized.

2. Related Works

EEG is a brain signal measured by placing electrode sensors along the scalp to record the electrical activity of the brain near the scalp surface [13]. EEG signals can be used for diagnostic purposes, and abnormalities detected in them may indicate a brain disorder.

Previous research has reported that the brain is highly involved in emotional activities [14]. As the center of emotions, the brain is responsible for responding when it perceives a stimulus. Hence, brain signals can provide emotional information about a stroke patient. Building on this concept, most recent studies of emotion assessment in stroke patients have used brain signals. Adamaszek et al. studied emotional impairment using the event-related potentials (ERP) of a stroke patient [15]; Doruk et al. studied emotional impairment in stroke patients by comparing the emotional score of the Stroke Impact Scale (SIS) with EEG features, namely EEG power asymmetry and coherence [16]; and Bong et al. assessed the emotions of stroke patients using EEG signals in the time-frequency domain [12]. In this study, an EEG-based emotion recognition algorithm is proposed to study the emotional states of stroke patients.

According to previous studies, stroke patients suffer from emotional impairment, and consequently their emotional experiences are less pronounced than those of normal people [15,17,18,19]. In another related study, Bong et al. [12] found that left brain damage (LBD) stroke patients were most dominant in perceiving sadness, whereas right brain damage (RBD) stroke patients were most dominant in perceiving anger. In addition, the dominant frequency band in their study was the beta band, using the wavelet packet transform (WPT) with the Hurst exponent feature. Their highest accuracy was 76.04%, for the happiness emotion in the normal control (NC) group, using features from the beta to gamma bands.

Previous emotion classification studies by Yuvaraj et al. [20] have also shown that, among single frequency bands, features extracted from the beta and gamma bands gave higher accuracy, and that the highest accuracy was obtained when all frequency bands from delta to gamma were combined. The authors obtained an average accuracy of 66.80% in NC using the combination of the five frequency bands, compared with 64.73% with the beta band and 65.80% with the gamma band of NC. These results were obtained after applying a feature selection technique.

The brain is a chaotic dynamical system [21,22,23] in which EEG signals are generated by nonlinear deterministic processes. This view is also referred to as deterministic chaos theory, with nonlinear coupling interactions between neuronal populations [24,25]. Compared with linear analysis, nonlinear analysis methods are therefore expected to give more meaningful information about changes in the emotional state of stroke patients. Over the last few years, a number of studies have analyzed EEG signals using nonlinear methods [25,26,27]. For example, a recurrence measure was applied to study seizure EEG signals [25], and Zappasodi et al. used the fractal dimension (FD) to study neuronal impairment in stroke patients [26]. In addition, Acharya et al. studied sleep stage detection in EEG signals using different nonlinear dynamic methods, namely higher order spectra (HOS) features and recurrence quantification analysis (RQA) features [28]. In their study, HOS was used to extract significant information that helped in the diagnosis of neurological disorders.

HOS has been reported to be an effective method for analyzing EEG signals, and HOS features are among the most commonly used nonlinear features. HOS are the frequency-domain (spectral) representations of the higher order cumulants of a random process, and they include only cumulants of third order and above. Because the cumulants of order three and above of a Gaussian process are zero, HOS suppress additive Gaussian noise and provide a high signal-to-noise ratio (SNR) [29,30]. HOS can extract information about deviations from Gaussianity and preserve the phase information of signals; thus, HOS can estimate the phase of non-Gaussian parametric signals. In addition, HOS can detect and characterize nonlinearities in signals. In contrast, the second order measure, the power spectrum, can only reveal linear and Gaussian information about signals.
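The Gaussian-noise property can be checked numerically. The sketch below is a minimal illustration, not part of the authors' pipeline: it estimates the zero-lag third order cumulant $c_3(0, 0)$ of Gaussian noise and of exponentially distributed (skewed) noise. The function name `third_order_cumulant` and the test signals are assumptions made for this example.

```python
import numpy as np

def third_order_cumulant(x, tau1, tau2):
    """Sample estimate of c3(tau1, tau2) = E[x(n) x(n+tau1) x(n+tau2)] for non-negative lags.

    The mean is removed first: for a zero-mean process the third order cumulant
    equals the third order moment."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = len(x) - max(tau1, tau2)          # number of valid triple products
    return np.mean(x[:n] * x[tau1:tau1 + n] * x[tau2:tau2 + n])

rng = np.random.default_rng(0)
gaussian = rng.standard_normal(200_000)      # Gaussian noise
skewed = rng.exponential(1.0, 200_000)       # non-Gaussian, skewed noise

# c3(0, 0) is the third central moment: 0 for a Gaussian, 2 for an exponential(1) variable
print(third_order_cumulant(gaussian, 0, 0))  # close to 0
print(third_order_cumulant(skewed, 0, 0))    # close to 2
```

Only the non-Gaussian signal leaves a clearly nonzero third order statistic, which is why Gaussian components tend to vanish from the bispectrum.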

The third order HOS is the bispectrum, which preserves the phase information of EEG signals and is the simplest HOS to compute [31]. The bispectrum has been used in EEG-based emotion studies. Yuvaraj et al. applied the bispectrum to study the differences between Parkinson's disease patients and normal people in six discrete emotions (happiness, sadness, fear, anger, surprise, and disgust) [27,32], and Hosseini applied the bispectrum to classify two emotional states (calm and negative) of normal subjects [33].

However, the emotional states of stroke patients have yet to be analyzed using bispectrum features. Hence, in this work, bispectrum features are used to classify the EEG signals of stroke patients in different emotional states.

The bispectrum can detect quadratic phase coupling (QPC), a form of nonlinear interaction in EEG signals. QPC occurs when the phase at the sum frequency equals the sum of the phases at the two frequency variables, $\phi(f_1 + f_2) = \phi(f_1) + \phi(f_2)$ [34,35]. The bispectrum can be estimated by two approaches: the direct and the indirect method. For a stationary, discrete-time random process $x(n)$, the direct method estimates the bispectrum from the 1-D Fourier transform of the discrete series:

$$B(f_1, f_2) = E\left[X(f_1)\, X(f_2)\, X^{*}(f_1 + f_2)\right]$$

where $B(f_1, f_2)$ is the complex-valued bispectrum and $|B(f_1, f_2)|$ its magnitude, $E[\cdot]$ denotes the statistical expectation operation, $X(f)$ is the Fourier transform (1-D FFT) of the time series $x(n)$, and $^{*}$ denotes the complex conjugate.
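As an illustration of the direct method and of QPC detection, the following sketch approximates the expectation $E[\cdot]$ by averaging over non-overlapping signal segments. The segment length `nfft`, the optional Hann window, the function name, and the synthetic test signal are assumptions of this example, not parameters taken from this study. FFT bin $k$ corresponds to the normalized frequency $f = 2k/\text{nfft}$, with 1 as the Nyquist frequency.

```python
import numpy as np

def direct_bispectrum(x, nfft=64, window=True):
    """Direct (FFT-based) estimate of B(k1, k2) = E[X(k1) X(k2) X*(k1 + k2)].

    The expectation is approximated by averaging over non-overlapping segments of
    length nfft. Returns a complex nfft x nfft array indexed by FFT bins (k1, k2)."""
    x = np.asarray(x, dtype=float)
    n_seg = len(x) // nfft
    if n_seg == 0:
        raise ValueError("signal shorter than one segment")
    segs = x[: n_seg * nfft].reshape(n_seg, nfft)
    segs = segs - segs.mean(axis=1, keepdims=True)   # zero-mean each segment
    if window:
        segs = segs * np.hanning(nfft)
    X = np.fft.fft(segs, axis=1)                      # shape (n_seg, nfft)
    k = np.arange(nfft)
    k_sum = (k[:, None] + k[None, :]) % nfft          # bin index of k1 + k2 (periodic)
    B = np.zeros((nfft, nfft), dtype=complex)
    for Xs in X:
        B += Xs[:, None] * Xs[None, :] * np.conj(Xs[k_sum])
    return B / n_seg

# Synthetic test: components at bins 8, 12 and 8 + 12 = 20, with random phases per segment
rng = np.random.default_rng(1)
nfft, n_seg = 64, 200
n = np.arange(nfft)

def make_signal(coupled):
    segments = []
    for _ in range(n_seg):
        p1, p2, p3 = rng.uniform(0, 2 * np.pi, 3)
        p_sum = p1 + p2 if coupled else p3            # QPC: phase at bin 20 equals p1 + p2
        seg = (np.cos(2 * np.pi * 8 * n / nfft + p1)
               + np.cos(2 * np.pi * 12 * n / nfft + p2)
               + np.cos(2 * np.pi * 20 * n / nfft + p_sum)
               + 0.1 * rng.standard_normal(nfft))
        segments.append(seg)
    return np.concatenate(segments)

B_qpc = direct_bispectrum(make_signal(True), nfft, window=False)
B_ind = direct_bispectrum(make_signal(False), nfft, window=False)
print(abs(B_qpc[8, 12]), abs(B_ind[8, 12]))   # a large peak appears only with phase coupling
```

With coupling, the phase of $X(8)X(12)X^{*}(20)$ is constant across segments, so the products add coherently and $|B(8, 12)|$ approaches $(\text{nfft}/2)^3$; with independent phases, they average toward zero. The window is disabled in the demo only because the test tones fall exactly on FFT bins.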

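For the indirect method, the bispectrum is estimated by first estimating the third order cumulants of the random process $x(n)$. The $n$th-order moment is equal to the expectation of the process multiplied by $(n - 1)$ lagged versions of itself. Therefore, the third order moment, $m_3^x(\tau_1, \tau_2)$, is:

$$m_3^x(\tau_1, \tau_2) = E\left[x(n)\, x(n + \tau_1)\, x(n + \tau_2)\right]$$

where $E[\cdot]$ denotes the statistical expectation operation and $\tau_1$ and $\tau_2$ are the lags of the moment sequence.

For a zero-mean process, the third order cumulant sequence, $c_3^x(\tau_1, \tau_2)$, is identical to its third order moment sequence. It can therefore be calculated by taking the expectation of the process multiplied by two lagged versions of itself:

$$c_3^x(\tau_1, \tau_2) = E\left[x(n)\, x(n + \tau_1)\, x(n + \tau_2)\right]$$

The bispectrum, $B(f_1, f_2)$, is the 2-D Fourier transform of the third order cumulant function:

$$B(f_1, f_2) = \sum_{\tau_1 = -\infty}^{\infty} \sum_{\tau_2 = -\infty}^{\infty} c_3^x(\tau_1, \tau_2)\, e^{-j\pi (f_1 \tau_1 + f_2 \tau_2)}$$

for $|f_1| \le 1$, $|f_2| \le 1$, and $|f_1 + f_2| \le 1$, where frequencies are normalized so that 1 corresponds to the Nyquist frequency.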
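The indirect method can be sketched in the same way. The code below assumes a biased cumulant estimator (normalized by the signal length $N$), a maximum lag `max_lag`, and no lag window, although practical estimators often apply one. It computes $c_3^x(\tau_1, \tau_2)$ over a square lag grid and takes its 2-D FFT, so FFT bin $k$ corresponds to $f = 2k/\text{nfft}$ in the Nyquist-normalized convention. The function names are hypothetical.

```python
import numpy as np

def third_order_cumulants(x, max_lag):
    """Biased estimate c3(t1, t2) = (1/N) * sum_n x(n) x(n+t1) x(n+t2)
    for -max_lag <= t1, t2 <= max_lag (x is made zero-mean first)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    N = len(x)
    lags = np.arange(-max_lag, max_lag + 1)
    c3 = np.zeros((lags.size, lags.size))
    for i, t1 in enumerate(lags):
        for j, t2 in enumerate(lags):
            # valid n such that n, n + t1 and n + t2 all lie in [0, N)
            lo = max(0, -t1, -t2)
            hi = min(N, N - t1, N - t2)
            n = np.arange(lo, hi)
            c3[i, j] = np.sum(x[n] * x[n + t1] * x[n + t2]) / N
    return lags, c3

def indirect_bispectrum(x, max_lag=16, nfft=64):
    """2-D FFT of the third order cumulant grid: B[k1, k2] = sum c3(t1, t2) exp(-2j*pi*(k1*t1 + k2*t2)/nfft)."""
    if nfft <= 2 * max_lag:
        raise ValueError("nfft must exceed 2 * max_lag to avoid wrap-around of lags")
    lags, c3 = third_order_cumulants(x, max_lag)
    grid = np.zeros((nfft, nfft))
    idx = lags % nfft                  # negative lags wrap to the end of the FFT grid
    grid[np.ix_(idx, idx)] = c3
    return np.fft.fft2(grid)

rng = np.random.default_rng(0)
x = rng.exponential(1.0, 4096)         # skewed, non-Gaussian test signal
lags, c3 = third_order_cumulants(x, 16)
B = indirect_bispectrum(x, max_lag=16, nfft=64)
print(c3[16, 16])                      # c3(0, 0), the third central moment of x
print(B.shape)                         # (64, 64)
```

Because the cumulant grid is real and symmetric in $(\tau_1, \tau_2)$, the result satisfies $B(f_1, f_2) = B(f_2, f_1)$ and $B(-f_1, -f_2) = B^{*}(f_1, f_2)$.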

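The bispectrum is a symmetric function (for example, $B(f_1, f_2) = B(f_2, f_1) = B^{*}(-f_1, -f_2)$ for a real-valued signal), as shown in Figure 1. The shaded area is the non-redundant region of computation of the bispectrum, $0 \le f_2 \le f_1 \le f_1 + f_2 \le 1$, which is sufficient to describe the whole bispectrum [36].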
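To restrict features to the non-redundant region, a boolean mask over the FFT grid can be built as below. This is a sketch that assumes the Nyquist-normalized convention $f = 2k/\text{nfft}$ for FFT bin $k$; the helper name is hypothetical.

```python
import numpy as np

def nonredundant_mask(nfft):
    """Boolean mask over FFT bins (k1, k2) of an nfft x nfft bispectrum selecting
    0 <= f2 <= f1 <= f1 + f2 <= 1, with f = 2k / nfft (1 = Nyquist frequency)."""
    f = 2.0 * np.arange(nfft) / nfft
    f1, f2 = np.meshgrid(f, f, indexing="ij")   # rows index f1, columns index f2
    return (f2 >= 0) & (f2 <= f1) & (f1 + f2 <= 1.0)

mask = nonredundant_mask(8)
print(int(mask.sum()))                          # 9 grid points for nfft = 8

# Example: keep only the non-redundant magnitudes of a (placeholder) bispectrum array
B = np.ones((8, 8), dtype=complex)
values = np.abs(B)[mask]
print(values.shape)                             # (9,)
```

Bins with $f > 1$ represent negative frequencies in the FFT layout and are excluded automatically by the condition $f_1 + f_2 \le 1$.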

4. Results and Discussions

Bispectrum features were extracted from the EEG signals of three groups of subjects (LBD, RBD, and NC) for the analysis of six emotions, namely anger (A), disgust (D), fear (F), happiness (H), sadness (S), and surprise (SU). Contour plots of the estimated bispectrum for the anger emotion of one subject from the LBD group are shown for the alpha, beta, and gamma bands in Figure 4. The plots of the bispectrum magnitude show the relationship between the two bispectrum frequency variables, $f_1$ and $f_2$, for the anger emotion. In Figure 4, the peaks mark pairs of frequencies $f_1$ (x-axis) and $f_2$ (y-axis) that are phase coupled. Phase coupling between frequency variables indicates the presence of quadratic phase coupling (QPC) [31], which here represents the underlying neuronal interaction of the emotional state at the frequencies $f_1$ and $f_2$. A higher magnitude indicates stronger QPC between the frequencies. Red represents the largest bispectrum magnitude, while blue represents the smallest.

The distribution of the bispectrum over the ($f_1$, $f_2$) plane differs in each frequency band. The alpha band in Figure 4a shows more bispectrum concentration at lower phase-coupled frequencies, between (0.04, 0.04) Hz and (0.1, 0.1) Hz, in the non-redundant region and the other symmetric regions. In contrast, the beta band in Figure 4b and the gamma band in Figure 4c show bispectrum concentration at higher phase-coupled frequencies: between (0.1, 0.1) Hz and (0.2, 0.2) Hz in the beta band, and between (0.3, 0.3) Hz and (0.4, 0.4) Hz in the gamma band. Moreover, the maximum bispectrum magnitude is lowest in the alpha band, larger in the beta band, and largest in the gamma band.

Figure 5, Figure 6, and Figure 7 show the bispectrum plots in the non-redundant region and one symmetry region for the six emotions of Subject #1 from the NC, LBD, and RBD groups, respectively. These figures show that the different emotional states have different bispectrum distributions over the plane, each with different phase-coupled peaks and maximum magnitudes. In past studies, the bispectrum has been reported as a useful feature for signal classification because it shows distinctive distributions under different conditions, such as left-hand and right-hand motor imagery [42], allowing these two conditions to be recognized from EEG. Another study showed that the bispectrum differs before and during meditation [43], with a more phase-coupled distribution and a larger maximum bispectrum magnitude during meditation. In a non-human experiment, induced ischemic stroke in rats produced different bispectrum distributions in different states of ischemia [44], with the bispectrum distribution decreasing as the rats moved from the normal to the ischemic state. Accordingly, the distinctive bispectrum patterns of the six emotional states presented in this study suggest that the emotional states of each group can be distinguished using bispectrum analysis. The significance of the differences between emotional states in the bispectrum features is further validated by the ANOVA results shown in Table 2.

In the experiment, six types of bispectrum features were extracted from the preprocessed EEG data of the LBD, RBD, and NC groups. ANOVA was performed on the extracted features, and the degrees of freedom were 45,354. The results are shown in Table 2 for three frequency bands in the LBD, RBD, and NC groups, respectively. A p-value less than or equal to 0.05 indicates that the means of at least some of the emotional states differ significantly. Significant bispectrum features suggest that neuronal subcomponents interact at different frequencies in different emotional states. The shaded p-values mark the features that are not statistically significant between the means of the emotion classes, as they are larger than 0.05. All bispectrum features were statistically significant in LBD, RBD, and NC except the second moment of the diagonal elements of the bispectrum (H4); thus, H4 was discarded from classification. Moreover, Table 2 shows that the F values are higher for H1 and H3 in LBD, H2 and H5 in RBD, and v, H5, and H2 in NC. The LBD group has the highest overall F values, while the NC group has smaller values than both the LBD and RBD groups.
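For reference, the one-way ANOVA F statistic compares the between-class and within-class variance of a feature across the emotional states, with $k - 1$ and $N - k$ degrees of freedom for $k$ classes and $N$ observations. The minimal NumPy sketch below computes it; in practice, `scipy.stats.f_oneway` returns both the statistic and the p-value. The function name and the example data are invented for illustration.

```python
import numpy as np

def one_way_anova(groups):
    """One-way ANOVA F statistic with its degrees of freedom.

    groups: list of 1-D samples, one per class (e.g. one per emotional state)."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    N = sum(g.size for g in groups)
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, N - k
    F = (ss_between / df_between) / (ss_within / df_within)
    return F, df_between, df_within

# Toy check that can be verified by hand: F = 13.5 with (1, 4) degrees of freedom
print(one_way_anova([[1, 2, 3], [4, 5, 6]]))

# Hypothetical feature values for six emotional states, 60 observations each
rng = np.random.default_rng(0)
feature_by_emotion = [rng.normal(m, 1.0, size=60) for m in (0.0, 0.2, 0.4, 0.6, 0.8, 1.0)]
F, df1, df2 = one_way_anova(feature_by_emotion)
print(F, df1, df2)
```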

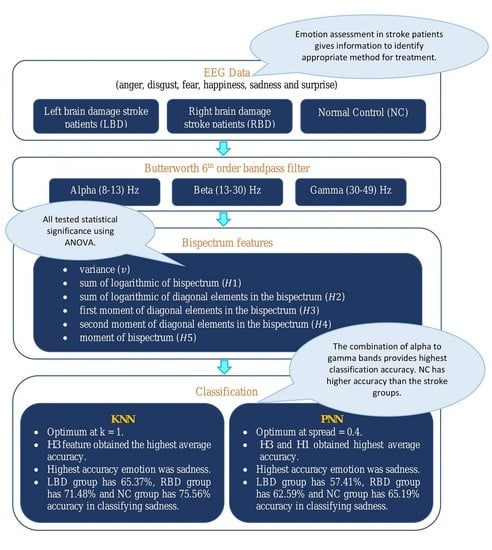

For emotion classification, the classifiers were trained with varying k values for KNN and varying spread values for PNN, and they were tested for all groups and frequency bands. Figure 8, Figure 9, and Figure 10 show the classification performance for varying k values in the three individual EEG frequency sub-bands (alpha, beta, and gamma) and in the combination of the three bands, using the Cityblock KNN classifier. In the figures, the average accuracy of the bispectrum features is similar across all k values tested for the alpha, beta, and gamma bands. However, a k value of 1 achieved the highest average accuracy when using the features from the combined alpha to gamma bands. Moreover, as shown in Figure 8, Figure 9, and Figure 10, the combination of the alpha to gamma bands performs considerably better than the individual frequency bands for all k values. Among the three individual EEG sub-bands, the beta band performs best.
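A minimal sketch of a KNN classifier with the city-block (L1, Manhattan) distance follows. The validation scheme is not described in this section, so leave-one-out accuracy is used here purely as an assumption; the function names and the synthetic six-class data are hypothetical stand-ins for the bispectrum features.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=1):
    """k-nearest-neighbour prediction with the city-block (L1) distance.
    Labels must be non-negative integers; vote ties go to the smallest label."""
    d = np.abs(X_test[:, None, :] - X_train[None, :, :]).sum(axis=2)  # (n_test, n_train)
    nearest = np.argsort(d, axis=1, kind="stable")[:, :k]
    return np.array([np.bincount(votes).argmax() for votes in y_train[nearest]])

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy (an assumed validation scheme, for illustration only)."""
    idx = np.arange(len(X))
    hits = [knn_predict(X[idx != i], y[idx != i], X[i:i + 1], k)[0] == y[i] for i in idx]
    return float(np.mean(hits))

# City-block and Euclidean distances can disagree on the nearest neighbour
X_train = np.array([[3.0, 0.0], [2.0, 2.0]])
y_train = np.array([0, 1])
print(knn_predict(X_train, y_train, np.array([[0.0, 0.0]])))  # [0]: L1 distances 3 vs 4 (Euclidean would pick 1)

# Sweep k on synthetic six-class data
rng = np.random.default_rng(0)
centers = rng.normal(0.0, 3.0, size=(6, 5))
X = np.vstack([c + rng.normal(0.0, 1.0, size=(30, 5)) for c in centers])
y = np.repeat(np.arange(6), 30)
for k in (1, 3, 5, 7):
    print(k, loo_accuracy(X, y, k))
```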

In Figure 11, Figure 12, and Figure 13, the average emotion classification accuracy for varying spread values using the PNN classifier is plotted for LBD, RBD, and NC, respectively. For most features, the accuracy is stable for spread values between 0.1 and 0.6, and it then drops gradually as the spread value increases beyond 0.6. A spread value of 0.4 was chosen to classify the six emotional states, as it achieved the optimum accuracy for most features. As with KNN, the combination of frequency bands has the highest average accuracy for all spread values, and the beta band is the best-performing individual band among the three frequency sub-bands in all groups.
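The role of the spread parameter can be seen in a minimal probabilistic neural network (PNN) sketch. We assume the Gaussian kernel convention of MATLAB's radial basis networks, $\exp(-(0.8326\, d/\text{spread})^2)$, which equals 0.5 at a distance $d$ equal to the spread; the exact implementation used in the study is not specified here, and the function name, data, and train/test split are hypothetical.

```python
import numpy as np

def pnn_predict(X_train, y_train, X_test, spread=0.4):
    """Probabilistic neural network (Parzen-window) classifier.

    Pattern layer: Gaussian kernel exp(-(0.8326 * d / spread)**2) of the Euclidean
    distance d, which equals 0.5 when d == spread. Summation layer: kernel outputs
    summed per class. Output layer: the class with the largest sum. (If every kernel
    underflows to 0 because the spread is tiny, argmax falls back to the first class.)"""
    d = np.sqrt(((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2))
    activation = np.exp(-(0.8326 * d / spread) ** 2)            # (n_test, n_train)
    classes = np.unique(y_train)
    scores = np.stack([activation[:, y_train == c].sum(axis=1) for c in classes], axis=1)
    return classes[np.argmax(scores, axis=1)]

# Sweep the spread on synthetic, standardized six-class data
rng = np.random.default_rng(1)
centers = rng.normal(0.0, 1.0, size=(6, 5))
X = np.vstack([c + rng.normal(0.0, 0.5, size=(40, 5)) for c in centers])
y = np.repeat(np.arange(6), 40)
X = (X - X.mean(axis=0)) / X.std(axis=0)       # standardize features
train = np.arange(len(X)) % 2 == 0             # simple even/odd split, for illustration
for spread in (0.1, 0.4, 0.8, 1.6):
    acc = np.mean(pnn_predict(X[train], y[train], X[~train], spread) == y[~train])
    print(spread, acc)
```

A small spread makes each training pattern act almost like a nearest neighbour, while a large spread smooths the class densities until they overlap, which is consistent with accuracy dropping at larger spread values.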

Table 3 shows the average accuracy over all emotional states for the bispectrum features from the combination of all bands from alpha to gamma, using the KNN and PNN classifiers. For both classifiers, the optimum parameters are the same for LBD, RBD, and NC: the optimum k value for KNN is 1 for all three groups, and the optimum spread value for PNN is 0.4. As shown in Table 3, KNN achieves a higher average accuracy than PNN for all three groups. Notably, the H3 feature has the highest average accuracy in all three groups using KNN and also achieves the highest accuracy in the LBD group using PNN, whereas the H1 feature obtains the highest average accuracy in RBD and NC using PNN. Overall, the top three features are H3, H1, and H2 for all groups, whereas the worst-performing bispectrum feature is the variance. The highest average classification accuracy, 65.40%, is obtained in the NC group using KNN. Hence, the H3 feature is considered the most effective bispectrum feature in this study.

The confusion matrices of the H3 feature for KNN emotion classification are presented in Table 4, Table 5, and Table 6 for LBD, RBD, and NC, respectively. Table 4 shows that happiness is the highest predicted class in LBD. In Table 5, the RBD group has the highest predicted values for the sadness and surprise emotions, whereas the NC group has the highest predicted value for the sadness emotion (Table 6). For PNN classification, the confusion matrices are presented in Table 7, Table 8, and Table 9 for each subject group. Likewise, PNN classification predicted the happiness emotion correctly most often in the LBD group, as shown in Table 7, while the sadness emotion has the highest classification accuracy in the RBD and NC groups, as shown in Table 8 and Table 9.

The classification rates for individual emotions using the KNN classifier are shown in Figure 14. The emotion with the highest accuracy in all groups was sadness, with 65.37% for LBD, 71.48% for RBD, and 75.56% for NC. Meanwhile, fear recorded the lowest accuracy in all three groups: 53.52%, 57.96%, and 60.74% for LBD, RBD, and NC, respectively.
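Per-emotion classification rates of this kind can be derived from a confusion matrix as the diagonal divided by the row sums, that is, the recall of each true class. Reading the reported rates this way is an assumption, and the labels below are invented purely for illustration; they are not data from this study.

```python
import numpy as np

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]

def confusion_matrix(y_true, y_pred, n_classes):
    """Counts with rows = true class and columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def per_class_accuracy(cm):
    """Fraction of each true class predicted correctly: diagonal / row sum."""
    return np.diag(cm) / cm.sum(axis=1)

# Hypothetical true and predicted labels (indices into EMOTIONS)
y_true = [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5]
y_pred = [0, 2, 1, 1, 2, 5, 3, 3, 4, 4, 5, 0]
cm = confusion_matrix(y_true, y_pred, len(EMOTIONS))
for name, acc in zip(EMOTIONS, per_class_accuracy(cm)):
    print(f"{name:10s} {acc:.2f}")
print("overall", np.trace(cm) / cm.sum())   # 0.75 for these labels
```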

The accuracy of the individual emotional states classified using the PNN classifier is shown in Figure 15. As with KNN, sadness was the emotion with the highest accuracy, with 57.41% for LBD, 62.59% for RBD, and 65.19% for NC. In Figure 15, the lowest classification accuracy for the LBD and NC groups is for the surprise emotion, at 50.93% and 47.22%, respectively. In the RBD group, on the other hand, disgust recorded the lowest classification accuracy, only 50.00%.

In this work, surprise and fear achieved lower recognition rates than the other emotions. This agrees with past studies reporting no convincing evidence that the surprise and fear emotions can be accurately recognized [47,48,49]. In those studies, happiness was the most accurately recognized emotion, and the facial expressions of anger, sadness, and disgust were also well recognized [45,46].

The emotional state with the highest classification accuracy in each group (LBD, RBD, and NC) suggests that this emotion is more distinct than the other emotional states in that group. In the current results, all three groups show the highest classification accuracy for sadness. Meanwhile, the NC group exhibits the highest average accuracy for both classifiers, followed by RBD, with LBD trailing behind.

As a result, the LBD and RBD stroke patients in this study recorded lower classification accuracy than the NC group. This suggests that the emotional states of the NC subjects are more distinct than those of the stroke patients. To validate the differences among the three groups, ANOVA was applied to the average accuracies obtained from the KNN classifier, and the resulting p-value was less than 0.05. Hence, the differences in emotion classification accuracy among the LBD, RBD, and NC groups are statistically significant, which implies that emotional experiences differ between the LBD, RBD, and NC groups. Overall, the NC group had the highest emotion classification accuracy, followed by the RBD group, with the LBD group performing worst. Therefore, EEG-based emotion classification with machine learning was most effective for the NC group and least effective for the LBD group.

This work differs from past studies in which only second order statistics, such as the power spectrum [50,51], a linear feature, were used. The power spectrum reveals only the amplitude information of EEG signals; phase information, such as phase coupling in the signal, cannot be observed with the power spectrum. Furthermore, linear approaches ignore the nonlinear characteristics of EEG signals; thus, the bispectrum was used in this study to detect and characterize these nonlinearities. In addition, the bispectrum provided distinctive information for the different emotional states that was useful for emotion classification, achieving a highest accuracy of 75.56% with the H3 bispectrum feature.