Augmented Reality Implementations in Stomatology

Abstract

1. Introduction

2. Methods

2.1. Panoramic Radiography

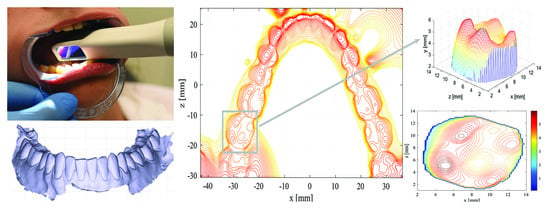

2.2. Camera Systems in Orthodontic Measures Evaluation

2.3. Scanning Technologies in Spatial Model Construction

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Procházka, A.; Dostálová, T.; Kašparová, M.; Vyšata, O.; Charvátová, H.; Sanei, S.; Mařík, V. Augmented Reality Implementations in Stomatology. Appl. Sci. 2019, 9, 2929. https://doi.org/10.3390/app9142929
