Multiple Stationary Human Targets Detection in Through-Wall UWB Radar Based on Convolutional Neural Network

Abstract

1. Introduction

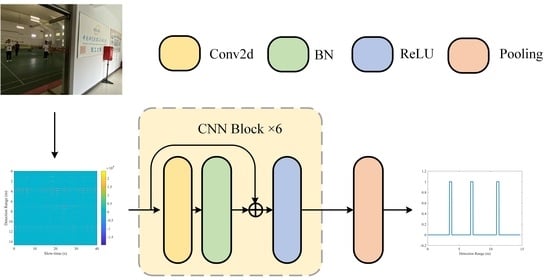

2. Signal Model

3. Proposed Multiple Stationary Human Targets Detection Method

3.1. Step A: Pre-Processing

3.1.1. Adaptive Background Subtraction

3.1.2. Filtering in Fast-Time Dimension

3.1.3. Advance Normalization

3.2. Step B: TPOI Extraction

3.3. Step C: TPOI Clustering

4. Dataset and Experimental Setups

5. Results

5.1. Experiment with a Single Target

5.2. Experiment with Multiple Targets

5.3. Experiments with Different Target Intervals

5.4. Experiments in Different Scenarios

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Vu, V.T.; Sjogren, T.K.; Pettersson, M.I.; Gustavsson, A.; Ulander, L. Detection of moving targets by focusing in UWB SAR—Theory and experimental results. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3799–3815.

- Huang, Q.; Qu, L.; Wu, B.; Fang, G. UWB through-wall imaging based on compressive sensing. IEEE Trans. Geosci. Remote Sens. 2009, 48, 1408–1415.

- Le, C.; Dogaru, T.; Nguyen, L.; Ressler, M. Ultrawideband (UWB) radar imaging of building interior: Measurements and predictions. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1409–1420.

- Yarovoy, A.G.; Ligthart, L.P.; Matuzas, J.; Levitas, B. UWB radar for human being detection. IEEE Aerosp. Electron. Syst. Mag. 2006, 21, 10–14.

- Lv, H.; Lu, G.H.; Jing, X.J.; Wang, J. A new ultra-wideband radar for detecting survivors buried under earthquake rubbles. Microw. Opt. Technol. Lett. 2010, 52, 2621–2624.

- Liu, L.; Fang, G. A novel UWB sampling receiver and its applications for impulse GPR systems. IEEE Geosci. Remote Sens. Lett. 2010, 7, 690–693.

- Zhuge, X.; Yarovoy, A.G. A sparse aperture MIMO-SAR-based UWB imaging system for concealed weapon detection. IEEE Trans. Geosci. Remote Sens. 2010, 49, 509–518.

- Wu, S.; Tan, K.; Xu, Y.; Chen, J.; Meng, S.; Fang, G. A simple strategy for moving target imaging via an experimental UWB through-wall radar. In Proceedings of the 2012 14th International Conference on Ground Penetrating Radar (GPR), Shanghai, China, 4–8 June 2012; pp. 961–965.

- Rohman, B.P.A.; Andra, M.B.; Nishimoto, M. Through-the-Wall Human Respiration Detection Using UWB Impulse Radar on Hovering Drone. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6572–6584.

- Ma, Y.; Qi, F.; Wang, P.; Liang, F.; Lv, H.; Yu, X.; Li, Z.; Xue, H.; Wang, J.; Zhang, Y. Multiscale residual attention network for distinguishing stationary humans and common animals under through-wall condition using ultra-wideband radar. IEEE Access 2020, 8, 121572–121583.

- Shen, H.; Xu, C.; Yang, Y.; Sun, L.; Cai, Z.; Bai, L.; Clancy, E.; Huang, X. Respiration and heartbeat rates measurement based on autocorrelation using IR-UWB radar. IEEE Trans. Circuits Syst. II Express Briefs 2018, 65, 1470–1474.

- Sachs, J.; Aftanas, M.; Crabbe, S.; Drutarovsky, M.; Klukas, R.; Kocur, D.; Nguyen, T.T.; Peyerl, P.; Rovnakova, J.; Zaikov, E. Detection and tracking of moving or trapped people hidden by obstacles using ultra-wideband pseudo-noise radar. In Proceedings of the 2008 European Radar Conference, Amsterdam, The Netherlands, 30–31 October 2008; pp. 408–411.

- Yan, K.; Wu, S.; Ye, S.; Fang, G. A Novel Wireless-Netted UWB Life-Detection Radar System for Quasi-Static Person Sensing. Appl. Sci. 2021, 11, 424.

- Pan, J.; Ye, S.; Shi, C.; Yan, K.; Liu, X.; Ni, Z.; Yang, G.; Fang, G. 3D imaging of moving targets for ultra-wideband MIMO through-wall radar system. IET Radar Sonar Navigat. 2021, 15, 261–273.

- Xia, Z.; Fang, G.; Ye, S.; Zhang, Q.; Chen, C.; Yin, H. A novel handheld pseudo random coded UWB radar for human sensing applications. IEICE Electron. Expr. 2014, 11, 20140981.

- Charvat, G.L.; Kempel, L.C.; Rothwell, E.J.; Coleman, C.M.; Mokole, E. A through-dielectric radar imaging system. IEEE Trans. Antennas Propag. 2010, 58, 2594–2603.

- Harikesh, D.; Chauhan, S.S.; Basu, A.; Abegaonkar, M.P.; Koul, S.H. Through the Wall Human Subject Localization and Respiration Rate Detection Using Multichannel Doppler Radar. IEEE Sens. J. 2020, 21, 1510–1518.

- Wang, G.; Munoz-Ferreras, J.M.; Gu, C.; Li, C.; Gomez-Garcia, R. Application of linear-frequency-modulated continuous-wave (LFMCW) radars for tracking of vital signs. IEEE Trans. Microw. Theory Tech. 2014, 62, 1387–1399.

- Wang, G.; Gu, C.; Inoue, T.; Li, C. A hybrid FMCW-interferometry radar for indoor precise positioning and versatile life activity monitoring. IEEE Trans. Microw. Theory Tech. 2014, 62, 2812–2822.

- Charvat, G.L.; Kempel, L.C.; Rothwell, E.J.; Coleman, C.M.; Mokole, E.L. A through-dielectric ultrawideband (UWB) switched-antenna-array radar imaging system. IEEE Trans. Antennas Propag. 2012, 60, 5495–5500.

- Browne, K.E.; Burkholder, R.J.; Volakis, J.L. Through-wall opportunistic sensing system utilizing a low-cost flat-panel array. IEEE Trans. Antennas Propag. 2010, 59, 859–868.

- Liu, L.; Liu, S. Remote detection of human vital sign with stepped-frequency continuous wave radar. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 775–782.

- Lu, B.; Song, Q.; Zhou, Z.; Wang, H. A SFCW radar for through wall imaging and motion detection. In Proceedings of the 2011 8th European Radar Conference, Manchester, UK, 12–14 October 2011; pp. 325–328.

- Lu, B.; Song, Q.; Zhou, Z.; Zhang, X. Detection of human beings in motion behind the wall using SAR interferogram. IEEE Geosci. Remote Sens. Lett. 2012, 9, 968–971.

- Lazaro, A.; Girbau, D.; Villarino, R. Analysis of vital signs monitoring using an IR-UWB radar. Progr. Electromagn. Res. 2010, 100, 265–284.

- Sharafi, A.; Baboli, M.; Eshghi, M.; Ahmadian, A. Respiration-rate estimation of a moving target using impulse-based ultra wideband radars. Australas. Phys. Eng. Sci. Med. 2012, 35, 31–39.

- Liu, L.; Liu, Z.; Barrowes, B.E. Through-wall bio-radiolocation with UWB impulse radar: Observation, simulation and signal extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 791–798.

- Staderini, E.M. UWB radar in medicine. IEEE AESS Syst. Mag. 2002, 17, 13–18.

- Venkatesh, S.; Anderson, C.R.; Rivera, N.V.; Buehrer, R.M. Implementation and analysis of respiration-rate estimation using impulse-based UWB. In Proceedings of the 2005 IEEE Military Communications Conference, Atlantic City, NJ, USA, 17–20 October 2005; pp. 3314–3320.

- Nezirovic, A. Stationary clutter-and linear-trend suppression in impulse-radar-based respiratory motion detection. In Proceedings of the 2011 IEEE International Conference on Ultra-Wideband (ICUWB), Bologna, Italy, 14–16 September 2011; pp. 331–335.

- Nezirovic, A.; Yarovoy, A.G.; Ligthart, L.P. Signal processing for improved detection of trapped victims using UWB radar. IEEE Trans. Geosci. Remote Sens. 2009, 48, 2005–2014.

- Xu, Y.; Dai, S.; Wu, S.; Chen, J.; Fang, G.Y. Vital sign detection method based on multiple higher order cumulant for ultrawideband radar. IEEE Trans. Geosci. Remote Sens. 2011, 50, 1254–1265.

- Zhang, Y.; Chen, F.; Xue, H.; Li, Z.; An, Q.; Wang, J. Detection and identification of multiple stationary human targets via Bio-Radar based on the cross-correlation method. Sensors 2016, 16, 1793.

- Lv, H.; Li, W.; Li, Z.; Zhang, Y.; Jiao, T.; Xue, H.; Liu, M.; Jing, X.; Wang, J. Characterization and identification of IR-UWB respiratory-motion response of trapped victims. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7195–7204.

- Xu, Y.; Shao, J.; Chen, J.; Fang, G.Y. Automatic detection of multiple trapped victims by ultra-wideband radar. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1498–1502.

- Acar, Y.E.; Saritas, I.; Yaldiz, E. An experimental study: Detecting the respiration rates of multiple stationary human targets by stepped frequency continuous wave radar. Measurement 2021, 167, 108268.

- Song, Y.; Jin, T.; Dai, Y.; Song, Y.; Zhou, X. Through-wall human pose reconstruction via UWB MIMO radar and 3D CNN. Remote Sens. 2021, 13, 241.

- Li, X.; He, Y.; Fioranelli, F.; Jing, X.; Yarovoy, A.; Yang, Y. Human motion recognition with limited radar micro-Doppler signatures. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6586–6599.

- Lai, G.; Lou, X.; Ye, W. Radar-Based Human Activity Recognition With 1-D Dense Attention Network. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Chen, P.; Guo, S.; Li, H.; Wang, X.; Cui, G.; Jiang, C.; Kong, L. Through-wall human motion recognition based on transfer learning and ensemble learning. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Li, H.; Cui, G.; Guo, S.; Kong, L.; Yang, X. Human target detection based on FCN for through-the-wall radar imaging. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1565–1569.

- Zhang, J.; Tao, J.; Shi, Z. Doppler-radar based hand gesture recognition system using convolutional neural networks. In Proceedings of the International Conference in Communications, Signal Processing, and Systems, Harbin, China, 14–17 July 2017; pp. 1096–1113.

- Jia, Y.; Guo, Y.; Song, R.; Wang, G.; Chen, S.; Zhong, X.; Cui, G. ResNet-based counting algorithm for moving targets in through-the-wall radar. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1034–1038.

- Hu, J.; Jiang, T.; Cui, Z.; Hou, T. Design of UWB pulses based on Gaussian pulse. In Proceedings of the 2008 3rd IEEE International Conference on Nano/Micro Engineered and Molecular Systems, Sanya, China, 6–9 January 2008; pp. 651–655.

- Nahar, S.; Phan, T.; Quaiyum, F.; Ren, L.; Fathy, A.E.; Kilic, O. An electromagnetic model of human vital signs detection and its experimental validation. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 338–349.

- Zetik, R.; Crabbe, S.; Krajnak, J.; Peyerl, P.; Sachs, J.; Thoma, R. Detection and localization of persons behind obstacles using M-sequence through-the-wall radar. In Proceedings of the Sensors, and Command, Control, Communications, and Intelligence (C3I) Technologies for Homeland Security and Homeland Defense V, Orlando, FL, USA, 10 May 2006; p. 6201.

- Wu, S.; Yao, S.; Liu, W.; Tan, K.; Xia, Z.; Meng, S.; Chen, J.; Fang, G.; Yin, H. Study on a novel UWB linear array human respiration model and detection method. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 125–140.

- Du, G.; Wang, X.; Wang, G.; Zhang, Y.; Li, D. Speech recognition based on convolutional neural networks. In Proceedings of the 2016 IEEE International Conference on Signal and Image Processing (ICSIP), Beijing, China, 13–15 August 2016; pp. 708–711.

- Dumitru, E.; Christian, S.; Alexander, T.; Dragomir, A. Scalable object detection using deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2147–2154.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323.

- Wilpon, J.; Rabiner, L. A modified K-means clustering algorithm for use in isolated word recognition. IEEE Trans. Signal Process. 1985, 33, 587–594.

- Yang, G.; Ye, S.; Ji, Y.; Zhang, X.; Fang, G. Radiation Enhancement of an Ultrawideband Unidirectional Folded Bowtie Antenna for GPR Applications. IEEE Access 2020, 8, 182218–182228.

| Index | Input Size | Operator | Kernel Size | Stride | Padding | Output Size |
|---|---|---|---|---|---|---|
| 1 | 1 × 800 × 600 | Conv2d | 3 × 3 | 1 × 1 | 1 × 1 | 64 × 800 × 600 |
| 2 | 64 × 800 × 600 | Conv2d | 3 × 3 | 1 × 1 | 1 × 1 | 128 × 800 × 600 |
| 3 | 128 × 800 × 600 | Conv2d | 3 × 3 | 1 × 1 | 1 × 1 | 256 × 800 × 600 |
| 4 | 256 × 800 × 600 | Conv2d | 3 × 3 | 1 × 1 | 1 × 1 | 128 × 800 × 600 |
| 5 | 128 × 800 × 600 | Conv2d | 3 × 3 | 1 × 1 | 1 × 1 | 64 × 800 × 600 |
| 6 | 64 × 800 × 600 | Conv2d | 3 × 3 | 1 × 1 | 1 × 1 | 1 × 800 × 600 |
| 7 | 1 × 800 × 600 | Pooling | - | - | - | 1 × 800 × 1 |
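
The output sizes in the layer table above follow from the standard convolution size formula, which can be checked with a few lines of plain Python. This is a minimal sketch, not the authors' code: the function names are hypothetical, dilation is assumed to be 1, each Conv2d is assumed to carry a bias term, and row 7 is assumed to be a pool over the entire 600-sample axis because the table gives no kernel size for it. Any batch-normalization or activation layers are left out because the table does not list them.

```python
# Illustrative shape and parameter check for the layer table.
# All names below are hypothetical and not taken from the paper.

def conv_out(size, kernel, stride, padding):
    """Output length along one axis for a convolution with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1

# (in_channels, out_channels) for the six Conv2d rows of the table.
CHANNELS = [(1, 64), (64, 128), (128, 256), (256, 128), (128, 64), (64, 1)]

def trace_shapes(height=800, width=600, kernel=3, stride=1, padding=1):
    """Return the tensor shape before layer 1 and after each of the 7 layers."""
    shapes = [(1, height, width)]
    for _, c_out in CHANNELS:
        height = conv_out(height, kernel, stride, padding)
        width = conv_out(width, kernel, stride, padding)
        shapes.append((c_out, height, width))
    # Row 7 (assumption): pooling collapses the last axis to a single value.
    shapes.append((1, height, 1))
    return shapes

def count_conv_params(kernel=3, bias=True):
    """Weights (plus optional biases) summed over the six Conv2d layers."""
    return sum(
        c_in * c_out * kernel * kernel + (c_out if bias else 0)
        for c_in, c_out in CHANNELS
    )

if __name__ == "__main__":
    for index, shape in enumerate(trace_shapes()):
        print(index, shape)
    print("conv parameters:", count_conv_params())
```

Because every convolution uses a 3 × 3 kernel with stride 1 and padding 1, the 800 × 600 spatial size is preserved through all six layers, and only the channel count changes until the final pooling step.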

| Parameter | Value |
|---|---|
| Pulse waveform | Gaussian pulse |
| −10 dB bandwidth | 400 MHz |
| Pulse repetition frequency | 32 kHz |
| Number of averages | 128 |
| Fast-time sampling rate | 16 GHz |
| Slow-time sampling rate | 15 Hz |
| Sampling points | 4096 |
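
A few derived quantities follow directly from the radar parameters above. The sketch below is illustrative only and rests on assumptions that the table does not state: "Sampling points" is read as the number of fast-time samples per trace, propagation is taken at the free-space speed of light (walls slow the wave and shorten the true range), and the c/(2B) expression is the usual rule-of-thumb range resolution applied to the −10 dB bandwidth. The function and key names are hypothetical.

```python
# Derived quantities from the radar parameter table (illustrative sketch).
# Assumptions: free-space propagation; "sampling points" = fast-time samples
# per trace; c / (2B) used as a rule-of-thumb range resolution.

C = 299_792_458.0  # speed of light in vacuum, m/s

def derived_quantities(bandwidth_hz=400e6, fast_rate_hz=16e9,
                       slow_rate_hz=15.0, n_samples=4096):
    dt = 1.0 / fast_rate_hz      # fast-time sample spacing, s
    window = n_samples * dt      # recorded time window per trace, s
    return {
        "fast_time_step_s": dt,
        "time_window_s": window,
        "max_range_m": C * window / 2,           # two-way travel, free space
        "range_bin_m": C * dt / 2,               # range spacing per sample
        "range_resolution_m": C / (2 * bandwidth_hz),
        "slow_time_nyquist_hz": slow_rate_hz / 2,
    }

if __name__ == "__main__":
    for name, value in derived_quantities().items():
        print(f"{name}: {value:.6g}")
```

Under these assumptions the time window is 256 ns, which corresponds to roughly 38 m of free-space range, and the 7.5 Hz slow-time Nyquist limit sits well above typical adult respiration rates of a few tenths of a hertz.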

| Method | Missed Alarm Rate | Average Range Error |
|---|---|---|
| The method in [34] | 65% | 15 cm |
| The method in [35] | 39% | 17 cm |
| The method in [36] | 44% | 15 cm |
| The proposed method | 3% | 6 cm |

| Method | Calculation Time |
|---|---|
| The method in [34] | 0.18 s |
| The method in [35] | 0.42 s |
| The method in [36] | 0.12 s |
| The proposed method | 0.14 s |

| Experiment | Actual Range | Extracted Range |
|---|---|---|
| Experiment 5.1 | 5.00 m and 6.00 m | 4.94 m and 6.07 m |
| Experiment 5.2 | 5.50 m and 6.00 m | 5.50 m and 6.06 m |
| Experiment 5.3 | 5.70 m and 6.00 m | 5.88 m |
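
The per-target range errors implied by the table above can be worked out with simple arithmetic. The sketch below only restates the table values; the data layout and function name are hypothetical, and a row is treated as "resolved" simply when the number of extracted ranges equals the number of actual targets.

```python
# Range-error arithmetic for the actual vs. extracted ranges in the table.
# Values are copied from the table; names are hypothetical.

ROWS = [
    ("Experiment 5.1", (5.00, 6.00), (4.94, 6.07)),
    ("Experiment 5.2", (5.50, 6.00), (5.50, 6.06)),
    ("Experiment 5.3", (5.70, 6.00), (5.88,)),
]

def summarize(rows):
    """Return target spacing and per-target absolute range error per row."""
    summary = []
    for name, actual, extracted in rows:
        spacing = round(actual[1] - actual[0], 2)
        resolved = len(extracted) == len(actual)
        # Errors are only defined when each target has its own detection.
        errors = (
            [round(abs(e - a), 2) for a, e in zip(actual, extracted)]
            if resolved else None
        )
        summary.append({
            "experiment": name,
            "spacing_m": spacing,
            "resolved": resolved,
            "errors_m": errors,
        })
    return summary

if __name__ == "__main__":
    for row in summarize(ROWS):
        print(row)
```

With the tabulated values, the two targets are separated at spacings of 1.00 m and 0.50 m with errors of at most 7 cm, while at 0.30 m spacing only a single range (5.88 m) is extracted.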

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, C.; Zheng, Z.; Pan, J.; Ni, Z.-K.; Ye, S.; Fang, G. Multiple Stationary Human Targets Detection in Through-Wall UWB Radar Based on Convolutional Neural Network. Appl. Sci. 2022, 12, 4720. https://doi.org/10.3390/app12094720
