Timing Predictability and Security in Safety-Critical Industrial Cyber-Physical Systems: A Position Paper

Abstract

1. Introduction

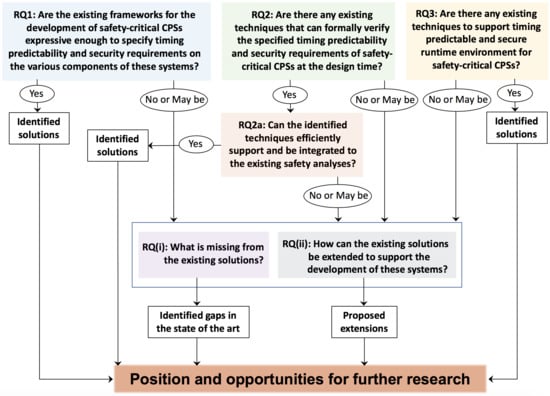

1.1. Paper Contributions

- RQ1: Are the existing frameworks for the development of safety-critical CPSs expressive enough to specify timing predictability and security requirements on the various components in these systems?

- RQ2: Are there any existing techniques that can formally verify the specified timing predictability and security requirements of safety-critical CPSs at design time?

- RQ2a: If the answer to RQ2 is “yes”, can the identified techniques efficiently support, and be integrated into, the existing safety analyses?

- RQ3: Are there any existing techniques to support a timing-predictable and secure runtime environment for safety-critical CPSs?

- RQ (i): What is missing from the existing solutions?

- RQ (ii): How can the existing solutions be extended to support the development of time-critical, secure and safety-critical CPSs?

1.2. Paper Outline

2. Running Example: Autonomous Quarry

3. Timing Predictability in CPSs

3.1. Predictability in Time-Critical Embedded Systems

3.2. Predictability in Time-Critical CPSs

- Ready Time of Inputs: The ready time of an input in the CPS is the interval between the instant when the value of a sensor deployed in the physical process changes and the instant when the changed value appears at the input interface of the embedded system. The ready times of all sensor inputs coming from the physical processes should be timing predictable.

- Ready Order of Inputs: When more than one sensor value arrives at the input interface of an embedded system, the computed output that controls the physical processes depends heavily on the arrival order of the inputs. The desired function of the CPS requires a specific arrival order of the inputs from the sensors deployed in the physical processes, and this order must be timing predictable. This is referred to as order predictability.
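The two properties above can be illustrated with a minimal sketch. It assumes that each sensor input carries two timestamps on a common clock, and that a ready-time bound and a required arrival order are given. The names `SensorEvent`, `ready_times_bounded` and `ready_order_respected`, the millisecond unit, and the sensor names are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorEvent:
    """One sensor input; times are in milliseconds on a shared clock (assumption)."""
    sensor: str
    changed_at: float  # instant the sensor value changes in the physical process
    arrived_at: float  # instant the changed value appears at the input interface


def ready_time(event: SensorEvent) -> float:
    """Ready time: interval between the value change and its arrival at the interface."""
    return event.arrived_at - event.changed_at


def ready_times_bounded(events, bound_ms: float) -> bool:
    """True if every input has a non-negative ready time within the given bound."""
    return all(0 <= ready_time(e) <= bound_ms for e in events)


def ready_order_respected(events, required_order) -> bool:
    """True if the inputs arrive in exactly the required sensor order."""
    arrival_order = [e.sensor for e in sorted(events, key=lambda e: e.arrived_at)]
    return arrival_order == list(required_order)


# Hypothetical inputs: sensor_b changes later but arrives first.
events = [
    SensorEvent("sensor_a", changed_at=0.0, arrived_at=4.0),
    SensorEvent("sensor_b", changed_at=1.0, arrived_at=3.0),
]
print(ready_times_bounded(events, bound_ms=5.0))
print(ready_order_respected(events, ["sensor_b", "sensor_a"]))
```

A check of this kind only observes one execution. Timing predictability in the sense used here requires that the bound and the order hold for all executions, which is a matter for design-time analysis rather than runtime monitoring.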

3.3. Timing Predictability as a Prerequisite for Functional Safety

4. Security in Time-Critical CPSs

4.1. Security Challenges and Solutions in Embedded Systems and CPSs

- Resource Gap: Many embedded systems struggle to meet the computation and energy demands of security solutions.

- Flexibility: Because security is dynamic in nature, it can be challenging for embedded systems (which are often static) to provide a platform that is flexible enough to support constant security updates.

- Tamper Resistance: Embedded systems that are capable of executing downloaded applications struggle to counteract attacks caused by malware.

- Security Assurance: Assuring security is a challenge for embedded systems, which tend to be of increasing complexity.

- Cost: Security solutions are usually costly, so it is a challenge to balance an acceptable security level against the system design investment for low-cost devices.

4.2. Security as a Prerequisite for Timing Predictability

4.3. Security as a Prerequisite for Functional Safety

5. Position and Opportunities for Further Research

5.1. Timing Predictability of Safety-Critical CPSs

- Are the existing models, languages and frameworks for the development of CPSs expressive enough to specify the timing requirements not only on the computation and communication times but also on the ready times and ready order of the inputs that are acquired from the physical processes?

- Are there any existing methods and techniques that can formally verify the specified timing requirements at design time to support pre-runtime timing predictability verification of CPSs?

- If the answer to the previous question is “yes”, can the identified techniques efficiently support, and be integrated into, the existing safety analyses?

- Are there any existing techniques to support a timing-predictable runtime environment for CPSs that can provide bounded delays with regard to the computation, communication, ready times and ready order of the sensor inputs?
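To make the notion of a timing requirement that covers ready times as well as computation and communication concrete, the following is a minimal sketch. It assumes that a worst-case bound is available for each of the three delay components and that the bounds compose additively along a single sensor-to-actuator path. The additive composition is a simplifying assumption for illustration, not an analysis proposed in the paper, and the names `TimingBounds`, `end_to_end_bound` and `meets_deadline` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimingBounds:
    """Worst-case delay bounds in milliseconds for one path (hypothetical model)."""
    ready_time_ms: int     # sensor value change -> input interface
    computation_ms: int    # processing inside the embedded system
    communication_ms: int  # network transfer to the actuator side


def end_to_end_bound(bounds: TimingBounds) -> int:
    """Upper bound on the end-to-end delay, assuming the components simply add up."""
    return bounds.ready_time_ms + bounds.computation_ms + bounds.communication_ms


def meets_deadline(bounds: TimingBounds, deadline_ms: int) -> bool:
    """Design-time check: the summed bound must not exceed the deadline."""
    return end_to_end_bound(bounds) <= deadline_ms


# Hypothetical bounds for one path.
path = TimingBounds(ready_time_ms=2, computation_ms=5, communication_ms=3)
print(end_to_end_bound(path))
print(meets_deadline(path, deadline_ms=12))
```

Leaving `ready_time_ms` out of the record would reduce the check to the computation and communication times alone, which is the gap the first question above asks about.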

5.2. Security of Safety-Critical CPSs

- Are the existing models, languages and frameworks for the development of CPSs expressive enough to specify security requirements in CPSs?

- Are there any existing methods and techniques that can formally verify the specified security requirements in CPSs?

- If the answer to the previous question is “yes”, can the identified techniques efficiently support, and be integrated into, the existing safety analyses?

- Are there any existing techniques to support a secure runtime environment for CPSs?

5.3. Artificial Intelligence for Safety-Critical ICPS

5.4. Limitations

6. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| CPS | Cyber-physical System |
| ES | Embedded System |
| ICPS | Industrial Cyber-physical System |
| ML | Machine Learning |
| RL | Reinforcement Learning |
| RSU | Road-side Unit |
| RTOS | Real-time Operating System |
| AUTOSAR | AUTomotive Open System ARchitecture |
| RCM | Rubus Component Model |
| TADL | Timing Augmented Description Language |
| ADL | Architecture Description Language |
| ISO | International Organization for Standardization |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mubeen, S.; Lisova, E.; Vulgarakis Feljan, A. Timing Predictability and Security in Safety-Critical Industrial Cyber-Physical Systems: A Position Paper. Appl. Sci. 2020, 10, 3125. https://doi.org/10.3390/app10093125