Instantaneous Extraction of Indoor Environment from Radar Sensor-Based Mapping

Abstract

1. Introduction

- The proposed method removes ghost targets caused by multipath reflections of radio waves and extracts the structure of the indoor environment.

- The proposed method not only removes ghost targets in general but also partially interpolates unmeasured areas, so the extracted structure of the indoor environment closely resembles the actual environment.

- Unlike conventional structure-extraction methods, the proposed method does not require parameter adjustment for each environment; the same parameter settings are used to extract the structure of different indoor environments.
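The third point contrasts the proposed method with conventional methods that must be tuned per environment. As a minimal sketch, assuming a simple density-style KNN filter (not necessarily the exact KNN variant the authors compared against), the snippet below shows where such parameters enter: the neighbour count `k` and the distance threshold `max_mean_dist` (both hypothetical names) decide whether a 2-D detection is kept as structure or discarded as an isolated ghost.

```python
import math


def knn_ghost_filter(points, k, max_mean_dist):
    """Hypothetical density-style KNN filter for 2-D radar detections.

    A detection is kept as structure if the mean distance to its k nearest
    neighbours is at most max_mean_dist (metres); otherwise it is treated
    as an isolated ghost and discarded. Both k and max_mean_dist must be
    chosen by hand for each environment.
    """
    kept = []
    for i, (xi, yi) in enumerate(points):
        # Sorted distances from detection i to every other detection.
        dists = sorted(
            math.hypot(xi - xj, yi - yj)
            for j, (xj, yj) in enumerate(points)
            if j != i
        )
        nearest = dists[:k]
        if nearest and sum(nearest) / len(nearest) <= max_mean_dist:
            kept.append((xi, yi))
    return kept


# Toy map: a straight wall sampled every 0.1 m plus one isolated ghost.
wall = [(0.1 * n, 3.0) for n in range(21)]
detections = wall + [(5.0, 6.0)]
print(len(knn_ghost_filter(detections, k=3, max_mean_dist=0.5)))  # 21: ghost removed, wall kept
print(len(knn_ghost_filter(detections, k=3, max_mean_dist=0.1)))  # 0: threshold too tight, wall lost too
```

The same detections give very different maps depending on `max_mean_dist`, which illustrates why a fixed, environment-independent setting is a useful property.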

2. Radar Signal Analysis

2.1. Distance and Velocity Estimation Using FMCW Radar Signal

2.2. Angle Estimation Using MIMO Antenna System

3. Indoor Environment Mapping Using Radar Sensor

3.1. Radar Sensor Used in Measurements

3.2. Measurement Environment

3.3. Target Detection Results

4. Proposed Environment Extraction Method

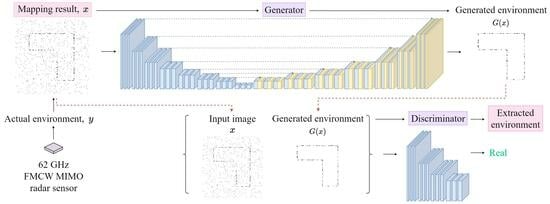

4.1. Conditional GAN for Environment Extraction

4.1.1. Basic Structure of Conditional GAN

4.1.2. Structure of Proposed Method

5. Performance Evaluation

5.1. Performance Evaluation of the Proposed Method

5.2. Comparison between the Proposed Method and Conventional Methods

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CAU | Chung-Ang University |
| CGAN | Conditional generative adversarial network |
| DBSCAN | Density-based spatial clustering of applications with noise |
| FOV | Field of view |
| FMCW | Frequency-modulated continuous wave |
| GAN | Generative adversarial network |
| KAU | Korea Aerospace University |
| KNN | k-nearest neighbor |
| MIMO | Multiple-input and multiple-output |
| ReLU | Rectified linear unit |
| SC | Structural content |
| SSIM | Structural similarity index measure |


| Detection Mode | Long-Range Mode | Short-Range Mode |
|---|---|---|
| Bandwidth, B (GHz) | 1.5 | 3 |
| Number of chirps | 256 | 256 |
| Number of time samples | 128 | 128 |
| Maximum detectable range (m) | 20 | 10 |
| Range resolution (m) | 0.1 | 0.05 |
| FOV (°) | | |
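The range resolution in the radar table follows from the chirp bandwidth alone, ΔR = c/(2B): 1.5 GHz gives about 0.1 m and 3 GHz about 0.05 m. The sketch below checks this and also shows the standard FMCW beat-frequency-to-range mapping, R = c·f_b·T/(2B), for a linear chirp of duration T. The chirp duration (50 µs) and the beat frequency are hypothetical values, since the table does not list them.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_resolution(bandwidth_hz):
    """FMCW range resolution, delta_R = c / (2B), in metres."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


def beat_frequency_to_range(beat_hz, bandwidth_hz, chirp_duration_s):
    """Target range for a linear chirp of slope B / T: R = c * f_b / (2 * slope)."""
    slope_hz_per_s = bandwidth_hz / chirp_duration_s
    return SPEED_OF_LIGHT * beat_hz / (2.0 * slope_hz_per_s)


for mode, bandwidth in (("Long-range", 1.5e9), ("Short-range", 3.0e9)):
    print(f"{mode}: {range_resolution(bandwidth):.3f} m")
# Long-range: 0.100 m
# Short-range: 0.050 m

# Hypothetical 50 us chirp in short-range mode: a 2 MHz beat tone maps to about 5 m.
print(f"{beat_frequency_to_range(2.0e6, 3.0e9, 50e-6):.3f} m")  # 4.997 m
```

Doubling the bandwidth halves ΔR, which is why the short-range mode resolves targets twice as finely as the long-range mode.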

| Parameter | Value |
|---|---|
| Size of generator input layer | 3 |
| Size of generator output layer | 3 |
| Activation function of generator | ReLU |
| Size of discriminator input layer | 3 |
| Size of discriminator output layer | 1 |
| Activation function of discriminator | ReLU |
| Size of training dataset | 5000 |
| Number of epochs | 100 |
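The parameter table lists the generator and discriminator settings but not the training objective. For reference, the standard conditional GAN objective (Mirza and Osindero; applied to image-to-image translation by Isola et al.) is given below, where x is the conditioning input (presumably the radar-based map containing ghost targets), y is the target image (the actual environment structure), and z is a noise vector. Whether the authors add further terms, such as the L1 reconstruction term used by Isola et al., is not stated in this excerpt.

```latex
\mathcal{L}_{\mathrm{cGAN}}(G, D)
  = \mathbb{E}_{x,y}\left[\log D(x, y)\right]
  + \mathbb{E}_{x,z}\left[\log\left(1 - D\left(x, G(x, z)\right)\right)\right],
\qquad
G^{*} = \arg\min_{G}\,\max_{D}\;\mathcal{L}_{\mathrm{cGAN}}(G, D).
```

The discriminator, with its single output, scores how likely a pair (x, y) is to be real, while the generator tries to produce G(x, z) that the discriminator cannot distinguish from y.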

| Measurement Environment | E1 | E2 | E3 |
|---|---|---|---|
| Proposed method | 0.9530 | 0.9562 | 0.9653 |
| KNN algorithm | 0.9036 | 0.9485 | 0.9361 |
| Hough transform | 0.8711 | 0.9388 | 0.9852 |
| DBSCAN | 0.8971 | 0.9463 | 0.9359 |

| Measurement Environment | E1 | E2 | E3 |
|---|---|---|---|
| Proposed method | 0.9935 | 0.9987 | 1.0001 |
| KNN algorithm | 0.9822 | 0.9927 | 0.9832 |
| Hough transform | 0.9896 | 0.9852 | 0.9855 |
| DBSCAN | 0.9836 | 0.9930 | 0.9833 |
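The two score tables compare the proposed method with the KNN, Hough transform, and DBSCAN baselines, but the metric each table reports is not labelled in this excerpt. Because SSIM cannot exceed 1, the table containing 1.0001 presumably reports SC and the other SSIM; this is an inference, not a statement from the source. The sketch below computes both metrics on flattened images stored as Python lists, assuming SC is defined as Σx²/Σy² with x the ground-truth image and y the extracted one (so values near 1 are best and values slightly above 1 are possible). The helper names are hypothetical, and the SSIM here uses a single window over the whole image rather than the usual average over local windows.

```python
def _mean(values):
    return sum(values) / len(values)


def global_ssim(reference, test, dynamic_range=1.0):
    """SSIM evaluated over a single window covering the whole image.

    Standard SSIM averages this expression over local (e.g. 11 x 11 Gaussian)
    windows; the single-window form is kept here for brevity.
    """
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    mu_x, mu_y = _mean(reference), _mean(test)
    var_x = _mean([(a - mu_x) ** 2 for a in reference])
    var_y = _mean([(b - mu_y) ** 2 for b in test])
    cov_xy = _mean([(a - mu_x) * (b - mu_y) for a, b in zip(reference, test)])
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


def structural_content(reference, test):
    """SC = sum(x^2) / sum(y^2); equals 1 when both images carry the same energy."""
    return sum(a * a for a in reference) / sum(b * b for b in test)


# Flattened 3 x 3 binary maps: ground-truth walls vs. an extraction with one extra ghost pixel.
truth = [1, 0, 0, 1, 0, 0, 1, 1, 1]
extracted = [1, 0, 0, 1, 1, 0, 1, 1, 1]
print(structural_content(truth, extracted))  # 0.8333333333333334
print(global_ssim(truth, extracted) < 1.0)  # True
```

A leftover ghost pixel adds energy to the extracted map, pulling SC below 1, while a map that misses structure pushes SC above 1; SSIM penalizes both.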


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cho, S.; Kwak, S.; Lee, S. Instantaneous Extraction of Indoor Environment from Radar Sensor-Based Mapping. Remote Sens. 2024, 16, 574. https://doi.org/10.3390/rs16030574


Cho, Seonmin, Seungheon Kwak, and Seongwook Lee. 2024. "Instantaneous Extraction of Indoor Environment from Radar Sensor-Based Mapping" Remote Sensing 16, no. 3: 574. https://doi.org/10.3390/rs16030574