Water Stream Extraction via Feature-Fused Encoder-Decoder Network Based on SAR Images

Abstract

1. Introduction

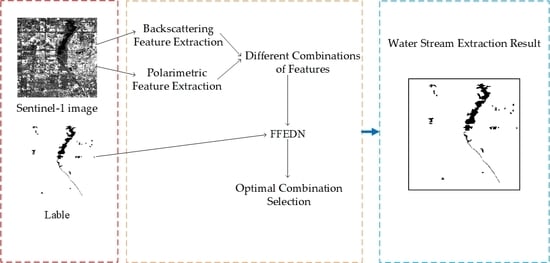

- The water features contained in the backscattering features (BFs) and polarimetric features (PFs) are fully integrated through a feature-fused block, and the influence of different feature combinations on water stream extraction is explored. In particular, the influence of PFs obtained from the recently proposed model-based decomposition for dual-pol SAR images is discussed for the first time in the task of water stream extraction.

- An effective water stream extraction model, the feature-fused encoder-decoder network (FFEDN), is proposed. Its strong feature-learning and feature-fusion capabilities improve extraction accuracy.

2. Materials and Methods

2.1. Study Area

2.2. Data and Pre-Processing

2.2.1. Sentinel-1A Data and Pre-Processing

2.2.2. Ground Truth Data and Pre-Processing

2.3. Water Stream Extraction

2.3.1. Feature Acquisition and Combination

2.3.2. FFEDN Model

2.3.3. Optimal Combination Selection

3. Results

3.1. Implementation Details

3.2. Evaluation of Different Combinations with FFEDN

3.2.1. Qualitative Evaluation

3.2.2. Quantitative Evaluation

3.3. Ablation Study of FFEDN with Feature Combination C

3.3.1. Qualitative Evaluation

3.3.2. Quantitative Evaluation

3.4. Evaluation of Different Methods with Feature Combination C

3.4.1. Compared Methods

3.4.2. Qualitative Evaluation

3.4.3. Quantitative Evaluation

3.5. Extraction Result of Study Area

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Acquisition Date | Range Spacing (m) | Azimuth Spacing (m) | Orbit Direction | Processing Level |
|---|---|---|---|---|---|
| 1 | 14 May 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 2 | 14 May 2021 | 2.33 | 13.95 | Ascending | L1-SLC (IW) |
| 3 | 14 May 2021 | 2.33 | 13.95 | Ascending | L1-SLC (IW) |
| 4 | 14 May 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 5 | 21 May 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 6 | 4 June 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 7 | 4 June 2021 | 2.33 | 13.93 | Ascending | L1-SLC (IW) |
| 8 | 20 July 2021 | 2.33 | 13.95 | Ascending | L1-SLC (IW) |
| 9 | 20 July 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 10 | 22 July 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 11 | 27 July 2021 | 2.33 | 13.95 | Ascending | L1-SLC (IW) |
| 12 | 27 July 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |
| 13 | 27 July 2021 | 2.33 | 13.95 | Ascending | L1-SLC (IW) |
| 14 | 27 July 2021 | 2.33 | 13.93 | Ascending | L1-SLC (IW) |
| 15 | 27 July 2021 | 2.33 | 13.94 | Ascending | L1-SLC (IW) |

| Label | Type | Total Number of Samples | Number of Training Samples | Number of Test Samples |
|---|---|---|---|---|
| 0 | Water | 239,232,614 | 191,386,092 | 47,846,522 |
| 1 | Non-Water | 1,355,651,482 | 1,084,521,186 | 271,130,296 |
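
The sample counts above can be checked with a few lines of arithmetic. The sketch below uses only the numbers in the table and confirms that each class is split about 80/20 between training and test pixels, and that water accounts for roughly 15% of all labeled pixels, a class imbalance worth keeping in mind when reading the accuracy figures. The dictionary layout and the function name `split_summary` are illustrative, not taken from the paper.

```python
# Pixel counts copied from the sample table (label 0 = water, 1 = non-water).
counts = {
    "water": {"total": 239_232_614, "train": 191_386_092, "test": 47_846_522},
    "non_water": {"total": 1_355_651_482, "train": 1_084_521_186, "test": 271_130_296},
}


def split_summary(counts):
    """Return the training fraction per class and the overall share of water pixels."""
    train_fraction = {}
    for cls, c in counts.items():
        # Training and test subsets should partition the class total exactly.
        assert c["train"] + c["test"] == c["total"], cls
        train_fraction[cls] = c["train"] / c["total"]
    all_pixels = sum(c["total"] for c in counts.values())
    water_share = counts["water"]["total"] / all_pixels
    return train_fraction, water_share


train_fraction, water_share = split_summary(counts)
for cls, frac in train_fraction.items():
    print(f"{cls}: {frac:.4f} of pixels used for training")
print(f"water share of all pixels: {water_share:.4f}")
```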

| Feature Combination | BFs | | PFs | | |
|---|---|---|---|---|---|
| Combination A | √ | √ | | | |
| Combination B | √ | √ | √ | | |
| Combination C | √ | √ | √ | | |
| Combination D | √ | √ | √ | | |
| Combination E | √ | √ | √ | √ | √ |

| Layer | Parameters | Output shape |
|---|---|---|
| Input (BF branch) | BFs | (1024,1024,2) |
| Conv2D (BF branch) | Filters = 64, kernel_size = 3, activation = 'ReLU', BatchNormalization | (1024,1024,64) |
| Conv2D (BF branch) | Filters = 64, kernel_size = 3, activation = 'ReLU', BatchNormalization | (1024,1024,64) |
| Input (PF branch) | PFs | (1024,1024,3) |
| Conv2D (PF branch) | Filters = 64, kernel_size = 3, activation = 'ReLU', BatchNormalization | (1024,1024,64) |
| Conv2D (PF branch) | Filters = 64, kernel_size = 3, activation = 'ReLU', BatchNormalization | (1024,1024,64) |
| Concat | - | (1024,1024,128) |
| MaxPooling2D | Kernel_size = 2 | (512,512,128) |
| Conv2D | Filters = 256, kernel_size = 3, activation = 'ReLU', BatchNormalization | (512,512,256) |
| MaxPooling2D | Kernel_size = 2 | (256,256,256) |
| Conv2D | Filters = 512, kernel_size = 3, activation = 'ReLU', BatchNormalization | (256,256,512) |
| Up-Sampling | Kernel_size = 2 | (512,512,512) |
| Pyramid Pooling Module | Kernel_size = 1, 2, 3, 6 | (512,512,512) |
| Attention Block | - | (512,512,512) |
| Conv2D | Filters = 256, kernel_size = 3, activation = 'ReLU', BatchNormalization | (512,512,256) |
| Up-Sampling | Kernel_size = 2 | (1024,1024,256) |
| Conv2D | Filters = 128, kernel_size = 3, activation = 'ReLU', BatchNormalization | (1024,1024,128) |
| Conv | kernel_size = 1, activation = 'Sigmoid' | (1024,1024,1) |
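
The architecture table gives only the pooling sizes (1, 2, 3, 6) for the pyramid pooling module. A minimal NumPy sketch follows, assuming a PSPNet-style module (Zhao et al., 2017): the feature map is average-pooled onto 1×1, 2×2, 3×3 and 6×6 grids, each pooled map is reduced by a 1×1 convolution, upsampled back, concatenated with the input, and projected to the output width. Random weights stand in for learned ones, nearest-neighbour upsampling replaces whatever interpolation FFEDN actually uses, and the function names and the channel-reduction ratio are assumptions rather than the authors' implementation.

```python
import numpy as np


def adaptive_avg_pool(x, bins):
    """Average-pool an (H, W, C) feature map onto a bins x bins grid."""
    h, w, c = x.shape
    out = np.empty((bins, bins, c), dtype=np.float64)
    for i in range(bins):
        # Floor start and ceil end, so every cell is non-empty even when H % bins != 0.
        r0, r1 = (i * h) // bins, -(-((i + 1) * h) // bins)
        for j in range(bins):
            c0, c1 = (j * w) // bins, -(-((j + 1) * w) // bins)
            out[i, j] = x[r0:r1, c0:c1].mean(axis=(0, 1))
    return out


def upsample_nearest(x, h, w):
    """Nearest-neighbour upsampling of a (b, b, C) grid back to (h, w, C)."""
    bh, bw, _ = x.shape
    rows = (np.arange(h) * bh) // h
    cols = (np.arange(w) * bw) // w
    return x[rows][:, cols]


def pyramid_pooling(x, bin_sizes=(1, 2, 3, 6), out_channels=None, seed=0):
    """PSPNet-style pyramid pooling on an (H, W, C) map (illustrative, untrained)."""
    h, w, c = x.shape
    out_channels = c if out_channels is None else out_channels
    reduced = max(c // len(bin_sizes), 1)  # assumed channel reduction per branch
    rng = np.random.default_rng(seed)
    branches = [x]
    for b in bin_sizes:
        pooled = adaptive_avg_pool(x, b)
        # A 1x1 convolution is a per-pixel matrix product; random weights replace learned ones.
        w_reduce = rng.standard_normal((c, reduced)) / np.sqrt(c)
        pooled = np.maximum(pooled @ w_reduce, 0.0)  # ReLU
        branches.append(upsample_nearest(pooled, h, w))
    fused = np.concatenate(branches, axis=-1)  # C + len(bin_sizes) * reduced channels
    w_out = rng.standard_normal((fused.shape[-1], out_channels)) / np.sqrt(fused.shape[-1])
    return fused @ w_out


# Small example; in FFEDN the module would run on a (512, 512, 512) tensor.
feat = np.random.default_rng(1).standard_normal((12, 12, 16))
out = pyramid_pooling(feat)
print(out.shape)  # (12, 12, 16)
```

Because the final 1×1 projection maps back to the input width, the output keeps the input's shape, which matches the (512,512,512) input and output of the Pyramid Pooling Module row.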

| | | Generated Label: Water | Generated Label: Non-Water |
|---|---|---|---|
| Ground truth | Water | True Positive (TP) | False Negative (FN) |
| Ground truth | Non-Water | False Positive (FP) | True Negative (TN) |
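
The confusion-matrix entries above are the basis of the Precision, Recall, IoU, OA and AA scores reported in the results tables. A minimal sketch of those formulas follows, treating water as the positive class. Precision, recall, IoU and OA use their standard definitions; computing AA as the mean of the water and non-water accuracies is an assumption, since this excerpt does not define it. The counts in the example are toy values.

```python
def segmentation_metrics(tp, fn, fp, tn):
    """Water-class metrics from the TP/FN/FP/TN counts of the confusion matrix."""
    precision = tp / (tp + fp)              # share of predicted water that is truly water
    recall = tp / (tp + fn)                 # share of true water that was detected
    iou = tp / (tp + fp + fn)               # intersection over union for the water class
    oa = (tp + tn) / (tp + fn + fp + tn)    # overall accuracy over all pixels
    # Assumption: AA = mean of per-class accuracies (water recall and non-water recall).
    specificity = tn / (tn + fp)
    aa = (recall + specificity) / 2
    return {"precision": precision, "recall": recall, "iou": iou, "oa": oa, "aa": aa}


m = segmentation_metrics(tp=8, fn=2, fp=2, tn=88)
print({k: round(v, 4) for k, v in m.items()})
```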

| | | Extraction Result 2: Correct | Extraction Result 2: Incorrect | Total |
|---|---|---|---|---|
| Extraction Result 1 | Correct | | | |
| Extraction Result 1 | Incorrect | | | |
| | Total | | | |
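
The 2×2 table above cross-tabulates pixels that two extraction results classify correctly or incorrectly. McNemar's test uses only the two off-diagonal cells: b, pixels correct in result 1 but incorrect in result 2, and c, the reverse. The sketch below assumes the usual chi-square form with one degree of freedom; whether the paper applies the continuity correction is not stated in this excerpt, so it is exposed as a flag. With one degree of freedom, a statistic above about 3.84 corresponds to p < 0.05.

```python
import math


def mcnemar(b, c, continuity=True):
    """McNemar chi-square statistic (1 d.o.f.) and its p-value.

    b: pixels correct in result 1 but incorrect in result 2
    c: pixels incorrect in result 1 but correct in result 2
    """
    if b + c == 0:
        return 0.0, 1.0  # no discordant pixels: no evidence of a difference
    diff = abs(b - c) - (1 if continuity else 0)
    chi2 = max(diff, 0) ** 2 / (b + c)
    # Survival function of chi-square with 1 d.o.f.: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p = mcnemar(b=30, c=10, continuity=False)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}, significant at 0.05: {chi2 > 3.841}")
```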

| Configuration | Specification |
|---|---|
| GPU | GeForce RTX 3080 Ti |
| Memory | 64 GB |
| Language | Python 3.8.3 |
| Framework | TensorFlow 1.14.0 |

| Feature Combination | Precision | Recall | IoU | OA | AA |
|---|---|---|---|---|---|
| Combination A | 81.63% | 79.50% | 67.43% | 80.29% | 80.31% |
| Combination B | 83.52% | 87.73% | 74.79% | 85.92% | 86.01% |
| Combination C | 95.21% | 91.79% | 87.73% | 93.35% | 93.41% |
| Combination D | 89.73% | 81.66% | 74.68% | 84.79% | 85.13% |
| Combination E | 88.14% | 87.02% | 77.90% | 87.50% | 87.50% |
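
Precision, recall and IoU all share the TP count, so when they are computed from the same pooled confusion matrix, IoU follows from the other two. Writing FP = TP(1/P - 1) and FN = TP(1/R - 1) gives IoU = 1/(1/P + 1/R - 1) = PR/(P + R - PR). The short check below applies this identity to the feature-combination table; the derived values agree with the reported IoU to within about 0.0001, i.e. the rounding of the percentages. The function name is illustrative.

```python
# (precision, recall, reported IoU) as fractions, copied from the feature-combination table.
results = {
    "A": (0.8163, 0.7950, 0.6743),
    "B": (0.8352, 0.8773, 0.7479),
    "C": (0.9521, 0.9179, 0.8773),
    "D": (0.8973, 0.8166, 0.7468),
    "E": (0.8814, 0.8702, 0.7790),
}


def iou_from_precision_recall(p, r):
    """IoU = TP / (TP + FP + FN), rewritten in terms of precision p and recall r."""
    return p * r / (p + r - p * r)


for name, (p, r, reported) in results.items():
    derived = iou_from_precision_recall(p, r)
    print(f"Combination {name}: derived IoU {derived:.4f}, reported {reported:.4f}")
```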

| Compared Combinations | McNemar Test Value |
|---|---|
| Combination A and B | 52.67 |
| Combination A and C | 107.92 |
| Combination A and D | 61.75 |
| Combination A and E | 56.01 |

| Strategy | Method | PPM | ABL |
|---|---|---|---|
| Strategy A | FFEDN | √ | √ |
| Strategy B | FFEDN (w/o) PPM | - | √ |
| Strategy C | FFEDN (w/o) ABL | √ | - |
| Strategy D | FFEDN (w/o) PPM and ABL | - | - |

| Strategy | Precision | Recall | IoU | OA | AA |
|---|---|---|---|---|---|
| Strategy A | 95.21% | 91.79% | 87.73% | 93.35% | 93.41% |
| Strategy B | 89.17% | 85.51% | 75.01% | 85.20% | 81.92% |
| Strategy C | 87.36% | 86.69% | 76.57% | 86.64% | 86.01% |
| Strategy D | 83.15% | 81.39% | 68.85% | 81.19% | 79.62% |

| Classifier | Parameter | Description | Value |
|---|---|---|---|
| SVM | C | Penalty coefficient | 2 |
| SVM | Kernel | Kernel function | RBF |
| RF | N_estimators | Number of decision trees | 550 |
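
The hyperparameters in this table map directly onto common library options. As an illustration, assuming scikit-learn (this excerpt does not say which implementation of the baselines was used), the two pixel-wise classifiers could be configured as below. All other settings are scikit-learn defaults, and the five-feature toy data, standing in for, e.g., two BFs and three PFs per pixel, is synthetic.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.svm import SVC

# Hyperparameters from the classifier table; everything else stays at library defaults.
svm = SVC(C=2, kernel="rbf")
rf = RandomForestClassifier(n_estimators=550, random_state=0)

# Synthetic per-pixel feature vectors with labels 0 = water, 1 = non-water.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1.0, 0.5, (50, 5)), rng.normal(1.0, 0.5, (50, 5))])
y = np.array([0] * 50 + [1] * 50)

for name, clf in (("SVM", svm), ("RF", rf)):
    clf.fit(X, y)
    print(name, "training accuracy:", clf.score(X, y))
```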

| Methods | Precision | Recall | IoU | OA | AA |
|---|---|---|---|---|---|
| FFEDN | 95.21% | 91.79% | 87.73% | 93.35% | 93.41% |
| RF | 78.99% | 73.56% | 61.38% | 75.15% | 75.30% |
| SVM | 76.29% | 73.49% | 59.83% | 74.39% | 74.43% |
| U-Net | 87.85% | 78.94% | 71.17% | 82.21% | 82.62% |
| U-Net-ResNet | 84.99% | 83.07% | 72.44% | 83.84% | 83.85% |
| MSF-MLSAN | 90.14% | 85.55% | 78.23% | 87.46% | 87.56% |
| WENET | 89.21% | 84.43% | 76.61% | 86.38% | 86.50% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuan, D.; Wang, C.; Wu, L.; Yang, X.; Guo, Z.; Dang, X.; Zhao, J.; Li, N. Water Stream Extraction via Feature-Fused Encoder-Decoder Network Based on SAR Images. Remote Sens. 2023, 15, 1559. https://doi.org/10.3390/rs15061559

Yuan D, Wang C, Wu L, Yang X, Guo Z, Dang X, Zhao J, Li N. Water Stream Extraction via Feature-Fused Encoder-Decoder Network Based on SAR Images. Remote Sensing. 2023; 15(6):1559. https://doi.org/10.3390/rs15061559

Yuan, Da, Chao Wang, Lin Wu, Xu Yang, Zhengwei Guo, Xiaoyan Dang, Jianhui Zhao, and Ning Li. 2023. "Water Stream Extraction via Feature-Fused Encoder-Decoder Network Based on SAR Images" Remote Sensing 15, no. 6: 1559. https://doi.org/10.3390/rs15061559