1. Introduction

The Common Agricultural Policy (CAP), provided for as early as 1957 in the Treaty of Rome (now the Treaty on the Functioning of the European Union), is one of the most important EU policies. This is not only because it receives more than one-third of the EU’s budget, but also because it addresses a range of socioeconomic, environmental, and political issues at both the EU and the national level. The CAP serves as an instrument for improving agricultural productivity and meeting the expected demand for affordable, high-quality food [1] while preserving the natural environment [2].

In Europe, the CAP is implemented at the national level through the Land Parcel Identification System (LPIS), which covers the terrestrial territory of each Member State (MS) [2]. LPIS is an information system that gathers information relevant to cropland at the parcel level [3]. Responsible authorities regularly use it to verify farmers’ applications and claims through administrative and on-the-spot checks, to verify eligibility criteria, and to test compliance with commitments and mandatory requirements [4]. The new regulation (Regulation (EU) 2018/746), adopted in 2018 for the CAP 2013–2020, shifts the verification of declarations from a sample-based approach to an all-inclusive monitoring approach.

Earth Observation (EO) is relevant not only to the verification of regulatory compliance. It is also crucial for providing explicit spatial information that is essential for rural land management, food security [5], and market trend forecasting and analysis. The impact of changes in land use, and consequently in land cover, over time receives increasing attention because it relates to climate change, biodiversity decline, the degradation of water resources and soil, and outbreaks of infectious disease [6]. Not surprisingly, cropland and land cover mapping based on EO data over rural areas has been an active field of research over the past five decades. Cropland occupies a significant area in most European countries, and its use reflects the evolution towards sustainable development and management of environmental resources [7,8]. This is why cropland is often studied in terms of its spatial pattern. Over the years, an increasing number of studies have developed and evaluated diverse methodological approaches aimed at providing reliable, highly accurate information on agricultural use and land cover.

Temporal and spatial information on crop distribution is essential [9,10] for facing several global challenges, such as climate change and increased food demand [11]. Satellite imagery provides data that allow near-real-time monitoring, supporting the early detection of land changes [12] and more effective land management. Manual field surveys demand effort and time and vary with the geographical region. Remote sensing, by comparison, allows the creation of more accurate crop maps [9] that capture the necessary details on the extent and type of agricultural fields. Advances in technology and the availability of remote sensing data make it easy to identify spatial and temporal differences in crop growth, health, and yield. Aerial imagery offers new possibilities, such as objectivity, timeliness, and coverage of hard-to-reach fields [13], and its analysis is faster and less costly than traditional methods of cropland mapping [14]. Agricultural data collected by ground surveys have low resolution. This limitation has been addressed by the development and availability of medium- and high-spatial-resolution satellite observations, which can cover broad areas of land across space and time [10]. Even where the resolution is not sufficient, images can be fused to increase it and identify ground objects at finer scales [15]. In most cases, cropland mapping does not extend to a global scale, because classification algorithms cannot map all existing crop types as distinct classes [16].

Early work in this domain relied on spectral information derived from single-date imagery acquired at critical growth stages, and on the assumption that land cover and crop types have distinct spectral signatures [17]. However, the spectral behavior of many crops is similar during the peak growing season. Identifying the “optimal” acquisition time is also challenging because of crop development dynamics and the associated spectral changes within short time intervals [18]. To overcome misclassifications arising from the use of single-date imagery, researchers have proposed classification approaches that exploit both the spectral and the temporal information found in multitemporal remote sensing data [17]. Multitemporal image acquisitions can be used to monitor and record spectral changes throughout the year, extracting information on vegetation seasonality, growth dynamics, and conditions [19]. Sentinel-2A and Sentinel-2B were launched in 2015 and 2017, respectively. Before then, multitemporal analysis for crop classification and rural mapping over large areas relied mainly on two data sources: MODIS, with high temporal but low spatial resolution, and Landsat, with medium temporal and spatial resolution [20]. Crop and rural mapping with such data is especially challenging in heterogeneous areas, since Mediterranean rural landscapes are characterized by high fragmentation, small agricultural holdings, crop diversity, and within-crop spectral variability due to local weather conditions and topography [18]. The Sentinel-2 constellation, by contrast, acquires images at 10 m spatial resolution every 5–6 days around the globe. It therefore has the potential to provide detailed maps of agricultural land cover, and it is defined as the basic source of EO information for the post-2020 Common Agricultural Policy [20,21].

The improved characteristics of the new satellite systems also call for new processing workflows that can support the analysis of spectral time series and streamline information extraction. Machine learning approaches such as the Random Forest (RF) algorithm have been employed successfully to exploit image time series in agricultural land cover studies [22,23]. In recent years, the EO community has increasingly used deep learning (DL) networks. These networks automate multi-level feature representation and extraction and enable end-to-end learning and information extraction. The analyst does not need to know the statistical distribution of the input data [24] or to introduce features or rules manually [25]. DL is often chosen as the preferred computing paradigm for several tasks because, in most cases, it outperforms manual approaches in terms of effectiveness and time [26]. Several DL architectures exist, such as deep belief networks [27,28], generative adversarial networks [29], transformers [30], and autoencoders [31,32]. Among them, convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are the most extensively used models for complex remote sensing applications [33,34]. Prominent examples include satellite image fusion for improved land use/land cover classification [35], object detection [36,37], and change detection [38,39] in remote sensing images, as well as the delineation of agricultural fields from satellite images [40]. CNNs appeal to the remote sensing community because they inherently exploit the two-dimensional structure of images and efficiently extract spectral and spatial features. RNNs, in turn, can handle sequential input with long-range dependencies, which makes them appropriate for analyzing the spectral–temporal information in time series stacks [19,34,41,42,43,44].

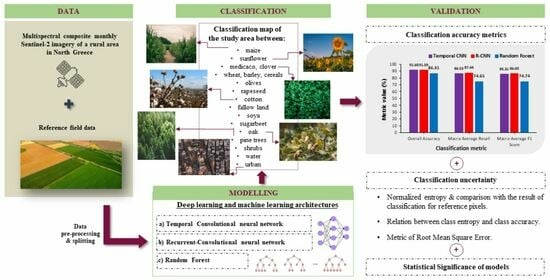

The data and the algorithm design are two of the most significant factors for the success of a classification [45]. In line with this evolution, this paper describes the development of two DL models for agricultural land cover and crop type mapping. The models use a detailed fifteen-class scheme in a heterogeneous area in Northern Greece and a time series of Sentinel-2 images acquired in 2020. The two DL models, a Temporal CNN and a recurrent-convolutional network (R-CNN), are compared against an RF classifier, a robust machine learning algorithm used extensively in remote sensing studies. Because the success of these models depends strongly on the data representation, a less common type of standardization was tested and finally applied to the reference instances. Modeling and prediction in the study area were limited to the spectral band measurements of the raw data, without integrating spectral indices derived from combinations of the existing bands. Overall, this study details the whole process of feature extraction, construction, and transformation while relying on as little satellite-specific knowledge as possible. Our study advances remote sensing mapping by employing an extended classification scheme in a complex, heterogeneous area, with a focus on multiple crop types. Reference data derived from farmers’ declarations have known accuracy and reliability issues [22,46,47,48]. To address them, we relied only on recently verified ground reference data from the state agency that manages such databases. The reference samples were used with optimized deep learning models to generate reliable and concrete findings. Finally, the novelty of our work is further reflected in our approach to assessing the results. Instead of relying solely on traditional measures of classification accuracy, we also calculate the Shannon entropy of the class probability estimates. This approach looks beyond conventional accuracy and also considers the entropy of all the probabilities, or votes, assigned to the different class labels for a pixel, which serves as an uncertainty measure. This dual analysis provides a comprehensive view of the classification and also introduces a novel way of interpreting accuracy within the field.
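The following minimal Python sketch (not the authors’ code) makes the uncertainty measure concrete by computing the Shannon entropy of a pixel’s class-probability vector. Dividing by log K, so that values fall in [0, 1], is an assumed normalization. The function name `normalized_entropy` and the example scores are hypothetical.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of one pixel's class scores, normalized by log(K).

    probs: K non-negative scores, e.g. softmax outputs of a neural network
    or the number of Random Forest trees voting for each class.
    Returns 0.0 for a fully confident prediction and 1.0 for a uniform one.
    """
    k = len(probs)
    if k < 2:
        raise ValueError("need at least two classes")
    total = sum(probs)
    # Rescale so the scores sum to 1 (needed when raw vote counts are passed)
    p = [v / total for v in probs]
    # Zero-probability classes are skipped, since p*log(p) -> 0 as p -> 0
    h = sum(-v * math.log(v) for v in p if v > 0)
    return h / math.log(k)


# Hypothetical 4-class examples
print(normalized_entropy([1.0, 0.0, 0.0, 0.0]))      # confident pixel
print(normalized_entropy([0.25, 0.25, 0.25, 0.25]))  # maximally uncertain pixel
print(normalized_entropy([70, 20, 10, 0]))           # RF votes from 100 trees
```

Applying the function to every pixel of a probability map gives an uncertainty map alongside the class map. For the neural networks the inputs would be softmax outputs; for RF they would be tree vote fractions.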

The paper is organized as follows. Section 2 presents a brief introduction to the CNN and RNN architectures. Section 3 presents the study area, the reference data, the pre-processing, and the validation approach for the developed DL architectures. Section 4 reports the performance of the predictive models through evaluation metrics, visual representations of the classification, and classification uncertainty. Section 5 discusses the results of this study in relation to earlier works. Finally, Section 6 summarizes the main findings and conclusions of the work.

All classification models were designed, trained, and evaluated efficiently on a CPU. The Python code (Python version 3.8.8) was written and executed in Jupyter Notebook.

2. Feedforward and Recurrent Neural Networks

Depending on the flow of signals, neural networks (NNs) are divided into two topologies: feedforward and recurrent NNs (Figure 1). This study examines both topologies, and the proposed architectures are presented in Section 3.

2.1. Feedforward Neural Networks and Temporal Convolutions

Feedforward neural networks consist of several layers, known as dense or fully-connected layers, each of which contains a certain number of units (neurons). Each unit is connected to all the units in the following layer, and each connection is characterized by a weight.

The output of most layers, depending on their type, is produced by applying a function called the activation function. Non-linear or piecewise linear activation functions are preferred so that the NN can learn non-linear and complex mappings between its inputs and outputs [49].

Suppose that there are $N$ training instances. The training set then includes all the pairs of the form $(\mathbf{X}_i, \mathbf{y}_i)$, $i = 1, \dots, N$, with $\mathbf{X}_i \in \mathbb{R}^{T \times D}$ and $\mathbf{y}_i \in \{0,1\}^{K}$. Here $T$ is the length of each $D$-variate input time series and $K$ is the number of classes. Each target vector $\mathbf{y}_i$ represents the desired output of the neural network. All its components are zero except one, which equals 1 and is placed at the index equal to the class label of the training instance (one-hot encoding).

The aim of training an NN is to estimate output vectors $\hat{\mathbf{y}}_i$ that approximate the corresponding target vectors $\mathbf{y}_i$ as closely as possible. For this reason, the fit of the model to the training data is assessed by minimizing an error function known as the Cross-Entropy:

$$L = -\frac{1}{N} \sum_{i=1}^{N} \sum_{k=1}^{K} y_{ik} \log \hat{y}_{ik}$$

For this purpose, the network parameters, i.e., weights and biases of all layers, are updated after each training step according to an optimization algorithm using a subset of training data.
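The one-hot targets and the cross-entropy criterion described above can be sketched in plain Python as follows. The softmax output layer is an assumption: it is the usual choice for multi-class classification, but this section does not state it. All names and values are illustrative.

```python
import math


def one_hot(label, num_classes):
    """Target vector: all zeros except a 1 at the index equal to the class label."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]


def softmax(logits):
    """Map raw network outputs to class probabilities (shifted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(targets, predictions, eps=1e-12):
    """Mean categorical cross-entropy over N instances.

    targets: list of one-hot target vectors; predictions: list of probability
    vectors of the same length. eps avoids log(0) for a zero probability.
    """
    total = 0.0
    for y, y_hat in zip(targets, predictions):
        total -= sum(yk * math.log(max(pk, eps)) for yk, pk in zip(y, y_hat))
    return total / len(targets)


# Hypothetical mini-batch: two instances of a 3-class problem
y = [one_hot(0, 3), one_hot(2, 3)]
y_hat = [softmax([2.0, 0.5, 0.1]), softmax([0.2, 0.3, 1.5])]
print(cross_entropy(y, y_hat))
```

Because each target vector is one-hot, only the log-probability of the true class contributes to the loss of each instance.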

In a temporal convolutional layer, a series of filters, known as convolutional kernels, is applied to the two-dimensional output of the previous layer. Each filter is a matrix of weights that slides only along the height (time axis) of the layer’s input. At each position it is multiplied element-wise with a patch of the same size, and the individual products are summed to produce a single number. The output of each filter is a one-dimensional feature map, whose size is given by

$$H_{l} = \left\lfloor \frac{H_{l-1} + 2P_{l} - F_{l}}{S_{l}} \right\rfloor + 1$$

The variable $H_{l-1}$ represents the height of the representation of the $(l-1)$th layer, whereas $F_{l}$ corresponds to the height of each filter. The width of each convolutional kernel coincides with that of the layer’s input, which is why it does not appear in the formula. Padding $P_{l}$ and stride $S_{l}$ are two additional hyper-parameters of the $l$th layer. Padding is the number of zeros added at each edge of the layer’s input, and stride is the step of the filter’s movement along one direction. Finally, $\lfloor \cdot \rfloor$ denotes the floor of its argument, i.e., the largest integer less than or equal to it.

Figure 2 depicts the application of three convolutional kernels to a multivariate time series. The output representation consists of three stacked one-dimensional feature maps.
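The sliding of a temporal kernel can be illustrated with a short Python sketch, which is a hypothetical example rather than the models’ actual layers. Each kernel spans the full width (all D variables) of a T × D series and moves only along the time axis. The number of outputs follows the standard size formula ⌊(H + 2P − F)/S⌋ + 1. The toy series, the kernels, and the function names are assumptions for illustration.

```python
import math


def conv_output_height(h_in, f, padding=0, stride=1):
    """Length of a temporal feature map: floor((H + 2P - F) / S) + 1."""
    return math.floor((h_in + 2 * padding - f) / stride) + 1


def temporal_conv(series, kernel, bias=0.0, padding=0, stride=1):
    """Slide one kernel along the time axis of a T x D multivariate series.

    series: T rows (time steps), each holding D values (e.g. band reflectances).
    kernel: F rows, each holding D weights, i.e. as wide as the input.
    Returns a one-dimensional feature map as a list of floats.
    """
    zeros = [0.0] * len(series[0])
    padded = [zeros] * padding + series + [zeros] * padding  # zero-pad both edges
    f = len(kernel)
    feature_map = []
    for start in range(0, len(padded) - f + 1, stride):
        patch = padded[start:start + f]
        # Element-wise product of kernel and patch, summed into a single number
        value = sum(w * x
                    for k_row, p_row in zip(kernel, patch)
                    for w, x in zip(k_row, p_row))
        feature_map.append(bias + value)
    return feature_map


# Hypothetical series: 6 time steps x 2 variables
series = [[0.1, 0.3], [0.2, 0.4], [0.5, 0.6], [0.7, 0.2], [0.3, 0.1], [0.2, 0.2]]
kernels = [
    [[1, 0], [1, 0], [1, 0]],   # sums variable 1 over 3 steps
    [[0, 1], [0, 1], [0, 1]],   # sums variable 2 over 3 steps
    [[-1, 0], [0, 0], [1, 0]],  # change in variable 1 across 3 steps
]
# Three kernels -> three stacked one-dimensional feature maps
feature_maps = [temporal_conv(series, k) for k in kernels]
print(len(feature_maps), len(feature_maps[0]), conv_output_height(6, 3))  # → 3 4 4
```

With padding 1 and stride 2, the same series would yield ⌊(6 + 2 − 3)/2⌋ + 1 = 3 outputs per kernel.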

2.2. Recurrent Layers and 2D Convolutions

Long Short-Term Memory (LSTM) networks, a particular class of RNNs, are built from memory blocks. These blocks overcome the limitation of learning only short-term dependencies. At any given time step, an LSTM unit consists of a memory cell and three gates: forget, input, and output. The forget gate determines how much of the information from previous time steps is retained. The input gate controls how much of the current input to the LSTM unit should pass into the memory cell and consequently be memorized and used. Finally, the output gate determines which part of the memory content is exposed as the output of the LSTM unit.
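To illustrate the gate mechanics, the sketch below implements one time step of a standard LSTM unit in plain Python. It follows the common textbook formulation without peephole connections; in the peephole variant, the gates also receive the cell state. The parameter layout, the names, and the random weights are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(w, v):
    """Multiply matrix w (list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a standard LSTM unit (no peephole connections).

    params maps 'W_g', 'U_g', 'b_g' to the input weights, recurrent weights
    and bias of gate g, for g in 'f' (forget), 'i' (input), 'o' (output)
    and 'c' (candidate memory content).
    """
    def pre_activation(g):
        wx = matvec(params['W_' + g], x)
        uh = matvec(params['U_' + g], h_prev)
        return [a + b + bias for a, b, bias in zip(wx, uh, params['b_' + g])]

    f = [sigmoid(z) for z in pre_activation('f')]  # how much of c_prev to keep
    i = [sigmoid(z) for z in pre_activation('i')]  # how much new content to write
    o = [sigmoid(z) for z in pre_activation('o')]  # how much memory to expose
    c_tilde = [math.tanh(z) for z in pre_activation('c')]  # candidate content
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# Hypothetical sizes and random weights: 3 input variables, 2 hidden units
random.seed(0)
n_in, n_hid = 3, 2
params = {}
for gate in 'fioc':
    params['W_' + gate] = [[random.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hid)]
    params['U_' + gate] = [[random.uniform(-0.5, 0.5) for _ in range(n_hid)] for _ in range(n_hid)]
    params['b_' + gate] = [0.0] * n_hid

h, c = [0.0] * n_hid, [0.0] * n_hid
for x_t in [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.2, 0.1, 0.0]]:  # 3 time steps
    h, c = lstm_step(x_t, h, c, params)
print(h, c)
```

Passing the memory cell `c` additively from one step to the next, scaled only by the forget gate, is what lets information persist over long sequences.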

In recent years, researchers have begun to study the efficiency of NNs that combine the forward-backward nature of LSTM NNs with the spatial convolutions of 2D convolutional networks. Such networks have been designed and tested in several remote sensing applications, from the classification of satellite images [50,51] to change detection in land cover [52].

A filter used in a 2D convolutional layer is a 3D matrix (height × width × depth) that is multiplied element-wise by same-sized patches of the layer’s input [53]. Its spatial dimensions are smaller than the corresponding dimensions of the layer’s input, so it slides over both the width and the height of the input volume. The weights are shared across all convolutions that use the same filter, which reduces the number of parameters compared with a fully-connected layer.
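A short sketch can check the effect of weight sharing on model size. The code below performs a valid (unpadded, stride-1) 2D convolution with a single height × width × depth filter. It then compares the parameter count of a convolutional layer with that of a fully-connected layer producing the same number of outputs. The patch size, the ten input bands, and the sixteen filters are hypothetical values chosen for illustration.

```python
def conv2d_single_filter(volume, kernel, bias=0.0):
    """Valid (no padding, stride 1) 2D convolution with one 3D filter.

    volume: H x W x C nested lists (height x width x depth).
    kernel: Fh x Fw x C nested lists; its depth equals the input depth.
    The same weights are reused at every position (weight sharing).
    Returns an (H - Fh + 1) x (W - Fw + 1) feature map.
    """
    h, w = len(volume), len(volume[0])
    fh, fw = len(kernel), len(kernel[0])
    out = []
    for r in range(h - fh + 1):          # slide over the height
        row = []
        for s in range(w - fw + 1):      # slide over the width
            acc = bias
            for a in range(fh):
                for b in range(fw):
                    # element-wise product across the full depth of the patch
                    acc += sum(k * v for k, v in zip(kernel[a][b], volume[r + a][s + b]))
            row.append(acc)
        out.append(row)
    return out


def conv_params(fh, fw, depth, n_filters):
    """Trainable parameters of a 2D conv layer: one shared filter plus a bias per map."""
    return (fh * fw * depth + 1) * n_filters


def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully-connected layer: weights plus biases."""
    return (n_inputs + 1) * n_units


# Hypothetical 5 x 5 patch with 10 bands, 16 filters of size 3 x 3
print(conv_params(3, 3, 10, 16))               # → 1456
# A dense layer mapping the flattened patch to the same 3 x 3 x 16 outputs
print(dense_params(5 * 5 * 10, 3 * 3 * 16))    # → 36144
```

With these hypothetical settings, the convolutional layer needs 1,456 trainable parameters, compared with 36,144 for a dense layer of equal output size.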

5. Discussion

The post-2020 reform of the CAP is based on the use of Earth Observation (EO) data in the crop-monitoring and eligibility verification process [20]. The EU’s Copernicus programme is the main source of information supporting the implementation of the new CAP. It provides freely distributed data with increased revisit capacity, increased spatial and spectral resolution, and systematic and frequent global coverage. The focus of this study was the development of two supervised DL classification methods for agricultural land cover mapping and crop classification using a Sentinel-2 image time series. In addition, we compared the two neural network structures with a well-established RF algorithm, which we selected because prior research has shown that it produces successful results [87,88]. Developing the architectures of the two DL models was not a trivial task. Identifying the optimal types of layers and the proper network structure (i.e., hyperparameters) is a rather subjective procedure that does not follow standard approaches [19]. After determining the parameter values by cross-validation, we trained all three classifiers and then tested their performance on the remaining test pixels.

The complexity of agriculture from a remote sensing perspective justifies the use of monthly data. Different crop types usually follow different phenological cycles, and their growth stages and periods do not coincide. Even land parcels of the same crop type may exhibit dissimilar temporal and spectral behavior [87]. Many remote sensing studies carry out multitemporal classification using images acquired on specific dates or in specific seasons [89,90]. These dates are chosen either according to the phenological stages of the crops or by certain algorithms. However, this practice can hinder the transferability of the methodology to another region, because imagery acquired on the chosen dates may be unsuitable for the task, for example because of cloudy conditions or similar spectral signatures between classes [91]. Thus, to facilitate the discrimination of crop types and support an operational land cover classification, we covered the entire annual time span on a monthly basis.

The choice of neural network types was deliberate. Temporal CNNs have been shown to work well with sequential data. 2D CNNs gain this ability, in addition to automatic spatial feature extraction, when their architecture is combined with recurrent layers. Moreover, LSTMs learn both short-term and long-term dependencies and largely mitigate the vanishing gradient problem. Nevertheless, the classification scheme adopted in our study is challenging, since only 10 of the 15 classes correspond to crops. In such a case, DL algorithms that focus only on temporal feature representation might introduce errors, because they neglect the spatial patterns in the 10 m data over the complex Mediterranean rural landscape.

The results indicated that both DL models performed very well, with the R-CNN architecture slightly superior to the Temporal CNN, while RF did not reach their classification performance. The combination of LSTM units with peephole connections and 2D convolutions achieved the highest macro average F1 Score in the validation set, 86.03%, compared with 85.31% for the Temporal CNN architecture. Zhong et al. [19] classified a 13-class crop scheme using enhanced vegetation index image stacks. In their study, a Conv1D-based model achieved higher accuracy than the best LSTM-based model, although both models shared the same dropout and fully-connected layer settings. Both the Conv1D and the combined LSTM-Conv2D models also outperformed the non-deep learning architecture. Similarly, Zhao et al. [92] discriminated six different crops and forested areas in North China using dense Sentinel-2 time series, and found that a 1D CNN slightly outperformed the LSTM models.

Both models attained similar classification accuracies. However, the assessment of classification uncertainty indicated that the Temporal CNN model classified individual pixels with lower uncertainty than the R-CNN model. As earlier studies highlight, CNN models also have lower computational and time costs than LSTMs [14].

Regarding the input features, both models were developed using only the original bands of the Sentinel-2 images. Since our study involved the optimization and comparison of different algorithms, we did not compute or use spectral indices. This decision relied on previous research suggesting that additional features increase the input dimension, and consequently the computational cost, without significantly improving classification accuracy [91]. Cai et al. [17] and Xin et al. [93] also found limited improvement in classification accuracy when they used spectral indices along with the original bands for crop mapping.

However, this might not always be the case, and a significant body of literature has underlined the merits of including spectral indices in the classification process. Zhong et al. [19] found that Temporal CNN-based models using a single spectral index as input outperformed the full-band models. Yao et al. [94], through a feature space analysis, found that the spectral information of the original bands was not adequate to distinguish crops. In their study, the original and modified (red-edge-based) NDVI indices were important for increasing class separability. In a Europe-wide crop type mapping study, yearly indicators based on spectral indices were deemed necessary for securing satisfactory classification accuracy compared with the original bands [95]. The importance of spectral indices as features for crop type mapping has also been identified in a study using lower spatial resolution (i.e., MODIS) time series data for crop classification in the USA [96]. Based on these findings, and to address this limitation of our study, we will extend our approach in future work by exploring in detail the importance of spectral indices as explanatory features for increasing classification accuracy.
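If spectral indices are added in future work, a simple option is to append them as extra variables at each time step. The sketch below does this for NDVI, computed from Sentinel-2 band B8 (near-infrared) and band B4 (red). The band order, the function names, and the reflectance values are assumptions; the study itself used only the original bands.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index, (NIR - Red) / (NIR + Red)."""
    denom = nir + red
    # Returning 0.0 when both reflectances are zero is an arbitrary choice
    return (nir - red) / denom if denom != 0 else 0.0


def add_ndvi_feature(series, band_names):
    """Append NDVI to every time step of one pixel's T x D band time series.

    series: T rows of reflectances ordered as band_names.
    Assumes Sentinel-2 bands 'B8' (near-infrared) and 'B4' (red) are present.
    """
    i_nir, i_red = band_names.index('B8'), band_names.index('B4')
    return [row + [ndvi(row[i_nir], row[i_red])] for row in series]


# Hypothetical pixel: 2 time steps, 4 bands
bands = ['B2', 'B3', 'B4', 'B8']
series = [[0.05, 0.08, 0.06, 0.30], [0.04, 0.07, 0.04, 0.40]]
augmented = add_ndvi_feature(series, bands)
print(augmented[0])
```

The input then grows from D to D + 1 variables per time step, which is the increase in input dimension that the cited studies weigh against any accuracy gain.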

Earlier studies have developed DL models based on time series stacks of the original satellite images after cloud filtering (individual-image approach) [17,97]. In our study, we employed a monthly composite method to minimize the data volume of the medium-to-high spatial resolution Sentinel-2 images and the associated computational cost. Based on the classification accuracy metrics, this method performed similarly to the individual-image approach. In addition, a temporal composite approach might involve fewer uncertainties than, for example, gap-filling interpolation methods, which may introduce erroneous observations into the time series and affect the robustness of the results [17].
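A monthly composite of this kind can be sketched per pixel and per band as shown below. This section does not state which compositing statistic was used, so the per-month median of cloud-free observations is an assumption, as are the input format and the example values. Months without any clear observation are left empty rather than interpolated.

```python
from datetime import date
from statistics import median


def monthly_composite(observations):
    """Per-month median of cloud-free observations for one pixel and one band.

    observations: (acquisition_date, value, is_cloudy) tuples.
    Returns {month_number: median_value}; months without any clear
    observation are omitted instead of being gap-filled.
    """
    by_month = {}
    for day, value, cloudy in observations:
        if cloudy:
            continue  # drop cloud-contaminated acquisitions
        by_month.setdefault(day.month, []).append(value)
    return {month: median(values) for month, values in sorted(by_month.items())}


# Hypothetical reflectance observations of one band at one pixel
obs = [
    (date(2020, 1, 3), 0.21, False),
    (date(2020, 1, 13), 0.80, True),   # cloudy, ignored
    (date(2020, 1, 28), 0.25, False),
    (date(2020, 2, 7), 0.30, False),
    (date(2020, 2, 17), 0.28, False),
    (date(2020, 2, 22), 0.35, False),
]
print(monthly_composite(obs))
```

The median is robust to a residual cloudy or shadowed observation that the mask missed. A mean or a maximum-NDVI rule would be common alternatives.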

The two DL models evaluated do not rely on expert rules or shape-fitting functions to incorporate information on temporal growth dynamics into the classification process. They thus provide a robust, end-to-end approach to agricultural land cover mapping [19].

6. Conclusions

Despite the scarcity of training samples and the diversity of crops in the study region, the classification achieved relatively high accuracy. Two modern time series classification models were compared with Random Forest, one of the most commonly used machine learning algorithms in the remote sensing community. These models were a Temporal Convolutional Neural Network and a 2D Convolutional Neural Network that includes a layer of LSTM units with peephole connections. For this purpose, we proposed a novel multilevel methodological approach for land cover and crop type classification. It covers all the steps, from downloading and transforming raw satellite imagery to predicting and monitoring the spatial distribution of land cover classes over the whole study area.

The way the reference instances are split into training, validation, and test subsets is crucial for the efficiency of feature learning and land cover estimation. In general, it is more prudent to split the available data at the parcel (object) level, taking into account how parcels are distributed among the classes. The primary reason is that each object consists of pixels with similar spectral signatures. Suppose the model is trained on some pixels inside an object and then predicts the class of another pixel in the same object. The within-object variability of the pixels’ features is then so small that the training and test instances cannot be considered independent, and the estimated accuracy would be overly optimistic.
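A parcel-level, class-stratified split of this kind can be sketched as follows. The sketch assigns whole parcels to either the training or the test subset, so no parcel contributes pixels to both. The data layout (one parcel ID per pixel plus a parcel-to-class lookup), the 30% test fraction, and the example parcels are hypothetical.

```python
import random


def split_by_parcel(parcel_ids, parcel_class, test_fraction=0.3, seed=0):
    """Class-stratified train/test split at the parcel (object) level.

    parcel_ids: one parcel identifier per pixel.
    parcel_class: mapping from parcel identifier to its class label.
    Whole parcels go to one subset, so no parcel contributes pixels to both.
    Returns (train_pixel_indices, test_pixel_indices).
    """
    rng = random.Random(seed)
    parcels_per_class = {}
    for p in sorted(set(parcel_ids)):
        parcels_per_class.setdefault(parcel_class[p], []).append(p)

    test_parcels = set()
    for cls in sorted(parcels_per_class):
        parcels = parcels_per_class[cls]
        rng.shuffle(parcels)
        # At least one parcel per class in each subset if the class has two or more parcels
        n_test = min(len(parcels) - 1, max(1, round(test_fraction * len(parcels))))
        test_parcels.update(parcels[:n_test])

    train = [i for i, p in enumerate(parcel_ids) if p not in test_parcels]
    test = [i for i, p in enumerate(parcel_ids) if p in test_parcels]
    return train, test


# Hypothetical pixels belonging to six parcels of two classes
pixel_parcels = ['A', 'A', 'B', 'B', 'B', 'C', 'D', 'D', 'E', 'F']
parcel_class = {'A': 'wheat', 'B': 'wheat', 'C': 'wheat',
                'D': 'cotton', 'E': 'cotton', 'F': 'cotton'}
train, test = split_by_parcel(pixel_parcels, parcel_class)
print(sorted({pixel_parcels[i] for i in test}))
```

A separate validation subset could be carved out of the training parcels in the same way.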

Our model, which consists of both recurrent and convolutional layers, outperforms both the network composed of 1D convolutional layers and the RF classifier in every measure explored except overall accuracy. The difference in overall accuracy between the two neural networks is negligible. The percentage differences in macro average recall and macro average F1 Score between the R-CNN and each of the other models are considerably larger.
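For reference, the three measures compared here can be computed as in the sketch below. Macro averages weight every class equally. A model that ignores a small class can therefore keep a high overall accuracy while its macro recall and F1 Score drop. In the toy example, predicting only the majority class gives an overall accuracy of 0.8 but a macro recall of 0.5. The labels are hypothetical.

```python
def classification_scores(y_true, y_pred):
    """Overall accuracy, macro-average recall and macro-average F1 Score.

    Classes absent from both precision and recall denominators get 0.0
    for that measure instead of raising a division error.
    """
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    oa = sum(t == p for t, p in pairs) / len(pairs)
    recalls, f1s = [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        recalls.append(recall)
        f1s.append(f1)
    # Unweighted means over classes, regardless of class size
    return oa, sum(recalls) / len(classes), sum(f1s) / len(classes)


# Hypothetical imbalanced test set: the small 'cotton' class is never predicted
y_true = ['wheat'] * 8 + ['cotton'] * 2
y_pred = ['wheat'] * 10
print(classification_scores(y_true, y_pred))
```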

The defined model architectures were used to generate fine-grained land cover maps, with a class label assigned to every pixel of the study area image. The uncertainty measurements were also mapped, with ambiguously classified areas shown in red. These maps helped to identify certain problematic parcels visually and also to discriminate crop types in the remaining sub-regions. Importantly, the land cover maps can be further translated into the percentage distribution of the classes covering the area. This offers an alternative means of qualitative and quantitative evaluation that can be easily generalized to a global scale, and it plays a key role in crop mapping, decision-making, and policy design for sustainable land management.
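Converting a pixel-level class map into a percentage distribution is a simple counting step. The sketch below assumes that the map is a 2D grid of labels, with `None` marking pixels outside the study area. The class names and the toy map are hypothetical.

```python
from collections import Counter


def class_percentages(label_map):
    """Percentage of mapped pixels assigned to each class.

    label_map: 2D grid (list of rows) of predicted class labels.
    Pixels labelled None (e.g. outside the study area) are ignored.
    """
    counts = Counter(lbl for row in label_map for lbl in row if lbl is not None)
    total = sum(counts.values())
    return {lbl: 100.0 * n / total for lbl, n in counts.most_common()}


# Hypothetical 3 x 4 classified map
label_map = [
    ['wheat', 'wheat', 'cotton', None],
    ['wheat', 'cotton', 'cotton', 'water'],
    ['wheat', 'wheat', 'water', None],
]
print(class_percentages(label_map))  # → {'wheat': 50.0, 'cotton': 30.0, 'water': 20.0}
```

Each 10 m Sentinel-2 pixel covers 100 m² (0.01 ha), so multiplying the same pixel counts by 0.01 gives class areas in hectares.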

As mentioned above, we also looked for possible correlations between class size, per-class accuracy, and per-class normalized entropy, with satisfactory results. In the test instances, classes such as ‘water’, ‘oak’, and ‘shrubs’ yielded among the lowest normalized entropies together with the highest accuracies, which made them easy to separate from the other classes. The RF classifier produced more uncertain pixel-level predictions than the neural networks. Class size by itself did not appear to affect the confidence of the results, but the combination of a class’s type and its size might matter. Consequently, a class containing only a few pixels, whose objects vary substantially (because of different agronomic practices), is expected to have higher normalized entropy.

Overall, this work combined multitemporal and multispectral Sentinel-2 images with data from OPEKEPE to classify land cover in a particular region of Greece into 15 classes, most of which are crop types. This number of classes was chosen deliberately. It was not so large as to cause confusion between similar crop classes with close spectral signatures, and not so small as to lose a representative depiction of the actual land distribution. Mapping the study area was challenging because of its unique soil biodiversity, typical of the Mediterranean landscape, especially with data from medium-resolution satellites. The area did not lie entirely within the boundaries of a single city or regional unit, so its land combined diverse agricultural and management practices. Moreover, this study described a framework for land cover classification and cropland mapping that delivers operational products, such as a pixel-level classification map, which are innovative for Greece. The amount of labeled reference data was relatively small given the data demands of the developed algorithms. Despite this, the results showed that the land distribution was successfully captured. Future research should explore the discriminatory power of spectral indices as features additional to the original image bands, with the aim of increasing the classification accuracy of the DL algorithms.