A Real-Time Tracking Algorithm for Multi-Target UAV Based on Deep Learning

Abstract

1. Introduction

2. Model and Methods

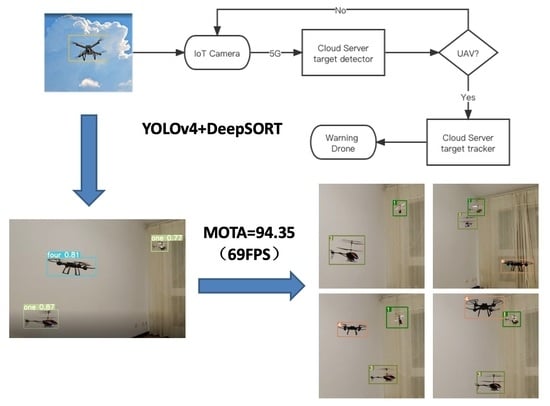

2.1. System Model

2.1.1. Target Detection Algorithms

- True positives (TP): positive samples correctly predicted as positive by the model;

- True negatives (TN): negative samples correctly predicted as negative by the model;

- False positives (FP): negative samples wrongly predicted as positive by the model;

- False negatives (FN): positive samples wrongly predicted as negative by the model.
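
The four counts above are usually combined into precision and recall. The following is a minimal sketch of that arithmetic, not the authors' evaluation code; the function name `detection_scores` and the example counts are hypothetical. TN does not enter either ratio.

```python
def detection_scores(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1 from confusion counts (0.0 when undefined)."""
    # Precision: share of predicted positives that are real positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of real positives that the model found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, chosen only for illustration.
scores = detection_scores(tp=90, fp=10, fn=30)
print(scores)
```

A detector that misses many targets shows up as low recall (large FN), while one that fires on background shows up as low precision (large FP).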

2.1.2. Target Tracking Algorithms

- ID switches (IDSW): the number of times the tracking ID assigned to the same target changes during a tracking task;

- Track fragmentation (FM): the number of times the same target goes from tracked to lost and back to tracked during a tracking task.
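
As an illustration of the two counts above, the sketch below tallies them for a single ground-truth target. It assumes a simplified, hypothetical input: one entry per frame holding the track ID assigned to the target, or `None` when the target is not tracked in that frame. Standard evaluation tools apply more detailed matching rules, so this is only a reading aid.

```python
from typing import Optional, Sequence, Tuple


def count_idsw_and_fm(assigned_ids: Sequence[Optional[int]]) -> Tuple[int, int]:
    """Count ID switches and fragmentations for one ground-truth target."""
    id_switches = 0
    fragmentations = 0
    last_id = None        # most recent track ID seen for this target
    prev_tracked = False  # was the target tracked in the previous frame?
    for track_id in assigned_ids:
        tracked = track_id is not None
        if tracked:
            # The ID differs from the last one assigned to this target.
            if last_id is not None and track_id != last_id:
                id_switches += 1
            # The target was tracked earlier, then lost, and is tracked again.
            if last_id is not None and not prev_tracked:
                fragmentations += 1
            last_id = track_id
        prev_tracked = tracked
    return id_switches, fragmentations


# Hypothetical per-frame assignments for one target.
frames = [1, 1, None, 1, 2, 2, None, None, 3]
print(count_idsw_and_fm(frames))
```

In this example the target is lost twice and recovered twice, and its ID changes from 1 to 2 and later from 2 to 3.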

2.2. Method

2.2.1. Target Detector

2.2.2. Target Tracker

- The trajectory matches a detection box. When an object moves slowly between consecutive frames, the detector finds it in both frames and tracking succeeds;

- A trajectory exists but no detection box can be matched to it, because the detector missed the target. The detector's performance needs to improve to lower the missed-detection rate;

- The trajectory does not match any detection box because the UAV target moves too fast and flies out of the field of view, so matching fails;

- Two detection boxes overlap because one target occludes another. Cascade matching in DeepSORT computes the minimum cosine distance between appearance feature maps, which allows the target to be re-identified.
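
The appearance test in the last case can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' tracker: each track keeps a small gallery of past appearance vectors, the cost between a track and a detection is the minimum cosine distance over that gallery, and pairs are matched greedily. DeepSORT itself solves the assignment with the Hungarian algorithm inside its matching cascade. The names `min_cosine_distance` and `greedy_appearance_match` and the threshold `max_distance=0.2` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def min_cosine_distance(gallery: List[Sequence[float]],
                        feature: Sequence[float]) -> float:
    """Smallest distance between a detection feature and a track's gallery."""
    return min(cosine_distance(stored, feature) for stored in gallery)


def greedy_appearance_match(galleries: Dict[int, List[Sequence[float]]],
                            detection_features: List[Sequence[float]],
                            max_distance: float = 0.2) -> Dict[int, int]:
    """Map track ID -> detection index, closest pairs first (greedy)."""
    candidates = []
    for track_id, gallery in galleries.items():
        for det_idx, feature in enumerate(detection_features):
            dist = min_cosine_distance(gallery, feature)
            if dist <= max_distance:  # gate out dissimilar appearances
                candidates.append((dist, track_id, det_idx))
    candidates.sort()
    matches: Dict[int, int] = {}
    used_detections = set()
    for _, track_id, det_idx in candidates:
        if track_id in matches or det_idx in used_detections:
            continue
        matches[track_id] = det_idx
        used_detections.add(det_idx)
    return matches


# Hypothetical 2-D appearance vectors for two tracks and two detections.
galleries = {1: [[1.0, 0.0]], 2: [[0.0, 1.0]]}
detections = [[0.0, 0.9], [0.95, 0.05]]
print(greedy_appearance_match(galleries, detections))
```

Because the cost depends on appearance rather than box position, a target that reappears after occlusion can be re-attached to its earlier trajectory even when the boxes overlapped in the frames in between.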

2.3. Dataset Creation

3. Experiments

3.1. Training and Analysis of Model

3.2. DPU Deployment of Target Detector

3.3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Network structure | Classification of network | |
|---|---|---|
| Backbone network | VGG, ResNet, ResNeXt, DenseNet | Lightweight networks: SqueezeNet, MobileNet, ShuffleNet |
| Neck network | Additional layers: SPP, ASPP, RFB, SAM | Feature fusion: FPN, BiFPN, NAS-FPN, ASFF |
| One-stage algorithm | RPN, SSD, YOLO, RetinaNet | CornerNet, CenterNet, CentripetalNet |
| Two-stage algorithm | Mask R-CNN, Fast R-CNN, Faster R-CNN | RepPoints |

| Black Four-Rotor | White Four-Rotor | Yellow Single-Rotor | Red Single-Rotor | Total |
|---|---|---|---|---|
| 873 | 828 | 650 | 849 | 3200 |

| Model | YOLOv4 | YOLOv4 Double Branch Detection | YOLOv4 Data Augmented | UAV Target Detector |
|---|---|---|---|---|
| Training time | 10.5 h | 10.4 h | 10.6 h | 10.5 h |
| mAP (IoU = 0.5) | 0.968 | 0.959 | 0.990 | 0.988 |
| Speed | 64 FPS | 69 FPS | 64 FPS | 70 FPS |
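
The IoU = 0.5 threshold quoted in the table above decides whether a predicted box counts as a true positive. A minimal sketch of that check follows, assuming boxes are given as `(x1, y1, x2, y2)` corner coordinates; the function names and the example boxes are hypothetical.

```python
from typing import Sequence


def iou(box_a: Sequence[float], box_b: Sequence[float]) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped at zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box: Sequence[float], gt_box: Sequence[float],
                     threshold: float = 0.5) -> bool:
    """A prediction counts as a hit when its IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold


# Hypothetical boxes: the first prediction overlaps enough, the second does not.
gt = (0, 0, 10, 10)
print(is_true_positive((2, 0, 12, 10), gt))
print(is_true_positive((5, 0, 15, 10), gt))
```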

| Model | FPS | FP | FN | FM | GT | IDSW | MOTA |
|---|---|---|---|---|---|---|---|
| Target Detector + DeepSORT | 69 | 0 | 90 | 13 | 1591 | 11 | 0.9365 |
| Target Detector + Target Tracker | 69 | 0 | 85 | 10 | 1591 | 6 | 0.9435 |
| YOLOv3 + Target Tracker | 54 | 0 | 87 | 28 | 1591 | 8 | 0.9215 |
| CenterNet + Target Tracker | 25 | 0 | 503 | 13 | 1591 | 31 | 0.66436 |
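
For orientation, MOTA is commonly defined in the CLEAR MOT metrics as one minus the ratio of all errors (FP, FN and IDSW) to the number of ground-truth objects (GT). The sketch below assumes that standard definition; the surviving text does not state which formula the authors applied, so this is a reading aid rather than their evaluation code. The function name `mota` is hypothetical, and the inputs are taken from the first row of the table above.

```python
def mota(fp: int, fn: int, idsw: int, gt: int) -> float:
    """MOTA under the standard CLEAR MOT definition: 1 - (FP + FN + IDSW) / GT."""
    if gt <= 0:
        raise ValueError("gt must be a positive number of ground-truth objects")
    return 1.0 - (fp + fn + idsw) / gt


# Counts from the "Target Detector + DeepSORT" row of the table above.
score = mota(fp=0, fn=90, idsw=11, gt=1591)
print(round(score, 4))  # 0.9365
```

FM is reported alongside the other counts but does not enter this formula.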

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hong, T.; Liang, H.; Yang, Q.; Fang, L.; Kadoch, M.; Cheriet, M. A Real-Time Tracking Algorithm for Multi-Target UAV Based on Deep Learning. Remote Sens. 2023, 15, 2. https://doi.org/10.3390/rs15010002
