A Novel Method for Digital Orthophoto Generation from Top View Constrained Dense Matching

Abstract

1. Introduction

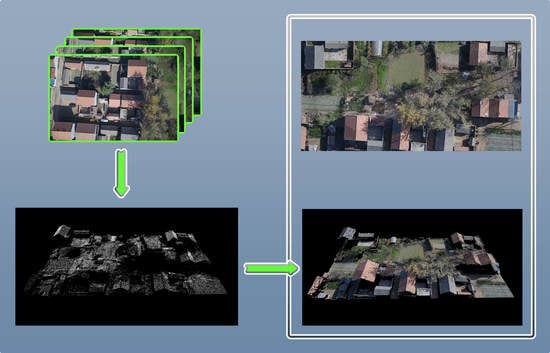

- This paper presents a top-view-constrained dense matching method that generates a digital orthophoto without a general 3D reconstruction.

- The dense matching operates on a raster with top-down propagation, which significantly improves the generation speed of the digital orthophoto.

- Tests on various scenes and comparisons with commercial software show that the method is reliable and efficient.
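To make the idea of working directly on a top-view raster concrete, the following is a minimal sketch of how a raster of ground cells can be coloured once each cell has an elevation. It is not the paper's matching or propagation procedure: it assumes a single pinhole camera described by a 3x4 projection matrix, nearest-neighbour sampling, and no occlusion handling. The names `project`, `render_orthophoto`, `origin`, and `gsd` are hypothetical and chosen only for illustration.

```python
import math

def project(P, X, Y, Z):
    """Project a 3D point with a 3x4 projection matrix P; return (u, v) or None."""
    x = P[0][0] * X + P[0][1] * Y + P[0][2] * Z + P[0][3]
    y = P[1][0] * X + P[1][1] * Y + P[1][2] * Z + P[1][3]
    w = P[2][0] * X + P[2][1] * Y + P[2][2] * Z + P[2][3]
    if w <= 0:  # point is behind the camera
        return None
    return x / w, y / w

def render_orthophoto(elevation, origin, gsd, P, image):
    """Colour each raster cell by projecting its 3D point into one image.

    elevation: 2D list of Z values, one per raster cell (assumed known here).
    origin:    (X, Y) of the centre of the top-left cell (hypothetical convention).
    gsd:       ground sample distance, i.e. the cell size in ground units.
    """
    rows, cols = len(elevation), len(elevation[0])
    height, width = len(image), len(image[0])
    ortho = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            X = origin[0] + c * gsd
            Y = origin[1] - r * gsd  # north-up raster: rows increase southward
            uv = project(P, X, Y, elevation[r][c])
            if uv is None:
                continue
            u = int(math.floor(uv[0] + 0.5))  # nearest-neighbour sampling
            v = int(math.floor(uv[1] + 0.5))
            if 0 <= u < width and 0 <= v < height:
                ortho[r][c] = image[v][u]
    return ortho

# Synthetic nadir camera 10 units above the origin, focal length 10 px,
# principal point (2, 2); on flat ground at Z = 0 it maps cell (r, c) to pixel (c, r).
P = [[10.0, 0.0, -2.0, 20.0],
     [0.0, -10.0, -2.0, 20.0],
     [0.0, 0.0, -1.0, 10.0]]
image = [[10 * v + u for u in range(5)] for v in range(5)]
flat = [[0.0] * 5 for _ in range(5)]
ortho_flat = render_orthophoto(flat, (-2.0, 2.0), 1.0, P, image)

# Raising one cell shifts where it is observed in the image (relief displacement).
raised = [row[:] for row in flat]
raised[2][3] = 5.0
ortho_raised = render_orthophoto(raised, (-2.0, 2.0), 1.0, P, image)
```

In this toy setup the flat raster reproduces the image exactly, while the raised cell picks up its colour from a different pixel, which is why a per-cell elevation estimate is needed before colours can be assigned.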

2. Related Work

3. Methods

3.1. Sparse 3D Point Generation

3.2. Rasterization

3.3. Square Patch Optimization

3.4. Occlusion Detection

Algorithm 1: Digital orthophoto generation process.

4. Experiment and Analysis

4.1. Tests on Various Scenes

4.2. Evaluation of the Occlusion Detection

4.3. Evaluation of the Accuracy and Efficiency

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, Z.; Jiang, G.; Li, Y. A Novel Method for Digital Orthophoto Generation from Top View Constrained Dense Matching. Remote Sens. 2023, 15, 177. https://doi.org/10.3390/rs15010177
